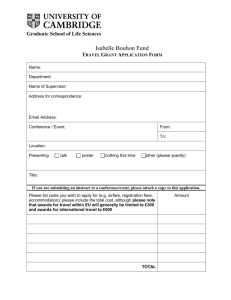

conference materials

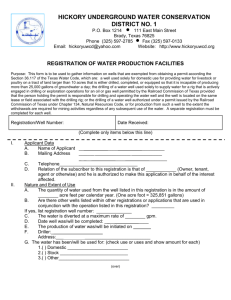

advertisement