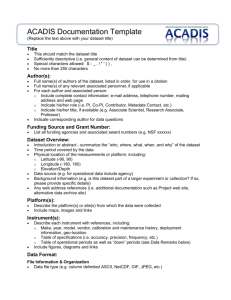

WD-ImageDM-20131115
