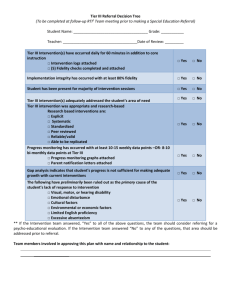

RELIABILITY & AVAILABILITY Within The Facility
presented by Geoff Cope, PE

- Reliability & Availability for Mission Critical Design
- Reliability & Availability Basics
- Reliability & Availability Modeling
- Practical Examples
- Conclusions
- Questions & Answers

Reliability & Availability for Mission Critical Design

A reliability/availability study is used for making comparisons:

- Industry standards
- Infrastructure architecture (making design decisions)
- Infrastructure improvements (before & after)
- Identifying the most important component (weakest link)
- Helping with financial decisions (reliability vs. cost)

Industry Standard

- The “Uptime Institute” developed the Tier Classifications
- Tier I & Tier II may have single points of failure (99% available)
- Tier III may have some single points of failure (99.9% available)
- Tier IV has no single points of failure (99.99% available)
- The white paper was recently modified to include electrical distribution concepts
- Tier III & Tier IV are provided with dual-corded mechanical systems and redundant pathways

Infrastructure Architecture

- System Architecture
- UPS Systems
- Stored Energy Systems
- Mechanical Systems
- Single Point of Failure (SPOF)

System Architecture

- Single System (N)
- Multi-unit systems
- Parallel System (N)
- Parallel Redundant (N+1, N+2, etc…)
- 2N or System + System
- 2(N+1): two parallel redundant systems
- Isolated redundant system
- Catcher System
- Distributed Redundant System

These configurations greatly affect the reliability of a system.

UPS Systems

- Double Conversion
- Rotary
- Line Interactive
- Off-Line
- Low Voltage
- Medium Voltage

UPS types do not generally affect the reliability analysis for Tier III & Tier IV systems. Reliability does not equal power quality.
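To put the tier availability figures quoted earlier into more tangible terms, the following minimal Python sketch converts an availability percentage into the downtime per year that it implies. The function name `annual_downtime_hours` and the 8,760-hour year are assumptions made for illustration; they are not part of the presentation, and the result is only an average, not a prediction of how outages are distributed.

```python
HOURS_PER_YEAR = 8760  # assumption: 365 days x 24 hours, leap years ignored


def annual_downtime_hours(availability_percent: float) -> float:
    """Average downtime per year implied by a steady-state availability."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return (1.0 - availability_percent / 100.0) * HOURS_PER_YEAR


# Tier availability figures as quoted in the presentation
tiers = {"Tier I / II": 99.0, "Tier III": 99.9, "Tier IV": 99.99}

for name, percent in tiers.items():
    hours = annual_downtime_hours(percent)
    print(f"{name}: {percent}% available -> about {hours:.2f} h of downtime per year")
```

Read this way, each additional "nine" cuts the implied average downtime by a factor of ten.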
Electrical System Stored Energy

- Chemical batteries (wet cell, dry cell)
- Flywheels
- Super capacitors
- Fuel cells

Mechanical System Stored Energy

- Ice storage
- Chilled water storage

Stored energy approaches may have a significant impact.

Mechanical Systems

- Reliability modeling is not typically used
- Data is not as readily available for mechanical components
- Useful models can still be made
- The reliability level of the mechanical system is significantly lower than that of the electrical system

A single point of failure (SPOF) is a point within a system whose failure will bring the system's process to a halt. Common SPOFs include:

- EPO systems
- Single module systems
- Parallel redundant systems
- Automatic transfer switches
- Shared infrastructure space

A facility's reliability is dependent on the number of single points of failure within a system. If your facility can pass the following tests, then you have a highly reliable facility:

- Shotgun
- Chainsaw
- Fire bomb
- Hand grenade

Eliminate single points of failure to improve reliability.

The only way to survive a catastrophic facility failure is to have:

- Redundant staff
- Data mirroring capabilities
- Geo-redundancy (a redundant facility)

Reliability & Availability Basics

Reliability Vs Availability

757 example: A Boeing 757 is a very reliable aircraft. The jet may fly halfway around the world two or three times and is then placed into a maintenance shop for one to two weeks. The aircraft is very reliable, but because of the amount of required maintenance it has a low availability.

The bathtub curve shows the failure rate over the various regions of a unit's life.
It is a function describing the variation of hazard rate with time (the life of a unit).

- Reliability: the probability that an item will perform its function under given conditions for a stated time interval. It is not a guarantee!
- Availability: the probability that an item will perform its required function under given conditions at a stated instant in time.

Steady-State Availability:

$$A = \frac{\text{MTBF}}{\text{MTBF} + \text{MTTR}}$$

Or:

$$A = \frac{\mu}{\lambda + \mu}$$

MTTR & MTBF

- MTTR = Mean Time To Repair (1/μ). It is the mean time to restore the system to an operating condition.
- MTBF = Mean Time Between Failures (1/λ).
- λ = failure rate: the probability per unit time that an item will fail in the interval (t0, t0+Δt), given that the item is operable at time t0.

MTBF

- MTBF is also the statistical point at which 63% of a large homogeneous population of items will have failed (with a constant failure rate, 1 − e^(−1) ≈ 63%).
- Example: a homogeneous population of UPSs has an MTBF of 250,000 h. This means that in about 28 years, 63% of the UPSs will have failed to perform their function.
- MTBF has nothing to do with lifetime. MTBF significantly exceeds the lifetime.
- Example: the current world average life expectancy is 66 years. The U.S. has a failure (death) rate of 9.6 people per 1,000 persons per year, and therefore an MTBF of 104 years!

Reliability Vs Availability

- Reliability is time dependent. The longer the time, the lower the reliability, regardless of the system design.
- The availability of a system is always calculated the same way, regardless of past or future events.
- An availability of 99.9% could mean that a facility was down for 8.76 hours per year or suffered 525 one-minute outages per year.

Reliability: Not a Commonly Used Metric for Data Center Discussions

- People are used to hearing about 5-9s of availability.
- Reliability numbers are much lower (2-9s).
- Reliability numbers depend directly on the period of time considered.
- Reliability numbers will indicate how often a facility's infrastructure requires maintenance.

Availability: Common Misuses of the Availability Metric

- Extracting the mean downtime (MDT) from inherent availability
- Equating availability to hours of downtime per year

If a facility has 99.999% availability, this implies that the facility's downtime may be 5.26 minutes per year, but a better assumption would be that it may suffer one 63-minute outage over the 12-year life expectancy of the facility infrastructure.

System Reliability

A system is a collection of components arranged according to a certain structure.

Subsystem: a unit or device that is part of a larger system.

Reliability modeling:

- Analyzing components and their relationships.
- Relating component states (working or failed) to the system state.

System reliability analysis tools:

- Reliability Block Diagram (RBD)
- Fault Tree Analysis (FTA)

Other Factors to Consider

- Maintainability: the probability that an item can be repaired in the interval (0, t). If all single points of failure are eliminated, in most cases the system will be maintainable.
- Scalability: a system should be designed so that its capacity can be increased and the infrastructure can grow with the load.
- Flexibility: design your facility so that the electrical and mechanical systems can be easily reconfigured to adapt to changing technologies.
- Simplicity: keep it simple. The more complex a system is, the more unreliable it will become.

Inherent Availability is used in lieu of Operational Availability

- Considers only down time for repair of failures
- Excludes unscheduled and scheduled maintenance
- Excludes logistics time: part & technician availability
- Excludes delays related to schedule
- Is useful as a metric for analyzing the system design, as uncontrolled parameters need to be kept the same
- By eliminating all of the variations, it is possible to calculate the inherent availability of a system regardless of all logistic matters.

Reliability & Availability Modeling

Assumptions: Binary Reliability Analysis

- The system has n components.
- A component may only be in two possible states, working or failed. Xi is used to indicate the state of component i.
- The system may only be in two possible states, working or failed. Φ(X) is used to denote the state of the system (structure function).
- The state of the system is completely determined by the states of the components.

Typical System Reliability Models

- Series systems: the system fails if one component fails.
- Parallel systems: the system works if there is one component working.
- Paralleled systems always share a single point of failure.

Typical System Reliability Models

- Series-parallel systems
- Parallel-series systems

Typical System Reliability Models

- K-out-of-N systems (NODES)
- Example: UPS set

Modeling Examples

Existing infrastructure

- 2N UPS
- 2N Generator Plant
- 2N UPS Distribution
- N+2 Mechanical System
- Mechanical equipment single corded
- System Availability = 99.99%

Findings

- Identified single points of failure
- Identified most important component
- Made recommendations

Proposed infrastructure

- Eliminate single points of failure
- Eliminate most important component “ATS 4”
- Dual cord the mechanical equipment
- System Availability = 99.995%

Model Redundant Utilities

- Small improvement on availability
- System Availability = 99.997%
- Expensive to implement
- Recommended not to implement

Program Requirements

- 2N Utility
- 2(N+1) UPS
- N+2 Generator Plant
- 2N UPS Distribution
- N+2 Mechanical System
- Dual Corded Mechanical Equipment
- Modeled System Availability = 99.9995%
- Cost: $20,000,000

Distributed Redundant Option

- 2N Utility
- N+1 UPS
- N+2 Generator Plant
- 2N UPS Distribution
- N+2 Mechanical System
- Dual Corded Mechanical Equipment
- Modeled System Availability = 99.9992%
- Cost: $16,000,000

Medium Voltage Design

- 2N Utility
- 2N UPS
- N+2 Generator Plant
- 2N UPS Distribution
- N+2 Mechanical System
- Dual Corded Mechanical Equipment
- Modeled System Availability = 99.9994%
- Cost: $18,000,000

Mechanical System

- 2N Mechanical system
- Modeled System Availability = 99.97%

Mech. & Elec. System

- 2N Mechanical system with 2N Electrical system
- Modeled System Availability = 99.92%

RELIABILITY VS COST

Conclusion

Questions & Answers
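As a supplement to the series, parallel, and K-out-of-N models listed under Typical System Reliability Models, the following Python sketch shows one common way to combine component availabilities in a reliability block diagram. It is not the presenter's modeling tool. It assumes that components fail independently and that the units in a K-out-of-N group are identical. All function names, the 8-hour MTTR, and the output bus availability of 0.9999 are hypothetical values chosen for illustration; only the 250,000 h MTBF is taken from the UPS example in the slides.

```python
from math import comb, prod


def steady_state_availability(mtbf_hours: float, mttr_hours: float) -> float:
    """A = MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def series(availabilities) -> float:
    """Series system: every block must work, so availabilities multiply."""
    return prod(availabilities)


def parallel(availabilities) -> float:
    """Parallel system: it fails only if every block fails (independence assumed)."""
    return 1.0 - prod(1.0 - a for a in availabilities)


def k_out_of_n(k: int, n: int, a: float) -> float:
    """K-out-of-N system of identical, independent units, each with availability a."""
    return sum(comb(n, i) * a**i * (1.0 - a) ** (n - i) for i in range(k, n + 1))


# UPS module: MTBF from the slide example, MTTR is a hypothetical 8 hours
ups = steady_state_availability(mtbf_hours=250_000, mttr_hours=8)

# Hypothetical shared output bus: the single point of failure of a paralleled system
bus = 0.9999

single_module = series([ups, bus])                   # N
n_plus_1 = series([k_out_of_n(2, 3, ups), bus])      # N+1 with N = 2 modules required
two_n = parallel([single_module, single_module])     # 2N: two independent systems

print(f"UPS module availability: {ups:.6f}")
print(f"N (single module + bus): {single_module:.6f}")
print(f"N+1 (2 of 3 + shared bus): {n_plus_1:.6f}")
print(f"2N (system + system): {two_n:.8f}")
```

In this sketch the N+1 arrangement cannot exceed the availability of its shared bus, which is the point made in the slides that paralleled systems always share a single point of failure; only the 2N arrangement duplicates the bus as well.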