CyberneticsForEngineers_RevisionF


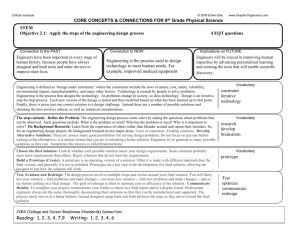

Cybernetics for Systems Engineers – DRAFT F

Cybernetics for Systems Engineers

(DRAFT)

1 Contents

1 Introduction ..... 12

2 Cybernetics: Basics ..... 15

2.1 What is Cybernetics? ..... 15

2.2 Information ..... 15

2.3 Transformation ..... 17

2.4 Algebraic Description ..... 19

2.5 The Determinate Machine with Input ..... 21

2.6 Coupling Systems ..... 22

2.7 Feedback ..... 23

2.8 Immediate Effect and Reducibility ..... 24

2.9 Stability ..... 24

2.10 Equilibrium in Part and Whole ..... 25

2.11 Isomorphism and Homomorphism ..... 25

3 Cybernetics: Variety ..... 27

3.1 Introduction ..... 27

3.2 Constraint ..... 27

3.3 Incessant Transmission ..... 31

3.4 Entropy ..... 32

4 Cybernetics: Regulation ..... 35

4.1 Requisite Variety ..... 35

4.2 Error Controlled Regulation ..... 37

5 Practical Finite State Machine Control ..... 39

5.1 Introduction ..... 39

5.2 The Relay and Logic Functions ..... 39

5.2.1 Example: Capacitor Discharge Unit ..... 41

5.2.2 Reversing a DC Motor ..... 44

5.2.3 Alarm Circuit ..... 45

5.3 Conclusions ..... 46

6 Background Calculus ..... 47

6.1 Basic Ideas ..... 47

6.1.1 Introduction ..... 47

6.1.2 Areas Bounded by Straight Lines ..... 48

6.1.3 Coordinate Geometry ..... 51

6.2 Areas ..... 52

6.2.1 Curved Boundary ..... 54

6.2.2 Mathematical Induction ..... 56

6.2.3 Higher Order Polynomials ..... 57

6.2.4 The Circle ..... 59

6.2.5 Concluding Comment ..... 61

6.3 Some Applications ..... 62

6.3.1 Introduction ..... 62

6.3.2 Uniform Acceleration ..... 62

6.3.3 Volumes of revolution ..... 65

6.4 Centres of Gravity ..... 67

6.5 Second Moment of Area ..... 69

6.5.1 Moment of inertia of a Disc ..... 70

6.6 Engineer’s Bending Theory ..... 71

6.7 Ship Stability ..... 74

6.8 Fractional Powers ..... 76

6.9 The Usual Starting Point ..... 79

6.9.1 Introduction ..... 79

6.10 Applications ..... 81

6.10.1 Newton’s Method ..... 81

6.10.2 Maxima and Minima ..... 83

6.10.3 Body under Gravity ..... 84

6.10.4 Maximum Area ..... 85

6.10.5 Concluding Comments ..... 85

6.11 Higher Order Derivatives ..... 86

6.11.1 Introduction ..... 86

6.11.2 Radius of Curvature Approximation ..... 86

6.12 More Exotic Functions ..... 88

6.12.2 The Integral of the Reciprocal Function ..... 89

6.13 Searching for a Solution ..... 90

6.13.1 Finding the Magic Number ..... 92

6.13.2 Cooling of a Bowl of Soup ..... 93

6.13.3 Capacitor Discharge ..... 94

6.13.4 Concluding Comments ..... 95

6.14 Onwards and Upwards ..... 95

6.15 Trigonometrical Functions ..... 96

6.15.1 Introduction ..... 96

6.15.2 Angles ..... 96

6.15.3 The Full Set of Trigonometric Functions ..... 98

6.15.4 Relationships Between Trigonometric Functions ..... 100

6.15.5 Special Cases ..... 101

6.15.6 Functions of Sums and Differences of Angles ..... 102

6.15.7 Derivatives of Trigonometric Functions ..... 103

6.16 Binomial Theorem ..... 105

6.17 A Few Tricks ..... 106

6.18 Differential Equations ..... 107

6.18.1 Introduction ..... 107

6.18.2 First Order Equation ..... 107

6.18.3 Integration by Parts ..... 112

6.18.4 More Exotic Forms ..... 115

6.19 The Lanchester Equation of Combat ..... 117

6.20 Impulse Response ..... 120

6.20.1 Second, and Higher Order ..... 122

6.20.2 Resonant Circuit ..... 125

6.20.3 Pin Jointed Strut ..... 125

6.20.4 Mass – Spring – Damper ..... 127

6.20.5 Higher Order Systems ..... 130

6.21 Conclusions ..... 130

7 More Advanced Calculus ..... 132

7.1 Introduction ..... 132

7.2 Arc Length and Surface areas of Revolution ..... 132

7.2.1 Why Bother? ..... 134

7.3 The Catenary ..... 134

7.3.1 Comment ..... 138

7.4 About Suspension Bridges ..... 139

7.5 Arc Length of an Ellipse ..... 141

7.5.1 Towards a better solution ..... 144

7.5.2 About Errors ..... 147

7.6 Convergence ..... 147

7.7 Concluding Comment ..... 149

8 The Calculus of Variations ..... 151

8.1.1 Application to Maximum Area ..... 152

8.1.2 Comment ..... 155

9 Cybernetics and Automatic Control ..... 157

9.1 Introduction ..... 157

9.2 Implications for System Specification ..... 157

9.3 On Feedback ..... 158

9.4 About Intelligence ..... 159

9.5 Automatic Control ..... 161

10 Stability ..... 162

10.1 Introduction ..... 162

10.2 Additional Background ..... 162

10.3 Matrix Algebra ..... 164

10.3.1 Definition and Basic Operations ..... 164

10.3.2 Additional Operations ..... 165

10.3.3 The Identity Matrix and the Matrix Inverse ..... 166

10.3.4 Checking for Linear Independence - Determinants ..... 167

10.4 State Space Representation ..... 169

10.4.1 ‘Sign’ of a Matrix ..... 170

10.4.2 Eigenvalues and Eigenvectors ..... 171

10.4.3 Calculation of Eigenvalues ..... 173

10.5 Stability – a Different Perspective ..... 174

11 Examples ..... 177

11.1 Introduction ..... 177

11.2 Vibrating String ..... 177

11.3 Directional Stability of a Road Vehicle ..... 179

11.4 Hunting of a Railway Wheelset ..... 182

12 Linearisation ..... 187

12.1 Introduction ..... 187

12.2 Stability of a Circular Orbit ..... 187

12.3 Rigid Body Motion ..... 188

12.3.1 Nomenclature ..... 189

12.3.2 Translation Equation ..... 190

12.3.3 Rotation Equations ..... 192

12.4 Perturbation forms ..... 194

12.4.1 Cartesian Rocket – Steady Flight ..... 194

12.4.2 Cartesian Rocket – High Angle of Attack ..... 195

12.4.3 Spinning Projectile ..... 196

12.4.4 Spacecraft ..... 197

12.4.5 Aircraft ..... 198

12.4.6 Wider Application ..... 198

12.5 Partial Derivatives ..... 199

12.5.1 The Symmetrical Rocket Re-visited ..... 200

12.5.2 Stability of a Spinning Shell ..... 201

12.6 A Few More Tricks ..... 202

12.6.1 Spinning Shell Conditions for Stability ..... 205

12.6.2 The Aeroplane ..... 206

12.6.3 The Brennan Monorail ..... 209

12.6.4 Equation of Motion ..... 210

12.6.5 Approximate Factorisation ..... 211

12.6.6 Brennan’s Solution ..... 211

12.6.7 Cornering ..... 212

12.6.8 Side Loads ..... 213

12.7 Concluding Comments ..... 213

13 Modifying the System Behaviour ..... 214

13.1 Introduction ..... 214

13.2 The Laplace Transform ..... 215

13.3 Transforms of Common Functions ..... 216

13.3.1 Derivatives and Integrals ..... 216

13.3.2 Exponential Forms ..... 217

13.3.3 Polynomials ..... 218

13.4 Impulse Function and the Z Transform ..... 218

13.5 Laplace Transform Solution of Non-Homogenous Equations ..... 219

14 Impulse Response Methods – Evans’ Root Locus ..... 222

14.1 Introduction ..... 222

14.2 Example: Missile Autopilot ..... 223

14.3 General Transfer Function ..... 226

14.4 Rules for Constructing the Root Locus ..... 227

14.4.1 Real Axis ..... 227

14.4.2 Asymptotes ..... 228

14.4.3 Exit and Arrival Directions ..... 229

14.4.4 Break Away Points ..... 229

14.4.5 Value of Gain ..... 230

14.4.6 Comment on Rules ..... 230

14.5 Autopilot Revisited ..... 231

14.5.1 Numerical Example ..... 231

14.5.2 Initial Selection of Servo Pole ..... 233

14.5.3 Adding Compensation ..... 235

14.5.4 Concluding Comments ..... 237

14.6 Gyro Monorail ..... 238

14.6.1 Representative Parameters ..... 238

14.6.2 Root Locus ..... 239

14.7 Comments on Root Locus Examples ..... 242

14.8 The Line of Sight Tracker ..... 242

14.9 Tracking and the Final Value Theorem ..... 244

14.10 The Butterworth Pole Pattern ..... 245

14.11 Status of Root Locus Methods ..... 246

15 Representation of Compensators ..... 247

15.1 Introduction ..... 247

15.2 Modelling of Continuous Systems ..... 247

15.3 Sampled Data Systems ..... 250

15.4 Zero Order Hold ..... 251

15.5 Sampled Data Stability ..... 251

15.6 Illustrative Example ..... 252

15.7 Sampled Data Design ..... 256

15.8 Concluding Comments ..... 257

16 Frequency Domain Description ..... 258

16.1 Introduction ..... 258

16.2 Sinusoidal Response ..... 258

16.3 Stability ..... 260

16.4 Stability Margins ..... 261

16.5 Gain and Phase Margin ..... 263

16.6 Closed Loop Response from the Harmonic Locus ..... 263

16.7 The Monorail Example ..... 265

16.8 Sampled Data Systems ..... 268

16.9 Common z transforms ..... 269

16.9.1 Sine and Cosine ..... 269

16.10 Frequency Warping ..... 271

16.11 Decibels ..... 271

16.12 Signal Shaping in the Frequency Domain – Bode Plots ..... 272

16.12.1 Second Order Transfer Functions and Resonance ..... 274

16.13 Gain and Phase Margins from Bode Plots ..... 276

16.14 Phase Unwrapping ..... 277

16.15 The Nichols Chart ..... 277

16.16 A Word of Warning ..... 278

16.17 Concluding Comment ..... 278

17 Time Varying Systems ..... 280

17.1 Introduction ..... 280

17.2 Missile Homing ..... 281

17.3 Significance of Sight Line Spin ..... 283

17.4 Pursuit Solution ..... 283

17.5 Seeking a More Satisfactory Navigation Law ..... 285

17.5.1 Lateral Acceleration History ..... 286

17.6 Blind Range ..... 287

17.7 Sensitivity ..... 289

17.7.1 Block Diagram Adjoint ..... 291

17.8 Example: Homing Loop with First Order Lag ..... 292

17.9 Concluding Comment ..... 301

18 Rapid Non-Linearities ..... 302

18.1 Introduction ..... 302

18.2

Describing the Distorted Signal ........................................................................................... 303

18.3

Fourier Series Curve Fit ....................................................................................................... 303

18.4

The Describing Function...................................................................................................... 305

18.5

Application of Describing Function to the Harmonic Locus ................................................ 307

18.6

Bang-Bang Control .............................................................................................................. 307

18.7

Schilovski Monorail ............................................................................................................. 309

18.8

More General Servo Considerations ................................................................................... 310

18.9

The Phase Plane .................................................................................................................. 312

18.9.1

Dominant Modes ........................................................................................................ 312

18.9.2

Representation of a Second Order Plant .................................................................... 313

18.9.3

Unforced Plant ............................................................................................................ 314

18.10 Introducing Control ............................................................................................................. 316

18.11 Hierarchical System Considerations.................................................................................... 319

18.12 Scanning System ................................................................................................................. 320

18.12.1

Outer Loop .............................................................................................................. 323

18.13 Concluding Comments ........................................................................................................ 325

19

The Representation of Noise .................................................................................................. 326

19.1

Introduction ........................................................................................................................ 326

19.2

Correlation .......................................................................................................................... 326

19.2.1

The Same, Or Different? ............................................................................................. 326

19.2.2

Pattern Matching ........................................................................................................ 328

19.3

Auto Correlation ................................................................................................................. 331

19.4

Characterising Random Processes ...................................................................................... 333

19.4.1

White Noise................................................................................................................. 334

19.4.2

System Response to White Noise ............................................................................... 335

19.4.3

Implications for Time-Varying Systems....................................................................... 337

19.5

Frequency Domain .............................................................................................................. 338

19.5.1

The Fourier Transform ................................................................................................ 338

19.5.2

Application to Noise .................................................................................................... 339

19.6

Application to a Tracking System ........................................................................................ 341

20

Line of Sight Missile Guidance ................................................................................................ 343

20.1

Introduction ........................................................................................................................ 343

20.2

Line of Sight Variants .......................................................................................................... 343

20.3

Analysis ............................................................................................................................... 344

20.4

Comments ........................................................................................................................... 346

21

Multiple Outputs ..................................................................................................................... 347

21.1

Introduction ........................................................................................................................ 347

21.2

Nested Loops....................................................................................................................... 347

21.3

Pole Placement ................................................................................................................... 350

21.4

Algebraic Controllability...................................................................................................... 351

21.4.1

Monorail ...................................................................................................................... 353

21.4.2

Road Vehicle................................................................................................................ 353

21.5

Comment on Pole-placement ............................................................................................. 355

21.6

The Luenberger Observer ................................................................................................... 357

21.7

The Separation Theorem .................................................................................................... 358

21.8

Reduced Order Observers ................................................................................................... 359

21.9

Concluding Comments ........................................................................................................ 360

22

Respecting System Limits – Optimal Control .......................................................................... 362

22.1

Introduction ........................................................................................................................ 362

22.2

Optimal Control................................................................................................................... 362

22.3

Optimisation Background ................................................................................................... 364

22.3.1

Principle of Optimality ................................................................................................ 364

22.3.2

Application to the Control Problem ............................................................................ 366

22.4

Gyro Monorail ..................................................................................................................... 368

22.5

The Optimal Observer ......................................................................................................... 370

22.5.1

Covariance Equation ................................................................................................... 371

22.5.2

The Observer ............................................................................................................... 373

22.5.3

Tracker Example .......................................................................................................... 374

22.5.4

Alpha beta Filter .......................................................................................................... 375

22.6

Comment ............................................................................................................................ 375

23

Multiple Inputs ........................................................................................................................ 377

23.1

Introduction ........................................................................................................................ 377

23.2

Eigenstructure Assignment ................................................................................................. 378

23.3

Using Full State Feedback ................................................................................................... 378

23.3.1

Basic Method .............................................................................................................. 378

23.3.2

Fitting the Eigenvectors .............................................................................................. 379

23.3.3

Calculation of Gains .................................................................................................... 381

23.3.4

Comment on Stability ................................................................................................. 382

23.4

Loop Methods ..................................................................................................................... 383

23.4.1

General Considerations............................................................................................... 383

23.4.2

Nyquist Criterion ......................................................................................................... 384

23.5

Sequential Loop Closing ...................................................................................................... 385

23.6

Diagonalisation Methods .................................................................................................... 386

23.6.1

‘Obvious’ Approach ..................................................................................................... 386

23.6.2

Commutative Compensators ...................................................................................... 386

23.7

Comment ............................................................................................................................ 386

24

Closed Loop Methods ............................................................................................................. 388

24.1

Introduction ........................................................................................................................ 388

24.2

The H-infinity Norm ............................................................................................................ 389

24.3

MIMO Signals ...................................................................................................................... 390

24.4

Closed Loop Characterisation ............................................................................................. 392

24.4.1

Tracker Example .......................................................................................................... 393

24.4.2

Comment..................................................................................................................... 393

24.4.3

Input Output Relationships ......................................................................................... 394

24.4.4

Weighted Sensitivity ................................................................................................... 395

24.5

Stacked Requirements ........................................................................................................ 396

24.5.1

Robustness .................................................................................................................. 397

24.5.2

Phase in MIMO Systems ............................................................................................. 397

24.6

Concluding Comment.......................................................................................................... 398

25

Catastrophe and Chaos ........................................................................................................... 400

25.1

Introduction ........................................................................................................................ 400

25.2

Catastrophe......................................................................................................................... 400

25.3

The ‘Fold’............................................................................................................................. 401

25.4

The ‘Cusp’ ............................................................................................................................ 402

25.5

Comment ............................................................................................................................ 403

25.6

Chaos ................................................................................................................................... 404

25.6.1

Adding Some Dynamics............................................................................................... 404

25.6.2

Attractors .................................................................................................................... 406

25.7

The Lorenz Equation ........................................................................................................... 407

25.7.1

Explicit Solution ........................................................................................................... 409

25.8

The Logistics Equation......................................................................................................... 410

25.9

Bluffer’s Guide .................................................................................................................... 412

25.9.1

Basin of Attraction ...................................................................................................... 412

25.9.2

Bifurcation................................................................................................................... 412

25.9.3

Cobweb Plot ................................................................................................................ 412

25.9.4

Feigenbaum Number .................................................................................................. 412

25.9.5

Liapunov Exponent...................................................................................................... 412

25.9.6

Poincaré Section.......................................................................................................... 413

25.9.7

Symmetry Breaking ..................................................................................................... 413

25.10 Concluding Comment.......................................................................................................... 413

26

Artificial Intelligence and Cybernetics .................................................................................... 414

26.1

Introduction ........................................................................................................................ 414

26.2

Intelligent Knowledge Based Systems ................................................................................ 414

26.3

Fuzzy Logic .......................................................................................................................... 415

26.4

Neural Nets ......................................................................................................................... 417

26.5

Concluding Comment.......................................................................................................... 418

27

Conclusions ............................................................................................................................. 419

27.1

Introduction ........................................................................................................................ 419

27.2

Hierarchy ............................................................................................................................. 419

27.3

Intelligence .......................................................................................................................... 420

27.4

Seeking Null......................................................................................................................... 420

27.5

And Finally... ........................................................................................................................ 421

1 Introduction

It is only fair to the reader to start with sufficient information to decide whether to proceed.

The word ‘system’ as used today is most frequently meant in the sense implied by ‘systems analyst’,

a specialist concerned with mapping technically naive users’ requirements into computer code.

Before the pre-eminence of software engineering, it had another meaning, as defined by the likes of

Norbert Wiener, William Ross Ashby, Harry Nyquist, and Claude Shannon to describe a subject which

was intended for the highly numerate, in order to gain a scientific understanding of system

behaviour.

Without passing further comment on the works which expound the modern innumerate approach, I

will merely comment that this is not such a work.

In order to discriminate between the two usages, I shall refer to the former as ‘systems engineering’

and the latter I shall call ‘cybernetics’ from the Greek word for ‘helmsman’.

To supplement the management/organizational characterization of the system, we require a means

of characterizing components of system elements which do not exist, but are postulated as potential

means of achieving improved system performance. This requires the methods of cybernetics.

The methods which work, and have found practical application over the years, belong to a body of

knowledge called ‘control theory’. In recent years, for various reasons, this has become perceived as

an arcane specialisation, which is unfortunate because it constitutes a universal means of system

description and analysis, which should form part of every engineer’s toolkit, regardless of

specialisation.

The book is unusual in appealing to the broad knowledge and experience of the practitioner, rather

than trying to present the subject as self-contained. Like all systems approaches, it is useless within

itself; its value becomes apparent in its application to real problems. With that in mind, the text

presents a number of practical problems to illustrate the methods used.

The approach is eclectic, taking examples from all branches of engineering, to illustrate the universal

application of cybernetics to all self-correcting systems, regardless of their actual physical

implementation.

As far as possible, the concepts are explained in plain English, so hopefully a reasonable

understanding of the subject matter may be gained from the text alone.

The early chapters present the fundamental ideas more as a philosophical discussion than as

pragmatically useful methods. However, the reader is advised to get to grips with the Principle of

Requisite Entropy, on which all valid systems thinking, mathematical or not, is based, yet judging by

the decrees of some so-called ‘experts’, is not as widely understood as it should be.

This is followed by a brief presentation on the practical implementation of finite state controllers,

leading to the conclusion that a problem formulation which enables hardware behaviour to be taken

into account is to be preferred to general software development methods. This theme of the

constraint imposed by the real world forms the basis of the remainder of the book, but requires

significantly greater mathematical knowledge.

We then proceed with the background mathematics needed to understand the remainder of the

book. This is no more than would be covered in the first year of a typical engineering course. It is

included because many line engineers may not have had call to use their mathematics in support of

their largely administrative and managerial duties, and a reminder is in order. The mathematically

adept may safely skip these chapters. The presentation differs from the more usual approach in that

we start with integral calculus, which is immediately applicable to engineering problems, and

differentiation is introduced as its inverse. This is expected to appeal to the natural engineer, who

has little inclination to discuss the number of angels which can dance on a pinhead.

The main body of the book then considers the natural behaviour of dynamic systems, covering the

methods used to uncover the causes of instability, and the identification of critical parameters.

Many systems issues are resolved cheaply and simply by changes to the plant parameters, without

introducing control at all. We emphasise the need to understand the dynamic behaviour of the

plant before considering introducing artificial means of improving its behaviour. All the clues we

need to design our closed loop system arise from this understanding of the basic plant dynamics.

After the basic stability has been considered, we cover the body of knowledge which has become

known as ‘classical control’. These methods relate the open loop plant behaviour to that of the

closed loop, so that the effect of compensators introduced within the loop may be predicted.

Central to this presentation are the Laplace and Z transforms, which enable the behaviour of

complex networks of dynamic elements to be predicted readily.

These methods are concerned dominantly with stability, although tracking behaviour is also

considered. It becomes evident that stability alone is insufficient; we need to introduce constraints

of noise and saturation to decide the characteristics of the compensation we may employ. To this

end we investigate the effects of non-linearities on stability using describing functions and phase-plane methods.

Before proceeding to characterise noise, we take a detour into the world of terminal controllers,

which naturally introduces the method of adjoint simulation. The ideas introduced here are needed

to understand the effects of noise impulses applied to a linear system using a time domain

description. The concept of white noise is introduced, and from it the means of predicting the

accuracy of tracking from the noise sources within the loop.

All the ‘classical’ approaches are intended for single input, single output systems. With the reduced

cost of sensors in recent years, most closed loop systems have multiple outputs, and we revert

to the state-space descriptions used to describe our basic plant dynamics. This introduces pole

placement and the idea of an observer, as a special type of compensator which models the plant

dynamics. The separation theorem is invoked to show that the dynamics of the controller do not

affect those of the observer, so the two can be designed in isolation. These methods work directly

with the closed loop plant.

The state space methods are extended to consider controllers whose states are limited in range

(typically by saturation), and observers whose accuracy is limited by noise (the fixed gain Kalman

filter). The fundamental limitation of these approaches, that the separation theorem is unlikely to

apply in practice, is identified.

Moving on from multi-output systems, we proceed to consider systems which are multi-input as well

as multi-output. In view of the cost of servos, such systems are unlikely to be common. Usually we

deal with multiple de-coupled single-input/single-output systems. However, these are recognised as

potentially difficult to design, and considerable intellectual effort has been invested in them over the

past thirty years. Most of the recent work lies beyond the scope of the current book, which is not

intended for post graduate studies into control. Some techniques which the author has found

practically useful are presented. However, the most important question to be resolved before

embarking on a MIMO controller is: why is the plant so horribly cross-coupled in the first place?

The dominant method, that of H∞ ‘robust’ control, or the ideas behind it, are presented briefly, but

the absence of examples which were not contrived for the sole purpose of illustrating the method is

rather a handicap when presenting it to an audience of pragmatic engineers.

The remainder of the book reverts to the philosophical discussion of the opening chapters,

except that the exposition is more speculative. These sections may be skipped unless the ideas

presented are of particular interest to the reader.

Well, that completes the introduction; it should be evident whether or not the book is for you. If

not, I thank you for your time. If so, do I hear the satisfying ring of a cash register?

2 Cybernetics: Basics

2.1 What is Cybernetics?

Cybernetics is a theory of machines. It deals not with the gears or circuit boards of physical

machines, but with how machines behave independently of their physical implementation. In essence,

it is the study of machines in the abstract.

As it deals with behaviour, rather than implementation, the ‘what’ of the system function, rather

than the ‘how’, it would be reasonable to use it as the basis for system specification, particularly as

the resulting definitions of behaviour are concise, compact and unambiguous.

The approach deals with ‘black boxes’; that is, we deal with how entities behave, or are required to

behave, not how they are implemented in any specific instance. We find that even the most

complex behaviours can be characterized with extremely simple system models. Indeed, it is shown

that much of the complexity of ‘complex’ systems serves to simplify behaviour. This is in contrast to

the ‘white box’ approach characteristic of more mainstream systems engineering, where internal

structure and component functions must be known, in order for the system to be specified.

It follows that the methods of cybernetics, in containing no pre-conceptions as to how behaviours

are achieved, furnish the techniques most appropriate for system specification. Yet, paradoxically,

their strong emphasis on mathematical formulation, appears to have relegated them to the

‘implementation’ stages of system development, in the minds of many who call themselves ‘systems

engineers’.

2.2 Information

In the author’s opinion, the best introduction to cybernetics was written in the mid-Twentieth

Century by William Ross Ashby, and is entitled, not surprisingly, ‘An Introduction to Cybernetics’.

This book has been out of print for many years, but it is well worth seeking it out. What follows

summarises his ideas, but with nowhere near the same quality of prose.

For consistency with modern systems engineering practice I have attempted to introduce the subject

using UML (Unified Modelling Language) diagrams, as these are becoming the de-facto standard, as

a means of capturing basic system function. Unfortunately, it soon became apparent that cybernetic

ideas cannot be expressed using them, as they are too application-oriented for the level of

abstraction required.

We are told that with the internet, we have access to more information than has ever been possible

in the past. Well, it all depends on how you define ‘information’. We have the basis of information

when we can change the state of the environment, for example tying a knot in a handkerchief to

remind us to do something. Whenever we alter our surroundings, we may ascribe meaning to the

changes we introduce. We code our abstract thoughts as configurations of matter in the physical

world.

The action of forces of nature on the configuration of matter may introduce changes, so that it no

longer conveys the same idea, e.g. an animal may accidentally trample a stack of stones left as a marker.

No matter how we encode it, any physical medium can become corrupted; there will always be noise

present. We usually distinguish a valid configuration from the corrupted ones by having a limited

number of valid configurations, which have a low probability of occurring by chance.

The commonest form of encoding is through speech. Of all the potential sounds and sequences of

sounds the voice is capable of making, only a tiny part of the potential repertoire is used to convey

messages.

Claude Shannon proved that there exists a coding scheme which will convey a message with an arbitrarily

small probability of error, provided the information rate is below the capacity of the noisy channel. It is a reasonable guess that spoken

language isn’t too far off this ideal encoding for the noise sources in existence when language first

emerged. Whether it remains ideal in the industrial age is a moot point.

The simplest form of encoding is to ensure the signal level is higher than the noise level. Ultimately,

the noise limits the difference between adjacent signal amplitudes, introducing a quantisation error,

even when the signal is not digital in nature.
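
The effect of noise on the number of usable signal levels can be illustrated with a minimal sketch. It rests on an assumption which the text does not make: that the noise is bounded, never exceeding plus or minus noise_peak, so that adjacent levels must be at least twice that amount apart to remain distinguishable. The function name and the numbers are purely illustrative.

```python
import math


def distinguishable_levels(signal_range: float, noise_peak: float) -> int:
    """Count the amplitude levels that bounded noise cannot confuse.

    Assumes (for illustration only) that the noise never exceeds
    +/- noise_peak, so adjacent levels are spaced 2 * noise_peak apart.
    """
    if signal_range < 0 or noise_peak <= 0:
        raise ValueError("signal_range must be >= 0 and noise_peak > 0")
    spacing = 2.0 * noise_peak
    return math.floor(signal_range / spacing) + 1


levels = distinguishable_levels(signal_range=10.0, noise_peak=0.5)
print(levels)              # number of usable levels across the range
print(math.log2(levels))   # equivalent number of bits per sample
```

Halving the noise roughly doubles the number of levels, which adds only about one bit per sample. In that sense even an analogue signal carries a finite amount of information.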

More typically, a set of symbols would be defined as a sequence of signal levels in time (e.g. Morse

code symbols), or in space (printed characters). We know that the set of permissible sequences is

limited for sequences conveying information. So a sequence of letters taken from the Latin alphabet

in which the letter X is as common as the letter E, is not likely to convey English language messages.

Similarly we could check to see whether the combinations of letters formed English words.

We could repeat this process by checking the frequency of words, but ultimately all we are doing is

checking that the message is valid English. We are not able, through this process, to decide whether

the message is true, or indeed conveys any meaning.

It is in the information-theoretic sense described above, that we claim there is an information

explosion. The reality is, searching for useful information is like picking up a weak signal on a radio

receiver – turning up the volume just increases the noise. The internet has given us a spectacular

increase in uninformed opinion, misinformation and utter nonsense, with valid information

becoming ever more elusive. The needle is the same size as ever, but the haystack has increased by

orders of magnitude.

Information theory infers that information is being conveyed if the statistical distribution of the

symbols in a message is different from a uniform distribution in which all symbols are equally likely.

The distribution implies the conveyance of information, without specifying its exact form. For this

reason the information is characterised as a function of the probabilities of each symbol occurring,

called the entropy of the message.
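
The text does not state the function itself. The sketch below assumes the standard Shannon definition, in which each symbol of probability p contributes p log2(1/p) bits, and estimates the probabilities from the symbol counts in a sample message. The function name and the sample strings are illustrative only.

```python
import math
from collections import Counter


def entropy_bits(message: str) -> float:
    """Shannon entropy, in bits per symbol, of the symbol frequencies in a message."""
    counts = Counter(message)
    total = len(message)
    # Each symbol with probability p = n / total contributes p * log2(1 / p).
    return sum((n / total) * math.log2(total / n) for n in counts.values())


uniform = "abcdabcdabcdabcd"  # four symbols, all equally likely
skewed = "aaaaaaaaaaaaabcd"   # the same four symbols, one dominant

print(entropy_bits(uniform))  # the maximum possible for four symbols (2 bits)
print(entropy_bits(skewed))   # noticeably lower
```

The uniform message attains the maximum entropy possible for its alphabet. Any departure from the uniform distribution lowers the figure, and it is this departure which the text identifies as the signature of a message.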

The entropy is defined in such a way that if a message is passed through a process the output

entropy is equal to the sum of the process entropy and that of the original message. As an example,

in order to improve the match between a message and a communications channel (which must have

capacity to transmit every symbol with equal probability), the message may pass through some form

of coder. At the other end, a decoder, matched to the coder and so by implication having the same entropy,

re-creates the original message. This matching of entropies illustrates a fundamental principle of

cybernetics, called the principle of requisite entropy.

A system which remains in the same state indefinitely is not particularly interesting, so cybernetics

concerns itself with the evolution of system states with respect to time, or some other independent

variable. The most important results can be derived using what is called a finite state machine

formulation.

The machine has a number of states which we can give English language labels, or symbols (e.g.

letters, pictograms, or numbers). These are precisely the same as a set of symbols we might use to

code information. We assume that the transitions between states take place instantaneously.

We describe our system as a set of symbols, and we can, over a long period of time determine the

probability of the machine being in each of its states at any particular time. Thus we can

characterize the machine’s evolution as an entropy, in much the same way as we can define the

entropy of a message made up of symbols taken from a set.

However, the statistical behaviour of a finite state machine introduces excessive complication, which

will obscure the concepts I am trying to introduce. The most important ideas can be understood,

assuming the machine to be deterministic, rather than statistical (stochastic) in nature.

Admittedly, systems which actually transition neatly between states in this manner are rarely, if

ever, to be found in nature. Our primary system consideration is whether the states can in fact be

reached and maintained. With a digital computer this issue is hidden in the design of the circuits; in

more general systems, we cannot be certain that this will be the case.

2.3 Transformation

Imagine yourself in an environmentally-controlled greenhouse, and you notice the following:

The temperature falls

The carbon dioxide concentration reduces

The humidity falls

You conclude that somebody has opened the door.

The UML state chart for this behaviour shows ‘Open door’ as the condition which causes the

transitions from the states before the door was opened to some (unspecified) time later. The

implication is that the disturbance caused by leaving the door open exceeds the capability of the

environmental control system to maintain regulation, so that a new (unsatisfactory) equilibrium

condition results.

Alternatively we may consider ‘Open door’ to be an operator, and we may denote it with an arrow

(→). We take one of the effects, say the first, and write it as a compact expression:

A → B

where A, shorthand for ‘warm’, is the ‘operand’; the arrow implies an influence; and B, shorthand for

‘cool’, is what Ross Ashby calls the ‘transition’. The total set of transitions consists of a vector of

operands which become a vector of transitions. The set of transitions is called a ‘transformation‘.

The UML state chart could represent this by defining a pair of compound states which include the

individual states:

The UML description necessarily contains labels associating the diagrams with specific entities,

which is fine for describing specific systems, but of little help in seeking universal principles which

apply to all systems. For this reason, the transition arrow label will be omitted from the state

diagrams and states will be labelled by letters, without reference to any specific system.

This example is an ‘open’ transformation, because the transitions and operands are from different

sets. If the transform has the same set of symbols in the operand and transition sets, it is called a

closed transformation.

All the above transforms are single valued. Other types are possible. For example, the transformation

A → B or D

B → A

C → B or C

D → D

is not single valued. In principle, the system may start and end on any state, so we have omitted the

start and end node from the state chart. The alternative is to present 16 practically identical

diagrams, which seems an inefficient means of presenting a relatively simple idea.

Another important type of transformation is ‘one-to-one‘. This is single valued, so that each

operand yields a unique transition, but in addition, each transition indicates a unique operand. A

transformation which is single valued, but not one-to-one is called ‘many to one’.

To complete the set of possible transforms, we have the identity which transforms the operand to

itself.
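The classification above can be sketched in Python, assuming a transformation is stored as a dictionary mapping each operand to the set of transitions it may yield. This representation and the function names are illustrative choices, and the `many_to_one` example is hypothetical.

```python
def is_closed(t):
    """Closed: every transition is itself one of the operands."""
    operands = set(t)
    return all(targets <= operands for targets in t.values())

def is_single_valued(t):
    """Single valued: each operand yields exactly one transition."""
    return all(len(targets) == 1 for targets in t.values())

def is_one_to_one(t):
    """One-to-one: single valued, and no two operands share a transition."""
    if not is_single_valued(t):
        return False
    images = [next(iter(targets)) for targets in t.values()]
    return len(set(images)) == len(images)

def is_identity(t):
    """Identity: every operand is transformed to itself."""
    return all(targets == {operand} for operand, targets in t.items())

# The first effect of 'Open door' from the text: A (warm) -> B (cool).
door = {"A": {"B"}}
# The example from the text that is not single valued.
multi = {"A": {"B", "D"}, "B": {"A"}, "C": {"B", "C"}, "D": {"D"}}
# Hypothetical many-to-one example: A and B both lead to B.
many_to_one = {"A": {"B"}, "B": {"B"}, "C": {"A"}}

print(is_closed(door), is_single_valued(multi), is_one_to_one(many_to_one))
```

Here `door` is open because B is not among its operands, `multi` is closed but not single valued, and `many_to_one` is single valued without being one-to-one.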

The transitions in UML state diagrams usually correspond to a defined condition, rather than the

natural evolution of the system over a single fixed time interval. For this reason they do not

represent an efficient means of expressing system behaviour, beyond the execution of computer

code.

Activity diagrams, sequence diagrams and collaboration charts are likewise doubtless invaluable

for defining how code should be organised and executed, but outside the realm of software

engineering UML appears somewhat over-sold.

2.4 Algebraic Description

The arguments of this and subsequent sections are more compactly illustrated by using a matrix

representation of the transitions. The first row is the start state, whilst the second row is the final

state. The presence of transformations which are not single valued raises the difficulty that more

than one matrix is needed. When this occurs, it is evident that more information is needed to

determine the system evolution.

For one to one, or many to one transitions, we have sufficient information in our transform

definition to determine how the system behaves when the transform is repeated.

We refer to T^n as the nth power of T, i.e. the result of applying T n times.

More generally, we have the product, or composition of two different transforms operating on the

same set of operands:
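A minimal Python sketch of both operations, assuming each closed single-valued transformation is stored as a dictionary from start state to final state (mirroring the two rows of the matrix form). The transformations `T` and `U` are hypothetical examples, not taken from the text, and `compose` states its order of application explicitly to avoid any ambiguity of notation.

```python
def compose(first, second):
    """Apply `first`, then `second`, to every operand of `first`."""
    return {x: second[first[x]] for x in first}

def power(t, n):
    """The transformation obtained by applying t n times (n >= 1)."""
    result = dict(t)
    for _ in range(n - 1):
        result = compose(result, t)
    return result

# Hypothetical closed, single-valued transformations on the states A to D.
T = {"A": "B", "B": "C", "C": "D", "D": "D"}
U = {"A": "A", "B": "A", "C": "B", "D": "C"}

print(power(T, 2))     # T applied twice
print(compose(T, U))   # T first, then U
print(compose(U, T))   # U first, then T
```

The two products differ, so the order in which the transforms are applied matters in general.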

Consider now a more complicated transformation:

By starting at any state and following the arrows, the repeated transformation ends up cycling

around a set of states indefinitely. If any state transitions back to itself, the transformation will stop

once it is reached. The separate regions of the kinematic graph are known as ‘basins‘. By

implication, the system either converges on to its final state or cycles around a set of states in a

basin.
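The behaviour described above can be sketched in Python by following the arrows from each state until a state repeats, then grouping states by the cycle they fall into. The six-state transformation `T` is a hypothetical example, and the dictionary representation (state to next state) is an assumption of this sketch.

```python
def attractor(t, start):
    """Follow the arrows from `start` until a state repeats; return the cycle reached."""
    seen = []
    state = start
    while state not in seen:
        seen.append(state)
        state = t[state]
    # Everything from the first visit of the repeated state onwards is the cycle.
    return frozenset(seen[seen.index(state):])

def basins(t):
    """Group the states by the cycle (or self-transitioning state) they end up in."""
    groups = {}
    for s in t:
        groups.setdefault(attractor(t, s), set()).add(s)
    return groups

# Hypothetical closed, single-valued transformation with two basins.
T = {"A": "B", "B": "C", "C": "B", "D": "E", "E": "E", "F": "E"}

for cycle, members in basins(T).items():
    print(sorted(cycle), "<-", sorted(members))
```

In this example A, B and C end up cycling between B and C, while D, E and F converge on E, which transitions back to itself.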

Unfortunately, this benign behaviour is only a consequence of the finite state machine assumption.

In practice it is very likely that there will be states which the machine can reach which are not in the

finite state machine model of the system. The belief that a finite state machine will be adequate

outside of a controlled environment is a dangerous delusion.

As an example, consider a strategy system which has a gambit for every anticipated move the

opponent may make. There is nothing to stop the opponent from making a move which was not

anticipated, and for which no counter was prepared.

The implicit assumption of the finite state machine, that the set of disturbances to which it is

subjected is closed, limits its operation to extremely benign controlled environments. A system

which is capable of surviving a real environment, with its open set of disturbances, presents a more

challenging problem.

2.5 The Determinate Machine with Input

A determinate machine is any process which behaves as a closed single-valued transformation.

By ‘single value’ we do not necessarily imply a single number, but an operand in a transformation.

Each operand in the previous section was a label covering sets of attributes; a particular combination

of data and/or behaviour which we called a ‘state’. Since each state is assigned a unique label, the

‘single-valued’ requirement implies that each state of the machine has exactly one successor

state.

We have defined our deterministic cybernetic machine by the transforms which characterise its

behaviour. The set of operands are states, which themselves may be transforms. Thus we can

define a transform U whose operands are transforms T1, T2 and T3. Applying U has the effect, for

example:

T1 → T2

T2 → T2

T3 → T1

In addition to our requirement for single valued transformations, this discussion requires all

transformations to be closed. The three transformations in U could have distinct sets of operands, in

which case, U is merely a ‘big’ transform, no different in nature from its component transforms. The

more interesting case is when all three component transforms have the same set of operands.

For example, we assume all three components have a common set of operands, A, B, C and D:

The three transformations may be considered three ways in which the same machine can be made

to behave. That it is the same machine is implicit in the common operands (states).

If we refer to ‘change’ in the context of such a machine, it is change in behaviour which is of interest,

rather than the transformations associated with each individual behaviour. Those with

programming experience will recognise the idea of a subroutine call in assembler, or a function/procedure

call in higher level languages. The subscript 1, 2 or 3 applied to the individual

transformations indicates which behaviour is selected at any time, and will be called a ‘parameter’.

A real machine which can be described as a set of closed single-valued transformations will be called

a machine with input. The parameter specifying which behaviour is selected is called the ‘input’.
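A machine with input can be sketched in Python as a table of transformations indexed by the parameter. The three transformations below are hypothetical; they share the operands A, B, C and D as in the text, but their transitions are invented for illustration.

```python
# Hypothetical machine with input: parameter value -> closed, single-valued
# transformation on the common states A, B, C, D.
R = {
    1: {"A": "B", "B": "C", "C": "D", "D": "A"},
    2: {"A": "A", "B": "A", "C": "B", "D": "C"},
    3: {"A": "D", "B": "D", "C": "D", "D": "D"},
}

def step(machine, state, parameter):
    """One transition: the input selects which transformation is applied."""
    return machine[parameter][state]

def run(machine, state, inputs):
    """Apply a sequence of input values and return the trajectory of states."""
    trajectory = [state]
    for parameter in inputs:
        state = step(machine, state, parameter)
        trajectory.append(state)
    return trajectory

print(run(R, "A", [1, 1, 2, 3]))
```

The states stay the same throughout; only the selected behaviour changes from step to step, which is the sense of ‘change’ used above.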

2.6 Coupling Systems

Suppose a machine P is to be connected to another, R. This is possible if the parameters of R are

functions of the states of P. We define the two machines by their sets of transformations. R will

have three, P only one.

We construct a relation, which is a single valued transformation relating the state of P to the

parameter values of R. We assume also that the two machines’ transforms are synchronised to a

common timescale. An example of the coupling relationship could be:

State of P:            i    j    k

Value of parameter:    2    3    2

The two machines now form a new machine of completely determined behaviour. The number of states

is equal to the number of combinations of the 3 states of P and 4 states of R (3 × 4 = 12), and each individual

state is a vector quantity, e.g. (a,i). Taking these compound states, a kinematic graph may be drawn

which has behaviour which is different from both P and R.
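The coupling can be sketched in Python as follows. Only the coupling relation (i → 2, j → 3, k → 2) comes from the text; the transformations chosen for P and R are hypothetical. The sketch also assumes that at each synchronised step the current state of P selects the parameter of R.

```python
# Hypothetical machine P: a single transformation on states i, j, k.
P = {"i": "k", "j": "k", "k": "i"}

# Hypothetical machine R: three transformations on states a, b, c, d.
R = {
    1: {"a": "c", "b": "d", "c": "d", "d": "b"},
    2: {"a": "b", "b": "a", "c": "d", "d": "c"},
    3: {"a": "d", "b": "c", "c": "d", "d": "b"},
}

# Coupling relation from the text: state of P -> value of R's parameter.
Z = {"i": 2, "j": 3, "k": 2}

def couple(p_machine, r_machine, relation):
    """Build the transformation of the combined machine on compound states (r, p)."""
    r_states = next(iter(r_machine.values())).keys()
    return {
        (r, p): (r_machine[relation[p]][r], p_machine[p])
        for r in r_states
        for p in p_machine
    }

coupled = couple(P, R, Z)
print(len(coupled))           # number of compound states
print(coupled[("a", "i")])    # successor of the compound state (a, i)
```

The result is itself a closed single-valued transformation on the twelve compound states, so a kinematic graph of the combined machine can be drawn from it in the same way as for a single machine.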