Partial-Solutions-blog


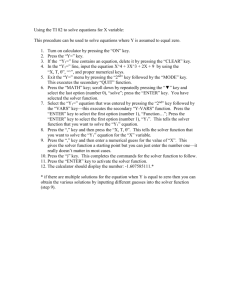

KAP’s Corner: Partial Solutions

Let’s say you are running one of the study types that require an iterative solution: the problem involves Large Deflection, or Contact, or is Non-Linear. And after what may be ages of compute time, you get the error message “Iterative Solver Failed to converge at XX% loading”. That can be pretty frustrating. But despair not: there is still information to be gleaned from this mode of failure. In fact, the term ‘failure’ may not really apply, at least not to the FEA software. The system may be trying to tell you something critical about the design and/or the level of loading. In this KAP’s Corner we dissect the three reasons why the iterative solver can become unstable, and what to do next.

About KAP
Keith A. Pedersen, CAPINC Principal Engineer, SolidWorks Elite Applications Engineer
Keith Pedersen has a BSME from Clarkson College and an MSME from Boston University. After a stint at General Electric in Burlington, VT, Keith was the lead Applications Engineer for Advanced Surfacing products for Matra Datavision USA, including EUCLID-IS, UniSurf, and STRIM. He joined CAPINC in 1998 to support advanced surfacing applications in SDRC I-DEAS and joined our SolidWorks group one year later. Keith has extensive industry and consulting experience in non-linear Finite Element Analysis and Computational Fluid Dynamics in addition to surfacing applications. He is a Certified SolidWorks Professional (CSWP) and certified to train and support Simulation. Keith has contributed over a dozen presentations to SolidWorks World conferences since the year 2000.

About CAPINC
Awarded Number One in Customer Satisfaction in North America by DS SolidWorks. CAPINC provides outstanding support for SolidWorks, Simulation, and Enterprise PDM customers throughout New England.
Your Source for CAD Excellence: Products, Training and Service. www.capinc.com
Copyright 2014 CAPINC

1) Stiffness Matrix Stability

If you were to write the equation of static equilibrium that governs a single, simple coil spring acting in one direction (say, y), it would look like this:

$$f = k\,y$$

The force on the spring equals the stiffness, k, times the stretch in y. The mesher turns your CAD model into a collection of finite elements, and each element is essentially also a spring: a 3-dimensional spring, with 6 degrees of freedom instead of one, but still just a spring! So the FEA process creates a massive set of equations, in which the stiffness of every mesh element, in each direction of action, becomes a separate term buried within a matrix. The equation above still applies, but now in matrix form:

$$\Sigma F = [K]\,[d]$$

So now the forces acting on the entire structure, ΣF, must balance the stiffness matrix, [K], times the matrix of x, y, z deflections, [d], of all the nodes.

This simplified view is how the programmer sees your study. Once the solver has started running, he has lost all the benefits of visual intuition and spatial reasoning. He cannot ‘see’ your CAD parts any more; he is just handling a large chunk of numbers (especially the K matrix). So when something goes wrong in the matrix, he can’t give you much information about what exactly to do about it. That’s why we need a toolkit of techniques to tweeze information out of problems that halt prematurely.

Now, if you had not restrained your parts properly, the programmer can usually detect that while he is still assembling the K matrix. He might notice a whole slew of elements that do not connect back to any ground, so there will be a lot of null values in the matrix. In this case the problem fails to start, and right away you get a fairly clear error message.
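To make the matrix picture concrete, here is a minimal sketch, assuming a one-dimensional chain of springs with a single deflection per node (nothing like the 3-D elements the mesher actually builds, and not how SolidWorks Simulation assembles or factors its matrices). The hypothetical helpers `assemble_chain` and `solve` build [K] from individual spring stiffnesses and solve ΣF = [K][d] by Gaussian elimination. When nothing ties the chain to ground, elimination hits a zero pivot, which is this toy model’s version of the ‘fails to start’ error for an unrestrained model.

```python
def assemble_chain(k_ground, k_links):
    """Assemble [K] for a 1-D chain of nodes joined end to end by springs.

    k_ground: stiffness of the spring tying node 0 to ground (0.0 = unrestrained).
    k_links:  stiffness of the spring joining node i to node i + 1.
    """
    n = len(k_links) + 1
    K = [[0.0] * n for _ in range(n)]
    K[0][0] += k_ground
    for i, k in enumerate(k_links):
        j = i + 1
        # Each spring adds its 2x2 element stiffness [[k, -k], [-k, k]].
        K[i][i] += k
        K[j][j] += k
        K[i][j] -= k
        K[j][i] -= k
    return K


def solve(K, F, rel_tol=1e-12):
    """Solve [K]{d} = {F} by Gaussian elimination with partial pivoting."""
    n = len(F)
    scale = max(abs(v) for row in K for v in row) or 1.0
    A = [row[:] + [f] for row, f in zip(K, F)]  # augmented matrix [K | F]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        if abs(A[p][c]) < rel_tol * scale:
            # A zero pivot means some motion costs no force: a rigid-body mode.
            raise ValueError(f"[K] is singular at unknown {c}; check restraints")
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, n):
            m = A[r][c] / A[c][c]
            for cc in range(c, n + 1):
                A[r][cc] -= m * A[c][cc]
    d = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = A[r][n] - sum(A[r][cc] * d[cc] for cc in range(r + 1, n))
        d[r] = s / A[r][r]
    return d


# Node 0 grounded by a 100 lb/in spring, node 1 hung off it by a 50 lb/in spring.
print(solve(assemble_chain(100.0, [50.0]), [0.0, 10.0]))  # about [0.1, 0.3]

try:
    solve(assemble_chain(0.0, [50.0]), [0.0, 10.0])  # nothing tied to ground
except ValueError as err:
    print("fails to start:", err)
```

The restrained case gives the same answer as two springs in series (10 lb / 100 lb/in at node 0, plus 10 lb / 50 lb/in more at node 1); the unrestrained case never gets as far as producing deflections.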
But the vast majority of partial-completion failures occur because, at some load factor, the K matrix goes unstable. By ‘unstable’ we mean that, for a small incremental increase in the forces, ΣF, we suddenly get unmanageably large values for the deflection, [d]. Sometimes what kills the solver is that portions of the stiffness matrix seem to have no stiffness at all. Sometimes a bunch of elements still have SOME stiffness, but it changes suddenly, and it is the abruptness of that change that kills the solver’s ability to predict what happens at the next load increment.

Here’s the key point: if a problem was able to solve up to, say, 75% of the loading, but then fails to push past 80%, then what you are trying to diagnose is what went wrong at that loading. What was the shape of the deformed structure? How do the parts contact each other? How are the most highly loaded elements behaving, at that loading? Something changes very suddenly at that load point, and the programmer cannot tell you why, so you need to back the study up a bit and figure out what is happening, with the benefit of your visual intuition.

2) Four Cases of Instability

This listing is sorted in order of increasing perniciousness.

Solver Failed: Do you want to re-start? The outermost control loop of the iterative solver is where the load factor is incremented with each time step. If you are running the Non-Linear solver, you have direct control over this time stepping. But for users of Linear Static studies, the time stepper is out of your hands. The programmers start small, with maybe a 2% or 3% load factor, and then ramp up quickly, accelerating the load factor to get you the answer as fast as possible. Sometimes their ramp-up strategy is too aggressive, and then you get the “Solver Failed” message. This is not nearly as daunting as it looks. Click YES. Always.
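The retreat-and-retry behavior can be sketched with a toy model, assuming a single ‘softening’ spring whose internal force is k·y - c·y³, so its tangent stiffness k - 3c·y² falls to zero at a limit load. The hypothetical `solve_with_cutbacks` ramps the load factor up, accelerating after each converged increment; when the Newton equilibrium iterations fail, it keeps the last converged state and halves the step, much like answering YES to the restart prompt. This is a generic incremental-iterative scheme, not SolidWorks’ actual autostepper. Because this particular spring has a genuine limit, the cutbacks can only home in on it, and the function then returns the last converged load factor, much as the solver saves results for the last good increment.

```python
import math


def newton(y, target, k, c, tol=1e-8, max_iter=50):
    """Equilibrium iterations for the softening spring k*y - c*y**3 = target.

    Returns the converged deflection, or None when the tangent stiffness
    has vanished or the out-of-balance force will not drop below tol.
    """
    for _ in range(max_iter):
        residual = target - (k * y - c * y ** 3)  # out-of-balance force
        if abs(residual) < tol:
            return y
        k_tangent = k - 3 * c * y ** 2  # local slope of the force-deflection curve
        if k_tangent <= 0:
            return None  # no stiffness left to push against
        y += residual / k_tangent
    return None


def solve_with_cutbacks(P, k, c, first_step=0.02, growth=1.5, min_step=1e-3):
    """Ramp the load factor from 0 to 1, retreating and halving the step on failure.

    Returns (last converged load factor, its deflection, number of cutbacks).
    """
    lam, y, step, cutbacks = 0.0, 0.0, first_step, 0
    while lam < 1.0:
        trial = min(1.0, lam + step)
        y_trial = newton(y, trial * P, k, c)
        if y_trial is None:
            cutbacks += 1
            step /= 2  # keep (lam, y) from the last good increment
            if step < min_step:
                break  # give up, but keep the last converged result
            continue
        lam, y = trial, y_trial
        step *= growth  # accelerate while increments keep converging
    return lam, y, cutbacks


k, c = 100.0, 1.0
limit = (2 / 3) * k * math.sqrt(k / (3 * c))  # peak force the spring can carry
for P in (350.0, 500.0):
    lam, y, cutbacks = solve_with_cutbacks(P, k, c)
    print(f"P={P:.0f}: reached {lam:.1%} of load "
          f"(limit at {limit / P:.1%}), {cutbacks} cutbacks")
```

Below the limit (P = 350) every increment converges and the ramp reaches 100%. At P = 500 the stepper stops just short of the limit point at roughly 77% of the load, even though nothing is wrong with the iteration scheme itself; that distinction between a too-aggressive step and a genuine loss of stiffness is what separates the first case from the ones that follow.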
The solver will NOT go back and start over from the beginning. It merely retreats to the last load increment that DID succeed, and then baby-steps forward from there to try to get ‘over the hump’. I’ve had some problems prompt me a second time later in the same study. And once (only once) I had to click YES to this prompt a third time to get the study to run to completion. These were all cases where the autostepper was just trying to chew too much at a time and needed to take smaller bites. If the study STILL does not complete, then you have one of the next three problems, below.

Large-Scale Motion: I call these ‘slip-and-fall’ problems. They are usually associated with one or more Contact conditions. Pictured at left is a very simple, but classic, example of load-dependent instability. The cantilevered beam from the left is supposed to land on the platform of the column at right, and it WILL, as long as the load is low enough that the beam flexure does not cause it to slip off.

If a study like this fails to run at the desired loading, re-run it with all the loads reduced by a factor of 100, perhaps even less, to get a visual idea of the deflection pattern at some load level that CAN succeed. Then manually scale the resulting plots back up by the same 100x factor. Large-displacement effects and non-linearities aside, this will give you a good idea of what the system is trying to achieve at the full load. When the pictured system is run with a 1-lb load, we get a good idea where it is headed, and the study took only 2 minutes to complete.

The next picture, below, shows what happens when the force across the beam is set to 100 lbs. In SolidWorks 2013 and before, this study would simply have said “Failure to converge at load increment 51%”.
But in a 2014 enhancement to provide better diagnostics for contact problems, the solver now detects when a contact set that HAD been functioning suddenly fails to produce any contacts, and it now allows you to reset the time stepper, much like the first failure case cited above. This looks promising, but unlike the first case, the fatal flaw is in the geometry, not in the aggressiveness of the solver. If you say YES to restart this problem, it will eventually error out. Mine stated “Analysis Failed After 5 Trials”, and then I got the message: failure at load increment 50.8%, saving results for 50% loading.

In my view, this is not a ‘failure of the solver’; it is instead failing the design for that particular load case. The deflection pattern at the 50% loading tells the story (picture at left).

If for some reason the solver does NOT offer you the chance to save the results at the last good load increment, you can get this result yourself. Simply multiply all your loads by a tad less than the failing load factor (I used 48% in this case) and re-run the study. It will complete and give you a good deflection picture of exactly what is going on just before it goes unstable. Note, however, that you re-run this study only to understand the deflection pattern visually. You don’t need to re-run it to get the failure threshold; you already have that number from the error message of the ‘failed’ study! And computing the ‘good’ study at a value so close to the moment of instability is not cheap: in my case this study ran 50 minutes, versus only 2 minutes for the 1-lb study used to qualify the loads.

Another flavor of this problem occurs when friction is an important stabilizing element in the study.
If you model a shaft gripped in a collet, set the collet gripping force to 100 pounds, and set the coefficient of friction to 0.35, you should not blame the solver if the study goes unstable and halts at a pull-out force of 35+ pounds (or at a torque level that exceeds the same friction-limited grip strength).

Large-Scale Buckling: The image at left is the setup for a classical end-loaded column buckling problem. I’m sure your problems are a lot more complex. I use it as an illustration because A) everyone can understand this picture and agree on what “should happen”, and B) any much more complex (assembly) study can fail for exactly the same reasons, just in harder-to-see places.

Not every SolidWorks Simulation user has a license to run Buckling Analysis (part of Simulation Professional), but I’m going to use it here first, to set our expectations of a ‘reasonable’ end load. I’ve loaded the column with 100 lbs. The study predicts a buckling load factor of 129.3, which means our column will very likely collapse laterally if the load is 12,930 pounds or more. We then set up a linear static study with the end load slightly less than this; I used 12,000 lbs. Regardless of the solver used, and with the large-deflection flag ON or OFF, the study runs fine. The predicted stress level is 4800 psi, which, given the cross-sectional area of 2.5 sq. in., is quite believable.

Now we increase the load to 13,500 lbs. If we run without the large-deflection flag set, the study runs fine! It predicts 5400 psi mean stress, and the deflection increases in proportion to the prior study. So why did it NOT fail?
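The figures quoted above are simple arithmetic, and a standard textbook approximation hints at the answer to that question. Here is a minimal sketch, assuming Coulomb friction for the collet and the common magnification factor 1/(1 - P/Pcr) for the lateral deflection of a slightly imperfect column; the helper names are hypothetical, and SolidWorks does not report this factor. A linear static solve never evaluates anything like it, so its stress simply scales with load, while the factor itself blows up as the end load approaches the critical load and has no positive value beyond it.

```python
def pullout_limit(grip_force, mu):
    """Coulomb friction: a grip can resist at most mu times its normal force."""
    return mu * grip_force


def critical_load(reference_load, buckling_load_factor):
    """A linear buckling study reports collapse at load_factor x applied load."""
    return reference_load * buckling_load_factor


def mean_axial_stress(load, area):
    return load / area


def magnification(load, p_critical):
    """Approximate growth of an imperfect column's lateral deflection, 1 / (1 - P/Pcr).

    Returns None at or beyond Pcr, where this simple model has no stable
    equilibrium (its effective lateral stiffness is zero or negative).
    """
    if load >= p_critical:
        return None
    return 1.0 / (1.0 - load / p_critical)


print("collet slips above", pullout_limit(100.0, 0.35), "lb of pull")
p_cr = critical_load(100.0, 129.3)  # lb
for P in (12_000.0, 13_500.0):
    print(f"{P:,.0f} lb: {mean_axial_stress(P, 2.5):,.0f} psi, "
          f"magnification {magnification(P, p_cr)}")
```

At 12,000 lb the lateral deflection is already amplified roughly fourteen-fold in this approximation; at 13,500 lb there is no stable answer at all, even though the linear stress of 5400 psi looks perfectly ordinary.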
Without the Large Deflection flag turned on (and also lacking any Contact conditions), we have simply not invoked the iterative solver. The stiffness matrix computed at the start of the problem is presumed to hold sway, unchanged, across the entire load, so the only stability problems we could really see here are initial stability problems (like insufficient restraints). Run the same study again with the large-deflection flag active, and you get… the results pictured below. Sometimes, when there is no Contact set to solve, the study just fails without even giving you a % completion message.

KAP’s Tip: For linear studies, the Direct Sparse solver plus “Inertial Relief” can often get the solver to complete the loading.

If you are running a Non-Linear study, the solver results are saved for post-processing at each load increment, and you can control the load increments manually to suit your own appetite for solve time versus accuracy. However, if you do not have a license for the non-linear solver, this is the most annoying type of run-time failure, because it very well might not show you the last load increment it successfully achieved, or the load factor at which it failed. The only way to find the edge of the cliff is to back your loads off to 80% or 60% of the original loading and try again, until you home in on the load factor at the moment of failure. Hopefully you won’t need this sort of assessment very often; usually it is enough just to realize that the design will buckle at the intended loading, which warrants going back to the drawing board.

Interestingly, there is sometimes a way to ‘force’ the solver to run past the point of instability, if you really still need that result.
You will have to turn on the Direct Sparse solver to do this, and then invoke the option “Use Inertial Relief” on the Solver Properties page (see below). This stabilizes the model against unintended force imbalances. It does not work by nulling out the resulting forces, but instead by computing the reactions, deflecting the model in the indicated direction, and then nulling out any residual velocities so that they don’t accumulate with each iteration, essentially preventing acceleration.

Small-Scale Buckling: Ah, now for the creepiest of problems to diagnose. A single, highly loaded finite element (triangular shell or tetrahedral solid) can buckle, too. This is more commonly the case in Contact problems, at locations of very high compressive loading, on the interior of small fillet radii, in notches, or at locations where very small model edges result in small mesh elements adjacent to rather large ones.

First, the root cause. Our default solid element is the Tet-10, a tetrahedron with 4 vertex nodes and a mid-side node on each of its 6 edges. The mid-side nodes give it additional flexibility, so it can not only undergo linear tension/compression and linear shear, it can also flex in (second-order) curvature. The mid-side nodes also let the initial mesh follow curved model surfaces with greater fidelity. The default shell element, the Tri-6, has three mid-side nodes as well. Mid-side nodes are usually a good thing.

The problem with mid-side nodes is what can happen if the tet is created very thin in one direction (from a vertex to the opposite face) and very large and curved as seen from two, or all three, of the other vertices. The two images at left try to capture this. This is not so much a problem of the element’s ASPECT (although that is a contributor), but of the element’s CURVATURE.
If this element lies on the inside of a curved model face that is loaded in compression, the element can easily buckle, folding in upon itself. The element pictured at left has already started to fold: in the lower image you can see the red face ‘bleeding through’ the back, more planar-looking face. Another way of looking at this: the element computes its equations of static equilibrium at its center, and it can be a real problem if that ‘center’ lies OUTSIDE the element.

There is a quality check intended to catch this sort of problem, a matrix operation called the “Jacobian Check”. If a tetrahedron is absolutely perfect, equilateral, with no initial curvature, the Jacobian comes out to 1. The more highly curved the element, the higher the Jacobian. For linear static problems, the programmers tell us that a Jacobian result as high as 40 can still be OK. And if the element actually collapses, the Jacobian check suddenly becomes a negative number.

In a linear static problem you will seldom have this problem. First, because the mesher has an Advanced option called “Jacobian Points”, and the default setting does a quick-n-dirty Jacobian check at the 4 ‘quadrature’ points that should lie inside the element. This is usually good enough. You can choose a slower but more thorough diagnosis by checking at 16 or 29 interior points. The second reason a linear static study will seldom buckle at an element is that it is not iterative. But when you have the Large Displacement flag turned on, the software applies the loading in small increments, and it also re-evaluates the stiffness matrix to see how the structure might react differently in each flexed position. This is the rub.
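The Jacobian check can be sketched for a single 10-node tet, assuming a generic definition: the ratio of the largest to the smallest Jacobian determinant over a set of sample points, reported as invalid when any determinant is zero or negative. That definition is an assumption, not necessarily SolidWorks’ exact formula, so treat the numbers as illustrative; with it, any straight-edged tet scores 1 (not only an equilateral one). The sketch moves the mid-side node of one edge of a unit-sized element 0.3 units toward the interior of an adjacent face, so that edge bows inward, and evaluates it at a common 4-point Gauss rule, and then again with the corner nodes added as sample points. All names are hypothetical.

```python
# Barycentric coordinates (L0, L1, L2, L3) of a common 4-point Gauss rule for tets.
A, B = 0.5854101966249685, 0.1381966011250105
GAUSS4 = [(A, B, B, B), (B, A, B, B), (B, B, A, B), (B, B, B, A)]
CORNERS = [(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 0.0), (0.0, 0.0, 1.0, 0.0), (0.0, 0.0, 0.0, 1.0)]
# Mid-side nodes, identified by the two corners (0-based) whose edge they sit on.
EDGES = [(0, 1), (1, 2), (2, 0), (0, 3), (1, 3), (2, 3)]
# Gradients of L0 = 1 - xi - eta - zeta, L1 = xi, L2 = eta, L3 = zeta.
DL = [(-1.0, -1.0, -1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def jacobian_det(nodes, L):
    """det of d(x, y, z)/d(xi, eta, zeta) for a 10-node tet at barycentric point L.

    nodes: 4 corner coordinates followed by 6 mid-side coordinates in EDGES order.
    """
    # Corner shape functions L_i (2 L_i - 1) and mid-side functions 4 L_i L_j.
    grads = [[(4 * L[i] - 1) * g for g in DL[i]] for i in range(4)]
    grads += [[4 * (L[j] * DL[i][a] + L[i] * DL[j][a]) for a in range(3)] for i, j in EDGES]
    J = [[sum(g[a] * x[b] for g, x in zip(grads, nodes)) for b in range(3)] for a in range(3)]
    return det3(J)


def jacobian_ratio(nodes, points):
    """Largest / smallest Jacobian determinant; None if the element is inverted."""
    dets = [jacobian_det(nodes, L) for L in points]
    if min(dets) <= 0:
        return None
    return max(dets) / min(dets)


def unit_tet10(mid_offset=(0.0, 0.0, 0.0)):
    """Straight-sided unit tet whose edge 0-1 mid-side node is moved by mid_offset."""
    corners = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    mids = [tuple((corners[i][k] + corners[j][k]) / 2 for k in range(3)) for i, j in EDGES]
    mids[0] = tuple(m + o for m, o in zip(mids[0], mid_offset))
    return corners + mids


print(jacobian_ratio(unit_tet10(), GAUSS4))                           # about 1.0
print(jacobian_ratio(unit_tet10((0.0, 0.3, 0.0)), GAUSS4))            # about 2.8
print(jacobian_ratio(unit_tet10((0.0, 0.3, 0.0)), GAUSS4 + CORNERS))  # None
```

The bowed element passes the 4-point check with a modest ratio, yet its determinant is already negative at one corner. In this toy case a sparse set of interior sample points can miss a region that is already folded, which is one reason a more thorough check with more sample points can be worth the extra time.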
An element with a so-so Jacobian check as initially meshed can be loaded so highly that it flexes into a potato-chip shape, collapses, and suddenly loses all its strength. It can even ‘snap through’ and start acting like ‘negative material’ (something that usually only happens on Star Trek).

KAP’s Tip: Draft-quality mesh elements are immune to buckling errors.

If you have an iterative study that fails after some percentage of loading, but it can at least show you results for the last saved, good loading, then display a plot of the Equivalent Strain. Most of your strains should be well under one percent (0.01). But if you see one particular element at 3% or 5%, or up to 15% strain? That’s a red flag. A Mesh Plot of the Jacobian will likely show that element’s Jacobian to be very high (10 or more), and the local stresses folded it up.

The solutions to small-scale element buckling all focus on the mesh.

1) Place a Local Mesh Control on the element’s CAD geometry, and make it at most 1/3 the size of the mesh element that just failed.

2) Local Mesh Controls have a setting for the element growth ratio, and the default value is 1.5 (so, 50% larger at each row). I hate that value. It is a lousy default, usually too aggressive, and I seldom go higher than 1.35. But in the case of a buckling element, you want to baby-step the mesh away, at a ratio of perhaps 1.2 or 1.15.

3) Or, you can switch to a completely different mesh strategy. High-quality tets can buckle only because they have mid-side nodes. But Draft-quality elements, the Tet-4s, cannot collapse. They’re not as accurate, but they are a lot more robust. To get the same overall accuracy, you would need to mesh the overall problem with roughly half the element size. The problem will run slower with Draft-quality tets, because at half the size there will be roughly 8 times more of them.
However, a Tet-4 has only 40% as many degrees of freedom as a Tet-10 (4 nodes versus 10, each with three displacements), so it is a cheaper element, and your actual solve time will go up by only about 3x (roughly 8 times the elements at 40% of the cost each). I would STILL apply a local mesh refinement in the problem area, as outlined in steps 1 and 2 above, but now more for accuracy than for stability. The Draft Tet-4 elements simply cannot buckle.

This approach of dropping back to Tet-4 elements makes even more sense if your problem is highly non-linear, for example involving elastomers or an elasto-plastic stress-strain curve. These problems tend towards large strains. Large plastic strains can create a different kind of numerical instability, so there are a couple of other tips we need to cover in that specific case… but that is a topic for another upcoming KAP’s Corner.

Have a SolidWorks bone to pick? Want more tips in a specific area of CAD? Keith is looking for requests from users for future KAP’s Corner topics. Email your suggestions to: marketing@capinc.com