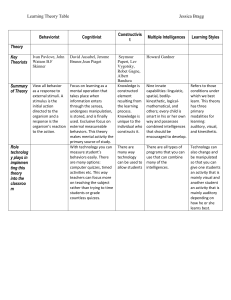

Supplementary Table S1


Supplementary methods, results and discussion

Partial integration using a coupling prior

Previous research has proposed modeling partial integration with a Gaussian coupling prior pc (∈ [0,1]) that controls the extent to which sensory signals are integrated. For comparison, we therefore also fitted this model to the auditory localization responses (Bresciani, Dammeier, & Ernst, 2006; Ernst, 2006). The coupling prior is defined as a two-dimensional Gaussian prior distribution over bisensory signal locations (i.e., SA and SV):

$$p(S_A, S_V) = \mathcal{N}\left([0\;\;0],\; \begin{bmatrix} \sigma_P & \sigma_P p_c \\ \sigma_P p_c & \sigma_P \end{bmatrix}\right) \tag{9}$$

If the coupling prior pc approaches one (i.e., the prior’s covariance is high), the prior distribution forms a Gaussian ridge along the diagonal, which models the integration of signals. If the coupling prior approaches zero (i.e., the prior’s covariance is low), the prior forms a circular Gaussian blob, which models segregation of the signals. Hence, the coupling prior pc controls the extent of integration (pc = 1) versus segregation (pc = 0) of signals.

Given the auditory and visual signal sources, the model assumes that auditory and visual signals are sampled independently from Gaussian distributions. Hence, the likelihood is specified as:

$$p(x_A, x_V \mid S_A, S_V) = \mathcal{N}\left([S_A\;\;S_V],\; \begin{bmatrix} \sigma_A & 0 \\ 0 & \sigma_V \end{bmatrix}\right) \tag{10}$$

We obtain the posterior distribution by applying Bayes’ rule:

$$p(S_A, S_V \mid x_A, x_V) \propto p(S_A, S_V)\, p(x_A \mid S_A)\, p(x_V \mid S_V) \tag{11}$$

Because the prior and the likelihoods are Gaussian, the posterior is also Gaussian, so that the maximum-a-posteriori estimate (Bishop, 2006) can be specified as:

$$[\hat{S}_A\;\;\hat{S}_V]^T = \left( \begin{bmatrix} \sigma_P & \sigma_P p_c \\ \sigma_P p_c & \sigma_P \end{bmatrix}^{-1} + \begin{bmatrix} \sigma_A & 0 \\ 0 & \sigma_V \end{bmatrix}^{-1} \right)^{-1} \left( \begin{bmatrix} \sigma_A & 0 \\ 0 & \sigma_V \end{bmatrix}^{-1} [x_A\;\;x_V]^T \right) \tag{12}$$

To fit the Coupling prior model to the data, we simulated xA and xV and computed the predicted distributions (i.e., p(ŜA | SA, SV, 1/σV²)), which we linked to the participants’ auditory localization responses exactly as described for the CI models in the main paper.
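As an illustration of Equation (12), the following is a minimal NumPy sketch, not the fitting code used in the study. It assumes that the matrix entries in Equations (9) and (10) denote variances, and that pc is strictly smaller than one so that the prior covariance can be inverted. The function name `coupling_prior_map` and all argument names are hypothetical.

```python
import numpy as np


def coupling_prior_map(x_a, x_v, var_a, var_v, var_p, pc):
    """Return the MAP estimate [S_A_hat, S_V_hat] for a zero-mean coupling prior.

    Assumption: var_a, var_v and var_p are variances; pc must be < 1,
    otherwise the prior covariance matrix is singular.
    """
    # Prior covariance: var_p on the diagonal, var_p * pc off the diagonal.
    prior_cov = np.array([[var_p, var_p * pc],
                          [var_p * pc, var_p]])
    # Likelihood covariance: independent auditory and visual noise.
    like_cov = np.diag([var_a, var_v])

    prior_prec = np.linalg.inv(prior_cov)
    like_prec = np.linalg.inv(like_cov)

    # Posterior covariance times the precision-weighted sensory signals.
    post_cov = np.linalg.inv(prior_prec + like_prec)
    return post_cov @ (like_prec @ np.array([x_a, x_v]))


# Conflicting signals: sound at +10 deg, reliable visual signal at -10 deg.
segregated = coupling_prior_map(10.0, -10.0, 100.0, 4.0, 100.0, pc=0.0)
integrated = coupling_prior_map(10.0, -10.0, 100.0, 4.0, 100.0, pc=0.999)
print("pc = 0.0  :", segregated)
print("pc = 0.999:", integrated)
```

With pc = 0 the two estimates depend only on their own signal, whereas with pc close to one both estimates are drawn towards a common, reliability-weighted location.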
Note that the Coupling prior model cannot be fitted to participants’ common-source judgment responses because it does not explicitly model the two alternative causal structures that could have generated the sensory signals. Thus, when comparing the Coupling prior model to the CI models, we fitted both models to auditory localization responses only (see supplementary Tab. S2). Fitting the Coupling prior model to participants’ auditory localization responses, we obtained an average coupling prior of 0.86, which indicates relatively strong audiovisual integration (see supplementary Tab. S2). In line with previous findings (Koerding et al., 2007), the Coupling prior model performed worse than the CI models because it cannot account for the non-linear response behavior, i.e., the transition from integration to segregation as a function of spatial disparity (see Fig. 2B, C and S3B, C; for further discussion see Shams and Beierholm, 2010).

Supplementary experiment 1: Ventriloquist paradigm with additional unisensory conditions

Experimental paradigm and analysis

Eight independent participants (3 female, mean age 28 years, range 22-36 years) took part in an additional experiment that was mostly equivalent to the spatial ventriloquist paradigm reported in the main paper. However, this experiment included unisensory auditory and visual conditions, which allowed us to estimate the auditory and visual variances from the unisensory conditions alone and then to treat them as known when fitting the CI model to the bisensory conditions. In the unisensory auditory conditions, the participants used four response buttons to localize auditory signals (HRTF-convolved white noise, 50 ms duration) that were presented at four locations along the azimuth (i.e., -10°, -3.3°, 3.3° or 10°; these 4 conditions were presented randomly in 64 trials).
In the unisensory visual conditions, participants localized a Gaussian cloud of 20 white dots presented for 50 ms on a black background at one of the four locations. The horizontal standard deviation of the Gaussian cloud was set to 2° or 14° visual angle to generate two levels of visual reliability (i.e., 4 × 2 conditions randomly presented in 128 trials). In bisensory conditions, auditory and visual signals were presented synchronously yet independently at one of the four locations and at the two levels of visual reliability (i.e., 4 × 4 × 2 conditions randomly presented in 512 trials). Please note that, as in the main paper, the auditory and visual locations were sampled independently. In separate experimental sessions, participants either selectively localized the auditory spatial signal (i.e., 512 trials of auditory localization) or indicated whether the visual and auditory signals were generated by a common source or by independent sources using a two-choice key press (i.e., 512 trials of common-source judgments). The experimental setup was identical to the main experiment.

First, we analyzed the auditory localization responses and common-source judgments and fitted the six CI models individually to participants’ responses as in the main experiment to allow comparison between the two experiments (see supplementary Fig. S3 and Tab. S3). Second, we estimated the variances of the spatial prior and the auditory and visual percepts (σP², σA², σV1², σV2²) from the localization responses in the unisensory auditory and visual conditions using an independent generative model for the visual and the auditory signals (i.e., the auditory or the visual model component under full segregation, see equation (3) in the main paper). As the variance estimates obtained from the unisensory conditions alone were small (cf. Tab. S3 vs. S4), the predicted response distributions for the bisensory conditions did not always overlap with participants’ response distributions. Therefore, we included a “trembling hand” parameter α to model that participants choose a random response button by chance on a fraction of trials. This trembling hand parameter is similar to a lapse parameter when fitting psychometric functions.

In summary, we fitted the sensory variance parameters (σA, σV1, σV2), the trembling hand parameter α and the spatial prior’s variance (σP) individually to participants’ localization responses in the unisensory conditions. We then treated these parameters as known (α and σP were averaged across the unimodal auditory and visual model fits) and fitted the remaining free parameter, the common-source prior (pcommon), jointly to participants’ auditory localization responses and common-source judgments in the audiovisual conditions, independently for each of the six CI models.

Results and Discussion

First, when all parameters were estimated from the audiovisual conditions, the model predictions from supplementary experiment 1 replicated the profile of common-source judgments, visual bias and localization variability that we observed in the main experiment (supplementary Fig. S3): Large spatial disparities reduced common-source judgments. Further, spatial disparity was especially informative for common-source judgments when visual reliability was high (Fig. S3A). Likewise, the visual bias on the perceived auditory location and the reduction in localization variability were greater for high visual reliability and small spatial disparities (Fig. S3B, C). Moreover, model comparison based on group BIC (Tab. S3) demonstrated that participants used model averaging for implicit causal inference and a fixed threshold of 0.5 for explicit causal inference. Second, when estimating the variance parameters from the unisensory conditions alone, we obtained markedly smaller visual and auditory variances (Tab. S4).
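The trembling hand parameter α described in the methods above can be illustrated with a short sketch. The text states only that participants press a random button on a fraction of trials; the uniform mixture below is the usual lapse-rate formulation and is an assumption here, as are the function name `apply_trembling_hand` and the example numbers.

```python
import numpy as np


def apply_trembling_hand(pred_probs, alpha):
    """Mix predicted button probabilities with uniformly random button presses.

    Assumption: on a fraction alpha of trials every response button is
    chosen with equal probability; otherwise the model prediction applies.
    """
    pred_probs = np.asarray(pred_probs, dtype=float)
    n_buttons = pred_probs.shape[-1]
    return (1.0 - alpha) * pred_probs + alpha / n_buttons


# A sharply peaked model prediction over four response buttons.
model_prediction = [0.97, 0.03, 0.0, 0.0]
print(apply_trembling_hand(model_prediction, alpha=0.08))
```

Because every button then has a probability of at least α divided by the number of buttons, responses that the narrow unisensory-based predictions would otherwise treat as impossible keep a finite likelihood.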
Not surprisingly, when we treated the variance parameters as known and fitted only the common-source prior to the multisensory conditions, the model fit was worse than when fitting all parameters to the multisensory conditions. In particular, the model falsely predicted that the VE was nearly abolished for highly reliable visual signals at intermediate and large spatial discrepancies (Fig. S3B, C). In other words, the sensory variance was smaller when estimated from the unisensory relative to the multisensory conditions. These smaller sensory variances led to a sharpening of the audiovisual integration window. For highly reliable visual signals, the model then predicts that audiovisual integration breaks down at intermediate and large spatial discrepancies.

This mismatch between model predictions and participants’ responses may seem surprising at first. In classical forced-fusion settings, estimating the sensory variances from the unisensory conditions to predict relative auditory and visual weights for multisensory conditions has been the standard and a very successful approach (Alais & Burr, 2004; Ernst & Banks, 2002). Yet, the experimental paradigms for studying multisensory integration under forced-fusion assumptions are markedly different from our current ventriloquist paradigm. Specifically, experimental paradigms under forced-fusion assumptions introduce only a small, non-noticeable sensory conflict, so that participants integrate sensory signals throughout the experiment in a constant attentional setting (Alais & Burr, 2004; Ernst & Banks, 2002). By contrast, our ventriloquist paradigm manipulated spatial disparity randomly across trials, so that participants needed to arbitrate between information integration and segregation on each trial.
Even though participants were instructed to attend only to the auditory modality in an intersensory selective-attention paradigm, it is likely that intersensory conflict and non-conflict trials were associated with different attentional settings. Accumulating neurophysiological research has demonstrated that attention reduces the variance of stimulus representations by increasing the gain of single neurons (Treue & Martinez Trujillo, 1999), enhancing the selectivity of neuronal populations (Martinez-Trujillo & Treue, 2004) or decreasing the populations’ inter-neuronal firing correlations (Cohen & Maunsell, 2009). This wealth of research suggests that the sensory variance may depend on the particular attentional settings, so that sensory variances estimated from purely unisensory conditions may not be appropriate for the multisensory conditions.

The role of attention in multisensory integration is still debated. While some studies have suggested that the sensory weights are immune to attentional modulation under forced-fusion assumptions (Helbig & Ernst, 2008), accumulating evidence points towards a complex interplay between attention and multisensory integration (Alsius, Navarra, Campbell, & Soto-Faraco, 2005; Helbig & Ernst, 2008; Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010). Potentially, the role of attention in multisensory integration is more prominent in paradigms that do not generate forced-fusion settings, but require participants to arbitrate between integration and segregation on a trial-by-trial basis.

Supplementary experiment 2: Ventriloquist paradigm with joystick responses

Experimental design and analysis

Four participants (3 female, mean age 23.3 years, range 22-24 years) participated in a ventriloquist paradigm in which they localized sounds via a continuous joystick response (ST200, Saitek) without visual or auditory feedback.
In the audiovisual conditions, participants were presented with an auditory signal (HRTF-convolved white noise) and, in synchrony, a Gaussian cloud of 10 white dots (duration of 32 ms), each at one of five potential locations along the azimuth (-10°, -5°, 0°, 5° or 10°). The locations of the auditory and visual signals were independently sampled on each trial in a randomized fashion. The Gaussian cloud of dots was presented at four levels of visual reliability (5.4°, 10.8°, 16.2°, 21.6° STD of the Gaussian cloud). On each trial, participants first selectively localized the auditory spatial signal by freely pointing with the joystick towards the perceived auditory signal location. Subsequently, they judged whether the visual and auditory signals were generated by common or independent sources via a two-choice key press. The levels of visual reliability were presented either in blocks or varied according to a Markov chain as in the main experiment. Overall, each participant completed 720 trials. Otherwise, the experimental setup and stimulus timing were the same as in the main experiment.

To compare the data of this experiment to the main experiment, the auditory localization responses and the common-source judgments were analyzed exactly as the response data from the main experiment (supplementary Fig. S4). The main question of this supplementary experiment was whether a repulsion effect could be observed when participants made a continuous localization response using the joystick.

Results and Discussion

Indeed, when computing the ventriloquist effect separately for trials on which common and independent sources were perceived, we observed a negative ventriloquist effect, i.e., a repulsion effect, for the ‘independent source’ trials (Fig. S4). Thus, participants perceived the auditory signal as shifted away from the visual signal location. As predicted by the CI model (Fig. S2B), the repulsion effect was most pronounced for highly reliable visual signals.
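The sign convention behind the repulsion effect follows from the definition of the ventriloquist effect given in the figure captions, VE = (AResp − ALoc) / (VLoc − ALoc). The sketch below uses a hypothetical helper name and made-up response values purely to show how attraction and repulsion map onto positive and negative VE.

```python
def ventriloquist_effect(a_resp, a_loc, v_loc):
    """Relative visual bias: VE = (AResp - ALoc) / (VLoc - ALoc)."""
    if v_loc == a_loc:
        # Without audiovisual disparity the ratio is undefined.
        raise ValueError("VE is undefined when auditory and visual locations coincide")
    return (a_resp - a_loc) / (v_loc - a_loc)


# Sound at -5 deg, visual signal at +5 deg (illustrative values only).
print(ventriloquist_effect(a_resp=0.0, a_loc=-5.0, v_loc=5.0))   # 0.5: response pulled halfway towards the visual signal
print(ventriloquist_effect(a_resp=-7.0, a_loc=-5.0, v_loc=5.0))  # -0.2: response pushed away from the visual signal
```

A VE of 1 corresponds to a response at the visual location, 0 to a response at the true auditory location, and negative values to the repulsion described above.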
To our knowledge, this repulsion effect has previously been reported only in a single study in which observers similarly used a continuous response device, namely a laser pointer (Koerding et al., 2007; Wallace et al., 2004). By contrast, in our main experiment, where participants used key responses, we did not observe a repulsion effect for ‘independent source’ trials (Fig. S2A). The repulsion effect was absent even in the high visual reliability conditions, where it should have been most pronounced.

It is currently unclear why we observed a repulsion effect only in this small sample of four participants who used a joystick rather than discrete button responses. Discretizing the model predictions and assigning them to a fixed set of button responses still preserves the repulsion effect for the CI model (Fig. S2B). Hence, in principle a repulsion effect could also emerge for discretized response options. Potentially, discrete button responses induce specific response strategies in participants that are not yet accommodated by the Bayesian Causal Inference model (e.g., equalization of responses). They may even transform the continuous spatial estimation task into a spatial categorization task, potentially on a subset of trials. Yet, modelling participants’ button responses from the perspective of a categorization task may fail to accommodate other characteristic features of the spatial localization responses.

Interestingly, a recent modelling paper demonstrated that the repulsion effect in Bayesian Causal Inference is predicted only for Gaussian but not for heavy-tailed likelihood distributions (Natarajan et al., 2009). Hence, the repulsion effect is not a critical prediction of the Bayesian Causal Inference model, but strongly depends on additional Gaussian assumptions that may not be valid. Previous research has demonstrated that the repulsion effect can also emerge as a result of dual-task demands and participants’ drive for self-consistency.
For instance, Stocker and Simoncelli (2008) developed a model in which hypothesis and model selection during an initial motion categorization task induced a repulsion effect and bias in a subsequent motion estimation task (Jazayeri & Movshon, 2007). In Stocker and Simoncelli’s model, participants estimated the motion direction conditioned on the assumption that the motion direction comes, e.g., from the leftward motion category. As a result of this prior model selection, the model predicted a motion direction estimate that deviated away from the categorization boundary under dual-task constraints. By contrast, Jazayeri and Movshon (2007) explained the visual-motion repulsion by a read-out of sensory representations with a weighting profile that shifted the motion-direction estimate away from the categorization boundary. Collectively, these models illustrate that repulsion effects may emerge via different mechanisms such as a weighted read-out of sensory representations (Jazayeri & Movshon, 2007), self-consistency in perceptual decisions and prior model selection (Stocker and Simoncelli, 2008) or truncation of posterior distributions (Koerding et al., 2007). In conclusion, further psychophysics and modelling research is clearly needed to determine the experimental conditions and modelling assumptions that induce a repulsion effect in multisensory integration.

Supplementary tables

Table S1. Model parameters (across-subjects’ mean ± SEM) and fit indices of the computational models from the main experiment.

| Model | pcommon | σP | σA | σV1 | σV2 | σV3 | σV4 | R² | relBIC |
|---|---|---|---|---|---|---|---|---|---|
| MA & CITh-0.5 | 0.50±0.01 | 13.2±1.7 | 14.3±1.7 | 1.2±0.2 | 2.8±0.7 | 8.7±1.1 | 18.0±2.2 | 0.67±0.02 | 378.1 |
| MS & CITh-0.5 | 0.51±0.01 | 10.2±1.4 | 12.2±1.1 | 3.2±0.2 | 7.2±1.3 | 9.0±1.0 | 14.3±1.9 | 0.64±0.03 | 0 |
| PM & CITh-0.5 | 0.51±0.01 | 11.0±1.5 | 12.2±1.2 | 2.6±0.3 | 5.1±0.8 | 9.4±1.3 | 16.7±2.1 | 0.66±0.02 | 248.7 |
| MA & CISampling | 0.62±0.02 | 13.6±2.1 | 12.7±1.8 | 1.6±0.3 | 2.7±0.3 | 7.8±1.0 | 16.9±2.1 | 0.67±0.02 | 316.4 |
| MS & CISampling | 0.64±0.02 | 10.7±1.8 | 11.3±1.3 | 3.4±0.3 | 5.7±0.8 | 9.1±1.0 | 16.8±1.3 | 0.66±0.02 | 256.5 |
| PM & CISampling | 0.63±0.02 | 11.1±1.9 | 10.0±0.9 | 2.7±0.2 | 4.0±0.3 | 6.9±0.5 | 17.1±2.2 | 0.66±0.02 | 243.5 |

Note: Each model was fitted jointly to the auditory localization and common-source judgment responses individually for each participant. Models of the implicit causal inference strategy involved in auditory spatial localization: model averaging (MA), model selection (MS) or probability matching (PM). Models of the explicit causal inference strategy involved in the common-source judgment: a fixed threshold of 0.5 (CITh-0.5) or a sampling strategy (CISampling). The parameter values refer to the across-subjects’ mean ± SEM: pcommon = probability of the common-cause prior. σP = standard deviation of the spatial location prior (in °). σA = standard deviation of the auditory percept (in °). σV = standard deviation of the visual percept at different levels of visual reliability (1 = highest, 4 = lowest) (in °). R² = coefficient of determination (mean ± SEM). relBIC = Bayesian information criterion (BIC = LL - 0.5 M ln(N), LL = log likelihood, M = number of parameters, N = number of data points) of a model relative to the worst model (larger = better). relBICs are group BICs summed over subjects.

Table S2. Model parameters (across-subjects’ mean ± SEM) and fit indices of the Causal Inference models and the Coupling prior model from the main experiment.

| Model | pcommon or pc | σP | σA | σV1 | σV2 | σV3 | σV4 | R² | relBIC |
|---|---|---|---|---|---|---|---|---|---|
| MA | 0.69±0.04 | 12.57±1.80 | 11.96±1.64 | 1.80±0.25 | 3.15±0.34 | 9.01±1.02 | 19.16±1.76 | 0.662±0.023 | 561 |
| MS | 0.76±0.02 | 10.01±1.18 | 9.95±0.85 | 4.67±0.83 | 7.68±1.31 | 14.11±1.77 | 22.07±1.85 | 0.658±0.023 | 473.9 |
| PM | 0.78±0.03 | 10.34±1.40 | 10.09±1.02 | 2.98±0.24 | 5.49±0.88 | 11.62±1.44 | 22.27±1.99 | 0.660±0.023 | 522.1 |
| Coupling prior model | 0.86±0.04 | 11.90±1.58 | 11.62±1.76 | 5.12±0.64 | 8.24±1.15 | 12.53±1.32 | 20.29±1.45 | 0.626±0.025 | 0 |

Note: Each model was fitted only to the auditory localization responses individually for each participant. Models of the implicit causal inference strategy involved in auditory spatial localization: model averaging (MA), model selection (MS) or probability matching (PM) and the Coupling model using a Gaussian coupling prior. The parameter values refer to the across-subjects’ mean ± SEM: pcommon = probability of the common-source prior for causal inference models. pc = ratio of the covariance to the variance of the Coupling model’s prior distribution. σP = standard deviation of the spatial location prior (in °). σA = standard deviation of the auditory percept (in °). σV = standard deviation of the visual percept at different levels of visual reliability (1 = highest, 4 = lowest) (in °). R² = coefficient of determination (mean ± SEM). relBIC = Bayesian information criterion (BIC = LL - 0.5 M ln(N), LL = log likelihood, M = number of parameters, N = number of data points) of a model relative to the worst model (larger = better). relBICs are group BICs summed over subjects.

Table S3. Model parameters (across-subjects’ mean ± SEM) and fit indices of the computational models from supplementary experiment 1.

| Model | pcommon | σP | σA | σV1 | σV2 | R² | relBIC |
|---|---|---|---|---|---|---|---|
| MA & CITh-0.5 | 0.49±0.03 | 14.89±3.44 | 8.88±1.78 | 3.79±0.95 | 15.19±3.72 | 0.76±0.04 | 143.8 |
| MS & CITh-0.5 | 0.52±0.03 | 9.84±1.44 | 8.21±1.64 | 6.11±1.26 | 11.58±3.15 | 0.73±0.05 | 43.3 |
| PM & CITh-0.5 | 0.50±0.03 | 13.02±3.24 | 7.80±1.53 | 5.21±0.76 | 12.24±2.47 | 0.74±0.05 | 77.4 |
| MA & CISampling | 0.61±0.05 | 15.85±4.13 | 7.69±1.54 | 3.74±0.89 | 9.87±1.82 | 0.74±0.04 | 65.4 |
| MS & CISampling | 0.65±0.04 | 11.10±2.20 | 7.06±1.50 | 5.33±1.19 | 9.05±1.80 | 0.73±0.04 | 0 |
| PM & CISampling | 0.63±0.04 | 11.78±2.61 | 6.90±1.41 | 4.60±0.71 | 7.80±1.28 | 0.73±0.04 | 25 |

Note: Each model was fitted jointly to the auditory localization and common-source judgment responses individually for each participant. All parameters including the sensory variance parameters were estimated from the audiovisual conditions. Models of the implicit causal inference strategy involved in auditory spatial localization: model averaging (MA), model selection (MS) or probability matching (PM). Models of the explicit causal inference strategy involved in the common-source judgment: a fixed threshold of 0.5 (CITh-0.5) or a sampling strategy (CISampling). The parameter values refer to the across-subjects’ mean ± SEM: pcommon = probability of the common-cause prior. σP = standard deviation of the spatial location prior (in °). σA = standard deviation of the auditory percept (in °). σV = standard deviation of the visual percept at different levels of visual reliability (1 = highest, 2 = lowest) (in °). R² = coefficient of determination (mean ± SEM). relBIC = Bayesian information criterion (BIC = LL - 0.5 M ln(N), LL = log likelihood, M = number of parameters, N = number of data points) of a model relative to the worst model (larger = better). relBICs are group BICs summed over subjects.

Table S4. Model parameters (across-subjects’ mean ± SEM) and fit indices of the computational models from supplementary experiment 1.

| Model | pcommon | σP | σA | σV1 | σV2 | α | R² | relBIC |
|---|---|---|---|---|---|---|---|---|
| MA & CITh-0.5 | 0.58±0.08 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.54±0.09 | 37.6 |
| MS & CITh-0.5 | 0.58±0.09 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.53±0.08 | 0 |
| PM & CITh-0.5 | 0.58±0.09 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.55±0.08 | 53.9 |
| MA & CISampling | 0.67±0.07 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.59±0.07 | 166.2 |
| MS & CISampling | 0.66±0.08 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.58±0.06 | 118.3 |
| PM & CISampling | 0.65±0.08 | 20.60±3.51 | 3.87±0.45 | 2.88±0.68 | 5.87±1.26 | 0.08±0.01 | 0.60±0.06 | 175.3 |

Note: Each model was fitted jointly to the auditory localization and common-source judgment responses in audiovisual conditions individually for each participant. The sensory variance parameters σ, the spatial prior σP and the trembling hand parameter α were estimated from the unisensory conditions and kept fixed when the remaining parameter (i.e., the common-source prior) was estimated from the audiovisual conditions. Models of the implicit causal inference strategy involved in auditory spatial localization: model averaging (MA), model selection (MS) or probability matching (PM). Models of the explicit causal inference strategy involved in the common-source judgment: a fixed threshold of 0.5 (CITh-0.5) or a sampling strategy (CISampling). The parameter values refer to the across-subjects’ mean ± SEM: pcommon = probability of the common-cause prior. σP = standard deviation of the spatial location prior (in °). σA = standard deviation of the auditory percept (in °). σV = standard deviation of the visual percept at different levels of visual reliability (1 = highest, 2 = lowest) (in °). α = trembling hand parameter. R² = coefficient of determination (mean ± SEM). relBIC = Bayesian information criterion (BIC = LL - 0.5 M ln(N), LL = log likelihood, M = number of parameters, N = number of data points) of a model relative to the worst model (larger = better).
relBICs are group BICs summed over subjects.

Supplementary figures

Figure S1. Behavioral responses including the fifth level of visual reliability (21.6°), which was presented only to a sub-sample of 12 participants in the main experiment. The remaining visual reliability levels were presented to 26 participants. The figure panels show the behavioral data (across-subjects’ mean ± SEM, solid lines) as a function of visual reliability (VR; color coded) and audiovisual disparity (shown along the x-axis). (A) Percentage of common-source judgments. (B) Absolute spatial visual bias, AResp − ALoc. (C) Relative spatial visual bias (i.e., the ventriloquist effect VE = (AResp − ALoc) / (VLoc − ALoc)). In panels (B) and (C), the absolute and relative spatial visual biases are also shown for the case of pure visual or pure auditory influence for reference. (D) Localization variability (i.e., variance) of the behavioral responses.

Figure S2. The relative spatial visual bias (i.e., the ventriloquist effect VE = (AResp − ALoc) / (VLoc − ALoc); across-subjects’ mean ± SEM) as a function of audiovisual disparity and visual reliability (VR), shown separately for trials on which common or independent sources were inferred (data from the main experiment). (A) The ventriloquist effect computed from the participants’ auditory localization responses. (B) The ventriloquist effect computed from the predictions of the winning Causal Inference model. Please note that splitting up trials according to the common-source judgment induced an unbalanced distribution of trials across conditions, such that not each of the 26 participants had data for every combination of the factors spatial disparity, visual reliability and common-source judgment.

Figure S3. Behavioral responses and the model’s predictions (pooled over common-source decisions) for the data from supplementary experiment 1 (n = 8) with additional unisensory conditions.
The figure panels show the behavioral responses (across-subjects’ mean ± SEM, solid lines) and the predictions of the winning Causal Inference model (CI, dotted lines) as a function of visual reliability (VR; color coded) and audiovisual disparity (shown along the x-axis). The CI model’s parameters were fitted in two ways: 1) The sensory variance parameters (σA, σV1, σV2) and spatial prior were estimated together with the common-source prior from participants’ responses in the audiovisual conditions (i.e., estimation procedure identical to the main paper, dotted). 2) The sensory variances and the spatial prior were estimated from the unisensory, i.e. auditory and visual, conditions and held fixed when the remaining parameter (i.e., the common-source prior) was estimated from the audiovisual conditions (solid). (A) Percentage of common-source judgments. (B) Absolute spatial visual bias, AResp − ALoc. In panel (B), the absolute and relative spatial visual biases are also shown for the case of pure visual or pure auditory influence for reference. (C) Relative spatial visual bias (i.e., the ventriloquist effect VE = (AResp − ALoc) / (VLoc − ALoc)). (D) Localization variability (i.e., variance) of the behavioral and the models’ predicted responses.

Figure S4. The ventriloquist effect (VE) for the data from supplementary experiment 2 (n = 4) with joystick responses. The ventriloquist effect (i.e., VE = (AResp − ALoc) / (VLoc − ALoc)) is plotted as a function of audiovisual disparity, visual reliability (VR) and common- versus independent-source judgments.

Supplementary references

Alais, D., & Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr Biol, 14(3), 257-262.

Alsius, A., Navarra, J., Campbell, R., & Soto-Faraco, S. (2005). Audiovisual integration of speech falters under high attention demands. Curr Biol, 15(9), 839-843.

Bishop, C. M. (2006). Pattern recognition and machine learning (Vol. 4). New York: Springer.

Bresciani, J. P., Dammeier, F., & Ernst, M. O. (2006). Vision and touch are automatically integrated for the perception of sequences of events. J Vis, 6(5), 554-564.

Cohen, M. R., & Maunsell, J. H. (2009). Attention improves performance primarily by reducing interneuronal correlations. Nat Neurosci, 12(12), 1594-1600.

Ernst, M. O. (2006). A Bayesian view on multimodal cue integration. Human body perception from the inside out, 105-131.

Ernst, M. O., & Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature, 415(6870), 429-433.

Helbig, H. B., & Ernst, M. O. (2008). Visual-haptic cue weighting is independent of modality-specific attention. J Vis, 8(1), 21, 1-16.

Jazayeri, M., & Movshon, J. A. (2007). A new perceptual illusion reveals mechanisms of sensory decoding. Nature, 446(7138), 912-915.

Koerding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., & Shams, L. (2007). Causal inference in multisensory perception. PLoS One, 2(9), e943.

Martinez-Trujillo, J. C., & Treue, S. (2004). Feature-based attention increases the selectivity of population responses in primate visual cortex. Curr Biol, 14(9), 744-751.

Shams, L., & Beierholm, U. R. (2010). Causal inference in perception. Trends Cogn Sci, 14(9), 425-432.

Talsma, D., Senkowski, D., Soto-Faraco, S., & Woldorff, M. G. (2010). The multifaceted interplay between attention and multisensory integration. Trends Cogn Sci, 14(9), 400-410.

Treue, S., & Martinez Trujillo, J. C. (1999). Feature-based attention influences motion processing gain in macaque visual cortex. Nature, 399(6736), 575-579.

Wallace, M. T., Roberson, G. E., Hairston, W. D., Stein, B. E., Vaughan, J. W., & Schirillo, J. A. (2004). Unifying multisensory signals across time and space. Exp Brain Res, 158(2), 252-258.