Midway Report4

advertisement

World Wide Knowledge Base Webpage Classification

Midway Report

Bo Feng (108809282)

Rong Zou (108661275)

1. Goal

To learn classifiers to predict the type of a webpage from the text.

2. Relevant work

Automatic text classification has always been an important application and research topic

since the inception of digital documents.

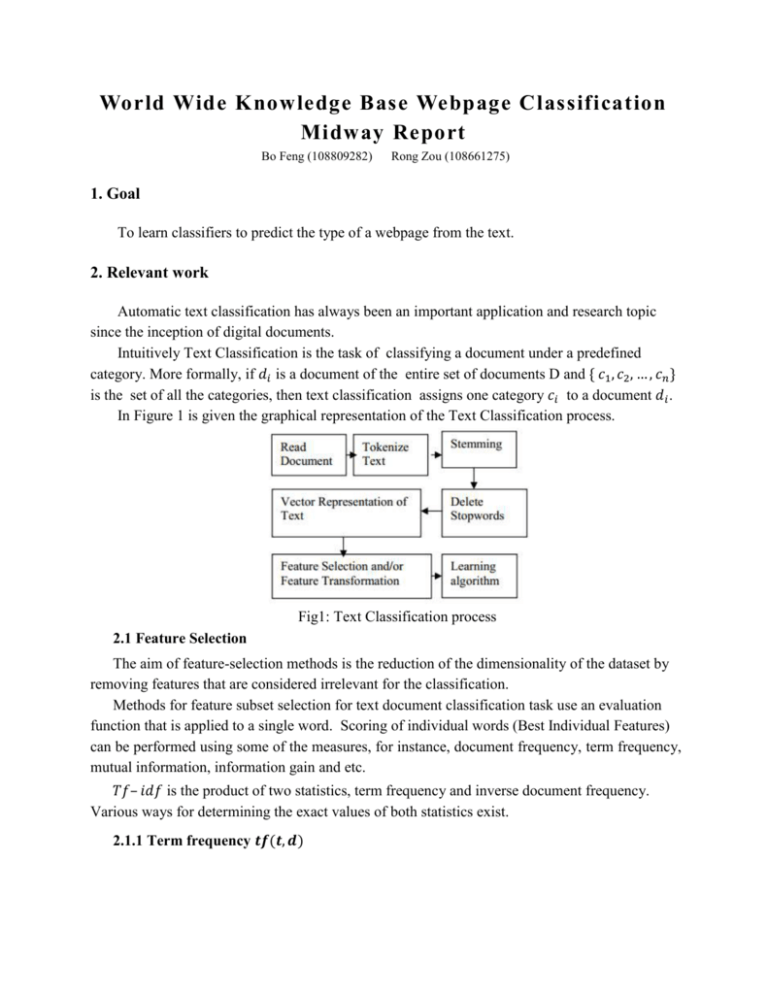

Intuitively Text Classification is the task of classifying a document under a predefined

category. More formally, if 𝑑𝑖 is a document of the entire set of documents D and { 𝑐1 , 𝑐2 , … , 𝑐𝑛 }

is the set of all the categories, then text classification assigns one category 𝑐𝑖 to a document 𝑑𝑖 .

In Figure 1 is given the graphical representation of the Text Classification process.

Fig1: Text Classification process

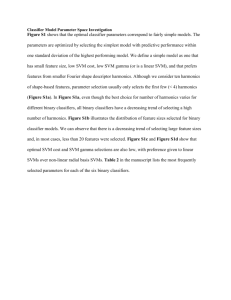

2.1 Feature Selection

The aim of feature-selection methods is the reduction of the dimensionality of the dataset by

removing features that are considered irrelevant for the classification.

Methods for feature subset selection for text document classification task use an evaluation

function that is applied to a single word. Scoring of individual words (Best Individual Features)

can be performed using some of the measures, for instance, document frequency, term frequency,

mutual information, information gain and etc.

𝑇𝑓– 𝑖𝑑𝑓 is the product of two statistics, term frequency and inverse document frequency.

Various ways for determining the exact values of both statistics exist.

2.1.1 Term frequency 𝒕𝒇(𝒕, 𝒅)

In the case of the term frequency tf(t, d), we choose normalized frequency to prevent a bias

towards longer documents, e.g. raw frequency divided by the maximum raw frequency of any

term in the document:

𝑓(𝑡, 𝑑)

𝑡𝑓(𝑡, 𝑑) =

max{𝑓(𝑤, 𝑑): 𝑤 ∈ 𝑑}

2.1.2 Inverse document frequency 𝒊𝒅𝒇(𝒕, 𝑫)

The inverse document frequency is a measure of whether the term is common or rare across

all documents. It is obtained by dividing the total number of documents by the number of

documents containing the term, and then taking the logarithm of that quotient.

log|𝐷|

𝑖𝑑𝑓(𝑡, 𝐷) =

|{𝑑 ∈ 𝐷: 𝑡 ∈ 𝑑}|

|𝐷|: the total number of documents in the corpus

|{𝑑 ∈ 𝐷: 𝑡 ∈ 𝑑}|: number of documents where the term 𝑡 appears (i.e., 𝑡𝑓(𝑡, 𝑑) ≠ 0).

If the term is not in the corpus, this will lead to a division-by-zero. It is therefore

common to adjust the formula to 1 + |{𝑑 ∈ 𝐷: 𝑡 ∈ 𝑑}| .

Mathematically the base of the log function does not matter and constitutes a constant

multiplicative factor towards the overall result.

2.1.3 Term frequency–inverse document frequency 𝒕𝒇 − 𝒊𝒅𝒇(𝒕, 𝒅, 𝑫) [4]

Then 𝑡𝑓– 𝑖𝑑𝑓 is calculated as

𝑡𝑓𝑖𝑑𝑓(𝑡, 𝑑, 𝐷) = 𝑡𝑓(𝑡, 𝑑) × 𝑖𝑑𝑓(𝑡, 𝐷)

A high weight in 𝑡𝑓– 𝑖𝑑𝑓 is reached by a high term frequency (in the given document) and a

low document frequency of the term in the whole collection of documents; the weights hence

tend to filter out common terms. Since the ratio inside the idf's log function is always greater

than or equal to 1, the value of idf (and tf-idf) is greater than or equal to 0. As a term appears in

more documents, the ratio inside the logarithm approaches 1, bringing the idf and tf-idf closer to

0.

2.2 Machine Learning Algorithms

After feature selection and transformation the documents can be easily represented in a

form that can be used by a ML algorithm. Many text classifiers have been proposed in the

literature using machine learning techniques, probabilistic models, etc. They often differ in the

approach adopted: Decision Trees, Naïve-Bayes, Rule Induction, Neural Networks, Nearest

Neighbors, and lately, Support Vector Machines.

Naive Bayes is often used in text classification applications and experiments because of its

simplicity and effectiveness [6]. However, its performance is often degraded because it does not

model text well.

Support vector machines (SVM), when applied to text classification provide excellent

precision, but poor recall. One means of customizing SVMs to improve recall, is to adjust the

threshold associated with an SVM. Shanahan and Roma described an automatic process for

adjusting the thresholds of generic SVM [7] with better results.

Johnson et al. described a fast decision tree construction algorithm that takes advantage of

the sparsity of text data, and a rule simplification method that converts the decision tree into a

logically equivalent rule set [8].

3. Problem Analysis

We are developing a system that can classify webpages. For this part, our goal is to develop

a probabilistic, symbolic knowledge base that mirrors the content of the World Wide Web[1]

using three approaches. If successful, we will try to develop an application for fitting pages in

mobile devices. This will make text information on the web available in computerunderstandable form, enabling much more sophisticated information retrieval and problem

solving.

First, we will learn classifiers to predict the type of webpages from the text by using Naïve

Bayes and SVM (unigram and bigram). Also, we will try to improve accuracy by exploiting

correlations between pages that point to each other[2].

In addition, in the part of segmenting web pages into meaningful parts, including bio,

publication, etc, we'd like to employ the decision tree method[3]. Since intuitively there should be

some rules to distinguish those parts, e.g. the biography part of professor may use <img> and <p>

html tags, while the "publications" section may use <ul> <li> tags, etc. In the end, after we

learned those classifiers, we’d like using these to build an application: given a web page url, it

will rearrange the content and generate a proper web page for mobile user, which we think will

be quite useful.

4. Approach

4.1 Feature Selection

We extract text features using 𝑇𝐹 and 𝑇𝐹 − 𝐼𝐷𝐹 (term frequency–inverse document

frequency), which reflects how important a word is to a document in a collection or corpus.

4.2 Algorithm

We are developing a system that can be trained to extract symbolic knowledge from

hypertext, using a variety of machine learning methods:

1) Naïve Bayes

2) SVM

3) Bayes Network

4) HMM

In additon, we try to improve accuracy by (1) exploiting correlations between pages that

point to each other, and (2) segmenting the pages into meaningful parts (bio, publications, etc.)

6. Dataset

6.1 Dataset

A dataset from 4 universities containing 8,282 web pages and hyperlink data, labeled with

whether they are professor, student, project, or other pages.

For each class the data set contains pages from the four universities:

Cornell (867)

Texas (827)

Washington (1205)

Wisconsin (1263)

and 4,120 miscellaneous pages collected from other universities.

6.2 Test & Train Data Splits

Since each university's web pages have their own idiosyncrasies, we train on three of the

universities plus the miscellaneous collection, and testing on the pages from a fourth, held-out

university, which is 4-fold cross validation.

6.3 Vector Space Webpage Representations

A webpage is a sequence of words. So each webpage is usually represented by an array of

words. The set of all the words of a training set is called vocabulary, or feature set. So a webpage

can be presented by a binary vector, assigning the value 1 if the webpage contains the featureword or 0 if the word does not appear in the webpage. This can be translated as positioning a

webpage in a 𝑅 |𝑉| space, where |𝑉| denotes the size of the vocabulary.

7. Results

Expected Milestone Result: Learn classifier using at least two of these methods in a subset

dataset:1) develop a system that can be trained to extract symbolic knowledge from

hypertext using Naïve Bayes and SVM; 2) exploit correlations between pages that point to

each other; 3) Segment the pages into meaningful parts using decision tree.

Actual Milestone Result:

We learned 2 classifiers:

1) Naïve Bayes classifier

We use 𝑃(𝑤𝑜𝑟𝑑|𝑐𝑙𝑎𝑠𝑠) to train classifier, which achieves accuracy at 16%.

2) SVM classifier using TF-IDF(unigram)

We achieved 70% predict precision by using SVM classifier, where we select

features using unigram based on TF-IDF.

After that, we focused on the correlation between pages that point to each other.

8. Reference

[1] Learning to Construct Knowledge Bases from the World Wide Web. M. Craven, D. DiPasquo, D. Freitag,

A. McCallum, T. Mitchell, K. Nigam and S. Slattery. Artificial Intelligence.

[2] Learning to Extract Symbolic Knowledge from the World Wide Web. M. Craven, D. DiPasquo, D. Freitag,

A. McCallum, T. Mitchell, K. Nigam and S. Slattery. Proceedings of the 15th National Conference on

Artificial Intelligence (AAAI-98).

[3] Data Mining on Symbolic Knowledge Extracted from the Web. Rayid Ghani, Rosie Jones, Dunja

Mladenic, Kamal Nigam and Sean Slattery. KDD-2000 Workshop on Text Mining. 2000

[4] tf–idf, http://en.wikipedia.org/wiki/Tfidf

[5] Zu G., Ohyama W., Wakabayashi T., Kimura F., "Accuracy improvement of automatic text classification

based on feature transformation": Proc: the 2003 ACM Symposium on Document Engineering, November 20 22, 2003, pp.118-120

[6] Kim S. B., Rim H. C., Yook D. S. and Lim H. S., “Effective Methods for Improving Naive Bayes Text

Classifiers”, LNAI 2417, 2002, pp. 414-423

[7] Shanahan J. and Roma N., Improving SVM Text Classification Performance through Threshold

Adjustment, LNAI 2837, 2003, 361-372

[8] D. E. Johnson, F. J. Oles, T. Zhang, T. Goetz, “A decision-tree-based symbolic rule induction system for

text categorization”, IBM Systems Journal, September 2002.