Comparison of categorization criteria across image genres


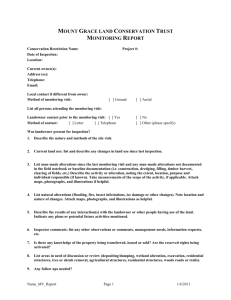

Mari Laine-Hernandez
Department of Media Technology
Aalto University School of Science and Technology
P.O.Box 15500, FI-00076 Aalto
mari.laine-hernandez@tkk.fi

Stina Westman
Department of Media Technology
Aalto University School of Science and Technology
P.O.Box 15500, FI-00076 Aalto
stina.westman@tkk.fi

ABSTRACT
This paper describes a comparison of categorization criteria for three image genres. Two experiments were conducted in which naïve participants freely sorted stock photographs and abstract/surreal graphics. The results were compared to those of a previous study on magazine image categorization. The study also aimed to validate and generalize an existing framework for image categorization. Stock photographs were categorized mostly based on the presence of people and on whether they depicted objects or scenes. For abstract images, visual attributes were used the most. The lightness/darkness of the images and their user-evaluated abstractness/representativeness also emerged as important criteria for categorization. We found that the image categorization criteria for magazine and stock photographs are fairly similar, while the bases for categorizing abstract images differ more from the former two, most notably in the use of visual sorting criteria. However, according to the results of this study, people tend to use descriptors related to both image content and image production technique and style, and to interpret the affective impression of the images, in a way that remains constant across image genres. These facets are present in the evaluated categorization framework, which was deemed valid for these genres.

Keywords
Image categorization, free sorting, image categories, image genres

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.
ASIST 2010, October 22–27, 2010, Pittsburgh, PA, USA.
Copyright © 2010 Aalto University School of Science and Technology

INTRODUCTION
The ability to represent images in meaningful and useful groups is essential for the ever-growing number of applications and services involving large collections of images, for both professional and personal use. Being central issues for visual information retrieval, subjective image categorization and description have been studied extensively. The subjects of the images in these studies vary from narrow topics, such as images of people (Rorissa & Hastings, 2004; Rorissa & Iyer, 2008) or grayscale images of trees/forest (Greisdorf & O’Connor, 2001), to "generic" photographs with extremely varied content including, e.g., people, objects and scenery (from a Kodak Photo-CD, Teeselink et al., 2000; from PhotoDisc, Mojsilovic & Rogowitz, 2001). The images can also be selected based on a specific image genre, such as vacation images (Vailaya et al., 1998), journalistic images (Laine-Hernandez & Westman, 2006; Sormunen et al., 1999) or magazine photographs (Laine-Hernandez & Westman, 2008; Westman & Laine-Hernandez, 2008), and as such may cover a wide range of semantic content.

The contents of the images have an influence on the categorization results. For instance, using photographs of people with the backgrounds removed, the participants of Rorissa and Hastings (2004) formed the following main categories: exercising, single men/women, working/busy, couples, poses, entertainment/fun, costume and facial expression. Vailaya et al. (1998), on the other hand, used outdoor vacation images, which subjects sorted into the following categories: forests and farmlands, natural scenery and mountains, beach and water scenes, pathways, sunset/sunrise scenes, city scenes, bridges and city scenes with water, monuments, scenes of Washington DC, a mixed class of city and natural scenes, and face images. A comparison of the category labels in these two studies reveals the effect of the material being categorized.

The above-mentioned prior research has revealed that people evaluate image similarity mostly on a high, conceptual (as opposed to low, syntactic) level. They do so based on the presence of people in the photographs, distinguishing, e.g., between people, animals and inanimate objects. They also consider whether the scenes and objects in the images are man-made or natural, e.g. buildings vs. landscapes (Laine-Hernandez & Westman, 2008; Mojsilovic & Rogowitz, 2001; Teeselink et al., 2000). Laine-Hernandez and Westman (2006) found that people also create image categories based on abstract concepts related to emotions or atmosphere, cultural references and visual elements. Professional image users (journalists) have been shown to evaluate image similarity based on criteria ranging from syntactic/visual to highly abstract: shooting distance and angle, colors, composition, cropping, direction (horizontal/vertical), background, direction of movement, objects in the image, number of people in the image, action, facial expressions and gestures, and abstract theme (Sormunen et al., 1999).

METHODOLOGY
Image categorization for different image genres
We set out to compare three different genres of images: magazine photographs, stock photographs, and abstract images.
In the case of magazine photographs, we looked at the results obtained by Laine-Hernandez and Westman (2008). For stock photographs and abstract images, we conducted two new experiments with an identical procedure. As in many previous image categorization studies (e.g. Laine-Hernandez & Westman, 2006; Rorissa & Hastings, 2004; Vailaya et al., 1998), we used free sorting to obtain the image categories. The first two image genres (magazine and stock photographs) are more similar to each other than to the third genre (computer-generated abstract graphics), due to the production technique but also in style and semantic content. By selecting these materials we hope to address the potentially different degrees of applicability of the results on image categorization. Some aspects of magazine image categorization behavior may apply to stock photographs but not to abstract images, while other aspects might be shared by all three image genres under study.

Most previous studies have involved a descriptive analysis of the category labels used but have not generated a hierarchical image categorization framework. Laine-Hernandez and Westman (2008), however, employed a qualitative, data-based analysis of the category names given by their participants and produced the framework for magazine image categorization shown in Table 1. The goals of the current study are to:
1. discover which image attributes (similarity criteria) are used when categorizing stock photographs and abstract images, and
2. validate the class framework of Laine-Hernandez & Westman (2008) by studying whether it can be generalized across image genres.

Table 1. Framework for magazine image categorization (Laine-Hernandez & Westman 2008)
Main class | Subclasses
--- | ---
Function | Product photos, Reportage, Portraits, News photos, Illustration, Symbol photos, Advertisement, Profile piece, Vacation photos, Misc. function
People | Person, Social status, Gender, Posing, Groups, Relationships, Age, Expression, Eye contact
Object | Non-living, Buildings, Vehicles, Animals
Scene | Landscape, Interiors, Nature, Cityscape, Misc. scene
Theme | Food&drink, Work, Sports, Cinema, Travel, Fashion, Art, Transportation, Architecture, Home&family, Culture, Technology, Politics, Economy, Religion, Hobbies&leisure, Misc. theme
Story | Event, Time, Activity
Affective | Emotion, Mood
Description | Property, Number
Visual | Color, Composition, Motion, Shape
Photography | Distance, Black&white, Style, Image size, Cropping

The study of Laine-Hernandez and Westman (2008) involved two participant groups: experts (staff members at magazines, newspapers, picture agencies and museum photograph archives) and non-experts (engineering students or university employees). Differences were found in the two groups' categorization behavior. For the two new experiments we recruited non-expert participants, and we therefore only consider the results that Laine-Hernandez and Westman obtained with non-experts (N=18).

Experiment I – Stock photographs
Test images
One hundred stock photographs were chosen at random from a collection of 4000+ color stock photographs with varied content, originally collected from the stock photography service Photos.com. Examples of the test images are shown in Figure 1. The photographs were printed so that the longer side of each photo measured roughly 15 cm. Each photograph was glued on grey cardboard for easier handling.

Figure 1. Examples of test images in Experiment I

Participants
The participants (N=20, 10 female) were engineering students and university staff. Their ages ranged from 19 to 55 (mean 27). They were rewarded for their effort with movie tickets.

Procedure
The participant was handed the test images in one randomly ordered pile. The written task instruction was:

"Sort the images into piles according to their similarity so that images similar to each other are in the same pile. You decide the basis on which you evaluate similarity. There are no 'correct' answers, but it is about what you experience as similarity. You decide the number of piles and how many images there are in each pile. An individual image can also form a pile. Take your time in completing the task. There is no time limit for the experiment."

The participant was also told that there would be a discussion about the piles after the completion of the task. After completing the sorting task, the participant was asked to explain the basis on which they had formed each pile, i.e. to describe and name the piles.

Experiment II – Abstract/surreal images
Test images
Test image candidates were collected from various websites (caedes.net, deviantart.com, digitalart.org, flickr.com creative commons, sxc.hu). All the collected images were keyworded as "abstract" or "abstract/surreal" on these sites. The images were vector art, 3D rendered art, fractal art, computer desktop backgrounds, etc. Where the website provided user ratings, we chose highly rated images. In order to be able to produce decent-quality prints, we required that the shorter side of the image be at least 500 pixels and the longer side at least 800 pixels. We looked for color images with visually complex, abstract content, but images with semi-representative content (e.g. 3D modeled images) were also included in order to make the test image collection varied within the genre. We collected approximately 250 test image candidates and then selected the final one hundred test images from those at random. Examples of test images are shown in Figure 2.

The images were printed at a resolution of 180 dpi, with the length of the longer side of the images varying between 11.2 and 12.7 cm. The images were glued on grey cardboard for easier handling. Image orientation was marked with a small circle drawn under each image to denote the bottom, and all images were handed to the participant in the correct orientation.

Figure 2. Examples of test images in Experiment II. Left: "Foucault Pendulum" by Burto (Caedes.net), center: "strained glass" by Buttersweet (Flickr.com), right: "The Dilemma of Solipsism" by WENPEDER (Caedes.net).

Participants
The participants (N=20, 9 female) were recruited through engineering students' newsgroups. Their ages ranged from 20 to 38 (mean 24). They were rewarded for their effort with movie tickets.

Procedure
The first part of the procedure was identical to the procedure described for Experiment I. Category names were not required for single-image categories due to the expected difficulty in describing these categories. After sorting the images and describing the resulting categories, 9 of the participants (4 female) carried out an additional abstractness/representativeness evaluation task. The evaluation procedure was taken from the context of image quality evaluation (Leisti et al., 2009). The test images were placed in one pile in random order. A paper rating scale was placed on a table. It showed the numbers 1 to 7 at equal intervals, with the words "abstract" and "representative" written at the two ends. The participant was first asked to select the most abstract test image and place it on number 1 ("abstract"). The participant was then asked to select the most representative test image and place it on number 7 ("representative"). After that, the participant was asked to place the remaining images on numbers 1 to 7 so that representativeness grows linearly from 1 to 7. A mean opinion score was calculated from the participants' evaluations for each test image.

Data analysis
Qualitative analysis of category names
The category names or descriptions given by the participants in Experiments I and II were analyzed qualitatively by the authors and assigned to the main classes and subclasses shown in Table 1. The reader should note that in this paper the terms "category" and "class" carry distinct meanings. Category refers to an image group created by a participant in an experiment. Class refers to an instance of a category name coded according to the categorization framework developed by Laine-Hernandez & Westman (2008). If the category name included references to multiple classes (i.e. a multiclass category), two or more class instances were created from the name. For example, if the category was described as "Ocean-themed, blue images", the instances "ocean-theme" (Theme – Misc. theme) and "blue" (Visual – Color) were separated. The same procedure was employed by Laine-Hernandez and Westman (2008), and the results obtained here will be compared to theirs. Data from the second phase of the sorting procedure in Laine-Hernandez and Westman (2008) is disregarded here. The results regarding the use of classes were determined by the first part of that experiment, which was identical to the procedure in Experiments I and II.

Multidimensional scaling (MDS)
The sorting data from Experiments I and II was also visualized in Matlab (The MathWorks) using non-metric multidimensional scaling. MDS is a multivariate data analysis method that seeks to express the structure of similarity data (given in the form of a distance matrix) spatially in a given (low) number of dimensions. In order to obtain the MDS solution, the data from each experiment was first converted to an aggregate dissimilarity (distance) matrix as follows. The percent overlap for each pair of images i and j was calculated as the ratio of the number of participants who placed both i and j in the same category to the total number of participants. The percent overlap $S_{ij}$ gives a measure of similarity, which was then converted to a measure of dissimilarity: $\delta_{ij} = 1 - S_{ij}$. MDS was performed on these dissimilarity matrices.

The Standardized Residual Sum of Squares (STRESS) value is used to evaluate the goodness of fit of an MDS solution. A squared stress, normalized with the sum of 4th powers of the inter-point distances, was used in the analysis. A stress value below 0.025 has been considered excellent, 0.05 good, 0.10 fair and above 0.20 poor (Kruskal, 1964). These are only rough guidelines and are not applicable in all situations, as the stress value depends on the number of stimuli and dimensions. When the number of points (here images) is much larger than the number of dimensions of the space they are represented in, as in our case (100 images in a two-dimensional space), higher stress values may be acceptable (Borg & Groenen, 2005). In practice, stress values below 0.2 are taken to indicate an acceptable fit of the solution to the similarity data (Cox & Cox, 2001).

Correspondence analysis (CA)
Correspondence analysis is an exploratory multivariate statistical technique used to analyze contingency tables, i.e. two-way and multi-way tables containing some measure of correspondence between the rows and columns. We used correspondence analysis to analyze the usage of subclasses in Experiments I and II. This was done after separating the multiclass categories into two or more categories. The image sorting data itself was not used in the analysis, only the connections between images and subclasses. Only those subclasses that were connected to at least two images were included. The contingency table was formed by assigning subclasses (C) to columns and images (I) to rows. The value in cell $(C_i, I_j)$ was determined by the number of times subclass $C_i$ was used as a category description for image $I_j$.

Statistical analysis
For statistical comparisons, the distributions to be compared were tested for normality using the Shapiro-Wilk test. If they were normally distributed, parametric tests for two or more independent samples (the t-test or one-way analysis of variance (ANOVA), respectively) were applied. If they were not normally distributed, we used nonparametric tests for two or more independent samples (the Mann-Whitney U test or the Kruskal-Wallis test, respectively). CA was carried out with R (R Development Core Team, 2010), an environment for statistical computing, using the package FactoMineR (Husson et al., 2008). The results were further visualized using Matlab.

RESULTS
Number of categories
The numbers of categories formed for the different image genres are listed in Table 2. The number of test images was the same (100 images) in all three experiments. For magazine photographs, participants created 298 categories in total, 2 of which were excluded from further analysis because of ambiguous meaning. For stock photographs they created 352 categories. For abstract images they created 419 categories, of which 2 were excluded from analysis because of ambiguous meaning and 68 because they were single-image categories that the participants did not need to name. According to a one-way ANOVA, there was no statistically significant difference between the three experiments in the number of categories formed.

Table 2. Number of categories for different image types (Min, Max, Mean and Sd are per participant)
Images | Total | Min | Max | Mean | Sd
--- | --- | --- | --- | --- | ---
Magazine | 298 | 4 | 35 | 17 | 7
Stock | 352 | 7 | 32 | 18 | 7
Abstract | 419 | 4 | 39 | 21 | 10

It was also possible to form categories of single images. For magazine photographs 16% of the categories consisted of one image, for stock photographs 19% and for abstract images 21%. According to a Kruskal-Wallis test, there was no significant difference between the three experiments in the number of single-image categories formed.

Out of the magazine photograph categories, 33.8% included two or more bases for sorting, resulting in a total of 409 class instances. Magazine photograph category names contained 1.38 classes on average. Of the stock photograph categories, 23.6% were multiclass categories, resulting in 442 class instances. Stock photograph category names contained an average of 1.26 classes. Of the analyzed abstract image categories, 38.1% were multiclass categories, resulting in a total of 507 class instances. Abstract image category names contained 1.45 classes on average.
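The per-category averages above can be re-derived from the reported counts. The Python sketch below assumes two things. First, the averages are taken over the analyzed categories only, i.e. the categories formed minus those excluded (2 ambiguous magazine categories; 2 ambiguous and 68 unnamed single-image abstract categories). Second, the multiclass percentages refer to the same base. Under these assumptions the reported values of 1.38, 1.26 and 1.45 are reproduced. The `Genre` class and its field names are purely illustrative.

```python
# Sketch: recompute the average number of classes per category name from the
# counts reported in the text. Assumption: averages and multiclass shares are
# taken over analyzed categories (formed minus excluded), not all categories.
from dataclasses import dataclass


@dataclass
class Genre:
    name: str
    categories_formed: int
    excluded: int            # ambiguous names and unnamed single-image piles
    class_instances: int     # coded class instances after splitting names
    multiclass_share: float  # fraction of analyzed categories with 2+ classes

    @property
    def analyzed(self) -> int:
        return self.categories_formed - self.excluded

    @property
    def classes_per_category(self) -> float:
        return self.class_instances / self.analyzed

    @property
    def multiclass_count(self) -> int:
        # Approximate count implied by the rounded percentage.
        return round(self.multiclass_share * self.analyzed)


GENRES = [
    Genre("Magazine", 298, 2, 409, 0.338),
    Genre("Stock", 352, 0, 442, 0.236),
    Genre("Abstract", 419, 2 + 68, 507, 0.381),
]

if __name__ == "__main__":
    for g in GENRES:
        print(f"{g.name}: {g.analyzed} analyzed categories, "
              f"{g.classes_per_category:.2f} classes per category, "
              f"~{g.multiclass_count} multiclass categories")
```

The script prints one line per genre. Because the rounded averages match the text only when the excluded categories are subtracted, this supports (but does not prove) the assumed base.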
Time spent on sorting
With stock photographs, the participants spent on average 16 minutes (min 7, max 32, sd 7) sorting the images (excluding the time it took to name the categories). With magazine photographs they spent 24 minutes (min 9, max 45, sd 11), and with abstract images 33 minutes (min 13, max 76, sd 18). According to Mann-Whitney U tests, the difference between stock photographs and the other two image genres was significant (p<0.02), but the difference between magazine photographs and abstract images was not (p=0.11).

Category types
The percentage distributions of main class usage for magazine photographs, stock photographs and abstract images are listed in Table 3 and visualized in Figure 3. The exact usage percentages of the main classes and subclasses for each image genre are also listed in the table in Appendix 1. In order to make the comparison between photographs and non-photographs (i.e. abstract images) possible, the main class Photography was extended to include aspects related to production technique (e.g. drawing, painting, comic-like).

Table 3. Percentage distribution of main classes for different image genres. Significant differences between all three image genres are marked with ***. Significant differences between abstract images vs. magazine and stock photographs are marked with **. A significant difference between magazine and stock photographs is marked with *.
Main class | Magazine (Laine-Hernandez & Westman 2008) | Stock (Experiment I) | Abstract (Experiment II)
--- | --- | --- | ---
Function** | 3.2 | 3.4 | 9.9
People*** | 25.2 | 15.8 | 1.6
Object* | 12.2 | 23.1 | 20.1
Scene*** | 10.3 | 18.6 | 3.2
Theme** | 22.2 | 16.5 | 5.9
Story** | 7.6 | 6.8 | 2.6
Affective | 4.2 | 1.4 | 3.6
Description | 7.6 | 6.6 | 8.7
Visual** | 2.0 | 2.0 | 38.1
Photography | 5.6 | 5.9 | 6.5

Figure 3. Main class usage for different image genres (percentage of class instances per main class for magazine, stock and abstract images)

For magazine photographs, the main classes People (25.2%), Theme (22.2%), Object (12.2%) and Scene (10.3%) were used the most. The least-used classes were Visual (2.0%) and Function (3.2%). Stock photographs were also categorized mostly based on their main semantic content. The most-used main classes were Object (23.1%), Scene (18.6%), Theme (16.5%) and People (15.8%). The four most-used main classes were thus the same (although not in the same order) for magazine and stock photographs. The least-used classes for stock photographs were Affective (1.4%) and Visual (2.0%).

In contrast to magazine and stock photographs, abstract images were categorized mostly based on visual features. The most-used main classes were Visual (38.1%), Object (20.1%), Function (9.9%) and Description (8.7%). The least-used classes were People (1.6%) and Story (2.6%).

According to a Kruskal-Wallis test, the usage of the following three main classes did not differ significantly between the three experiments: Affective, Description and Photography. For the remaining main classes we used the Mann-Whitney U test for two independent samples to see where the pairwise differences occurred. For the classes Function, Story, Theme and Visual there was no significant difference between magazine and stock photographs, but the abstract images differed from the other two (Function p=0.050, Story p<0.007, Theme p<0.001, Visual p<0.001). For the class Object, the only significant difference occurred between magazine and stock photographs (p=0.018). For the class Function, abstract images differed from both stock photographs (p=0.050) and magazine photographs (p=0.044). For the classes People and Scene, the differences were significant between all three image genres (p<0.001). The usage of the main classes was thus most similar between magazine and stock photographs; for seven out of ten main classes there was no significant difference.

We also analyzed for how many images (out of 100) each main class was used in each image genre. The results are depicted in Figure 4. The classes used to describe the most images across all genres are Description (80% of all images), Theme (74%), Function (71%) and Photography (71%).

Figure 4. Number of images (out of 100) for which each main class was used (magazine, stock and abstract images)

Category descriptions
For magazine photographs, according to Laine-Hernandez and Westman (2008), "non-expert participants often formed categories on various semantic levels instead of a controlled categorization on a thematic level." Category descriptions often contained named objects, scenes and aspects related to the people in the images. Categories were also formed based on affective aspects, i.e. the emotional impact or the mood interpreted in the photograph.

Stock photograph categories mostly referred to the main semantic content of the images. They often used just one word (e.g. animals, buildings, portraits, food) or a combination of two simple criteria (e.g. close-ups of objects, modern cityscapes, people working, nature details).

Most of the category descriptions for abstract images were more complex than the ones for stock photographs. The participants did not know beforehand that they would have to name the categories (only that they would have to discuss them in some way), so they probably grouped images based on some feeling or intuition. This resulted in category descriptions such as "A feel of realism, but also artistic mess; a combination of a drawing and realism", "3D modeled, the same mood, a very coherent group, spheres", or "bright colors, they remind me of stereotypical computer graphics, could be (computer) desktop backgrounds, rich, lots of details". On the other hand, there were also many concise category names targeting various semantic levels (space, modern art, graffiti, flowers, landscapes, spheres, splashes, spirals, etc.).

Multidimensional scaling results
A visualization of the two-dimensional MDS solution for stock photographs is presented in Figure 5. The stress value for the solution is 0.07. Several clusters of images can be identified in the MDS solution, e.g. photographs of people, urban scenes, nature scenes, animals, non-living objects and food photographs. The diagonal axes of the solution seem to differentiate between 1) images with people and images without people, and 2) images depicting objects and images depicting scenes.

Figure 5. Result of the two-dimensional MDS solution for stock photographs

A visualization of the two-dimensional MDS solution for abstract images is presented in Figure 6. The stress value of the solution is 0.17. This suggests that inter-categorizer agreement was lower for abstract images than for stock photographs.

Figure 6. Result of the two-dimensional MDS solution for abstract images

Dark images seem to be placed in the bottom-left corner of the MDS visualization. We therefore converted the images to the CIELAB color space (Fairchild, 2005) and calculated their mean lightness. We then calculated the Pearson correlation coefficient r between the mean lightness and the MDS x+y values of the images, which resulted in r=0.756 (p<0.001), indicating a strong correlation. Representative images seem to be located in the top-left part of the MDS visualization. We therefore calculated the correlation between the abstractness scores and the MDS y−x coordinates of the images. The scores were calculated as the mean of the participants' evaluations on the 1 ("abstract") to 7 ("representative") scale. This resulted in r=0.772 (p<0.001), also indicating a strong correlation.
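The lightness analysis above combines two standard computations. The first converts pixels to CIELAB lightness L*. The second correlates the per-image means with a projection of the MDS coordinates: x+y for the dark-to-light diagonal, y−x for the abstractness diagonal. The paper does not state which RGB-to-CIELAB conversion was used. The Python sketch below assumes sRGB input with a D65 white point and takes the mean of per-pixel L* values. The pixel data and coordinates in the demo block are hypothetical toy values, not study data, and no p-value is computed.

```python
# Sketch, assuming sRGB pixels with a D65 white point (the paper does not
# specify its conversion). Pixels are plain (r, g, b) tuples in 0..255 so the
# example needs no image library; all demo data below is hypothetical.
import math
from typing import Iterable, Sequence, Tuple

Pixel = Tuple[int, int, int]


def _srgb_to_linear(c: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel."""
    v = c / 255.0
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def lightness(pixel: Pixel) -> float:
    """CIELAB L* (0..100) of one sRGB pixel, via relative luminance Y."""
    r, g, b = (_srgb_to_linear(c) for c in pixel)
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b  # white point Yn = 1
    delta = 6 / 29
    f = y ** (1 / 3) if y > delta ** 3 else y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


def mean_lightness(pixels: Iterable[Pixel]) -> float:
    values = [lightness(p) for p in pixels]
    return sum(values) / len(values)


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical toy "images" (two pixels each) and 2-D MDS coordinates.
    images = {
        "dark": [(10, 10, 30), (0, 0, 0)],
        "mid": [(120, 110, 100), (90, 140, 160)],
        "light": [(240, 240, 220), (255, 255, 255)],
    }
    coords = {"dark": (-0.4, -0.3), "mid": (0.0, 0.1), "light": (0.3, 0.4)}
    names = list(images)
    lum = [mean_lightness(images[n]) for n in names]
    diag = [coords[n][0] + coords[n][1] for n in names]  # x + y projection
    print(round(pearson_r(lum, diag), 3))
```

For the abstractness correlation, the same `pearson_r` would be applied to the per-image mean opinion scores and y − x instead of x + y. A statistics library would be needed to attach a p-value to r.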
Dimension 2 appears to represent the distinction between man-made and natural scenes/objects. The result of the correspondence analysis for abstract images is shown in Figure 8. Dimension 1 explains 17.0% of the inertia and dimension 2 explains 15.4%, adding up to 32.4% of the total inertia. Even though the two dimensions explain more of the variance than the two dimensions for stock photographs, the solution is not as clear to interpret as the one for stock photographs (Figure 7). This is an indication of the complexity of similarity evaluations for abstract images. It is also partially due to the fact that two of the images - the only ones with clearly identifiable human figures - are isolated and the rest of the images are tightly clustered. Correspondence analysis results The result of the correspondence analysis for the stock photographs is shown in Figure 7. Dimension 1 (horizontal axis) explains 14.3% of the total inertia (variance) of the data and dimension 2 (vertical axis) explains 13.5%. The two dimensions together then account for 27.8% of the inertia. The subclasses correlating positively the most with dimension 1 are Photography - Technique (r=0.70) and Function – Advertisement (r=0.52), and the ones that correlate negatively Theme – Misc. theme (r=-0.76) and Photography – Distance (r=-0.71). The subclasses that correlate positively the most with dimension 2 are People Person (r=0.72) and Scene – Nature (r=0.43), and the ones that correlate negatively Object – Non-living (r=-0.66) and Description – Property (r=-0.52). All these correlations are statistically significant (p<0.001). The semantic aspects represented by the two axes are not as clear as in the case of stock photographs, but subclasses People – person and Description - property can be found in the ends of both dimension 1 of stock photographs and dimension 2 of abstract images. 
The subclasses that correlate positively the most with dimension 1 are People - Person (r=0.87) and People Portraits (r=0.74), and the ones that correlate negatively Description - Property (r=-0.56) and Scene - Landscape (r=-0.53). The subclasses that correlate positively the most with dimension 2 are Object - Buildings (r=0.69) and Scene - Cityscape (r=0.67), and the ones that correlate negatively Scene - Nature (r=-0.70) and Scene - Landscape (r=-0.55). All these correlations are statistically significant (p<0.001). Dimension 1 seems to differentiate between images of Figure 7. Result of the correspondence analysis based on subclass usage for stock photographs Figure 8. Result of the correspondence analysis based on subclass usage for abstract images 7 theme technology was extended to include industry. In addition, especially for abstract images but to a lesser extent also for stock photographs, the function art images appeared in the category names. Furthermore, visual impression (e.g. clear, fuzzy) was used as a categorization criterion for abstract images. The addition of these subclasses does not change the results of the comparisons between the three image genres, because the main class level remained unmodified. DISCUSSION Complexity of image categorization Magazine photograph category names contained on average 1.38 classes, stock photograph category names 1.26 classes, and abstract image category names 1.45 classes. These findings are roughly in line with Jörgensen’s (1995) results, where one third of image group names were composed of multiple (on average 1.5) terms. There is, however, some variation, which could be interpreted as an indication of the complexity of categorization criteria. The time taken to sort the images can be interpreted to correlate with the difficulty of grouping the test images into coherent categories. 
Taken together, these two findings indicate that image categorization was the most simple for stock photographs and the most complex for abstract images. The more complex the categorization is, the more difficult it is to represent the criteria employed to perform it (e.g. in the form of a categorization framework). Applicability of the framework for image categorization Based on our results on main class usage, the categorization framework developed for magazine photographs (LaineHernandez & Westman, 2008) can be extended to apply to stock photographs. For seven out of ten main classes there were no significant usage differences. The only main classes with significant differences were People, Scene and Object. Since these classes describe the main semantic content of the images, it is not surprising that their usage differs between different image genres and even between different image collections within genres. It should, however, be noted that despite the differences, these three classes were all among the four most-used classes for both magazine and stock photographs. Dimensions of similarity evaluations The dimensions of image similarity criteria as revealed by MDS were different for stock photographs and abstract images. The result for stock photographs was largely similar to the results reported in earlier studies (LaineHernandez & Westman, 2008; Rogowitz et al., 1998; Teeselink et al 2000). The result for abstract images, however, revealed entirely different bases for categorization: the lightness vs. darkness and abstractness vs. representativeness of the images. These dimensions are not about the main semantic content of the images, but describe their visual and stylistic/representational aspects. Abstract images differed more from the two photograph genres; only three out of ten main classes did not show a statistically significant difference across all three image genres: Affective, Description and Photography. 
The usage of these three classes (Affective, Description and Photography) was at best moderate when evaluated by the percentage of category descriptions in which they appeared. However, taking into account the number of images they were used to categorize, the classes Description, Function and Photography were among the four most-used main classes when averaged over the three image genres. This shows that they form important and widely applicable bases for image categorization across image genres.

For stock photographs, the correspondence analysis conducted using subclass-level information revealed axes very similar to those of the MDS conducted by Rogowitz et al. (1998): man-made (buildings & cityscape) vs. natural (landscape & nature), and more human-like (person & portraits) vs. less human-like (property & landscape). Considering that the image sorting data was not used at all in the correspondence analysis, the result shows that the categorization framework of Laine-Hernandez and Westman (2008) can accurately characterize stock photographs when compared to user-evaluated image similarity. For abstract images the result was not as clear, indicating that the framework is perhaps less suitable for non-photographs and some other image genres.

The largest differences across all three image genres occurred in the use of the main classes People, Scene and Visual. The classes People and Scene were seldom used in the categorization of abstract images. As mentioned above, these differences can be explained by the subject matter of the test images. In 38 of the 100 stock photographs, people were the main subject matter and were used by the participants as a sorting criterion. The study of Laine-Hernandez and Westman (2008) included photographs from five different magazine types, each of which included photographs of people, one of them “predominantly” so.
In contrast, only 2 of the 100 abstract images contained people depicted clearly enough for the participants to use them as sorting criteria, and these two images were strongly categorized based on this aspect (also evident from the correspondence analysis result in Figure 8). This again demonstrates the importance of the presence of people in images. The class Visual, on the other hand, was used rarely in the categorization of photographs, but in the case of abstract images it was by far the most used class. Visual aspects are therefore important in image categorization across genres.

Additions to the categorization framework

During the data analysis of Experiments I and II, a few possible additions to the categorization framework (Laine-Hernandez & Westman, 2008) emerged and were used in the data analysis for stock photographs and abstract images. Most notably, the addition of the subclass Object - nature objects logically fills the gap between the subclasses non-living and animals. The subclass covers plants, flowers, etc., and was used in both experiments.

CONCLUSIONS

This paper presented the results of two subjective image categorization experiments and compared them with each other and with an earlier study on the categorization of magazine photographs. We found that the image categorization criteria for magazine and stock photographs are fairly similar, while the bases for categorizing abstract images differ more from the former two. According to the results of this study, the image categorization framework developed for magazine photographs can be generalized fairly well to other image genres, and especially well to stock photographs. The categorization framework is detailed enough to characterize the contents and similarity of stock photographs.

ACKNOWLEDGMENTS

This study was funded by the Academy of Finland and the National Technology Agency of Finland.

REFERENCES

Borg, I., & Groenen, P. J. F. (2005). Modern Multidimensional Scaling: Theory and Applications, 2nd ed. Springer, New York.
Cox, T. F., & Cox, M. A. (2001). Multidimensional Scaling, 2nd ed. Chapman and Hall, Boca Raton.
Fairchild, M. D. (2005). Color Appearance Models, 2nd ed. Wiley-IS&T, Chichester, UK.
Greisdorf, H., & O’Connor, B. (2001). Modelling what users see when they look at images: a cognitive viewpoint. Journal of Documentation 58(1), 6-29.
Greisdorf, H., & O’Connor, B. (2002). What do users see? Exploring the cognitive nature of functional image retrieval. Proceedings of the 65th Annual Meeting of the American Society for Information Science and Technology 39, 383-390.
Husson, F., Josse, J., & Lê, S. (2008). FactoMineR: An R Package for Multivariate Analysis. Journal of Statistical Software 25(1), 1-18.
Jörgensen, C. (1995). Classifying Images: Criteria for Grouping as Revealed in a Sorting Task. Proceedings of the 6th ASIS SIG/CR Classification Research Workshop.
Jörgensen, C. (1998). Attributes of Images in Describing Tasks. Information Processing & Management 34(2), 161-174.
Kruskal, J. B. (1964). Multidimensional Scaling by Optimizing Goodness of Fit to a Nonmetric Hypothesis. Psychometrika 29(1), 1-27.
Laine-Hernandez, M., & Westman, S. (2006). Image Semantics in the Description and Categorization of Journalistic Photographs. Proceedings of the 69th Annual Meeting of the American Society for Information Science and Technology.
Laine-Hernandez, M., & Westman, S. (2008). Multifaceted image similarity criteria as revealed by sorting tasks. Proceedings of the 71st Annual Meeting of the American Society for Information Science and Technology.
Leisti, T., Radun, J., Virtanen, T., Halonen, R., & Nyman, G. (2009). Subjective experience of image quality: attributes, definitions, and decision making of subjective image quality. In S. P. Farnand & F. Gaykema (Eds.), Image Quality and System Performance VI. San Jose, CA, USA.
Mojsilovic, A., & Rogowitz, B. (2001). Capturing Image Semantics with Low-level Descriptors. Proceedings of the IEEE International Conference on Image Processing (ICIP 2001) 1, 18-21.
R Development Core Team (2010). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. URL: http://www.R-project.org.
Rogowitz, B. E., Frese, T., Smith, J. R., Bouman, C. A., & Kalin, E. (1998). Perceptual image similarity experiments. In Proceedings of SPIE Conference on Human Vision and Electronic Imaging III, 3299, 576-590.
Rorissa, A., & Hastings, S. K. (2004). Free sorting of images: Attributes used for categorization. Proceedings of the 67th Annual Meeting of the American Society for Information Science and Technology.
Rorissa, A., & Iyer, H. (2008). Theories of cognition and image categorization: What category labels reveal about basic level theory. Journal of the American Society for Information Science and Technology 59(9), 1383-1392.
Sormunen, E., Markkula, M., & Järvelin, K. (1999). The Perceived Similarity of Photos - A Test-Collection Based Evaluation Framework for the Content-Based Photo Retrieval Algorithms. In S. W. Draper, M. D. Dunlop, I. Ruthven, & C. J. van Rijsbergen (Eds.), Mira 99: Evaluating interactive information retrieval.
Teeselink, I. K., Blommaert, F., & de Ridder, H. (2000). Image Categorization. Journal of Imaging Science and Technology 44(6), 552-55
Vailaya, A., Jain, A., & Zhang, H. J. (1998). On Image Classification: City Images vs. Landscapes. Pattern Recognition 31(12), 1921-1935.
Westman, S., & Laine-Hernandez, M. (2008). The effect of page context on magazine image categorization. Proceedings of the 71st Annual Meeting of the American Society for Information Science and Technology.

APPENDIX 1

Percentage distribution of main and subclasses in the three experiments. New additions to the class hierarchy in italics.

Main/subclass Function Magazine photographs 3.2 Stock photographs 3.4 Abstract images Main/subclass 9.9 Magazine photographs Stock photographs Abstract images Cinema 1.7 0 0 Product photos 0.7 0 0 Travel 1.0 0 0 Reportage 0 0 0 Fashion 1.7 0 0 Portraits 0.2 1.8 0 Art 3.2 0.5 0.2 News photos 0.2 0 0 Transportation 0.5 0.7 0 Illustration 0 0 0 Architecture 1.0 1.8 0 Symbol photos 0 0 0 Home&family 0.5 0.7 0 Advertisement 0.2 0.2 2.8 Culture 0.5 0 0 0.7 2.7 0 0.7 0 0 Economy 0 0.2 0 Religion 0.2 0 0 Hobbies&leisure 0.2 1.4 0 Profile piece 0.5 0 0 Vacation photos 0.5 0.2 0 Industry & Technology Art images - 0.9 3.8 Politics Misc. function 0.7 0.2 3.4 People 25.2 15.8 1.6 Person 8.8 5.0 1.6 Social status 3.9 1.6 0 Gender 4.4 0.7 0 Posing 3.2 1.6 0 Groups 1.5 3.2 0 Relationships 0.7 1.1 0 Age 1.2 2.3 0 Expression 0.7 0.5 0 Eye contact 0.7 0 0 Object 12.2 23.1 8.1 13.8 Buildings 3.4 3.4 0.2 3.4 0.5 Animals 0 5.4 0.4 Nature objects - 5.7 5.7 Scene 10.3 18.6 1.1 1.8 Time 2.4 0.9 0.6 Number 3.9 5.0 8.7 Visual 17.6 Composition 0 0.2 5.3 Impression - 0 2.6 0.5 0.2 0.4 0.2 0 12.2 0 Shape Nature 0.5 3.6 0.6 Theme Food&drink 22.2 16.5 4.6 Photography 5.9 3.9 0 Work 2.2 1.8 0 Sports 2.0 0.7 0.2 10 38.1 1.6 0.2 1.6 2.0 1.2 1.0 6.6 2.0 Color Interiors 6.4 3.2 8.7 0 1.0 Misc. scene 1.1 6.6 1.6 4.5 0 0.4 3.7 2.0 3.6 0.2 1.2 7.6 0.2 3.6 Property Landscape 0.5 4.7 1.4 2.9 Mood Motion Cityscape 2.7 4.2 Emotion 3.2 5.5 2.6 2.4 Activity 0 2.3 6.8 Event Affective 20.1 5.4 1.5 7.6 Description Non-living Vehicles Misc. theme Story 5.6 5.9 6.6 Distance 1.5 5.7 0.2 Black&white 1.7 0 0 Style/technique 0.7 0.2 6.3 Image size 1.2 0 0 Cropping 0.5 0 0