Program Assessments Study

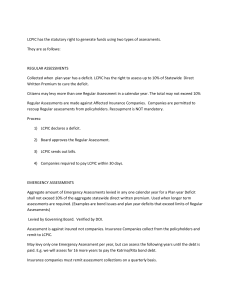
