A Simple Example of Finding MLE`s Using SAS PROC NLP With the

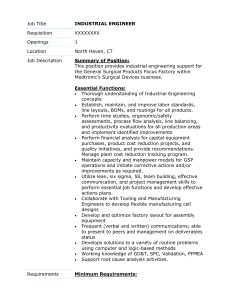

advertisement

1

A Simple Example of Finding MLE’s Using SAS PROC NLP

With the Nelder-Mead Simplex Method, Followed by Newton’s Method

We want to obtain MLE’s for a simple example in which a relatively small (n = 5) data set has been sampled from a normal distribution with mean µ and finite variance σ². The data values are: x₁ = 1, x₂ = 3, x₃ = 4, x₄ = 5, and x₅ = 7.

We will use SAS PROC NLP to obtain simultaneous MLE’s for both parameters using the Nelder-Mead

Simplex algorithm, followed by refinement of the parameter estimates using Newton’s method.

The log-likelihood function to be maximized is:

$$l(\mu, \sigma; \vec{x}) = -\frac{n}{2}\ln(2\pi) - n\ln(\sigma) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}(x_i - \mu)^2. \tag{1}$$

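In PROC NLP, we enter this function for a single observation; the procedure evaluates it for each of the n observations in the data set and sums the results to form the objective function. The constant term −(n/2) ln(2π) does not depend on the parameters, so it is omitted from the program below without changing the location of the maximum.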
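To make the summation concrete, here is a small Python sketch (not part of the SAS program; the function names are illustrative assumptions) that adds up the per-observation contributions for the five data values. At the starting values used in the program below (mean = 0, sigma = 1) the sum is -50, which is the starting objective value reported in the PROC NLP output.

```python
import math

DATA = [1.0, 3.0, 4.0, 5.0, 7.0]

def loglik_one(x, mean, sigma):
    """Contribution of one observation, with the constant -0.5*ln(2*pi) dropped."""
    return -0.5 * ((x - mean) / sigma) ** 2 - math.log(sigma)

def objective(mean, sigma, data=DATA):
    """Sum of the per-observation contributions over the whole data set."""
    return sum(loglik_one(x, mean, sigma) for x in data)

def full_loglik(mean, sigma, data=DATA):
    """Equation (1), i.e. the objective plus the constant term."""
    n = len(data)
    return objective(mean, sigma, data) - 0.5 * n * math.log(2.0 * math.pi)

start_value = objective(0.0, 1.0)   # objective at the starting values
print(start_value)
```

The two functions `objective` and `full_loglik` differ only by a constant, so they are maximized at the same parameter values.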

The Nelder-Mead method (without modification) does not necessarily converge to a minimum point for general non-convex functions. However, it does tend to produce “a rapid initial decrease in function values…”¹ regardless of the form of the function.
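For readers who have not seen the simplex idea written out, the following is a minimal Python sketch of a textbook Nelder-Mead iteration applied to the data of this example. It is an illustration under stated assumptions, not the algorithm as implemented in PROC NLP: the coefficients (reflection 1, expansion 2, contraction 0.5, shrink 0.5), the way the initial simplex is built, the stopping rule, and all function names are choices made here, so its iteration path will not match the SAS output. It minimizes the negative of the objective, which is equivalent to maximizing the log-likelihood.

```python
import math

DATA = [1.0, 3.0, 4.0, 5.0, 7.0]

def neg_loglik(theta):
    """Negative summed log-likelihood (constant term dropped)."""
    mean, sigma = theta
    if sigma <= 0.0:              # crude stand-in for a positivity bound on sigma
        return math.inf
    return sum(0.5 * ((x - mean) / sigma) ** 2 + math.log(sigma) for x in DATA)

def nelder_mead(f, start, step=1.0, tol=1e-12, max_iter=1000):
    n = len(start)
    # Initial simplex: the start point plus one vertex per coordinate direction.
    simplex = [list(start)]
    for i in range(n):
        vertex = list(start)
        vertex[i] += step
        simplex.append(vertex)
    values = [f(v) for v in simplex]

    for _ in range(max_iter):
        # Order the vertices from best (smallest f) to worst.
        order = sorted(range(n + 1), key=lambda k: values[k])
        simplex = [simplex[k] for k in order]
        values = [values[k] for k in order]
        if values[-1] - values[0] < tol:
            break
        worst = simplex[-1]
        centroid = [sum(v[j] for v in simplex[:-1]) / n for j in range(n)]

        def along(t):
            # Point on the line through the centroid and the worst vertex.
            return [centroid[j] + t * (worst[j] - centroid[j]) for j in range(n)]

        reflected = along(-1.0)
        f_r = f(reflected)
        if f_r < values[0]:
            expanded = along(-2.0)
            f_e = f(expanded)
            if f_e < f_r:
                simplex[-1], values[-1] = expanded, f_e
            else:
                simplex[-1], values[-1] = reflected, f_r
        elif f_r < values[-2]:
            simplex[-1], values[-1] = reflected, f_r
        else:
            # Outside contraction if the reflected point beat the worst, else inside.
            contracted = along(-0.5 if f_r < values[-1] else 0.5)
            f_c = f(contracted)
            if f_c < min(f_r, values[-1]):
                simplex[-1], values[-1] = contracted, f_c
            else:
                # Shrink every vertex halfway toward the best one.
                best = simplex[0]
                simplex = [best] + [
                    [best[j] + 0.5 * (v[j] - best[j]) for j in range(n)]
                    for v in simplex[1:]
                ]
                values = [values[0]] + [f(v) for v in simplex[1:]]

    k_best = min(range(n + 1), key=lambda k: values[k])
    return simplex[k_best], values[k_best]

estimate, value = nelder_mead(neg_loglik, [0.0, 1.0])
print(estimate, -value)
```

Note that the method uses only function values, never derivatives, which is why a derivative-based refinement step is a natural follow-up.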

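In this example, the log-likelihood has a single stationary point, which is its maximum, and there are only two parameters; hence the Nelder-Mead method should work well. (The function is not concave everywhere: in the output below, the Hessian at the starting values has one positive eigenvalue.) The SAS program for implementing the first algorithm is shown below, followed by an explanation of the options and statements in the PROC, and the output.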

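data Ex1;
   input x @@;                                   /* @@ reads several values of x from one line */
   datalines;
1 3 4 5 7
;

proc nlp data=Ex1 tech=nmsimp vardef=df covariance=h pcov phes;
   max loglik;                                   /* maximize the sum of loglik over observations */
   parms mean=0., sigma=1.;                      /* starting values */
   bounds sigma > 1e-12;                         /* keep sigma positive */
   loglik=-0.5*((x-mean)/sigma)**2-log(sigma);   /* contribution of one observation */
run;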

Options in the PROC NLP statement:

a) “data=” tells the procedure where to find the data set.

b) “tech” specifies the method for numeric optimization; in this case “nmsimp” tells SAS to use the

Nelder-Mead simplex method. “newrap” would specify the use of the Newton-Raphson method.

If no method is specified, the default method is Newton-Raphson.


c) “vardef” specifies the divisor to be used in the calculation of the covariance matrix and standard errors. The default value is N, the sample size. Here, “df” specifies that the degrees of freedom should be used as the divisor. (See p. 371 of the manual.)

d) “covariance” specifies that an approximate covariance matrix for the parameter estimates is to be computed. There are several ways to do this. (See p. 370 of the manual.) The option “h” used here specifies that, since the “max” statement is used in the procedure, the covariance matrix should be computed as $\frac{n}{\max\{1,\, n - df\}}\, G^{-1}$, where G is the Hessian matrix (the matrix of second partial derivatives of the objective function, (1), with respect to the parameters).

e) “pcov” tells SAS to display the covariance matrix.

f) “phes” tells SAS to display the Hessian matrix.
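As a rough cross-check of what “covariance=h”, “pcov”, and “phes” report, the Hessian of the summed log-likelihood can be written down analytically for this model and inverted by hand. The Python sketch below does so; the function names are illustrative, the second-derivative formulas are derived here from (1) rather than taken from SAS, and the covariance is computed as the inverse of the negative Hessian with the scale factor assumed to be 1. Under those assumptions the values agree with the Hessian matrices and standard errors printed in the output listings in this example.

```python
import math

DATA = [1.0, 3.0, 4.0, 5.0, 7.0]

def hessian(mean, sigma, data=DATA):
    """Analytic second derivatives of the summed log-likelihood.

    Returns (d2/dmean2, d2/dmean dsigma, d2/dsigma2).
    """
    n = len(data)
    s1 = sum(x - mean for x in data)          # sum of deviations
    s2 = sum((x - mean) ** 2 for x in data)   # sum of squared deviations
    h_mm = -n / sigma ** 2
    h_ms = -2.0 * s1 / sigma ** 3
    h_ss = n / sigma ** 2 - 3.0 * s2 / sigma ** 4
    return h_mm, h_ms, h_ss

def covariance(mean, sigma, data=DATA):
    """Inverse of the negative Hessian (symmetric 2x2), scale factor assumed to be 1."""
    h_mm, h_ms, h_ss = hessian(mean, sigma, data)
    det = h_mm * h_ss - h_ms ** 2
    return -h_ss / det, h_ms / det, -h_mm / det

# Hessian at the starting values (mean = 0, sigma = 1): indefinite.
print(hessian(0.0, 1.0))

# Covariance and standard errors at (mean = 4, sigma = 2).
var_mean, cov_ms, var_sigma = covariance(4.0, 2.0)
print(math.sqrt(var_mean), math.sqrt(var_sigma))
```

At the starting values the determinant of the Hessian is negative, which is why the procedure warns that the second-order optimality condition is violated there.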

Other statements:

a) The “max” statement specifies that the maximum point for the objective function (1) is to be

found. The “loglik” variable denotes the objective function.

b) The “parms” statement specifies initial values of the parameters, to be used in this case to

generate the initial simplex for starting the Nelder-Mead algorithm. (See p. 374 of the manual.)

c) The “bounds” statement specifies constraints on the values of the parameters. Here, the only

constraint is that σ must be positive. (See p. 325 of the manual.)

d) The objective function to be maximized is defined by a statement in the procedure. Here, we define $\mathit{loglik}(\mu, \sigma) = -\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2 - \ln(\sigma)$.
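Because this model is simple enough to solve by hand, the numerical results can be checked against a closed form. The following derivation is a supplementary check, not part of the SAS procedure; it sets the two partial derivatives of (1) to zero and solves for the parameters using the five data values.

```latex
\begin{aligned}
\frac{\partial l}{\partial \mu}
  &= \frac{1}{\sigma^2}\sum_{i=1}^{n}(x_i-\mu) = 0
  &&\Rightarrow\quad \hat{\mu} = \bar{x} = \frac{1+3+4+5+7}{5} = 4,\\[4pt]
\frac{\partial l}{\partial \sigma}
  &= -\frac{n}{\sigma} + \frac{1}{\sigma^3}\sum_{i=1}^{n}(x_i-\mu)^2 = 0
  &&\Rightarrow\quad \hat{\sigma} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^2}
     = \sqrt{\frac{9+1+0+1+9}{5}} = 2.
\end{aligned}
```

At this point the objective without the constant term equals $-\frac{20}{2\cdot 4} - 5\ln 2 \approx -5.9657$, so a correct numerical optimizer should report estimates near 4 and 2 and an objective value near −5.9657.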

The output of the program is listed below:

The SAS System

PROC NLP: Nonlinear Maximization

Gradient is computed using analytic formulas.

Hessian is computed using finite difference approximations (2) based on analytic gradient.

Optimization Start
Parameter Estimates

                              Gradient        Lower        Upper
                             Objective        Bound        Bound
N  Parameter     Estimate     Function   Constraint   Constraint
1  mean                 0    20.000000            .            .
2  sigma         1.000000    95.000000        1E-12            .

Value of Objective Function = -50

Hessian Matrix

                  mean           sigma
mean                -5    -40.00000001
sigma     -40.00000001    -295.0000001

Determinant = -124.9999997

Matrix has 1 Positive Eigenvalue(s)

WARNING: Second-order optimality condition violated.

Nelder-Mead Simplex Optimization

Parameter Estimates          2
Functions (Observations)     5
Lower Bounds                 1
Upper Bounds                 0

Optimization Start

Active Constraints           0
Objective Function         -50

                                                     Objective   Std Dev of   Restart
                 Function       Active   Objective    Function      Simplex    Vertex   Simplex
Iter  Restarts      Calls  Constraints    Function      Change       Values    Length      Size
   1         0         11            0    -6.40098      1.7805       0.7758     1.000     2.750
   2         0         20            0    -6.08749      0.3135       0.1291     1.000     1.438
   3         0         29            0    -5.97918      0.0496       0.0218     1.000     0.262
   4         0         38            0    -5.96671     0.00693      0.00312     1.000     0.101
   5         0         48            0    -5.96581    0.000095     0.000039     1.000    0.0213
   6         0         58            0    -5.96574     1.57E-6     6.954E-7     1.000   0.00459

Optimization Results

Iterations                       6    Function Calls                         60
Restarts                         0    Active Constraints                      0
Objective Function    -5.965738194    Std Dev of Simplex Values    6.9541052E-7
Deltax                           1    Size                         0.0045923843

FCONV2 convergence criterion satisfied.

NOTE: At least one element of the (projected) gradient is greater than 1e-3.

Optimization Results
Parameter Estimates

                                                               Gradient
                                Approx               Approx   Objective
N  Parameter     Estimate      Std Err    t Value   Pr > |t|    Function
1  mean          3.998087     0.894406   4.470100   0.006579    0.002392
2  sigma         1.999952     0.632418   3.162391   0.025028    0.000123

Value of Objective Function = -5.965738194

Hessian Matrix

                  mean           sigma
mean      -1.250060277    -0.002392044
sigma     -0.002392044    -2.500304826

Determinant = 3.1255260207

Matrix has Only Negative Eigenvalues

Covariance Matrix 2: H = (NOBS/d) inv(G)

                  mean           sigma
mean      0.7999628893    -0.000765325
sigma     -0.000765325     0.399951966

Factor sigm = 1

Determinant = 0.3199461445

Matrix has 2 Positive Eigenvalue(s)

Approximate Correlation Matrix of Parameter Estimates

                  mean           sigma
mean                 1    -0.001353029
sigma     -0.001353029               1

Determinant = 0.9999981693

Matrix has 2 Positive Eigenvalue(s)

The final parameter estimates obtained using the Nelder-Mead method were 𝜇̂ = 3.998087 and 𝜎̂ =

1.999952. We will use these as our initial guesses of the parameters, and refine these values by using

Newton’s method.
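To see why a single Newton iteration is enough from such a good starting point, the step can be reproduced by hand. The Python sketch below is an illustration only: the gradient and Hessian formulas are derived here from (1), the function names are assumptions, and it takes one full Newton step of length 1 with no line search or bound handling, so it is not a reimplementation of what PROC NLP does internally.

```python
DATA = [1.0, 3.0, 4.0, 5.0, 7.0]

def gradient(mean, sigma, data=DATA):
    """First derivatives of the summed log-likelihood: (d/dmean, d/dsigma)."""
    n = len(data)
    s1 = sum(x - mean for x in data)
    s2 = sum((x - mean) ** 2 for x in data)
    return s1 / sigma ** 2, -n / sigma + s2 / sigma ** 3

def hessian(mean, sigma, data=DATA):
    """Second derivatives: (d2/dmean2, d2/dmean dsigma, d2/dsigma2)."""
    n = len(data)
    s1 = sum(x - mean for x in data)
    s2 = sum((x - mean) ** 2 for x in data)
    return (-n / sigma ** 2,
            -2.0 * s1 / sigma ** 3,
            n / sigma ** 2 - 3.0 * s2 / sigma ** 4)

def newton_step(mean, sigma, data=DATA):
    """One Newton step: solve H * delta = -g for the 2x2 system by Cramer's rule."""
    g_m, g_s = gradient(mean, sigma, data)
    h_mm, h_ms, h_ss = hessian(mean, sigma, data)
    det = h_mm * h_ss - h_ms ** 2
    d_mean = (-g_m * h_ss + g_s * h_ms) / det
    d_sigma = (-g_s * h_mm + g_m * h_ms) / det
    return mean + d_mean, sigma + d_sigma

# Start from the Nelder-Mead estimates reported above.
new_mean, new_sigma = newton_step(3.998087, 1.999952)
print(new_mean, new_sigma)
```

Near the maximum the log-likelihood is very close to quadratic, so one step removes almost all of the remaining error in both parameters.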

The program for implementing Newton’s method is listed below, followed by the output. The final

MLE’s for the parameters (after 1 iteration) were found to be 𝜇̂ = 4.000000 and 𝜎̂ = 1.999999.

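data Ex1;
   input x @@;                                   /* @@ reads several values of x from one line */
   datalines;
1 3 4 5 7
;

proc nlp data=Ex1 tech=newrap vardef=n covariance=h pcov phes;
   max loglik;                                   /* maximize the sum of loglik over observations */
   parms mean=3.998087, sigma=1.999952;          /* start from the Nelder-Mead estimates */
   bounds sigma > 1e-12;                         /* keep sigma positive */
   loglik=-0.5*((x-mean)/sigma)**2-log(sigma);   /* contribution of one observation */
run;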

The SAS System

PROC NLP: Nonlinear Maximization

Gradient is computed using analytic formulas.

Hessian is computed using analytic formulas.

Optimization Start
Parameter Estimates

                              Gradient        Lower        Upper
                             Objective        Bound        Bound
N  Parameter     Estimate     Function   Constraint   Constraint
1  mean          3.998087     0.002391            .            .
2  sigma         1.999952     0.000122        1E-12            .

Value of Objective Function = -5.965738193

Hessian Matrix

                  mean           sigma
mean      -1.250060002    -0.002391422
sigma     -0.002391422    -2.500303451

Determinant = 3.125523618

Matrix has Only Negative Eigenvalues

Newton-Raphson Optimization with Line Search

Without Parameter Scaling

Parameter Estimates          2
Functions (Observations)     5
Lower Bounds                 1
Upper Bounds                 0

Optimization Start

Active Constraints                     0
Objective Function          -5.965738193
Max Abs Gradient Element    0.0023913648

                                                     Objective    Max Abs              Slope of
                 Function       Active   Objective    Function   Gradient     Step       Search
Iter  Restarts      Calls  Constraints    Function      Change    Element     Size    Direction
   1         0          2            0    -5.96574     2.29E-6   2.294E-6    1.000      -458E-8

Optimization Results

Iterations                              1    Function Calls                         3
Hessian Calls                           2    Active Constraints                     0
Objective Function           -5.965735903    Max Abs Gradient Element    2.2942694E-6
Slope of Search Direction    -4.580223E-6    Ridge                                  0

ABSGCONV convergence criterion satisfied.

Optimization Results
Parameter Estimates

                                                                  Gradient
                                Approx               Approx      Objective
N  Parameter     Estimate      Std Err    t Value   Pr > |t|       Function
1  mean          4.000000     0.894427   4.472138   0.006566    0.000000113
2  sigma         1.999999     0.632455   3.162280   0.025031    0.000002294

Value of Objective Function = -5.965735903

Hessian Matrix

                  mean           sigma
mean      -1.250001147    -1.125884E-7
sigma     -1.125884E-7    -2.500005736

Determinant = 3.1250100374

Matrix has Only Negative Eigenvalues

Covariance Matrix 2: H = (NOBS/d) inv(G)

                  mean           sigma
mean      0.7999992658    -3.602817E-8
sigma     -3.602817E-8    0.3999990823

Factor sigm = 1

Determinant = 0.3199989722

Matrix has 2 Positive Eigenvalue(s)

Approximate Correlation Matrix of Parameter Estimates

                  mean           sigma
mean                 1    -6.368951E-8
sigma     -6.368951E-8               1

Determinant = 1

Matrix has 2 Positive Eigenvalue(s)

¹ Lagarias, J. C., Reeds, J. A., Wright, M. H., and Wright, P. E. (1998). “Convergence Properties of the Nelder-Mead Simplex Method in Low Dimensions,” SIAM Journal on Optimization, 9(1), pp. 112-147.