Hashing and Hash Tables


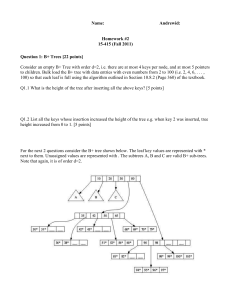

Hashing is key

Hash tables are one of the great inventions in computer science: a combination of lists, arrays, and a clever idea. The usual use is a symbol table, which associates a value (data) with a string (key). Countless other applications exist; we'll talk about some.

Hashing

The insight is to create from arbitrary data a small integer key (or just hash) that can be used to identify the data efficiently. Usually (but not always) only a subset of the data is used in making the key: the string in a string/value pair, for example. The complete data is stored under the key, usually in a hash table that uses the key as an index. This makes the key a form of lookup address (an index in a table). Some hashing schemes eliminate the table altogether and make the key the machine address itself.

Hash function

Why is it called a hash? Because the key is usually generated by mashing all the bits of the data together to make as random a mess as possible. OED: "A mixture of mangled and incongruous fragments; a medley; a spoiled mixture; a mess, jumble. Often in phr. to make a hash of, to mangle and spoil in attempting to deal with."

This is the job of the hash function: produce a hash from the data to be used as the key. The hash should be randomly distributed through a modest integer range, and that range is the size of the hash table that stores the data. For example, add up the bytes in a character string and take the result modulo 128 to get an index into a 128-entry array of string/value pairs.

Hashing styles

What to do if two items hash to the same key?

1. Perfect hashing. Arrange that there are no collisions. This can be done if the data is known in advance: choose the right hash function, or compute it. Rare, but nice when it works.
2. Open hashing. Put the colliding entry in the next slot of the table, or chain the entries together with pointers. When the table fills, rehash. Adds complexity; avoids memory allocation. Old-fashioned. (In the terminology used later in this document, storing colliding entries in other slots of the table is called open addressing or closed hashing.)
3. Hash buckets. Each hash table entry is the head of a list of items that hash to that value. This is almost always the way to go these days. You can still rehash if the lists get long, but we can often arrange that that won't happen.

[Figures: Open Hashing; Hash Buckets]

Hash tables have their limitations. If the hash function is poor or the table size is too small, the lists can grow long. Since they're unsorted, each lookup must scan a whole list, which leads to n² behaviour over n operations. There's no easy (let alone efficient) way to extract the elements in order.

Types of Hashing

• There are two types of hashing:
1. Static hashing: the set of keys is fixed and given in advance.
2. Dynamic hashing: the set of keys can change dynamically.
• The load factor of a hash table is the ratio of the number of keys in the table to the size of the hash table.
• As the load factor gets closer to 1.0, the likelihood of collisions increases.
• The load factor is a typical example of a space/time trade-off.

A good hash function should:
· Minimize collisions.
· Be easy and quick to compute.
· Distribute key values evenly in the hash table.
· Use all the information provided in the key.
· Have a high load factor for a given set of keys.

Hashing Methods

1. Prime-Number Division Remainder
• Computes the hash value from the key using the % operator.
• Table sizes that are powers of 2, such as 32 and 1024, should be avoided. key % 2^k keeps only the low-order k bits of the key, which leads to more collisions.
• Likewise, powers of 10 are poor table sizes when the keys are decimal integers, since key % 10^k keeps only the last k digits.
• Prime numbers not close to powers of 2 are better table sizes.
• This method works best when combined with truncation or folding, which are discussed below.

2. Truncation or Digit/Character Extraction
• Works based on the distribution of digits or characters in the key.
• The digit positions with the most even distribution are extracted and used for hashing.
• For instance, student IDs or ISBN codes may contain common subsequences, which may increase the likelihood of collision.
• Very fast, but the distribution of digits/characters in keys may not be very even.

3. Folding
• Splits keys into two or more parts and then combines the parts to form the hash address.
• To map the key 25936715 to a range between 0 and 9999, we can:
· split the number into 2593 and 6715, and
· add these two to obtain 9308 as the hash value.
• Very useful if we have keys that are very large.
• Fast and simple, especially with bit patterns.
• A great advantage is the ability to transform non-integer keys into integer values.

4. Radix Conversion
• Transforms a key into another number base to obtain the hash value.
• Typically uses a number base other than base 10 and base 2 to calculate the hash addresses.
• To map the key 38652 into the range 0 to 9999 using base 11, we read its digits as base-11 digits:
$3 \times 11^4 + 8 \times 11^3 + 6 \times 11^2 + 5 \times 11^1 + 2 \times 11^0 = 55354$
• We may truncate the high-order 5 to yield 5354 as our hash address within 0 to 9999.

5. Mid-Square
• The key is squared and the middle part of the result is taken as the hash value.
• To map the key 3121 into a hash table of size 1000, we square it ($3121^2 = 9740641$) and extract the middle digits, 406, as the hash value.
• Can be more efficient with powers of 2 as hash table size.
• Works well if the keys do not contain a lot of leading or trailing zeros.
• Non-integer keys have to be preprocessed to obtain corresponding integer values.

6. Use of a Random-Number Generator
• Given a seed as parameter, the method generates a random number.
• The algorithm must ensure that:
· It always generates the same random value for a given key.
· It is unlikely for two keys to yield the same random value.
• The random number produced can be transformed to produce a valid hash value.

Hash collision

In computer science, a hash collision is a situation that occurs when two distinct inputs into a hash function produce identical outputs. Most hash functions have potential collisions, but with good hash functions they occur less often than with bad ones.
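The hashing methods above can be checked with a few small functions. So can the point that weak hash functions collide more often. This is a minimal Python sketch. The function names, the default table sizes, and the choice to fold from the low-order end are assumptions for illustration, not part of the methods as described. It reproduces the worked examples for folding, radix conversion and mid-square. It also shows that the byte-sum hash from the opening example sends every anagram to the same slot.

```python
def byte_sum_hash(s: str, table_size: int = 128) -> int:
    """Opening example: add up the bytes of a string, modulo the table size."""
    return sum(s.encode("utf-8")) % table_size


def division_hash(key: int, table_size: int = 97) -> int:
    """Division remainder; 97 is an assumed prime size not close to a power of 2."""
    return key % table_size


def fold_hash(key: int, part_digits: int = 4, table_size: int = 10_000) -> int:
    """Folding: split the decimal key into part_digits-sized pieces and add them."""
    total = 0
    while key > 0:
        key, part = divmod(key, 10 ** part_digits)  # peel off the low-order piece
        total += part
    return total % table_size


def radix_hash(key: int, base: int = 11, table_size: int = 10_000) -> int:
    """Radix conversion: read the decimal digits as digits in another base."""
    value = 0
    for digit in str(key):
        value = value * base + int(digit)
    return value % table_size  # keeping the low-order digits truncates the high-order ones


def mid_square_hash(key: int, digits: int = 3) -> int:
    """Mid-square: square the key and keep its middle digits.

    Assumes the square has at least `digits` digits.
    """
    squared = str(key * key)
    start = (len(squared) - digits) // 2
    return int(squared[start:start + digits])


print(fold_hash(25936715), radix_hash(38652), mid_square_hash(3121))
print(byte_sum_hash("abc"), byte_sum_hash("cab"))  # anagrams: same bytes, same sum
```

For example, `byte_sum_hash("abc")` and `byte_sum_hash("cab")` both return 38: 97 + 98 + 99 = 294, and 294 mod 128 = 38. A hash that is sensitive to character order would usually avoid this collision.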
In certain specialized applications where a relatively small number of possible inputs are all known ahead of time, it is possible to construct a perfect hash function, which maps all inputs to different outputs. But for a function that takes input of arbitrary length and content and returns a hash of fixed length, there will always be collisions, because any given hash can correspond to an infinite number of possible inputs.

In searching

An efficient method of searching is to process a lookup key with a hash function, then use the resulting hash value as an index into an array of data. The resulting data structure is called a hash table. As long as different keys map to different indices, lookup can be performed in constant time. When multiple lookup keys are mapped to identical indices, however, a hash collision occurs. The most popular ways of dealing with this are:

• chaining: building a linked list of values for each array index;
• open addressing: searching other array indices nearby for an empty space.

Collision resolution

Collisions are practically unavoidable when hashing a random subset of a large set of possible keys. For example, suppose 2500 keys are hashed into a million buckets with a perfectly uniform random distribution. According to the birthday paradox, there is still a 95% chance that at least two of the keys hash to the same slot. Therefore, most hash table implementations have some collision resolution strategy to handle such events. Some common strategies are described below. All these methods require that the keys (or pointers to them) be stored in the table, together with the associated values.

Load factor

The performance of most collision resolution methods does not depend directly on the number n of stored entries. It depends strongly on the table's load factor, the ratio n/s between n and the size s of its bucket array.
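The birthday-paradox figure is easy to check numerically. The sketch below multiplies together the probabilities that each successive key lands in a still-empty bucket. It assumes perfectly uniform, independent hashing, and the function name `collision_probability` is hypothetical. It also prints the load factor, to show how small the load factor can be while a collision is still almost certain.

```python
def collision_probability(n_keys: int, n_buckets: int) -> float:
    """Probability that at least two of n_keys uniformly hashed keys share a bucket."""
    p_all_distinct = 1.0
    for i in range(n_keys):
        # the (i+1)-th key must avoid the i buckets already taken
        p_all_distinct *= (n_buckets - i) / n_buckets
    return 1.0 - p_all_distinct


n, s = 2500, 1_000_000
print("load factor n/s =", n / s)
print("P(at least one collision) = %.3f" % collision_probability(n, s))
```

With these numbers it reports a load factor of 0.0025 and a collision probability of about 0.956, in line with the 95% quoted above.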
With a good hash function, the average lookup cost is nearly constant as the load factor increases from 0 up to 0.7 or so. Beyond that point, the probability of collisions and the cost of handling them increase. On the other hand, as the load factor approaches zero, the size of the hash table increases with little improvement in the search cost, and memory is wasted.

Separate chaining

In the strategy known as separate chaining, direct chaining, or simply chaining, each slot of the bucket array is a pointer to a linked list. That list contains the key-value pairs that hashed to the same location. The operations are:

• Lookup: scan the list for an entry with the given key.
• Insertion: append a new entry record to either end of the list in the hashed slot.
• Deletion: search the list and remove the element.

The technique is also called open hashing or closed addressing, which should not be confused with "open addressing" or "closed hashing".

Chained hash tables with linked lists are popular because they require only basic data structures with simple algorithms. They can also use simple hash functions that are unsuitable for other methods. The cost of a table operation is that of scanning the entries of the selected bucket for the desired key. If the distribution of keys is sufficiently uniform, the average cost of a lookup depends only on the average number of keys per bucket, that is, on the load factor.

Chained hash tables remain effective even when the number of entries n is much higher than the number of slots. Their performance degrades more gracefully (linearly) with the load factor. For example, compare a chained hash table with 1000 slots and 10,000 stored keys (load factor 10) to a 10,000-slot table (load factor 1). The smaller table is five to ten times slower. It is still 1000 times faster than a plain sequential list, and possibly even faster than a balanced search tree.
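Separate chaining as described above fits in a few lines. This is a minimal Python sketch under stated assumptions:

• `ChainedHashTable` and its methods are hypothetical names;
• Python lists stand in for the linked-list chains;
• Python's built-in `hash()`, reduced modulo the slot count, serves as the hash function;
• there is no resizing.

```python
class ChainedHashTable:
    """Fixed number of slots; each slot holds a chain of (key, value) pairs."""

    def __init__(self, num_slots: int = 8):
        self.buckets = [[] for _ in range(num_slots)]  # one chain per slot
        self.count = 0  # number of stored entries, n

    def _bucket(self, key):
        # hash the key, then reduce it to a slot index
        return self.buckets[hash(key) % len(self.buckets)]

    def get(self, key):
        for k, v in self._bucket(key):  # scan the chain for the key
            if k == key:
                return v
        raise KeyError(key)

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # key already present: replace its value
                return
        bucket.append((key, value))  # new key: add it to the end of the chain
        self.count += 1

    def delete(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                self.count -= 1
                return
        raise KeyError(key)

    def load_factor(self) -> float:
        return self.count / len(self.buckets)  # n / s
```

Because the chains can grow without bound, the load factor may exceed 1, for example with 10 keys in 4 slots (load factor 2.5). Lookups still work, just with longer scans.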
For separate chaining, the worst case is when all entries are inserted into the same bucket. The hash table is then ineffective, and the cost is that of searching the bucket data structure. If the latter is a linear list, the lookup procedure may have to scan all its entries, so the worst-case cost is proportional to the number n of entries in the table.

The bucket chains are often implemented as ordered lists, sorted by the key field. This approximately halves the average cost of unsuccessful lookups compared to an unordered list. However, if some keys are much more likely to come up than others, an unordered list with a move-to-front heuristic may be more effective.

More sophisticated data structures, such as balanced search trees, are worth considering only in these cases:

• the load factor is large (about 10 or more);
• the hash distribution is likely to be very nonuniform;
• good performance must be guaranteed even in the worst case.

However, using a larger table and/or a better hash function may be even more effective in those cases.

Chained hash tables also inherit the disadvantages of linked lists. When storing small keys and values, the space overhead of the next pointer in each entry record can be significant. Traversing a linked list also has poor cache performance, which makes the processor cache ineffective.

Separate chaining with list heads

Some chaining implementations store the first record of each chain in the slot array itself. The purpose is to increase the cache efficiency of hash table access. To save memory space, such hash tables often have about as many slots as stored entries, meaning that many slots have two or more entries.

[Figure: Hash collision by separate chaining with head records in the bucket array.]

Separate chaining with other structures

Instead of a list, one can use any other data structure that supports the required operations.
By using a self-balancing tree, for example, the theoretical worst-case time of a hash table operation can be brought down to O(log n) rather than O(n). However, this approach is only worth the trouble and extra memory cost in two situations. One is when long delays must be avoided at all costs (e.g. in a real-time application). The other is when many entries are expected to hash to the same slot (e.g. with extremely non-uniform or even malicious key distributions).

The variant called array hashing uses a dynamic array to store all the entries that hash to the same bucket.[6] Each inserted entry is appended to the end of the dynamic array assigned to the hashed slot. This variation makes more effective use of CPU caching, since the bucket entries are stored in sequential memory positions. It also dispenses with the next pointers required by linked lists. That saves space when the entries are small, such as pointers or single-word integers.

An elaboration on this approach is so-called dynamic perfect hashing, where a bucket that contains k entries is organized as a perfect hash table with k² slots. It uses more memory (n² slots for n entries, in the worst case). In exchange, this variant has guaranteed constant worst-case lookup time and low amortized time for insertion.

Open addressing

In another strategy, called open addressing, all entry records are stored in the bucket array itself. When a new entry has to be inserted, the buckets are examined, starting with the hashed-to slot and proceeding in some probe sequence, until an unoccupied slot is found. When searching for an entry, the buckets are scanned in the same sequence. The scan stops when either the target record is found or an unused array slot is found; an unused slot indicates that there is no such key in the table.[9] The name "open addressing" refers to the fact that the location ("address") of the item is not determined by its hash value alone.
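Open addressing with the simplest probe sequence, linear probing (interval 1), can be sketched as follows. This is a minimal Python sketch under stated assumptions:

• `LinearProbingTable` is a hypothetical name;
• the table has a fixed size with no rehashing;
• deletion is left out.

Deletion is omitted because simply emptying a slot would cut the probe sequence of keys stored after it. Real implementations use tombstones or shift entries back instead.

```python
class LinearProbingTable:
    """Fixed-size open-addressing table using linear probing; no deletion, no resize."""

    def __init__(self, size: int = 8):
        self.slots = [None] * size  # each slot is None or a (key, value) pair

    def _probe(self, key):
        # probe sequence: home slot, then home+1, home+2, ... wrapping around
        size = len(self.slots)
        start = hash(key) % size
        for i in range(size):
            yield (start + i) % size

    def put(self, key, value):
        for idx in self._probe(key):
            entry = self.slots[idx]
            if entry is None or entry[0] == key:  # free slot, or same key to update
                self.slots[idx] = (key, value)
                return
        raise RuntimeError("table full; a real table would rehash into a larger one")

    def get(self, key):
        for idx in self._probe(key):
            entry = self.slots[idx]
            if entry is None:
                break  # unused slot: the key is not in the table
            if entry[0] == key:
                return entry[1]
        raise KeyError(key)
```

Consider a table with 8 slots. The integer keys 1, 9 and 17 all hash to slot 1 and end up in slots 1, 2 and 3. Key 2 then lands in slot 4, even though no other key hashed to slot 2. This is the same effect as "Ted Baker" in the figure.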
Open addressing is also called closed hashing. It should not be confused with "open hashing" or "closed addressing", which usually mean separate chaining.

[Figure: Hash collision resolved by open addressing with linear probing (interval=1). Note that "Ted Baker" has a unique hash, but nevertheless collided with "Sandra Dee", which had previously collided with "John Smith".]

Coalesced hashing

A hybrid of chaining and open addressing, coalesced hashing links together chains of nodes within the table itself. Like open addressing, it achieves space usage and (somewhat diminished) cache advantages over chaining. Like chaining, it does not exhibit clustering effects; in fact, the table can be efficiently filled to a high density. Unlike chaining, it cannot have more elements than table slots.

Robin Hood hashing

One interesting variation on double-hashing collision resolution is Robin Hood hashing. The idea is that a new key may displace a key already inserted if the new key's probe count is larger than that of the key at the current position. The net effect is to reduce worst-case search times in the table. This is similar to Knuth's ordered hash tables, except that the criterion for bumping a key does not depend on a direct relationship between the keys. Both the worst case and the variance of the number of probes are reduced dramatically. This makes possible an interesting variation: probe the table starting at the expected successful probe value, then expand from that position in both directions. External Robin Hood hashing is an extension of this algorithm. The table is stored in an external file, and each table position corresponds to a fixed-size page or bucket with B records.

Cuckoo hashing

Another alternative open-addressing solution is cuckoo hashing, which ensures constant lookup time in the worst case, and constant amortized time for insertions and deletions.
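Cuckoo hashing is simple enough to sketch in full. This is a minimal Python sketch under stated assumptions:

• there are two tables of equal size;
• the first hash is `hash(key) % size`;
• the second hash takes the next digits of the same value, `(hash(key) // size) % size`. This is an illustrative choice, not the independent hash functions a real implementation would use;
• evictions are capped, after which the sketch gives up instead of rehashing;
• `CuckooTable` and its methods are hypothetical names.

```python
class CuckooTable:
    """Two fixed-size tables; every key lives at t1[h1(key)] or t2[h2(key)]."""

    def __init__(self, size: int = 11, max_kicks: int = 32):
        self.size = size
        self.max_kicks = max_kicks
        self.t1 = [None] * size  # slots hold None or (key, value)
        self.t2 = [None] * size

    def _h1(self, key) -> int:
        return hash(key) % self.size

    def _h2(self, key) -> int:
        # assumed second hash: a different slice of the same hash value
        return (hash(key) // self.size) % self.size

    def _find(self, key):
        i, j = self._h1(key), self._h2(key)
        if self.t1[i] is not None and self.t1[i][0] == key:
            return self.t1, i
        if self.t2[j] is not None and self.t2[j][0] == key:
            return self.t2, j
        return None, None

    def get(self, key):
        table, idx = self._find(key)  # at most two probes, even in the worst case
        if table is None:
            raise KeyError(key)
        return table[idx][1]

    def delete(self, key):
        table, idx = self._find(key)
        if table is None:
            raise KeyError(key)
        table[idx] = None

    def put(self, key, value):
        table, idx = self._find(key)
        if table is not None:
            table[idx] = (key, value)  # existing key: update in place
            return
        entry = (key, value)
        for _ in range(self.max_kicks):
            i = self._h1(entry[0])
            entry, self.t1[i] = self.t1[i], entry  # take the slot, evict the occupant
            if entry is None:
                return
            j = self._h2(entry[0])
            entry, self.t2[j] = self.t2[j], entry  # evicted key moves to its other slot
            if entry is None:
                return
        raise RuntimeError("eviction cycle; a real table would rehash with new functions")
```

A lookup probes at most two slots, one per table, which is where the constant worst-case lookup time comes from. Insertion may evict a chain of keys. If the chain cycles, a real table would pick new hash functions and rebuild. This sketch instead raises an error, and may drop the key it was holding at that moment. For example, three keys that share both of their slots cannot all be stored.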
Hopscotch hashing

Another alternative open-addressing solution is hopscotch hashing. It combines the approaches of cuckoo hashing and linear probing, yet seems in general to avoid their limitations. In particular, it works well even when the load factor grows beyond 0.9. The algorithm is well suited for implementing a resizable concurrent hash table.

The hopscotch hashing algorithm works by defining a neighborhood of buckets near the original hashed bucket, where a given entry is always found. Thus, search is limited to the number of entries in this neighborhood. That number is logarithmic in the worst case and constant on average, and with proper alignment of the neighborhood a search typically requires one cache miss.

When inserting an entry, one first attempts to add it to a bucket in the neighborhood. However, if all buckets in this neighborhood are occupied, the algorithm traverses buckets in sequence until an open slot (an unoccupied bucket) is found, as in linear probing. At that point, since the empty bucket is outside the neighborhood, items are repeatedly displaced in a sequence of hops. This is reminiscent of cuckoo hashing, with one difference: here the empty slot is moved into the neighborhood, instead of items being moved out in the hope of eventually finding an empty slot. Each hop brings the open slot closer to the original neighborhood, without invalidating the neighborhood property of any of the buckets along the way. In the end the open slot has been moved into the neighborhood, and the entry being inserted can be added to it.
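The insertion procedure just described (probe linearly for an empty slot, then hop it back into the neighborhood) can be sketched as below. This is a minimal, single-threaded Python sketch under assumptions made for illustration:

• the table is fixed-size and circular;
• the home bucket is `hash(key) % size`;
• the neighborhood size is H = 4;
• the H neighborhood slots are scanned directly, instead of using the per-bucket hop bitmaps that practical implementations typically keep;
• an exception is raised where a real table would resize;
• `HopscotchTable` and its methods are hypothetical names.

```python
class HopscotchTable:
    """Fixed-size hopscotch table; every key stays within H slots of its home bucket."""

    def __init__(self, size: int = 16, neighborhood: int = 4):
        self.size = size
        self.H = neighborhood
        self.slots = [None] * size  # each slot is None or a (key, value) pair

    def _home(self, key) -> int:
        return hash(key) % self.size

    def _dist(self, a: int, b: int) -> int:
        # forward distance from slot a to slot b, wrapping around the array
        return (b - a) % self.size

    def _find(self, key):
        home = self._home(key)
        for i in range(self.H):  # search only the neighborhood
            idx = (home + i) % self.size
            entry = self.slots[idx]
            if entry is not None and entry[0] == key:
                return idx
        return None

    def get(self, key):
        idx = self._find(key)
        if idx is None:
            raise KeyError(key)
        return self.slots[idx][1]

    def delete(self, key):
        idx = self._find(key)
        if idx is None:
            raise KeyError(key)
        self.slots[idx] = None  # no tombstone: lookups always scan the whole neighborhood

    def put(self, key, value):
        idx = self._find(key)
        if idx is not None:
            self.slots[idx] = (key, value)
            return
        home = self._home(key)
        # 1. Linear probing for the first empty slot.
        for d in range(self.size):
            free = (home + d) % self.size
            if self.slots[free] is None:
                break
        else:
            raise RuntimeError("table full; a real table would resize")
        # 2. Hop the empty slot back until it lies inside home's neighborhood.
        while self._dist(home, free) >= self.H:
            for back in range(self.H - 1, 0, -1):  # farthest candidate first
                cand = (free - back) % self.size
                cand_home = self._home(self.slots[cand][0])
                if self._dist(cand_home, free) < self.H:  # move keeps cand in its neighborhood
                    self.slots[free] = self.slots[cand]
                    self.slots[cand] = None
                    free = cand
                    break
            else:
                raise RuntimeError("no entry can hop; a real table would resize")
        # 3. The empty slot is now within H of home.
        self.slots[free] = (key, value)
```

In the test scenario, keys 0, 16, 1, 2 and 3 fill slots 0 to 4. Key 32 (home bucket 0) then finds its first empty slot at 5, outside its neighborhood. Key 2 hops from slot 3 to slot 5, which is still within its own neighborhood, and 32 takes slot 3.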