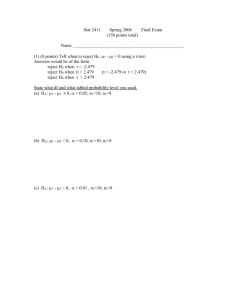

Testing Two Means

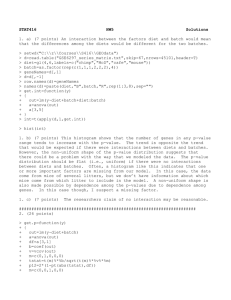

The good news here is that the distributional assumptions are the same as for the single mean test. Once again, if you know the population standard deviation (though this time you need to know both) you use a Z, otherwise you use a t. The test statistics are a little uglier, but by no means unmanageable. So let’s set one up.

Suppose two samples have been collected. Sample 1 has a mean of 5 drawn from 17 observations. Sample 2 has a mean of 7 drawn from 19 observations. Suppose also you know σ1 = 1 and σ2 = 1.5. Conduct a hypothesis test that the two means are equal (α = 0.05).

Our first step is (as always) to state the null and alternative. Since our claim is that the two means are equal, the following are perfectly reasonable and correct hypotheses to test.

H0: μ1 = μ2; HA: μ1 ≠ μ2

However, there’s a slight alteration that will be useful to us a little later when setting up the test statistic. If we rearrange these, we have a numerical answer on the right and both of our means on the left. This trick is also useful should we ever wish to test for something other than equality.

H0: μ1 - μ2 = 0; HA: μ1 - μ2 ≠ 0

Since this is a two-sided Z-test, the critical value is no different than before. This also means that our decision rule is identical as well.

Zcrit = ±1.96. If Zstat > 1.96 or Zstat < -1.96, reject H0. Else, fail to reject H0.

Here is our test statistic:

$$Z_{stat} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}}$$

It’s tedious, but not super difficult.

$$Z_{stat} = \frac{(5 - 7) - (0)}{\sqrt{\dfrac{1}{17} + \dfrac{2.25}{19}}} = -4.75$$

Notice we are simply plugging in what we know. Since we were given standard deviations and the denominator calls for variances, we did have to square our given numbers. Also, the second half of the numerator is once again drawn from the hypotheses. Had we (for example) wanted to see if mean 2 was 4 larger than mean 1, we would insert -4 (as it would match our hypotheses).

Since our Zstat is well below -1.96, we reject H0. These two averages are not equal.
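To double-check the arithmetic in the Z-test above, here is a minimal Python sketch. The function name `two_sample_z` and its parameter names are made up for illustration (they are not from the text), and it relies only on the standard library’s `statistics.NormalDist` for the critical value and a two-sided p-value.

```python
import math
from statistics import NormalDist


def two_sample_z(x1, x2, sigma1, sigma2, n1, n2, delta0=0.0, alpha=0.05):
    """Two-sided Z-test for H0: mu1 - mu2 = delta0 with known sigmas.

    Hypothetical helper for illustration only.
    Returns (z_stat, z_crit, p_value, reject_h0).
    """
    # Standard error: the sigmas are squared because the formula uses variances.
    se = math.sqrt(sigma1**2 / n1 + sigma2**2 / n2)
    z_stat = ((x1 - x2) - delta0) / se
    # Upper critical value for a two-sided test (about 1.96 when alpha = 0.05).
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))
    return z_stat, z_crit, p_value, abs(z_stat) > z_crit


# The example from the text: means 5 and 7, sigmas 1 and 1.5, n = 17 and 19.
z, z_crit, p_value, reject = two_sample_z(5, 7, 1.0, 1.5, 17, 19)
print(round(z, 2), round(z_crit, 2), reject)  # z is about -4.75, z_crit about 1.96
```

To test whether mean 2 is 4 larger than mean 1, you would pass `delta0=-4`, mirroring the way the hypothesized difference enters the numerator.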
Are we okay so far? Okay, now let’s check what happens when we don’t know the σ’s. When you didn’t know σ before, you simply used s as a proxy and the t-distribution. That isn’t changing in essence; we are still using s1 and s2 as proxies and switching to a t. There are, however, two different t-tests based on your assumptions about σ1 and σ2. If you are willing to believe that σ1 = σ2, then you will use one tstat. If you believe that the two aren’t equal, then you use a different tstat. How do you determine which to use? I have an idea; didn’t we do something where we tested whether or not variances (and subsequently standard deviations) are equal...

While you ponder that one, I’m going to set up the same test using the same dataset from the Z-test we did previously. I’m going to show you how to set this up using both cases. Let’s pretend for now that s1 = σ1 and s2 = σ2 (that is, s1 = 1 and s2 = 1.5).

| If σ1 = σ2 | If σ1 ≠ σ2 |
| --- | --- |
| H0: μ1 - μ2 = 0; HA: μ1 - μ2 ≠ 0 | H0: μ1 - μ2 = 0; HA: μ1 - μ2 ≠ 0 |
| With α = 0.05 and df = n1 + n2 - 2 = 34, tcrit = ±2.0322 | With α = 0.05 and df = n1 + n2 - 2 = 34, tcrit = ±2.0322 ¹ |
| If tstat > 2.0322 or tstat < -2.0322, reject H0. Else, fail to reject. | If tstat > 2.0322 or tstat < -2.0322, reject H0. Else, fail to reject. |

Notice at this point, these are identical. Now is where the difference enters. Before I continue, I need to introduce the pooled standard deviation. What this does is take a weighted average of the two sample variances, weighted by the relative number of observations, and then take the square root. It should lie between s1 and s2.

$$S_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}} = \sqrt{\frac{(17 - 1)(1) + (19 - 1)(2.25)}{17 + 19 - 2}} = \sqrt{\frac{56.5}{34}} \approx 1.289$$

We’ll need this for the case that the sigmas are equal; the calculation that follows carries it as 1.28. Okay, now we can proceed.

| If σ1 = σ2 | If σ1 ≠ σ2 |
| --- | --- |
| $t_{stat} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{S_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}$ | $t_{stat} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$ |

Once again, tedious but not difficult. A couple of comments that I should make at this point. First, notice that the df is present in the tstat on the left, inside the Sp.
It’s not apparent on the right; this is an exception to what normally occurs. Also, notice how similar these tests are. It’s not “in your face”, but it’s there. The numerators are identical. The denominators are “standard deviation” divided by “square root of n”. Do you see it?

¹ This df differs from the text. I am overruling the text and going with this measure of degrees of freedom.

| If σ1 = σ2 | If σ1 ≠ σ2 |
| --- | --- |
| $t_{stat} = \dfrac{(5 - 7) - (0)}{1.28\sqrt{\dfrac{1}{17} + \dfrac{1}{19}}} = -4.68$ | $t_{stat} = \dfrac{(5 - 7) - (0)}{\sqrt{\dfrac{1}{17} + \dfrac{2.25}{19}}} = -4.75$ |

Reject H0 (both cases). The two means are not equal (both cases). That’s it.

Ok, so try this one. Conduct the appropriate hypothesis test (α = 0.10) that these two means are equal: Sample 1 has an average of 3, a standard deviation of 1.2, and a sample size of 10. Sample 2 has an average of 4, a standard deviation of 2.1, and a sample size of 14. Try this out before reading the answer that follows.

Okay, you should know it’s a t-test because there’s no mention of σ’s anywhere. But which t-test? Test the variances!

H0: σ1 = σ2; HA: σ1 ≠ σ2

Fcrit (α = 0.10, df1 = 14 - 1, df2 = 10 - 1) = 2.577. If Fstat > 2.577, reject H0. Else, fail to reject H0.

$$F_{stat} = \frac{2.1^2}{1.2^2} = \frac{4.41}{1.44} = 3.06$$

Reject H0. The two variances are not equal. So, this means that we operate assuming that σ1 ≠ σ2.

H0: μ1 - μ2 = 0; HA: μ1 - μ2 ≠ 0

tcrit (α = 0.10, df = 14 + 10 - 2 = 22) = ±1.7171. If tstat > 1.7171 or tstat < -1.7171, reject H0. Else, fail to reject H0.

$$t_{stat} = \frac{(3 - 4) - (0)}{\sqrt{\dfrac{1.44}{10} + \dfrac{4.41}{14}}} = -1.48$$

Since -1.48 lies between -1.7171 and 1.7171, we fail to reject H0. These two samples appear to have equal means.
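The t-test calculations above can be checked with a short Python sketch. All function names here (`pooled_sd`, `t_stat_pooled`, `t_stat_unpooled`, `two_sided_decision`) are hypothetical helpers written for this illustration. Critical values are passed in from a table, as in the text, rather than computed. The statistics use unrounded intermediate values, so the pooled result differs slightly from a hand calculation that carries a rounded Sp.

```python
import math


def pooled_sd(n1, s1, n2, s2):
    """Pooled standard deviation; the denominator is df = n1 + n2 - 2."""
    return math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def t_stat_pooled(x1, x2, n1, s1, n2, s2, delta0=0.0):
    """t statistic when we are willing to assume sigma1 = sigma2."""
    sp = pooled_sd(n1, s1, n2, s2)
    return ((x1 - x2) - delta0) / (sp * math.sqrt(1 / n1 + 1 / n2))


def t_stat_unpooled(x1, x2, n1, s1, n2, s2, delta0=0.0):
    """t statistic when we assume sigma1 != sigma2."""
    return ((x1 - x2) - delta0) / math.sqrt(s1**2 / n1 + s2**2 / n2)


def two_sided_decision(stat, crit):
    """Apply the two-sided rule: reject when the statistic is beyond +/- crit."""
    return "reject H0" if abs(stat) > crit else "fail to reject H0"


# Worked example: means 5 and 7, s1 = 1, s2 = 1.5, n1 = 17, n2 = 19.
print(round(pooled_sd(17, 1.0, 19, 1.5), 3))              # about 1.289
print(round(t_stat_pooled(5, 7, 17, 1.0, 19, 1.5), 2))    # about -4.65 (unrounded Sp)
print(round(t_stat_unpooled(5, 7, 17, 1.0, 19, 1.5), 2))  # about -4.75

# Practice problem: means 3 and 4, s1 = 1.2, s2 = 2.1, n1 = 10, n2 = 14.
f_stat = 2.1**2 / 1.2**2  # larger sample variance on top, about 3.06
t_practice = t_stat_unpooled(3, 4, 10, 1.2, 14, 2.1)  # about -1.48
# 1.7171 is the table value for alpha = 0.10 (two-sided), df = 22.
print(round(f_stat, 2), round(t_practice, 2), two_sided_decision(t_practice, 1.7171))
```

Keeping the decision rule in its own function makes it easy to reuse the same check for the Z, F-informed, and t cases with whatever tabled critical value applies.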