Protein_Fold_Recognition_Report

advertisement

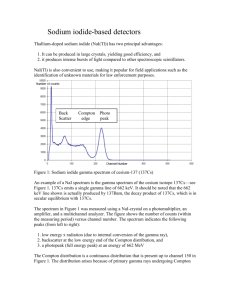

alignment method for fold recognition, one could also Protein Fold Recognition use information retrieval methods that leverages features A Coursework Project on Data Mining extracted Department of Computer Science University of Missouri-Columbia tools or structure the protein pairs. Instead, it is about using the already extracted features, (1) to derive a model that will represent the examples, and (2) to evaluate the process was model derived. Abstract Protein fold recognition is a well-known problem in the bioinformatics. approaches and There algorithms for are many predicting different whether a given protein sequence belongs to a protein family, superfamily, or more specifically to a fold. Here we discuss a classification approach using support vector machines for predicting whether two proteins (a pair) belong to same fold/superfamily/family (any), using existing dataset. Radial basis function was chosen as the kernel. Through 10-fold cross-validation approach of training and testing the best value of gamma and c parameter alignment This project is not about extracting features values from 5/10/2012 of existing prediction tools (Cheng & Baldi, 2006). Badri Adhikari field using were found to be 0.017 and 0.5 2 Methods 2.1 Data Description The input data used for the mining obtained from a previously done research (Cheng & Baldi, 2006) available at http://casp.rnet.missouri.edu/download/linda_lob.bin2. The feature values in data were computed using different methods and tools. The sequences and models (Lindahl & Elofsson, 2000) used to generate these features are derivation of SCOP database. respectively. Results show that although the accuracy The dataset has 951,600 examples out of which 7,438 and is are +1 labeled examples and the rest 944,162 are -1 extremely low, below 5%. The area under the curve for labeled examples. There are 84 features that have the final model was found to be 0.874. The project is different information gain values. Each example has two primarily based on previously published work (Cheng & rows: title row - that begins with a hash which has Baldi, 2006) and is being continued as a research protein pair name along with the structure classification project. and feature row - that has feature values used to precision are above 80%, the precision Supplementary Information: Supplementary report, codes and the source are data, available this describe the pair of protein in the title row. at http://web.missouri.edu/~bap54/protein_fold_recognition_ 2012/ 1 Introduction Protein fold recognition methods are the methods developed to know the structural relationship between proteins. These methods are usually either predictionbased methods, structural methods or sequence-based methods or combination of these methods (Lindahl & Elofsson, 2000). Instead of developing one specialized Figure 1 A portion of input dataset. Title rows begin with a hash and others are feature rows. 2.2 Data Preprocessing The examples were evenly split into 10 subsets for 10fold cross-validation. However, this was not done 1 of 4 randomly. At first, examples having the same query protein were grouped together. This resulted in a cluster of size 976 because there are 976 unique proteins in the available data. Ten subsets of data were created for 10-fold cross-validation, using these 976 clusters of examples, without breaking the clusters. This was done so that the training dataset does not contain any of the query proteins that will be used in testing. 2.3 Training and Classification While using SVMlight, instead of randomly picking up kernels and trying different parameters, a support vector classification guide (Hsu, Chang, & Lin, 2010) was followed. The RBF kernel considered for K(x, y) generating the = was model. Then, 10-fold To deal with the problem of biased examples that we cross-validation was performed to determine the value had, a test was conducted to see if balancing helps of gamma and c. In each iteration, 9 subsets were improve used for training and the remaining set was used as the performance. Two random training and testing sets were prepared. Using the previously known values of gamma, learning and classification were performed. The area under the curve were computed for the two models and were found to be 0.82 and 0.87 as shown in the ROC curve below. the test set. To find the value of gamma, at first gamma values between 0 and 1 were considered with the step size of 0.1. Better performance was observed at the values close to 0.1, so more precise values of gamma were Keeping the two test sets as is, the training sets were used balanced performance by filtering away most of the negative examples so that there were equal number of negative to repeat of the the process. classifier Figure for 3 values shows of the gamma between 0 and 0.19 with step-size of 0.01. and positive examples. Again, using the same value of gamma, learning and classification were performed, with exactly same test sets. The area under the curve was found to be 0.94 and 0.93. Surprisingly, it is observed that balancing of examples actually improves the performance of the training and classification results. Sensitivity Accuracy Precision 120 100 80 60 40 20 0 0.01 0.02 0.03 0.04 0.05 0.06 0.07 0.08 0.09 0.1 0.11 0.12 0.13 0.14 0.15 0.16 0.17 0.18 0.19 0 Figure 3 Average values of sensitivity versus specificity for different values of gamma. Figure 2 Comparison of four models: filtered 01 and 02 are models generated from balanced training data and unfiltered 01 and 02 are models generated from the training data as it. The same test data were used for the models generated. 2 of 4 Sensivity Sensitivity Specificity 85.2 100 85 80 84.8 60 84.6 Accuracy Precision 40 84.4 20 0 0.0025 0.0050 0.0075 0.0100 0.0125 0.0150 0.0175 0.0200 0.0225 0.0250 0.0275 0.0300 0.0325 0.0350 0.0375 0.0400 0.0425 0.0450 0.0475 84 0 0.05 0.1 0.15 0.2 0.25 0.3 0.35 0.4 0.45 0.5 0.55 0.6 0.65 0.7 0.75 0.8 0.85 0.9 0.95 84.2 C parameter gamma Figure 4 More precise values of gamma against average sensitivity and specificity. Figure 5 Average values of sensitivity, accuracy and precision against a range of values of c parameter of the RBF kernel function. Upon finding that the classifier is performing best near the gamma values of 0.01, another round of training and testing was conducted with gamma starting from 0.0025 to 0.0475, to find a more precise value of gamma. Figure 4 shows the performance. It is evident that there is no distinguishably best value of gamma. However, we can observe that the sensitivity and specificity are highest at gamma equal to 0.0175. To determine the best value of c, training The support vector machine implementation tool used was SVMlight. The tool is basically two programs: svm_learn for training and svm_classify for classification. and classification was performed with a range of values of c from 0 to 1, as shown in figure 5, keeping gamma constant as 0.0175. We observe that, at c-values higher than 0.05, there is not much impact of the values in any of the measurements. Any value of c greater than 0.1 and less than 1 seems appropriate. 2.4 Tools Used Using the tool is quite straightforward. $svm_learn example1/train.dat example1/model $svm_classify example1/test.dat example1/model example1/prediction Perl was used as the scripting language for all data transformations, pre-processing and calculations. R was used to plot the ROC curves and for calculating area under the curves. For other graphs, MS Excel was used. 3 Results The final model was built using gamma equal to 0.0175 and c value equal to 0.5. Figure 6 shows that the area under the curve for the model is 0.874. 3 of 4 Cheng, J., & Baldi, P. (2006). A machine learning information retireval approach to protein fold recognition. Bioinformatics. Hsu, C.-W., (2010). A Chang, Practical C.-C., Guide to & Lin, C.-J. Support Vector Classi. National Taiwan University. Lindahl, E., & Elofsson, A. (2000). Identification of Related Proteins on Family, Superfamily and Fold Level. JMB. Figure 6 ROC curve for the final model with area under curve equal to 0.874 shown with different cutoff values represented by different colors. 4 Future Works Following are the future works planned: 1. Perform selectively more precise removing balancing the data of data by instead of randomly filtering them out. Instead of applying filtering on the whole training set, it should be applied to individual cluster of examples. This could make the examples more discriminative. 2. Perform grid-search approach to find the best values of gamma and c for the RBF kernel. 3. Perform classification at more specific levels: family level, superfamily level and fold level. 4. Apply neural network algorithms for classification. 5. Apply random forest algorithm for classification. 6. Use different feature selection methods to improve accuracy. 7. Generate new features for each pair of proteins to improve prediction accuracy. References 4 of 4