Delay of reinforceme..

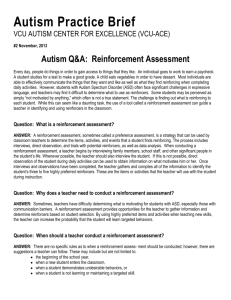
