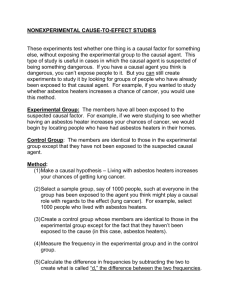

Normative Theory and Descriptive Psychology in Understanding
