Guiding Questions

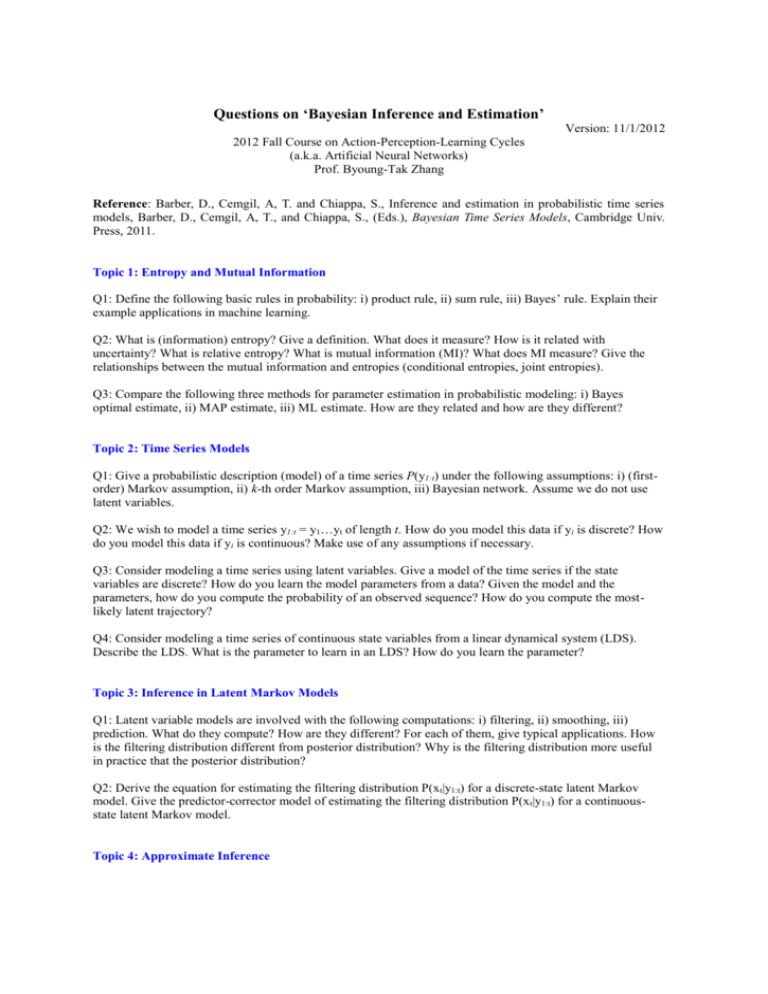

Questions on ‘Bayesian Inference and Estimation’
Version: 11/1/2012
2012 Fall Course on Action-Perception-Learning Cycles (a.k.a. Artificial Neural Networks)
Prof. Byoung-Tak Zhang

Reference: Barber, D., Cemgil, A. T., and Chiappa, S., ‘Inference and estimation in probabilistic time series models’, in Barber, D., Cemgil, A. T., and Chiappa, S. (Eds.), Bayesian Time Series Models, Cambridge Univ. Press, 2011.

Topic 1: Entropy and Mutual Information
Q1: Define the following basic rules in probability: i) product rule, ii) sum rule, iii) Bayes’ rule. Explain example applications of each in machine learning.
Q2: What is (information) entropy? Give a definition. What does it measure? How is it related to uncertainty? What is relative entropy? What is mutual information (MI)? What does MI measure? Give the relationships between mutual information and entropies (conditional entropies, joint entropies).
Q3: Compare the following three methods for parameter estimation in probabilistic modeling: i) Bayes optimal estimate, ii) MAP estimate, iii) ML estimate. How are they related, and how do they differ?

Topic 2: Time Series Models
Q1: Give a probabilistic description (model) of a time series $P(y_{1:t})$ under each of the following assumptions: i) (first-order) Markov assumption, ii) k-th order Markov assumption, iii) Bayesian network. Assume no latent variables are used.
Q2: We wish to model a time series $y_{1:t} = y_1 \ldots y_t$ of length $t$. How do you model this data if $y_i$ is discrete? How do you model it if $y_i$ is continuous? Make any assumptions necessary.
Q3: Consider modeling a time series using latent variables. Give a model of the time series when the state variables are discrete. How do you learn the model parameters from data? Given the model and its parameters, how do you compute the probability of an observed sequence? How do you compute the most likely latent trajectory?
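Topic 2, Q3 asks how to compute the probability of an observed sequence and the most likely latent trajectory when the latent states are discrete (a hidden Markov model). The following is a minimal Python sketch of the standard forward recursion and the Viterbi algorithm, assuming an initial distribution `pi`, transition matrix `A`, and emission matrix `B` given as nested lists; these names and the two-state example values are hypothetical, chosen only for illustration. It works with raw probabilities, so for long sequences one would rescale or work in log space to avoid underflow.

```python
def forward(pi, A, B, obs):
    """P(y_1:T) for a discrete HMM via the forward recursion.

    pi[i]   = P(x_1 = i)
    A[i][j] = P(x_t = j | x_{t-1} = i)
    B[i][k] = P(y_t = k | x_t = i)
    obs     = observed symbol indices y_1..y_T
    """
    n = len(pi)
    # alpha[i] = P(y_1:t, x_t = i), initialised at t = 1
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for y in obs[1:]:
        # Sum over the previous state, then multiply by the emission probability
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][y]
                 for j in range(n)]
    return sum(alpha)  # marginalise the final state


def viterbi(pi, A, B, obs):
    """Most likely latent path for obs, and its joint probability P(x_1:T, y_1:T)."""
    n = len(pi)
    delta = [pi[i] * B[i][obs[0]] for i in range(n)]
    backptrs = []
    for y in obs[1:]:
        ptr, new_delta = [], []
        for j in range(n):
            # Best predecessor of state j (max replaces the sum of the forward pass)
            best = max(range(n), key=lambda i: delta[i] * A[i][j])
            ptr.append(best)
            new_delta.append(delta[best] * A[best][j] * B[j][y])
        backptrs.append(ptr)
        delta = new_delta
    # Backtrack from the best final state
    state = max(range(n), key=lambda i: delta[i])
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    return path[::-1], max(delta)


# Hypothetical two-state, three-symbol example
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]]
obs = [0, 1, 2]
print(forward(pi, A, B, obs))   # ≈ 0.03628
print(viterbi(pi, A, B, obs))   # ([0, 0, 1], ≈ 0.01512)
```

The two functions share the same recursion structure; Viterbi simply replaces the sum over the previous state with a max and records which state achieved it.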
Q4: Consider modeling a time series of continuous state variables with a linear dynamical system (LDS). Describe the LDS. What are the parameters to learn in an LDS? How do you learn them?

Topic 3: Inference in Latent Markov Models
Q1: Inference in latent variable models involves the following computations: i) filtering, ii) smoothing, iii) prediction. What does each compute? How do they differ? Give typical applications of each. How is the filtering distribution different from the posterior distribution? Why is the filtering distribution more useful in practice than the posterior distribution?
Q2: Derive the equation for estimating the filtering distribution $P(x_t | y_{1:t})$ for a discrete-state latent Markov model. Give the predictor-corrector recursion for estimating the filtering distribution $P(x_t | y_{1:t})$ for a continuous-state latent Markov model.

Topic 4: Approximate Inference
Q1: Variational Bayes is a deterministic method for approximate inference. Why is it necessary or useful? What is its basic idea? What objective function is minimized or maximized? Derive it. How is the objective function computed?

Topic 5: Monte Carlo Inference
Q1: What is Monte Carlo (MC) approximation? Under what conditions is the MC approximation exact? What is the basic idea of Markov chain Monte Carlo (MCMC)? How does the Metropolis-Hastings (MH) algorithm sample from the target distribution? What is burn-in, and why is it necessary? What is the acceptance probability of MH? What is the transition kernel of MH? Prove that the invariant distribution of MH is the target distribution, i.e., prove the detailed balance property.
Q2: Describe the Gibbs sampling algorithm. Compare it with MH. Is it a special case of MH? If so, how?
Q3: Explain the basic idea of importance sampling (IS). Is IS an MCMC method? How does IS address the problem of sampling from the target distribution? What constraints must a proposal distribution satisfy?
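Topic 5, Q1 and Q3 contrast MCMC with importance sampling. As a minimal sketch (not taken from the reference), the Python below estimates the same expectation, $E_p[x^2] = 1$ for a standard normal target known only up to its normalising constant, in two ways: a random-walk Metropolis-Hastings chain with a burn-in period, and self-normalised importance sampling. The target, the $N(0, 2^2)$ proposal, the step size, and the sample sizes are hypothetical choices. Because the random-walk proposal is symmetric, the MH acceptance probability reduces to $\min(1, \tilde{p}(x')/\tilde{p}(x))$; the IS proposal is deliberately wider than the target so that it is nonzero wherever the target is.

```python
import math
import random


def log_p_tilde(x):
    """Unnormalised log target: standard normal without its constant."""
    return -0.5 * x * x


def metropolis_hastings(log_target, x0, step, n, burn_in, rng):
    """Random-walk MH with a symmetric Gaussian proposal."""
    x, lp = x0, log_target(x0)
    samples = []
    for i in range(burn_in + n):
        x_new = x + rng.gauss(0.0, step)
        lp_new = log_target(x_new)
        # Accept with probability min(1, p(x') / p(x)); the symmetric q terms cancel
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            x, lp = x_new, lp_new
        if i >= burn_in:          # discard the burn-in portion of the chain
            samples.append(x)
    return samples


def self_normalized_is(f, log_target, sample_q, log_q, n, rng):
    """Estimate E_p[f(x)] from proposal draws weighted by p~(x) / q(x)."""
    xs = [sample_q(rng) for _ in range(n)]
    log_w = [log_target(x) - log_q(x) for x in xs]
    m = max(log_w)                # shift by the max for numerical stability
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    estimate = sum(wi * f(x) for wi, x in zip(w, xs)) / total
    ess = total ** 2 / sum(wi * wi for wi in w)   # effective sample size
    return estimate, ess


rng = random.Random(0)

# MH: start far from the mode (x0 = 5) so that burn-in matters
chain = metropolis_hastings(log_p_tilde, x0=5.0, step=1.0, n=100_000,
                            burn_in=1_000, rng=rng)
mh_estimate = sum(x * x for x in chain) / len(chain)

# IS: proposal q = N(0, 2^2), wider than the target
sigma_q = 2.0
is_estimate, ess = self_normalized_is(
    f=lambda x: x * x,
    log_target=log_p_tilde,
    sample_q=lambda r: r.gauss(0.0, sigma_q),
    log_q=lambda x: -0.5 * (x / sigma_q) ** 2
    - math.log(sigma_q * math.sqrt(2 * math.pi)),
    n=100_000,
    rng=rng,
)
print(mh_estimate, is_estimate, ess)  # both estimates should be close to 1
```

The MH samples are correlated and only approximately distributed as $p$ after burn-in, whereas the IS draws are independent but unequally weighted; the effective sample size gives a rough measure of how much the weighting costs.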
Q4: How can IS be turned into a sequential (recursive) estimation algorithm? Derive the recursive weight update rule for sequential importance sampling (SIS).
Q5: What is resampling? Why is it useful? Combined with resampling, SIS is also called particle filtering. Give the procedure for particle filtering, i.e., sequential importance sampling with resampling, also known as sequential importance resampling (SIR).
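Topic 5, Q4 and Q5 lead to SIS with resampling (SIR). A minimal Python sketch, assuming a hypothetical one-dimensional linear-Gaussian model $x_1 \sim N(m_0, p_0)$, $x_t = a x_{t-1} + N(0, q)$, $y_t = x_t + N(0, r)$, and using the transition density as the proposal (the "bootstrap" filter). In general the SIS weight update is $w_t \propto w_{t-1}\, p(y_t|x_t)\, p(x_t|x_{t-1}) / q(x_t|x_{t-1}, y_t)$; with the transition as proposal it reduces to $w_t \propto w_{t-1}\, p(y_t|x_t)$, and after multinomial resampling $w_{t-1}$ is uniform. Because this model is linear-Gaussian, the exact filtering mean is also available from a Kalman filter, which the sketch computes only as a reference. The model parameters, particle count, and resample-every-step policy are illustrative assumptions.

```python
import math
import random


def simulate(T, a, q, r, m0, p0, rng):
    """Draw a state trajectory and observations from the hypothetical model."""
    xs, ys = [], []
    x = rng.gauss(m0, math.sqrt(p0))
    for t in range(T):
        if t > 0:
            x = a * x + rng.gauss(0.0, math.sqrt(q))
        xs.append(x)
        ys.append(x + rng.gauss(0.0, math.sqrt(r)))
    return xs, ys


def bootstrap_particle_filter(ys, n, a, q, r, m0, p0, rng):
    """SIR: propagate, weight by the likelihood, estimate, resample."""
    particles = [rng.gauss(m0, math.sqrt(p0)) for _ in range(n)]
    means = []
    for t, y in enumerate(ys):
        if t > 0:
            # Sample from the proposal = transition prior p(x_t | x_{t-1})
            particles = [a * x + rng.gauss(0.0, math.sqrt(q)) for x in particles]
        # Incremental weight p(y_t | x_t); previous weights are uniform after resampling
        log_w = [-0.5 * (y - x) ** 2 / r for x in particles]
        m = max(log_w)
        w = [math.exp(lw - m) for lw in log_w]
        total = sum(w)
        w = [wi / total for wi in w]
        # Filtering mean E[x_t | y_1:t] from the weighted particles
        means.append(sum(wi * x for wi, x in zip(w, particles)))
        # Multinomial resampling to counter weight degeneracy
        particles = rng.choices(particles, weights=w, k=n)
    return means


def kalman_filter(ys, a, q, r, m0, p0):
    """Exact filtering means for the same linear-Gaussian model (reference only)."""
    m, p = m0, p0
    means = []
    for t, y in enumerate(ys):
        if t > 0:
            m, p = a * m, a * a * p + q          # predict
        k = p / (p + r)                          # Kalman gain
        m, p = m + k * (y - m), (1.0 - k) * p    # correct
        means.append(m)
    return means


rng = random.Random(1)
params = dict(a=0.9, q=1.0, r=0.5, m0=0.0, p0=1.0)
xs, ys = simulate(50, rng=rng, **params)
pf_means = bootstrap_particle_filter(ys, n=2000, rng=rng, **params)
kf_means = kalman_filter(ys, **params)
max_gap = max(abs(u - v) for u, v in zip(pf_means, kf_means))
print(max_gap)  # should be small for a large particle count
```

Resampling at every step is the simplest policy; a common alternative is to resample only when the effective sample size of the weights drops below a threshold.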