Basic Signal Processing


Signal Types

Static signals do not vary with time. In cases in which the physical quantity to be measured is

static, measurement noise can make the measured data vary somewhat with time, so the signal

may be dynamic even if the measured quantity is not.

Dynamic signals (or portions of signals) can be classified as:

Transient

Steady-state periodic

Continuous nonperiodic

A particular measured signal may have components of each type, e.g., there may be an initial

transient followed by periodic motion.

Transient Signals

Simple transient signals: step function, ramp, impulse

Short-duration oscillatory signals: tone burst, chirp

More complicated signals: includes response to initial conditions or transient inputs

Transient responses often are characterized by exponential growth or decay

Steady-state periodic signals

Single-frequency sinusoids

Square wave, sawtooth, etc. (multiple frequency components in particular ratios)

More complicated superposition of multiple sinusoids of various frequencies

Any periodic signal can be represented by a sum of sinusoids (Fourier Series)

Continuous nonperiodic signals

Noise or other random signals

Quasiperiodic signals (multiple frequencies that are incommensurable: ratio is irrational)

Chaotic signals (apparently random but actually deterministic)

The rate at which a transient signal evolves (slope of a ramp, exponential decay rate, etc.) can be

observed in time domain. Certain other characteristics of signals (either transient or continuous)

can be calculated from time domain data. Signals that involve oscillations (periodic or not) are

often better analyzed in frequency domain.

Basic Time Domain Processing

The mean of a signal gives the component of the signal that remains constant. It is sometimes

called the temporal mean to emphasize that it is the average over time of a single quantity (as

opposed to the average value of multiple quantities). It is also called the offset or DC component

(from electronics, where constant Direct Current is distinguished from oscillating Alternating

Current). The AC component is the signal with the DC component removed (i.e., $x_{AC}(t) = x(t) - \bar{x}$).

The RMS value (root-mean-squared) is a measure of how far the signal deviates from zero. By

squaring the signal before taking the mean, positive and negative portions do not average out.


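Continuous (analog) signal:

mean: $\bar{x} = \dfrac{\int_{t_1}^{t_2} x(t)\,dt}{t_2 - t_1}$

RMS: $x_{RMS} = \sqrt{\dfrac{\int_{t_1}^{t_2} \left(x(t)\right)^2 dt}{t_2 - t_1}}$

Discrete (digital) signal:

mean: $\bar{x} = \dfrac{1}{N}\sum_{i=0}^{N-1} x_i$

RMS: $x_{RMS} = \sqrt{\dfrac{1}{N}\sum_{i=0}^{N-1} x_i^2}$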

If the mean is zero (or has been subtracted from the signal) then the RMS value is a measure of

the amplitude of the oscillations.

Note also that, for a zero-mean signal (or a signal from which the mean has been subtracted), the

formula for RMS is the same as for standard deviation, although the latter term is typically used

for describing the statistics of a population of values rather than a signal.
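The discrete formulas and the link to standard deviation can be checked with a minimal Matlab sketch. The sampling frequency `fs`, the test signal, and all variable names are illustrative assumptions, not values from these notes.

```matlab
% Illustrative signal: offset of 2 plus a 5 Hz sinusoid of amplitude 3 (assumed values)
fs = 1000;                          % sampling frequency in Hz (assumption)
t  = (0:fs-1)/fs;                   % 1 s of samples = exactly 5 periods
x  = 2 + 3*cos(2*pi*5*t);

N     = numel(x);
xmean = sum(x)/N;                   % temporal mean (DC component)
xrms  = sqrt(sum(x.^2)/N);          % RMS of the whole signal
xac   = x - xmean;                  % AC component
acrms = sqrt(sum(xac.^2)/N);        % RMS of the AC component
sigma = std(x, 1);                  % standard deviation normalized by N

fprintf('mean = %.4f, RMS = %.4f, AC RMS = %.4f, std = %.4f\n', xmean, xrms, acrms, sigma);
```

The second argument of `std` selects normalization by N rather than N-1, which is what makes it agree with the RMS of the zero-mean signal.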

Consider a simple sinusoid with an offset, $x(t) = A_0 + A_1\cos(\omega t)$.

By inspection, the DC component is $A_0$ and the AC component is $A_1\cos(\omega t)$.

Strictly speaking, the formula for mean only gives the DC component if the duration of the

signal is an exact integer number of periods of the sinusoid (but it converges to the DC

component as the duration gets large compared to the sinusoid’s period):

$\bar{x} = \dfrac{\int_{t_1}^{t_2} (A_0 + A_1\cos\omega t)\,dt}{t_2 - t_1} = A_0 + A_1\left(\dfrac{\sin\omega t_2 - \sin\omega t_1}{\omega(t_2 - t_1)}\right) \to A_0$ when $\omega(t_2 - t_1) \gg 1$
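As a rough numerical illustration of this limit, the sketch below computes the mean over records that each end a quarter period past a whole number of periods, so the sine term does not vanish. The values of `A0`, `A1`, `f`, and `fs` are assumptions chosen for the example.

```matlab
A0 = 10; A1 = 4; f = 10;            % offset, amplitude, frequency in Hz (illustrative)
fs = 10000;                         % sampling frequency in Hz (assumption)
periods = [3.25 30.25 300.25];      % non-integer numbers of periods measured
err = zeros(size(periods));
for m = 1:numel(periods)
    N = round(periods(m)*fs/f);     % number of samples in the record
    t = (0:N-1)/fs;                 % record starts at t1 = 0
    x = A0 + A1*cos(2*pi*f*t);
    err(m) = abs(mean(x) - A0);     % deviation of the computed mean from A0
end
disp(err)                           % shrinks roughly as 1/(record length)
```

With t1 = 0 and a record of 3.25 periods, the continuous formula predicts a deviation of A1/(2*pi*3.25), about 0.196, and the sampled result should be close to that.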

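The RMS value of the entire signal is

$x_{RMS} = \sqrt{\dfrac{\int_{t_1}^{t_2} (A_0 + A_1\cos\omega t)^2\,dt}{t_2 - t_1}} = \sqrt{\dfrac{\int_{t_1}^{t_2} \left(A_0^2 + 2A_0A_1\cos\omega t + A_1^2\cos^2\omega t\right)dt}{t_2 - t_1}}$

$\quad = \sqrt{\dfrac{\int_{t_1}^{t_2} \left(A_0^2 + 2A_0A_1\cos\omega t + \tfrac{1}{2}A_1^2(1 + \cos 2\omega t)\right)dt}{t_2 - t_1}} \to \sqrt{A_0^2 + \tfrac{1}{2}A_1^2}$ when $\omega(t_2 - t_1) \gg 1$

The RMS value of the AC component is

$(x_{AC})_{RMS} = \sqrt{\dfrac{\int_{t_1}^{t_2} A_1^2\cos^2\omega t\,dt}{t_2 - t_1}} = \sqrt{\dfrac{\int_{t_1}^{t_2} \tfrac{1}{2}A_1^2(1 + \cos 2\omega t)\,dt}{t_2 - t_1}} \to \sqrt{\tfrac{1}{2}A_1^2} = \dfrac{A_1}{\sqrt{2}}$ when $\omega(t_2 - t_1) \gg 1$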

Note that

$(\text{RMS of the entire signal})^2 = (\text{mean of signal})^2 + (\text{RMS of AC component})^2$

This means that subtracting the mean from the RMS value does not give the same result

as subtracting the mean from the signal and calculating the RMS value of the remaining AC part.

For example, if $x(t) = 10 + 4\cos(\omega t)$,

The mean is 10,

The RMS value is $x_{RMS} = \sqrt{10^2 + \tfrac{1}{2}4^2} = \sqrt{108} = 10.39$,

The RMS of the AC component $4\cos(\omega t)$ is $\sqrt{\tfrac{1}{2}4^2} = \sqrt{8} = 2.83$.
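The worked example can be reproduced numerically. This is a small sketch in which the sampling frequency, signal frequency, and record length are assumptions chosen so that the record holds a whole number of periods.

```matlab
fs = 1000; f = 5;                         % sampling and signal frequencies in Hz (assumptions)
t  = (0:2*fs-1)/fs;                       % 2 s record = 10 full periods
x  = 10 + 4*cos(2*pi*f*t);

xmean = mean(x);                          % 10
xrms  = sqrt(mean(x.^2));                 % sqrt(108), about 10.39
acrms = sqrt(mean((x - xmean).^2));       % sqrt(8), about 2.83
naive = xrms - xmean;                     % about 0.39, which is NOT the RMS of the AC part
check = xmean^2 + acrms^2 - xrms^2;       % zero to rounding error

fprintf('RMS = %.2f, AC RMS = %.2f, RMS minus mean = %.2f\n', xrms, acrms, naive);
```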

Conversion to Frequency Domain

For measured signals that involve oscillatory behavior, it is typical to analyse data in the

frequency domain rather than the time domain.

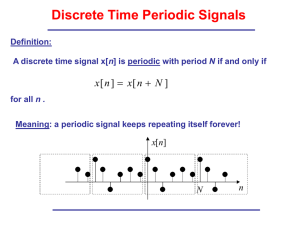

A Fourier series can be used to convert a continuous, analytic function of time that is periodic

into a (possibly infinite) series (summation) of sinusoids, with periods that are integer fractions

of the overall period T of the signal (i.e., frequencies $\omega_n = 2\pi n/T$ for n = 1, 2, 3, …).

Some functions may be represented by a finite series, others may require an infinite series

for perfect equivalence but can be approximated by a truncated series.

The coefficients in front of the sinusoids comprise a discrete set of information in

frequency domain.

There is a “sine/cosine” formulation for the Fourier series, and a mathematically

equivalent “complex exponential” formulation, since $e^{\pm i\omega t} = \cos(\omega t) \pm i\sin(\omega t)$. Recall

that any specific sinusoid has one frequency and two additional numbers that can be

specified as:

o magnitude and phase, as in $x(t) = C\cos(\omega t + \phi)$

o amplitudes of the cosine and sine portions, as in $x(t) = A\cos\omega t + B\sin\omega t$

o real and imaginary parts of the two complex-conjugate amplitudes of counter-rotating imaginary exponentials, as in $x(t) = c\,e^{i\omega t} + c^{*}e^{-i\omega t}$

o real and imaginary parts of a single complex amplitude, as in $x(t) = \mathrm{Re}\{\hat{C}e^{i\omega t}\}$

While the sinusoidal formulations are more intuitive, the exponential formulations are

more useful mathematically.
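The four descriptions listed above are related by $A = C\cos\phi$, $B = -C\sin\phi$, $\hat{C} = Ce^{i\phi}$, and $c = \hat{C}/2$. A short Matlab sketch can confirm that they produce the same waveform; the numerical values of `w`, `C`, and `phi` are arbitrary assumptions.

```matlab
w = 2*pi*3;                 % angular frequency in rad/s (illustrative)
C = 2; phi = pi/6;          % magnitude and phase (illustrative)

A    = C*cos(phi);          % cosine amplitude
B    = -C*sin(phi);         % sine amplitude
Chat = C*exp(1i*phi);       % single complex amplitude
c    = Chat/2;              % amplitude of the positive-frequency exponential

t  = linspace(0, 1, 500);
x1 = C*cos(w*t + phi);                          % magnitude and phase
x2 = A*cos(w*t) + B*sin(w*t);                   % cosine and sine amplitudes
x3 = c*exp(1i*w*t) + conj(c)*exp(-1i*w*t);      % conjugate pair of exponentials
x4 = real(Chat*exp(1i*w*t));                    % real part of one exponential

maxdiff = max(abs([x2 - x1, real(x3) - x1, x4 - x1]));   % all forms agree
maximag = max(abs(imag(x3)));                             % the conjugate pair sums to a real signal
```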


A Fourier transform can be used on any analytic function (it need not be periodic). It converts a

continuous, analytic function of time into a continuous, analytic function of frequency.

It is an extension of the Fourier series to deal with functions whose period is infinite (the signal never

repeats).

As $T \to \infty$, the spacing between frequencies shrinks to zero, and the summation over

contributions at various specific frequencies becomes an integral over all frequencies.

The Discrete Fourier Transform converts a discrete series of sampled values at various times into

a discrete series of coefficients at various frequencies.

Experimental work always deals with signals of finite duration, so there’s never a need to

assume infinite period: the measurement period sets an upper bound on the apparent

period of the signal.

Signal processing carried out digitally, such as on a computer, deals with discrete signals

in time (rather than continuous).

| to convert | a continuous function of time x(t) | a discrete series x0, x1, x2, x3, x4, etc. |
|---|---|---|
| into a discrete series of frequency coefficients | Fourier Series | Discrete Fourier Transform |
| into a continuous function of frequency, X(f) | Fourier Transform | |

The DFT is the main tool for obtaining the frequency content of measured signals. The most

commonly used algorithm for computing a DFT quickly is called the Fast Fourier Transform

(FFT). The FFT is so common that the terms DFT and FFT are often used interchangeably.

The DFT can be applied to periodic and nonperiodic signals: the mathematics assumes that the

entire measured signal repeats itself… thus the measurement period determines which

frequencies correspond to the various coefficients in the resulting set (as opposed to any period

of components of the signal itself).

If the signal is a simple sinusoid with an integer number of periods occurring within the

measurement period (or a superposition of multiple sinusoids all of which have an integer

number of periods within the measurement period) then the DFT coefficients are exact

representations of that (or those) sinusoid amplitude(s).

However, for any other signal (periodic but noninteger number of periods measured,

nonperiodic continuous signals, or transient signals) the DFT can still be used but there is

some ambiguity in the meaning of the resulting coefficients.


The figure shows three measurements of the same periodic signal (a sine wave at 10 Hz).

In the top and bottom, the measurement period is 3 and 4 times the signal period, respectively,

and the frequency spectrum resulting from a DFT shows peak amplitude 1 at a frequency 10 Hz

(frequency resolution is 3.33 Hz and 2.5 Hz respectively, the reciprocals of 0.3 and 0.4 seconds).

In the middle, the measurement period is 3.5 times the signal period (a non-integer). The

frequency resolution is 2.857 Hz (reciprocal of 0.35 seconds). The coefficients for 8.571 and

11.429 Hz (those closest to 10 Hz) are both high, but neither is the correct sine amplitude of 1.

Also, other, non-neighboring frequencies have non-zero coefficients, due to spectral leakage.
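The three cases described above can be reproduced approximately with the following sketch. The sampling frequency `fs` is an assumption (the notes do not state it), and the amplitude scaling used is the one-sided peak scaling 2|X_k|/N.

```matlab
fs = 1000; f0 = 10;                     % sampling frequency (assumption) and signal frequency, in Hz
Tmeas = [0.3 0.35 0.4];                 % measurement periods in seconds
peak = zeros(size(Tmeas));
for m = 1:numel(Tmeas)
    N  = round(Tmeas(m)*fs);            % samples in the record
    t  = (0:N-1)/fs;
    x  = sin(2*pi*f0*t);                % unit-amplitude 10 Hz sine
    X  = fft(x);
    amp = 2*abs(X(2:floor(N/2)))/N;     % one-sided peak amplitudes, DC and Nyquist bins excluded
    df  = fs/N;                         % frequency resolution = 1/Tmeas
    peak(m) = max(amp);
    fprintf('Tmeas = %.2f s, df = %.3f Hz, largest coefficient = %.3f\n', Tmeas(m), df, peak(m));
end
```

For the 0.3 s and 0.4 s records the largest coefficient equals the true amplitude of 1. For the 0.35 s record it falls well below 1 because the energy is spread over neighboring bins.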

Spectral leakage comes from the broad frequency character of the discontinuities created at the

edges of the measurement period by the mathematical assumption that the measurement repeats

periodically:

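[Figure: x versus t over one measurement period Tmeas, repeated periodically, with a discontinuity at each edge of the measurement period]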

It can be minimized by applying an appropriate window to the measured data (see below).


This figure shows a similar effect, except that the signal is transient, not periodic.

The signal is an exponentially decaying sinusoid with frequency 20 Hz. The DFT relies on the

mathematical assumption that the signal repeats (every 0.3, 0.35, or 0.4 seconds, depending on

measurement period).

Since the signal itself is not actually periodic, no measurement period can be an integer multiple

of the signal period. In all cases, a variety of sinusoids are needed to construct the transient

signal, with the largest at and near 20 Hz (the frequency of the oscillatory component of the

signal).

Also, the overall height of the DFT result is scaled differently depending on the length of the

measurement period.

The DFT can be used to determine the dominant frequency component(s) in a non-periodic

signal, and compare relative amplitude levels, but care must be taken in interpreting amplitude

levels in any absolute sense.

Loosely, the amplitude of the 20 Hz frequency component in the DFT is the average amplitude

of the 20 Hz signal in time domain (the actual amplitude changes as the sinusoid decays).

Naturally, using a longer measurement period reduces the average level since there are a greater

number of very low amplitude samples (from the later time steps) included in the average.
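The dependence of the peak height on measurement period can be illustrated with a sketch like the one below. The sampling frequency `fs` and decay time constant `tc` are assumptions, since the notes give only the 20 Hz frequency and the three measurement periods.

```matlab
fs = 1000; f0 = 20; tc = 0.05;          % fs (Hz) and tc (s) are assumptions; f0 = 20 Hz
Tmeas = [0.3 0.35 0.4];                 % measurement periods in seconds
peak  = zeros(size(Tmeas));
fpeak = zeros(size(Tmeas));
for m = 1:numel(Tmeas)
    N = round(Tmeas(m)*fs);
    t = (0:N-1)/fs;
    x = exp(-t/tc).*sin(2*pi*f0*t);     % exponentially decaying 20 Hz sinusoid
    amp = 2*abs(fft(x))/N;              % peak-amplitude scaling
    [peak(m), k] = max(amp(2:floor(N/2)));   % k is the bin number (DC excluded)
    fpeak(m) = k*fs/N;                  % frequency of the largest coefficient in Hz
end
disp([Tmeas; fpeak; peak])
```

The largest coefficient stays at or near 20 Hz in each case, while its height drops as the record gets longer, in line with the averaging argument above.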


DFT Formulas

Forward DFT: $X_k = (1)\sum_{n=0}^{N-1} x_n\,e^{-i2\pi kn/N}$

Inverse DFT: $x_n = \left(\dfrac{1}{N}\right)\sum_{k=0}^{N-1} X_k\,e^{i2\pi kn/N}$

The arguments of the exponentials are identical except for sign.

The product of the leading factors, (1) and (1/N), must be 1/N. Some DFT formulations

give them as ($1/\sqrt{N}$ and $1/\sqrt{N}$) or (1/N and 1) rather than (1 and 1/N).
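To make the two formulas concrete, the sketch below evaluates them directly as matrix products and compares the result with Matlab's built-in `fft`, which uses the (1 and 1/N) convention. The test series is arbitrary, and the direct matrix form is only for illustration since it is far slower than an FFT.

```matlab
x = [1 2 0 -1 3 0.5 -2 1];              % arbitrary real test series
N = numel(x);
n = 0:N-1;                              % time index (row)
k = (0:N-1).';                          % frequency index (column)

W = exp(-1i*2*pi*k*n/N);                % N-by-N matrix of exp(-i*2*pi*k*n/N)
X = W*x(:);                             % forward DFT with leading factor 1
xback = (1/N)*(W'*X);                   % inverse DFT with factor 1/N; W' is the conjugate transpose

errfft = max(abs(X - fft(x(:))));       % agreement with the built-in fft
errinv = max(abs(xback - x(:)));        % round trip recovers the original series
```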

The time domain information is x0, x1, x2, ... xN-1, where each xn is typically a real number.

The frequency domain data is X0, X1, X2,... where each Xk represents the portion of the signal that

occurs at a certain frequency fk. Each Xk (except X0) is typically a complex number, containing

two pieces of information: the real part and the imaginary part (or, alternatively, the magnitude

and phase).

Thus, N unique xn’s will only lead to N/2 unique Xk’s.

Many FFT algorithms are two-sided algorithms, returning N complex numbers rather than N/2.

Often, we number the second half with negative indices as an indication that they are the

complex conjugates of numbers from the first half.

Since the magnitude of a number is the same as the magnitude of its complex conjugate, a plot of

the magnitude of a two-sided FFT is symmetric.

[Figure: two alternative plots of two-sided FFT magnitude]

Likewise, since the phase of a number and the phase of its complex conjugate are the same

numbers but with opposite sign, a plot of the phase of a two-sided FFT has a second half that’s

the negative of the first half.

A one-sided FFT plot includes only X0 to XN/2, and all the Xk’s except X0 are doubled so that the

area under the curve remains the same as the two-sided case.
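A one-sided amplitude spectrum can be built from the two-sided FFT roughly as follows. The signal and sampling values are assumptions. One refinement beyond the description above: for even N this sketch leaves the last term (at N/2) undoubled, because it has no complex-conjugate partner in the second half.

```matlab
fs = 100; N = 200;                      % sampling frequency in Hz and record length (assumptions)
t  = (0:N-1)/fs;
x  = 1.5 + 2*cos(2*pi*5*t) + 0.5*sin(2*pi*12*t);

X   = fft(x);
two = abs(X)/N;                         % two-sided amplitude, indices k = 0..N-1
one = two(1:N/2+1);                     % keep X_0 through X_{N/2}
one(2:end-1) = 2*one(2:end-1);          % double all but X_0 and X_{N/2}
f   = (0:N/2)*fs/N;                     % frequency axis in Hz

symerr = max(abs(two(2:end) - two(end:-1:2)));   % |X_k| equals |X_{N-k}|
```

Here `one(f == 5)` and `one(f == 12)` recover the amplitudes 2 and 0.5, and `one(1)` recovers the offset 1.5.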


Scaling of Frequency Domain Data

DFT data are usually scaled to be more physically meaningful:

Amplitude Spectrum (Peak)

The actual amplitude (from zero to peak) of the sinusoidal component at any given frequency

is found by dividing the magnitude of the DFT by N (for a two-sided FFT, assuming the

formulation given above; some DFT formulations include the 1/N in the forward transform).

Amplitude Spectrum (RMS)

Multiplying the peak amplitude spectrum by $1/\sqrt{2}$ gives the root-mean-square (RMS)

amplitude spectrum.

Power Spectrum

The power in a sinusoidal signal is proportional to amplitude squared, so the two-sided

power spectrum is found from the two-sided DFT by $\dfrac{|X_k|^2}{N^2}$ or $\dfrac{(X_k)(X_k^{*})}{N^2}$. Note that the phase

information is lost when calculating power spectra.

Power Spectral Density (PSD)

The PSD is the power spectrum divided by $\Delta f$, which tells how much power there is “per

Hertz” (rather than per frequency bin of width $\Delta f$). If you’re measuring a broadband signal

such as noise, changing the number of samples or the sampling frequency will change the

power spectrum since it changes the bin width $\Delta f$, but will not change the PSD, which is a

measure that is independent of bin width.
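The effect of bin width can be seen with white noise recorded at two different lengths. The sketch below assumes a sampling frequency `fs` and unit-variance noise from `randn`; the results vary slightly from run to run because the data are random.

```matlab
fs = 1000;                              % sampling frequency in Hz (assumption)
Ns = [1000 4000];                       % two record lengths to compare
meanPow = zeros(size(Ns));
meanPsd = zeros(size(Ns));
for m = 1:numel(Ns)
    N   = Ns(m);
    x   = randn(1, N);                  % unit-variance white noise
    X   = fft(x);
    P   = abs(X).^2/N^2;                % two-sided power spectrum
    df  = fs/N;                         % bin width in Hz
    psd = P/df;                         % two-sided power spectral density
    meanPow(m) = mean(P);               % average power per bin, scales as 1/N
    meanPsd(m) = mean(psd);             % average density, about 1/fs for either N
    parseval   = sum(P) - mean(x.^2);   % power summed over bins equals the mean square
end
disp([meanPow; meanPsd])
```

Quadrupling the record length cuts the average power per bin by about a factor of four, while the average PSD stays near 1/fs.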

Noise spectral density

To allow noise to be compared to the amplitude of an actual signal, it is common to take the

square root of the PSD, converting, for example, $\mathrm{V}^2/\mathrm{Hz}$ into $\mathrm{V}/\sqrt{\mathrm{Hz}}$.

In theory, a pure sinusoid has infinite spectral density at the frequency of the sinusoid and zero

everywhere else (it is a Dirac delta function in the frequency domain). However,

(1) this would be evident only if one had infinitesimal frequency resolution (infinite

measurement period),

(2) in reality, there is always some small variation in the frequency of any nominally pure-tone signal, resulting in a peak of finite width and height, and

(3) spectral density is usually only used for discussion of noise and other broadband signals,

not for pure tones.

The power spectrum and power spectral density can be related to the statistics of random

processes (i.e., noise), which means that they can be determined in an alternate manner by

measuring the autocorrelation of a time signal.


Autocorrelation, Cross-correlation, and Coherence

The autocorrelation of a function is a measure of the degree to which the value at one instant is

correlated with the value at a later instant. It is a function of time lag $\tau$ for continuous signals

or, for discrete signals, the number j of samples delayed:

$\phi_{11}(\tau) = \lim_{T\to\infty}\dfrac{1}{T}\int_0^T x(t)\,x(t-\tau)\,dt$ for a continuous function x(t)

$\phi_{11}(j) = \dfrac{1}{N}\sum_{n=0}^{N-1} x_n\,x_{n-j}$ for a discrete series $x_n$ with n = 0 to N-1,

that is, for discrete signals, it is the mean of the products of two samples separated by j time

steps. (Here, we’re assuming x is always real-valued).

It is typically used for random processes, for which it is usually high for small $\tau$ or j and then

much smaller for larger delays (the value of the function at one instant may tell you something

about the value at nearby instants but not much about the values at more distant instants).

Periodic signals (which are non-random) correlate to themselves entirely when $\tau$ or j represents

a delay of exactly an integer number of periods. Thus, the autocorrelation function will also be

periodic.

Note that the autocorrelation at j=0 is simply the mean of the squared signal, that is,

$\phi_{11}(0) = x_{RMS}^2$, and this is the maximum possible autocorrelation.
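A direct evaluation of the discrete formula is sketched below for a periodic signal. The formula needs values of x at negative indices, and this sketch assumes they wrap around to the end of the record (circular indexing), which is an assumption that matches the DFT's view of the record as repeating. The signal parameters are illustrative.

```matlab
fs = 200; N = 400;                      % sampling frequency in Hz and record length (assumptions)
t  = (0:N-1)/fs;
x  = 3*cos(2*pi*10*t);                  % 10 Hz sinusoid: 20 samples per period, 20 periods

phi = zeros(1, N);
for j = 0:N-1
    xshift   = circshift(x, [0 j]);     % xshift(n) = x(n-j), indices wrap around (assumption)
    phi(j+1) = mean(x.*xshift);         % mean of products of samples j steps apart
end

xrms = sqrt(mean(x.^2));
fprintf('phi(0) = %.3f, xrms^2 = %.3f, phi(one period) = %.3f\n', phi(1), xrms^2, phi(21));
```

The zero-lag value equals the mean square of the signal, and the same value recurs at a lag of one full period (20 samples).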

According to the Wiener–Khinchin theorem, the Fourier transform of the autocorrelation

function $\phi_{11}(\tau)$ (or the DFT of $\phi_{11}(j)$ when dealing with discrete signals) is equal to the power

spectral density of the signal. That is, the following two processing sequences are equivalent:

Time series $x_n \xrightarrow{\text{DFT}}$ Fourier coefficients $X_k = \sum_n x_n\,e^{-i2\pi kn/N} \xrightarrow{\text{calculate PSD}} PSD(k) = \dfrac{|X_k|^2}{\Delta f\,N^2}$

and

Time series $x_n \xrightarrow{\text{calculate autocorrelation}} \phi_{11}(j) \xrightarrow{\text{DFT}} PSD(k) = \sum_j \phi_{11}(j)\,e^{-i2\pi kj/N}$
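The equivalence of the two routes can be checked numerically, up to a constant scale factor. The sketch below assumes circular (wrap-around) indexing for the autocorrelation. Under that assumption the DFT of $\phi_{11}(j)$ equals $|X_k|^2/N$ exactly, which has the same shape as the PSD expression above and differs from it only by the constant factor $N\,\Delta f$ (the sampling frequency).

```matlab
N = 256;
x = randn(1, N);                        % arbitrary real test series

phi = zeros(1, N);
for j = 0:N-1
    phi(j+1) = mean(x.*circshift(x, [0 j]));   % circular autocorrelation (assumed wrap-around)
end

X     = fft(x);
viaX  = abs(X).^2/N;                    % route 1: from the Fourier coefficients
F     = fft(phi);                       % route 2: DFT of the autocorrelation
viaAc = real(F);

err     = max(abs(viaX - viaAc));       % the two routes agree to rounding error
imagmax = max(abs(imag(F)));            % imaginary part is only rounding noise
```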

The autocorrelation is a special case of the more general cross-correlation, which measures how

well two different signals are correlated at times separated by delay $\tau$ (or index j):

$\phi_{12}(\tau) = \lim_{T\to\infty}\dfrac{1}{T}\int_0^T x_1(t)\,x_2(t-\tau)\,dt$ for two continuous functions $x_1(t)$ and $x_2(t)$

$\phi_{12}(j) = \dfrac{1}{N}\sum_{n=0}^{N-1} x_{1,n}\,x_{2,n-j}$ for two discrete series $x_1$ and $x_2$

If the two signals have the same behavior in time (they are differently scaled versions of

the same signal), the cross-correlation is maximized at a zero delay ($\tau$ = 0 or j = 0).

If the second signal is a time-shifted version of the first (or a time-shifted and rescaled

version), the cross-correlation is maximum when τ is equal to the amount of shift.

As with the autocorrelation, if both signals are periodic, the cross-correlation will also be

periodic (if they are correlated at one amount of delay, they will also be correlated by

other amounts of delay that differ from the initial amount by the mutual period).

The cross-correlation of two functions is equivalent to the convolution of one function with a

time-reversed (and shifted) version of the other.
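Estimating a delay from the peak of the cross-correlation might look like the sketch below. It assumes circular indexing and uses broadband noise as the test signal. With the sign convention of the formula above, the peak lands at j = d when the first signal lags the second by d samples. All names and values are illustrative.

```matlab
N = 500; d = 37;                        % record length and true delay in samples (illustrative)
x2 = randn(1, N);                       % broadband reference signal
x1 = 0.5*circshift(x2, [0 d]);          % x1(n) = 0.5*x2(n-d): delayed, rescaled copy

phi12 = zeros(1, N);
for j = 0:N-1
    phi12(j+1) = mean(x1.*circshift(x2, [0 j]));   % (1/N)*sum of x1(n)*x2(n-j), circular
end
[~, idx] = max(phi12);
jmax = idx - 1;                         % lag at which the cross-correlation peaks
fprintf('estimated delay = %d samples (true delay = %d)\n', jmax, d);
```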

The Fourier transform (or discrete Fourier transform) of the cross-correlation is the cross-spectral

density (CSD).

The coherence of two signals can be calculated from their cross-spectral density and individual

power spectral densities:

Coherence $C_{12} = \dfrac{|CSD|^2}{PSD_1\,PSD_2}$ for two signals (1 and 2).

The coherence represents the extent to which one signal may be predicted from the other.

If one signal is the input to a linear system and the other is the output, the coherence is the

fraction of the output power that is caused by the input, as a function of frequency (the remainder

of the output is due to noise or other inputs).
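A coherence estimate needs spectra averaged over several records, because the ratio computed from a single record is identically 1. The sketch below splits the data into segments and averages. The segment averaging, the example system `[1 0.5]`, and the noise level are all assumptions added for illustration and are not described in these notes.

```matlab
L = 256; K = 64;                        % segment length and number of segments (assumptions)
x = randn(1, L*K);                      % input: white noise
y = filter([1 0.5], 1, x) + 0.5*randn(1, L*K);   % linear system output plus independent noise

S11 = zeros(1, L); S22 = zeros(1, L); S12 = zeros(1, L);
for m = 1:K
    seg = (m-1)*L + (1:L);              % sample indices of segment m
    X = fft(x(seg));
    Y = fft(y(seg));
    S11 = S11 + abs(X).^2;              % accumulated (unscaled) PSD of signal 1
    S22 = S22 + abs(Y).^2;              % accumulated (unscaled) PSD of signal 2
    S12 = S12 + conj(X).*Y;             % accumulated (unscaled) cross-spectral density
end
coh = abs(S12).^2./(S11.*S22);          % common scale factors cancel in the ratio

lowf  = mean(coh(2:20));                % low frequencies: system gain is large
highf = mean(coh(110:129));             % near Nyquist: system gain is small
fprintf('mean coherence: low f = %.2f, high f = %.2f\n', lowf, highf);
```

For this example system the gain falls with frequency while the added noise is flat, so the coherence is expected to be higher at low frequencies (roughly 0.9) than near the Nyquist frequency (roughly 0.5).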

Matched Filtering

In idealized measurements made on an idealized linear system, the system output would be

completely coherent with the system input. In any situation where one signal is expected to

contain a portion coherent with another signal and an incoherent (noise) portion, the cross-correlation can be used to implement a filter that removes as much noise as possible. A matched

filter is the best possible linear filter for a signal corrupted by random additive noise (i.e., it

maximizes the signal-to-noise-ratio).


A matched filter can be implemented by creating a digital filter whose impulse response

is a time-reversed and time-shifted version of the input signal (see Digital Filtering,

below).

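Matlab code:

b = inputvector(end:-1:1);                     % time-reversed input signal = FIR filter coefficients

filteredoutput = filter(b, 1, outputvector);   % apply the matched filter to the measured output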

Alternatively, the cross-correlation of the input and output signals provides the same

result.

Matlab code:

[filteredout, tau] = xcorr(inputvector, outputvector);   % tau holds the lags, in samples
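As a self-contained illustration of the `filter`-based version, the sketch below buries a known pulse in noise and locates it from the peak of the matched-filter output. The chirp pulse, the noise level, and the true delay `d` are assumptions invented for the example.

```matlab
n = 0:63;
inputvector = cos(2*pi*(0.05*n + 0.003*n.^2));   % known pulse: a short chirp (illustrative)
M = numel(inputvector);

d = 200;                                % true delay of the pulse in samples (illustrative)
outputvector = 0.5*randn(1, 600);       % additive random noise
outputvector(d+1:d+M) = outputvector(d+1:d+M) + inputvector;   % pulse buried in the noise

b = inputvector(end:-1:1);              % time-reversed pulse = matched-filter coefficients
filteredoutput = filter(b, 1, outputvector);

[~, idx] = max(filteredoutput);         % the peak occurs when the pulse is fully inside the filter
delayest = idx - M;                     % so subtract the filter length to get the delay
fprintf('estimated delay = %d samples (true delay = %d)\n', delayest, d);
```

The subtraction of `M` reflects the time shift mentioned above: the causal filter cannot respond until the whole pulse has arrived.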
