04. Integer Programming - Operations Research: A Model

advertisement

4 Integer Programming

Natural occurrences of integer variables (e.g., the

number of buses allocated to a route, the number of

boxes of hardwood flooring purchased, etc. But also:

logical variables.

1950s: Gomory’s cutting plane techniques

Early 1960s: Land & Doig’s Branch-&-Bound

method.

4.1 Basic concepts.

P1: Max z = x1 + x2

s.t.

3x1 + 5x2 ≤ 15

5x1 + 2x2 ≤ 10

x1, x2 ≥ 0.

In addition, some or all variables must be integers.

These are additional requirements.

MILP: mixed-integer linear programming problems

AILP: all-integer linear programming problems.

(a)

(b)

(c)

(d)

Simple rounding will not do!

Example:

Max z = 2x1 + x2

s.t. 7x1 + 48x2 ≤ 84

x1 + 12x2 ≥ 3

x1,

x2 ≥ 0 and integer.

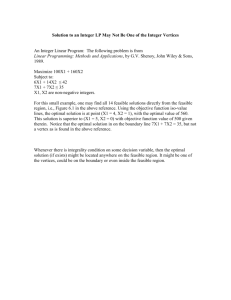

Optimal solution of LP relaxation:

x = (6.5455, 0.7955) with z = 13.8864,

while the optimal all-integer solution is

x = (5, 1) with z = 11.

No rounding can achieve this.

Change b2 from “3” to “13,” (broken line) the AILP

has no feasible solution.

On the other hand, some types of problems have

integer solutions naturally.

x1 + x2 ≤ 10

5x1 + 3x2 ≥ 15

x1

≤ 6

x2 ≤ 7

x1, x2 ≥ 0.

Extreme points (3, 0), (6, 0), (6, 4), (3, 7), (0, 7), & (0,

5).

Ignoring the integrality conditions & solving the LP

relaxation will result in an integer solution. Some large

classes of problems fall into this category.

z IP : Optimal objective value of the integer problem,

z LP : optimal objective value of the LP relaxation. Then

z IP ≤ z LP .

Absolute integrality gap z LP z IP ,

z LP z IP

relative integrality gap

.

max {| z LP | , | z IP |}

Earlier example: x LP = (6.5455, 0.7955) with z LP =

13.8864, & x IP = (5, 1) with z IP = 11.

Then: absolute integrality gap 13.8864 11 = 2.8864,

relative integrality gap is 2.8864/13.8864 = .2079.

Indicator of difficulty: relative integrality gap > 0.1:

difficult, if > 0.5: very difficult. This is, of course, only

known after the fact.

Another type of relaxation is the Lagrangean

relaxation PLagr. First, choose some or all of the given

structural constraints (the dualized constraints),

multiply them by some preselected nonnegative

constants (Lagrangean multipliers or dual variables)

and subtract them from the objective function. Then

the dualized constraints are removed from the set of

constraints.

Example: Refer to problem P4 and set up its

Lagrangean relaxation by dualizing the first

constraint, using the Lagrangean multiplier u1 = 2.

The relaxed problem is

1

PLagr

: Max z1Lagr = 10x1 + 10x2 – 2(3x1 + 5x2 – 15)

s.t. 5x1 + 2x2 ≤ 10

x1, x2 ≥ 0 and integer.

Optimal solution: x1 2 , x2 0 with z1Lagr 38 .

Alternatively, if we dualize the second constraint with,

say, a Lagrangean multiplier of u2 = 3, a different

relaxed problem results:

2

2

= 10x1 + 10x2 – 3(5x1 + 2x2 – 10)

PLagr

: Max z Lagr

s.t. 3x1 + 5x2 ≤ 15

x1, x2 ≥ 0 and integer,

which has an optimal solution of x1* 0 , x2* 3 with

*2

zLagr

42 .

We could also dualize both constraints. The objective

function is then Max 10x1 + 10x2 – u1(3x1 + 5x2 – 15) –

u2(5x1 + 2x2 – 10) subject to only the nonnegativity

constraints and the integrality requirements.

For multipliers u1 = 2 and u2 =1, the objective function

reduces to Max zLagr = –x1 – 2x2 + 40, with an optimal

solution x1 0 , x2 0 and z = 40. Choosing instead

multipliers u1 = 1 and u2 = 2 results in an objective

function Max zLagr = –3x1 + x2 + 35, which has

unbounded “optimal” solutions (x2 can be chosen

arbitrarily large).

The optimal objective value z Lagr is greater than or

equal to the value of the objective function of the

original (maximization) problem. The choice of

Lagrangean multipliers determines how close the gap

between z Lagr and z will be. In the context of linear

programming, selecting Lagrangean multipliers equal

to optimal values of the dual variables, the inequality

z Lagr z is actually satisfied as an equation. This idea

is used in a heuristic method in Section 4.3.3.

Integer variables either occur naturally or due to the

way we must formulate constraints. For instance,

logical variables are zero-one variables introduced to

model logical implications. In standard linear

programming, all constraints must hold.

However, if we have the choice of one of a number of

different machines, each with its own capacity, the

actual capacity constraint is not given by all machines,

but only by the machine that is chosen. We speak of an

either-or constraint. Another class is that of conditional

constraints. They can be spotted by the wording “if

this, then that.”

Formulate logical constraints by using a table. For two

zero-one variables y1 and y2, there are four solutions:

#

1

2a

2b

3

y1

0

0

1

1

y2

0

1

0

1

The table below shows the exclusion constraints that

allow excluding one specific solution without affecting

any of the others.

Solution Formulation

Wording

to be

excluded

1

y1 + y2 ≥ 1

“At least one of the two

activities needs to be chosen”

2a

y1 ≥ y2

“If activity y2 is chosen, then

activity y1 must also be

chosen”

2b

y1 ≤ y2

“If activity y1 is chosen, then

activity y2 must also be

chosen”

3

y1 + y2 ≤ 1

“At most one of the activities

can be chosen”

The table below shows all 8 solutions for 3 variables

#

1

2a

2b

2c

3a

3b

3c

4

y1

0

0

0

1

0

1

1

1

y2

0

0

1

0

1

0

1

1

y3

0

1

0

0

1

1

0

1

The table blow shows how each individual solution can

be excluded.

Sln to be Formulation

excluded

1

y1 + y2 + y3 ≥ 1

2a

2b

2c

3a

3b

3c

4

Wording

“At least one of the three

activities must be chosen”

y1 + y2 ≥ y3

“If neither y1 nor y2 are

chosen, then y3 must be

chosen”

y1 + y3 ≥ y2

“If neither y1 nor y3 are

chosen, then y2 must be

chosen”

y2 + y3 ≥ y1

“If neither y2 nor y3 are

chosen, then y1 must be

chosen”

–y1 + y2 + y3 ≤ 1 “If y1 is not chosen, then y2

and y3 must both be chosen”

y1 – y2 + y3 ≤ 1 “If y2 is not chosen, then y1

and y3 must both be chosen”

y1 + y2 – y3 ≤ 1 “If y3 is not chosen, then y1

and y2 must both be chosen”

y1 + y2 + y3 ≤ 2 “No more than two of the

three activities can be

chosen”

If more than a single solution is excluded, we combine

the pertinent logical constraints. For example, two

variables, excluding #1 and #2a is achieved by the

constraints y1 + y2 ≥ 1 and y1 ≥ y2 which can be

collapsed into the single constraint y1 = 1. Then replace

y1 by the value of 1 everywhere to reduce the size of

the model.

Another logical constraint may require an activity y0

to be chosen only if at least k activities in a set J = {y1,

y2, …, yn} are also chosen. This can be formulated as

y0 1k ( y1 y2 ... yn ) .

4.2 Applications of Integer Programming

Knapsack problems

Define variables yj = 1, if the item is included, & 0

otherwise.

Alternatively, yj: the number of items of type j that are

included, so that yj ≥ 0 and integer.

Cargo loading, capital budgeting.

Highrise Shopping Amusement Warehouses Airport

mall

park

Profit

contribution

(in m$)

Resource

consumption

10

6

12

2

7

4

2

5

1

3

Seven resource units are available.

P: Max z = 10y1 + 6y2 + 12y3 + 2y4 + 7y5

s.t.

4y1 + 2y2 + 5y3 + 1y4 + 3y5 7

y1, y2,

y3, y4, y5 = 0 or 1.

The LP relaxation has the optimal solution y 2 = 3.5,

y1 y3 y 4 y5 0 with the objective value z 21.

Optimal integer solution: y1 y 2 y 4 1, y3 y5 0

with objective value z = 18.

Even for simple problems, enumeration does not

work! n: number of variables, # slns = the number of

0-1 solutions (feasible or not).

n

# slns

1

21=1

2

22=4

3

23=8

4

24=16

40

240 ≈ 1 trillion

A computer that examines 1 quadrillion solutions per

second requires > 40 million years for a problem with

n = 100 variables.

4.2.1 Cutting Stock Problems (Trim Loss

Problems)

Given some material, change its shape from whatever

shape exists to whatever shape is needed.

Example: Wooden rods in standard profile & width.

Twenty 12 ft rods and twenty-five 10 ft rods are

available.

Sixty 8 ft rods, forty 5 ft rods, & seventy-five 3 ft rods

are needed.

Cut: 50¢ per cut

Purchase: $2, $1.50, & $1.10 for the 8 ft, 5 ft, and 3 ft

lengths.

Objective: Minimize waste (nonlinear! short pieces are

waste, long unused pieces may be used later) or

minimize cost.

Cutting plan:

Define variables yj as the number of times the j-th

pattern is cut. In addition, denote by v1, v2, & v3 the

number of 8 ft, 5 ft, and 3 ft rods that are purchased.

Min z = 1y1 + 1y2 + 1.5y3 + 1.5y4 + 0.5y5 + 0.5y6 + 1y7 +

1.5y8 + 2v1 + 1.5v2 + 1.1v3.

s.t. y1 + y2 + y3 + y4 ≤ 20.

y5 + y6 + y7 + y8 ≤ 25.

(supply constraints)

y1 + y5 + v1 ≥ 60.

2y2 + 1y3 + 2y6 + 1y7 + v2 ≥ 40.

1y1 + 2y3 + 4y4 + 1y7 + 3y8 + v3 ≥ 75.

(demand constraints)

(1)

(2)

(3)

(4)

(5)

y1, y2, …, y8; v1, v2, v3 ≥ 0 and integer.

Optimal solution: y1 = 2, y 2 = 0, y3 = 0, y 4 = 18, y5 =

5, y6 20, y7 = 0, and y8 = 0, as well as v1 = 53, v 2 = 0,

& v3 = 1. No rods are left over, the demand is exactly

satisfied.

2-dimensional cutting stock problems: same

formulation, cutting plans are more difficult to set up.

Material usage: pattern 1: 34/40 sq ft = 85%,

pattern 2: 32/40 sq ft = 80%.

However: pattern 1 requires frequent machine

adjustments. Also: guillotine cuts.

4.2.2 Diet Problem Revisited

Two foodstuffs, one nutrient.

Min z = 3x1 + 4x2

s.t.

x1 + 2x2 5

x1, x2 0.

plus: “if food 1 is in the diet, then food 2 should not be

included.

Define logical variables y1 (and y2) as one, if food 1

(food 2) is included in the diet, and zero otherwise.

y1

0

0

1

1

y2

0

1

0

1

OK?

No

A formulation that allows the first three cases &

prohibits the last case is

y1 + y2 1.

y1, y2 = 0 or 1.

Adding these constraints to the formulation is not

sufficient, though, as it allows the continuous variables

x1 & x2 to change independent of y1 and y2. We need

linking constraints. Here,

x1 My1 &

x2 My2,

with M >> 0 (but not too large, scaling).

Validity: If y1 = 1 (the food is included in the diet),

then the constraint reads x1 M, &, given that M is

sufficiently large, the constraint is redundant.

On the other hand, if y1 = 0 (the food is not included in

the diet), the constraint reads x1 ≤ 0, &, since x1 ≥ 0, x1

= 0 follows. In other words, if a food is not in the diet,

its quantity is zero.

Different additional (conditional) constraint:

“if food 1 is included in the diet, then food 2 must be

included in the diet as well.”

y1

0

0

1

1

y2 OK?

0

1

0 No

1

Here, the additional constraint

y1 y2

together with the linking constraints will do.

4.2.3 Land Use

Two choices for a parcel of land: harvest or protect

(but not both). Define variables y1= 1, if we harvest &

0 otherwise, & y2 = 1, if we protect, & 0 otherwise.

y1

0

0

1

1

y2 OK?

0

1

0

1 No

Again, the additional constraint is y1 + y2 ≤ 1. (There

are no other variables, so that there is nothing to link).

Allow 3 options: Harvest (y1), build a sanctuary (y2), or

allow the building of a municipal well (y3).

y1 y2 y3 OK?

0 0 0

0 0 1

0 1 0

1 0 0

0 1 1

1 0 1

No

1 1 0

No

1 1 1

No

Formulate: y1 + y2 ≤ 1 (eliminates the solutions in the

last two rows of the decision table), & the constraint

y1 + y3 ≤ 1 (eliminates the solution in the third row

from the bottom of the table).

4.2.4 Modeling Fixed Charges

Manufacture a combination of three Operations

Research texts:

Gabby and Blabby (GB),

Huff, Fluff, and Stuff (HFS), &

“Real OR” (ROR).

There are

3 printing machines are available (only 1 is needed),

2 binding machines (again, only one is needed).

Processing times for printing and binding machines

P1

GB 3

HFS 2

ROR 4

P2 P3

6 4

3 3

5 5

B4

GB 10

HFS 12

ROR 15

B5

10

11

14

The capacities of the three printing machines are 120

100, and 110 hours (7,200, 6,000, and 6,600 minutes).

Capacities of the binding machines: 333⅓ and 300

hours, respectively (or 20,000 and 18,000 minutes).

The costs to lease the machines are independent of the

number of books made with them. They are $10,000,

$8,000, $9,000, $20,000, and $23,000, respectively. The

profit contributions of the three books (other than the

leasing costs) have been identified as $40, $60, and $70.

Also, produce at least 500 copies of the landmark ROR

book in order to maintain a good academic image.

Define variables x1, x2 and x3 as the number of books

of the three types that are manufactured and sold.

Also, define binary variables y1, y2, …, y5 that assume a

value of one, if a machine is leased, and 0 otherwise.

P: Max z = 40x1 + 60x2 + 70x3

10,000y1 8,000y2 9,000y3 20,000y4 23,000y5

s.t. 3x1 + 2x2 + 4x3 7,200 + M(1−y1)

6x1 + 3x2 + 5x3 6,000 + M(1−y2)

4x1 + 3x2 + 5x3 6,600 + M(1−y3)

10x1 + 12x2 + 15x3 20,000 + M(1−y4)

10x1 + 11x2 + 14x3 18,000 + M(1−y5)

x3 500

y1 + y2 + y3 = 1

y4 + y5 = 1

x1, x2, x3 0 and integer

y1, y2, y3, y4, y5 = 0 or 1.

With M = 1,000,000, the optimal solution is y 2 = y 4 = 1

and y1 y3 y5 = 0 (i.e., we lease the second printing

and the first binding machine), and make x1 = 0 GB

books, x 2 = 1,039 HFS books, and x3 = 502 ROR books.

The profit associated with this plan is $69,480. Note

that the slack capacities indicate huge (and

meaningless) values for machines not leased. Their

right-hand side values have the artificial value of M =

1,000,000, from which nonexistent usage is subtracted.

Now allow more than one printing and/or binding

machine to be used. We now need additional variables

xij to denote books of type i processed on machine j:

P: Max z = 40x1 + 60x2 + 70x3

–10,000y1–8,000y2–9,000y3–20,000y4–23,000y5

s.t. x1 = x11 + x12 + x13

x2 = x21 + x22 + x23

x3 = x31 + x32 + x33

3x11 + 2x21 + 4x31 ≤ 7,200y1

6x12 + 3x22 + 5x32 ≤ 6,000y2

4x13 + 3x23 + 5x33 ≤ 6,600y3

10x14 + 12x24 + 15x34 ≤ 20,000y4

10x15 + 11x25 + 14x35 ≤ 10,000y5

x3 ≥ 500

y1 + y2 + y3 ≥ 1

y4 + y5 ≥ 1

x11 + x12 + x13 = x14 + x15

x21 + x22 + x23 = x24 + x25

x31 + x32 + x33 = x34 + x35

y1, …, y5 = 0 or 1

x1, x2, x3; x11, x12, …, x35 ≥ 0 and integer.

The objective function has not changed. The first three

constraints link xij and xi so that they define the

amounts of the products actually made. The next five

constraints are capacity constraints restricting the

number of units we can make by the machine

capacities (zero if we do not lease the machine). The

next constraint ensures that we make at least 500 units

of the ROR book, and the next two constraints specify

that we must choose at least one of each of the two

types of machines. The last three structural

constraints require books printed also to be bound.

The optimal solution is to lease the first printing

machine and both binding machines. As before, we

make no GB books, but 2,600 HFS books and 500 ROR

books. The only significant change is the increase of

HFS books from 1,039 to 2,600, resulting in a doubling

of the profit from $69,480 to $138,000.

4.2.5 Workload Balancing

Goal: Distribute the workload evenly. Tasks cannot be

split.

Example:

Processing times for worker-task combinations

T1

W1 5

W2 4

W3 7

T2

1

3

5

T3

9

8

6

T4

4

3

4

T5

9

8

7

Define variables yij = 1, if employee Wi is assigned to

task Tj, and zero otherwise.

Assign each task to exactly one employee.

y1j + y2j + y3j = 1 for all j = 1, ..., 5.

Actual working times of the employees:

w1 = 5y11 + 1y12 + 9y13 + 4y14 + 9y15,

w2 = 4y21 + 3y22 + 8y23 + 3y24 + 8y25, and

w3 = 7y31 + 5y32 + 6y33 + 4y34 + 7y35,

Possibility:

Min z = max {w1, w2, w3}.

Rewrite as

Min z, s.t. z ≥ w1, z ≥ w2, and z ≥ w3 + other constraints.

Min z

s.t. z ≥ 5y11 + 1y12 + 9y13 + 4y14 + 9y15

z ≥ 4y21 + 3y22 + 8y23 + 3y24 + 8y25

z ≥ 7y31 + 5y32 + 6y33 + 4y34 + 7y35

y11 + y21 + y31 = 1

y12 + y22 + y32 = 1

y13 + y23 + y33 = 1

y14 + y24 + y34 = 1

y15 + y25 + y35 = 1

yij = 0 or 1 for i=1, 2, 3; j=1, …, 5.

Solution: W1 – T2 & T5, W2 – T1 & T4, W3 – T3.

Workloads: 10, 7, and 6 hours.

Alternative: Min z

= ((1/3)w w1)2 + ((1/3)w w2)2 + ((1/3)w w3)2.

Always combine “equity” with an efficiency objective

(otherwise workloads of 12, 12, & 12 are preferred to

7, 8, 9).

4.3 Solution Methods for Integer

Programming Problems

4.3.1 Cutting Plane Methods

The first exact techniques for solving integer

programming problems were cutting plane techniques.

General idea: Solve linear programming relaxation, i.e.,

the given problem without integrality requirements.

If the optimal solution is integer, we are done.

Otherwise, introduce a cutting plane, i.e., an additional

constraint that (1) cuts off (i.e., makes infeasible) the

present optimal solution, while (2) not cutting off any

feasible integer point.

Example: Consider the all-integer programming

problem:

P: Max z = y1 + y2

s.t.

3y1 + 2y2 ≤ 6

y1 + 3y2 ≤ 3

y1, y2 ≥ 0 and integer.

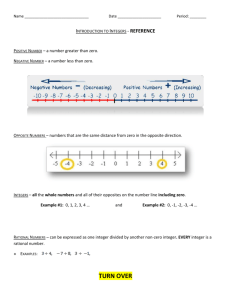

The shaded area shows the feasible set of the linear

programming relaxation, and y LP = (12/7, 3/7) is the

optimal solution of the linear programming relaxation.

The triangle shown by the broken lines connecting (0,

0), (2, 0), and (0, 1) is the convex hull of the feasible set.

The dotted line is the cutting plane 5y1 + 10y2 ≤ 12. It is

indeed a cutting plane, as the present optimal solution

12, and since all four

y LP is cut off as 90/7 = 12 76

feasible integer points satisfy the condition & are thus

not cut off.

Computation performance of cutting planes has been

disappointing.

Example: We use a simple Dantzig cut, which does not

require any knowledge beyond the solution typically

provided by a solver. Other, more efficient, cutting

planes work on the same principle.

Given: an all-integer linear programming problem.

Include all slack and excess variables, so that all

constraints are equations. Let there be n nonnegative

variables (including the slack and excess variables)

and m structural equation constraints, and assume

that the present optimal solution of the linear

programming relaxation has at least one noninteger

component.

Separate the variables into two disjoint sets B and N,

where B includes all variables that are presently

positive, while N includes all variables that are

presently zero. If the solution is nondegenerate, the set

B will include exactly m variables, and the set N

exactly (n–m) variables. In case of primal degeneracy,

the set N will include more than (n – m) variables, in

which case we define N as any (n – m) variables

presently at zero.

A Dantzig cut requires the sum of all variables in the

set N to be at least “1.” Validity: (1) Since all variables

in the set N equal zero, the cutting plane invalidates

the present solution. (2) Any feasible solution to the

original integer problem will need to have at least one

variable in N assume a positive value, which, since this

is an all-integer optimization problem, must be at least

one. Hence, the sum of all the variables that are

presently zero, must be at least one.

Add the cut to the problem & re-solve the problem

(preferably with a warm start). Stop, if the new

solution is integer; else, repeat. The the process, the zvalue cannot increase (decrease) for max (min)

problems.

In each step, the feasible set shrinks. Unfortunately,

for Dantzig cuts, this is not necessarily finite.

Example: Consider the integer programming problem:

Max z = 3y1 + 2y2

s.t. 3y1 + 7y2 22

5y1 + 3y2 17

y1

2

y1,

y2 0 and integer.

Adding slack variables S1 and S2 and an excess

variable E3, we obtain the following formulation with

n = 5 variables and m = 3 structural constraints:

Max z = 3y1 + 2y2

s.t. 3y1 + 7y2 + S1

= 22

5y1 + 3y2 +

S2

= 17

y1 –

E3 = 2

y1, y2, S1, S2, E3 0 and integer.

The optimal solution is y1 2.0385 , y2 2.2692 ,

S1 S 2 0 , and E3 0.0385 with z 10.65385 . Here, N

= {S1, S2}, so that the Dantzig cut is

S1 + S2 ≥ 1

(or 8y1 + 10y2 ≤ 38). Subtracting a new excess variable

E4 from the left-hand side of this cut, we obtain S1 + S2

– E4 = 1. Adding this cut to the problem and solving it

again, we obtain the new solution y1 2.1538 ,

y2 2.0769 , S1 1, S 2 E4 0 and E3 0.1538 with an

objective value z 10.61539 . Clearly, another cut is

required. The sequence of cutting planes generated in

the process is shown in the table below.

Optimal solution

y1 2.0385 , y2 2.2692 , S1 0 , S2 0 ,

E3 0.0385 with z 10.65385 (optimal

solution of the LP relaxation).

y1 2.1538 , y2 2.0769 , S1 1, S2 0 ,

E3 0.1538 , E4 0 with z 10.61539 .

y1 2.2692 , y2 1.8846 , S1 2 , S2 0 ,

E3 0.2692 , E4 1, E5 0 with z 10.5769 .

y1 2.3846 , y2 1.6923, S1 3 , S2 0 ,

E3 0.3846 , E4 2 , E5 1, E6 0 with

z 10.53846 .

y1 2.5 , y2 1.5 , S1 4 , S2 0 , E3 0.5 ,

E4 3 , E5 2 , E6 1, E7 0 with z 10.5 .

y1 2.6154 , y2 1.3077 , S1 5 , S2 0 ,

E3 0.6154 , E4 4 , E5 3 , E6 2 , E7 1,

E8 0 with z 10.46154 .

y1 2.7308 , y2 1.1154 , S1 6 , S2 0 ,

E3 0.7308 , E4 5 , E5 4 , E6 3 , E7 2 ,

E8 1, E9 0 with z 10.42308 .

y1 2 , y2 2 , S1 2 , S 2 1, E3 0 with

z 10 (optimal all-integer solution)

Cutting plane

S1 + S2 ≥ 1 or

S1 + S2 – E4 = 1

S2 + E4 ≥ 1 or

S2 + E4 – E5 = 1

S2 + E5 ≥ 1 or

S2 + E5 – E6 = 1

S2 + E6 ≥ 1 or

S2 + E6 – E7 = 1

S2 + E7 ≥ 1 or

S2 + E7 – E8 = 1

S2 + E8 ≥ 1 or

S2 + E8 – E9 = 1

S2 + E9 ≥ 1 or

S2 + E9 – E10 =

1

Even for this toy example, a large number of cuts are

need to solve the problem. This is true in general.

“Deep cuts” are much better, but cannot compete with

“branch & bound methods” discussed next.

One could use the objective function to derive a cut:

Since all variables must be integer, the value of the

objective function z = 3y1 + 2y2 must also be integer.

The LP relaxation of the problem has an objective

value of z 10.65385 , hence z ≤ 10 must hold.

A cutting plane is then 3y1 + 2y2 + S4 = 10. Solving the

problem with this added constraint results in the

solution y1 3.3333 , y2 0 , S1 12 , S 2 0.3333,

E3 1.3333 and S4 0 with z = 10. (Since the

objective value has not changed, we presently

encounter dual degeneracy).

The next cutting plane is then y2 + S4 ≥ 1, (or,

alternatively, 3y1 + y2 + S5 = 9). Adding the cut results

in an optimal solution y1 2.6667 , y2 1, S1 7 ,

S2 0.6667 , E 3 0.6667, S 4 S5 0 , with z 10.

The next cut is S4 + S5 ≥ 1, or, rewritten in terms of the

original variables and the new slack variable S6, it is

written as 6y1 + 3y2 + S6 = 18. The optimal solution is

then y1 y2 2 , S1 2 , S 2 1, E3 S 4 0 , S5 1,

S6 0 , with z 10 . This solution is an integer

optimum. The cuts are shown in the figure below.

4.3.2 Branch-and-Bound Methods

These methods are very flexible & are applicable to

AILP & MILPs.

Idea: Starting with the LP relaxation, subdivide the

problem into subproblems, whose union includes all

integer solutions that are not worse than the best

known integer solution.

For instance, if presently y3 = 5.2, we subdivide the

problem (the “parent”) by adding the constraint y3 ≤ 5

& y3 ≥ 6, respectively (thus creating “children”).

Example:

Max z = 5y1 + 9y2

s.t.

5y1 + 11y2 94

Constraint I

10y1 + 6y2 87

Constraint II

y1 , y2 ≥ 0 and integer.

Solution Tree

Note: Each node of the solution tree represents one

linear program.

The constraints at a node are all original constraints

plus all additional constraints between the root of the

tree & the node in question.

As we move down the tree, the problems get to be

more constrained & thus their objective values cannot

improve.

At any stage, the problem to be worked on is the

“best” active node (whose z-value is the present upper

bound (for max problems, lower bound for min

problems)), the best known integer solution is the

present lower bound (for max problems, upper bound

for min problems).

Different modes: fully automatic (specify integrality

conditions & let the optimizer do its thing), fully

manual (manually construct the solution tree & solve

the LPs graphically), or semi-automatic (manually

construct the solution tree, whose LP solutions are

obtained by some LP solver).

Same example:

If the IP problem has no feasible solution:

P: Max z = y1 + 4y2

s.t. 28y1 + 7y2 ≤ 49

30y1 6y2 ≥ 36

y1, y2 ≥ 0 and integer.

4.3.3 Heuristic Methods

Knapsack problem:

P: Max z = 12y1 + 20y2 + 31y3 + 17y4 + 24y5 + 29y6

s.t.

2y1 + 4y2 + 6y3 + 3y4 + 5y5 + 5y6 19

y1,

y2,

y3,

y4,

y5, … y6 = 0 or 1.

Greedy Method

“Value per weight” of the individual items

Variable y1

Value

12/2

per

=6

weight

Rank

1

y2

y3

y4

y5

y6

20/4 31/6 = 17/3 = 24/5 = 29/5 =

= 5 5.1667 5.6667 4.8

5.8

5

4

3

6

2

Idea: Increase the values of variables one by one,

starting with the highest rank, as long as resources are

available.

Solution: y = [y1, y2, y3, y4, y5, y6] = [1, 0, 1, 1, 0, 1] with

resource consumption 16 & z-value 89.

Swap method:

First swap move:

Leaving Entering New

ΔR

Δz

variable variable solution

y1

y2

0, 1, 1, 2 + 4 12 + 20

1, 0, 1 = +2 = + 8

New solution y = [0, 1, 1, 1, 0, 1] with resource

consumption 18 & z-value 97.

Further swap moves (terminate whenever no local

improvements are possible):

Leaving Entering New

ΔR

Δz

variable variable solution

y2

y1

1, 0, 1, 4 + 2 20 + 12

1, 0, 1 = 2

= 8

y2

y5

0, 0, 1, 4 + 20 + 24

1, 1, 1 5 = 1

= +4

New solution y = [0, 0, 1, 1, 1, 1] with resource

consumption 19 & z-value 101.

Leaving Entering New

ΔR

Δz

variable variable solution

y3

y1

1, 0, 0, 6+2 = 4 31+12 =

1, 1, 1

19

y3

y2

0, 1, 0, 6+4 = 2

31+20 =

1, 1, 1

11

y4

y1

1, 0, 1, 3 + 2 = 17 + 12 =

0, 1, 1

1

5

y4

y2

0, 1, 1, 3+4 = +1:

0, 1, 1 infeasible

y5

y1

1, 0, 1, 5+2 = 3

24+12 =

1, 0, 1

12

y5

y2

0, 1, 1, 5+4 = 1 24+20 = 4

1, 0, 1

y6

y1

y6

y2

1, 0, 1,

1, 1, 0

0, 1, 1,

1, 1, 0

5+2 = 3

29+12 =

17

5+4 = 1 29+20 = 9

No further improvements are possible, stop!

Note: Greedy alone may result in very poor solutions.

Example:

P: Max z = 10y1 + 8y2 + 7y3

s.t.

54y1 + 48y2 + 47y3 100

y1,

y2,

y3 = 0 or 1.

Greedy solution: y = [1, 0, 0] with resource

consumption 54 & z-value 10.

Optimal solution: y = [0, 1, 1] with resource

consumption 95 & z-value 15.

Another heuristic uses Lagrangean relaxation (see

Section 4.2). Given an IP problem PIP with some

objective function z. Set up the Lagrangean relaxation

PLagr of PIP by dualizing its i-th constraint using a

Lagrangean multiplier uˆi , obtaining the objective

function z Lagr z uˆi (ai1 y1 ai 2 y2 ... ain yn bi ) .

Denote an optimal solution to PIP by ( y1 , y2 ,..., yn ; z )

and an optimal solution to PLagr by ( yˆ1 , yˆ 2 ,..., yˆ n ; zˆLagr ) .

Whether or not the optimal solution to PLagr is feasible

for PIP, denote by ẑ the objective function value for PIP

with the point ( yˆ1 , yˆ 2 ,..., yˆ n ) inserted. Since

( yˆ1 , yˆ 2 ,..., yˆ n ) is optimal for PLagr , we have

zˆLagr zˆ uˆi (ai1 yˆ1 ai 2 yˆ 2 ... ain yˆ n bi ) ≥

z uˆi (ai1 y1 ai 2 y2 ... ain yn bi ) , which, in turn, is

greater than or equal to z (since uˆi 0 and

ai1 y1 ai 2 y2 ... ain yn bi 0 due to the assumption of

feasibility of PIP). We have now established the

relationship zˆLagr z from Section 4.1.

Consider

now

the

effect

of

the

term

uˆi (ai1 y1 ai 2 y2 ... ain yn bi ) on the relaxed objective

function zLagr. If (y1, y2, …, yn) is feasible for PIP, the

expression in brackets will be negative, so that

multiplying it with uˆi , where uˆi 0 , results in a

positive contribution. If, on the other hand, (y1, y2, …,

yn) is not feasible for PIP, the term will cause a negative

contribution to the value of zLagr.

Hence we have penalized the value of zLagr and the

value of this penalty is uˆi (ai1 y1 ai 2 y2 ... ain yn bi ) ,

and the larger the value of the Lagrangean multiplier

uˆi , the larger the magnitude of this penalty. Since we

are maximizing zLagr, the optimization process tends to

favor points (y1, y2, …, yn) that are feasible for PIP, and

this tendency will be stronger with larger values of

uˆi 0 ; for very large values of uˆi , the resulting

( yˆ1 , yˆ 2 ,..., yˆ n ) will therefore be feasible as long as

feasible solutions to PIP exist.

Heuristic procedure: Start with some arbitrary value

of uˆi 0 , and solve the corresponding relaxation PLagr.

If the solution is feasible for PIP, uˆi 0 will be reduced

and PLagr solved again. If, on the other hand, the

solution is not feasible for PIP, increase uˆi 0 , and

solve PLagr again.

This process can be applied to any number of

constraints rather than just a single constraint as in

this example.

Example: Consider the assignment problem from

Section 2.2.7 with a maximization objective:

P: Max z = 4x11 + 3x12 + 1x13 + 8x21 + 5x22 + 3x23 +

2x31 + 6x32 + 2x33

s.t. x11 + x12 + x13

x21 + x22 + x23

x31 + x32 + x33

=1

=1

=1

x11 + x21 + x31

x12 + x22 + x32

x13 + x23 + x33

=1

=1

=1

x11, x12, x13, x21, x22, x23, x31, x32, x33 ≥ 0.

In addition to the usual constraints, we add the

constraint

2x11 + 3x12 + x13 + 4x21 + 6x23 + 5x32 + 2x33 ≤ 8.

This is a complicating constraint, as due to its

inclusion, the nice properties of the problem (such as

integrality of all extreme points) vanishes. An obvious

way to deal with this complication is to dualize the

complicating constraint and solve the resulting

(simple) assignment problem.

Without the complicating constraint, the unique

optimal solution is x13 x21 x32 1, and xij 0

otherwise, with z 15 . (This solution does not satisfy

the complicating constraint).

Dualizing

the

additional

constraint

as

uˆ (2 x11 3x12 x13 4 x21 6 x23 5x32 2 x33 8) , we add

this penalty term to the objective function of the

original problem. Using the dual variable uˆ ½ and

solving the Lagrangean relaxation, we obtain

xˆ13 xˆ21 xˆ32 1, and xˆij 0 otherwise. This is the

same solution obtained for the assignment problem

without the complicating constraint, so that the

additional constraint is still violated.

Concluding that the value of the dual variable must be

increased, we arbitrarily set uˆ : 5 . The unique optimal

solution to the relaxed problem is then

xˆ13 xˆ22 xˆ31 1 and xˆij = 0 otherwise, with z = 8. This

solution satisfies the additional constraint and is

therefore feasible to the original problem.

Try a value between û = ½ and û = 5, e.g., uˆ 1. The

resulting relaxed problem then has the unique optimal

solution xˆ11 xˆ22 xˆ33 1, and xˆij 0 otherwise, with z

= 11. This solution is feasible for the original problem.

We may continue with penalty values between ½ and

1, but terminate the process at this point. (Actually,

the solution happens to be optimal).

Alternatively, we could have dualized all six

assignment problem constraints and solved the

Lagrangean relaxation, which would then be a

knapsack problem. Since we are maximizing and since

all coefficients are nonnegative, we may write the six

assignment constraints as “≤” constraints:

P: Max z = 4x11 + 3x12 + 1x13 + 8x21 + 5x22 + 3x23 +

2x31 + 6x32 + 2x33

– u1(x11 + x12 + x13 – 1)

– u2(x21 + x22 + x23 – 1)

– u3(x31 + x32 + x33 – 1)

– u4(x11 + x21 + x31 – 1)

– u5(x12 + x22 + x32 – 1)

– u6(x13 + x23 + x33 – 1)

s.t. 2 x11 3x12 x13 4 x21 6 x23 5x32 2 x33 8

xij = 0 or 1 for all i, j.

We must simultaneously choose dual variables u1, u1,

…, u6. The resulting relaxation is an integer problem

which is typically not easy to solve. (In the previous

approach, the relaxation could be solved as an LP, it

always had integer solutions).

Arbitrarily choose uˆ1 uˆ2 ... uˆ6 ½, which results in

the objective function zLagr = 3x11 + 2x12 + 7x21 + 4x22 +

2x23 + x31 + 5x32 + x33 + 3. Maximizing this objective

function subject to the knapsack constraints results in

the solution xˆ11 xˆ21 xˆ22 xˆ31 xˆ33 1, and xˆij = 0

otherwise. This solution violates the second, third, and

fourth of the six assignment constraints, so that we

increase the penalty parameters for the violated

constraints to, say, uˆ2 uˆ3 uˆ4 5 .

The resulting knapsack problem, has an optimal

solution xˆ12 xˆ32 1 and xˆij 0 otherwise. This

solution violates only the fifth constraint in the

original problem. At this point, we could just increase

the value of uˆ5 and the process will continue.

The above example demonstrates that (1) it is not

always apparent beforehand which approach (i.e.,

dualizing which constraints) is preferable, and (2)

which values of the dual variables will result in quick

convergence. Actually, choosing good values for the

dual variable is more of an art than a science.