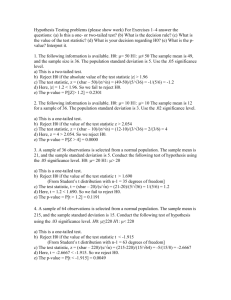


Bootstrap test: an example (SDA-lecture 4)

As an illustration of how the bootstrap can be used to find the distribution of a test statistic and to perform a bootstrap test, we estimate below the distribution of the Shapiro-Wilk test statistic. First we sketch the context.

We wish to investigate the distribution of the data on precipitation in the R-vector dprec.

#Preliminary investigation:

> dprec=climate[[3]]-climate[[4]]
> mean(dprec)
[1] -41.05085
> sd(dprec)
[1] 82.45614
> hist(dprec, prob=TRUE)
> qqnorm(dprec)

The histogram and the normal QQ-plot suggest normality.

Do the data in dprec come from a normal distribution? We investigate this with a test.

H0: the underlying distribution P of the data in dprec is normal

H1: the underlying distribution P of the data in dprec is not normal

#with the Shapiro-Wilk test in R:

> shapiro.test(dprec)

        Shapiro-Wilk normality test

data:  dprec
W = 0.9937, p-value = 0.9904

Is H0 rejected?

(Remember the values W = 0.9937 and p-value = 0.9904; we use them below.)

Now we use the bootstrap to determine the distribution of the S-W statistic

To see what the value 0.9937 of W and the p-value 0.9904 mean, we can estimate the distribution of the Shapiro-Wilk test statistic W under H0 of normality with a bootstrap procedure in R:

> simulate.shapiro = function(x, B=100){   # generates B bootstrap values of the S-W statistic
    n = length(x)
    tstar = numeric(B)
    for (j in 1:B){
      xstar = rnorm(n)                           # sample of the right size under H0
      tstar[j] = shapiro.test(xstar)$statistic   # store the value of the S-W statistic in tstar
    }
    tstar
  }

Does it matter which normal distribution you generate the xstar from?

Now let us generate the bootstrap values:

> tstar1= simulate.shapiro(dprec, B=1000) # generate the bootstrap values

> tstar2= simulate.shapiro(dprec, B=1000) # once more

The empirical distributions of tstar1 and tstar2 are both estimates of the distribution of the Shapiro-Wilk test statistic under H0 of normality.
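One way to inspect such an estimated distribution is sketched below. It is only an illustration: the climate data are not reproduced here, so the sample size n = 50 is an assumption and not the actual length of dprec, and the names tstar and w_obs are chosen for this sketch.

```r
# Sketch: look at the simulated null distribution of the S-W statistic.
# ASSUMPTION: n = 50 is a stand-in; the real length of dprec is not given here.
set.seed(1)
n <- 50
B <- 1000

# B values of the S-W statistic, each computed from an N(0,1) sample of size n
tstar <- replicate(B, unname(shapiro.test(rnorm(n))$statistic))

w_obs <- 0.9937   # observed value reported by shapiro.test(dprec)

# The lower quantiles indicate where the rejection region (small W) begins
print(quantile(tstar, c(0.01, 0.05, 0.10, 0.50, 0.90, 0.99)))

# Histogram of the simulated values with the observed value marked
hist(tstar, prob = TRUE, main = "Simulated S-W statistic under H0", xlab = "W")
abline(v = w_obs, lty = 2)
```

Where the dashed line falls relative to the histogram depends on the true sample size, so the picture for the real data may differ from the one this sketch produces.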

We see: the value w = 0.9937 of W indeed lies on the far right of this distribution, and it is large enough for H0 of normality not to be rejected. (Remember that the S-W test rejects for small values of the test statistic.)

Note: In this case it does not matter which normal distribution you generate the xstar from. The S-W statistic does not change when the sample is shifted or rescaled, so it has the same distribution for all normally distributed samples of a given size. In this sense the test statistic is nonparametric (distribution-free) under the null hypothesis of normality.
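The reason behind this note can be checked directly on a single sample: shifting and rescaling the data should leave the value of W unchanged, up to rounding error. The sketch below assumes an arbitrary sample size of 40 and uses the mean and standard deviation of dprec reported earlier as shift and scale; the names z, x, w_z and w_x are illustrative.

```r
# Sketch: the S-W statistic is unchanged by a shift and a positive rescaling.
set.seed(2)
z <- rnorm(40)                     # sample from N(0,1); size 40 is arbitrary
x <- -41.05085 + 82.45614 * z      # same sample moved to the mean/sd of dprec

w_z <- unname(shapiro.test(z)$statistic)
w_x <- unname(shapiro.test(x)$statistic)

print(c(w_z, w_x))
print(isTRUE(all.equal(w_z, w_x)))   # equality up to numerical tolerance
```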

This can be seen from the 3 pictures below: they show similar estimates of the distribution. The values in tstar1 and tstar2 were simulated with samples from N(0,1) (see the R-code above), whereas the values in tstar3 were simulated with xstar-samples from N(mean(dprec),var(dprec)).
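The code that produced tstar3 is not shown. A possible version is sketched here as a variant of the simulation function that takes the mean and standard deviation as arguments. The function name simulate.shapiro.norm and its arguments mu and sigma are hypothetical, and n = 50 is again an assumed sample size.

```r
# Sketch: simulate the S-W statistic under an arbitrary normal distribution.
# simulate.shapiro.norm, mu and sigma are hypothetical names for this example.
simulate.shapiro.norm <- function(n, B = 1000, mu = 0, sigma = 1) {
  tstar <- numeric(B)
  for (j in 1:B) {
    xstar <- rnorm(n, mean = mu, sd = sigma)    # sample of size n under H0
    tstar[j] <- shapiro.test(xstar)$statistic   # store the S-W statistic
  }
  tstar
}

set.seed(3)
n <- 50   # ASSUMPTION: stand-in for length(dprec)

t_std <- simulate.shapiro.norm(n)                                   # N(0,1)
t_fit <- simulate.shapiro.norm(n, mu = -41.05085, sigma = 82.45614) # fitted normal

# Compare a few quantiles of the two estimated distributions
probs <- c(0.05, 0.25, 0.50, 0.75, 0.95)
print(rbind(N01 = quantile(t_std, probs), fitted = quantile(t_fit, probs)))
```

The two rows should agree up to simulation error, since only B = 1000 values are drawn in each run.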

If R had not provided the p-value of the S-W test, we could have estimated it from the generated bootstrap values. How?

As follows.

Again note that the S-W test rejects for small values of the test statistic, so the p-value is computed as the probability under H0 that the test statistic W is smaller than the observed value w = 0.9937 of W.

> sum(tstar1 < 0.9937)/1000   # estimate of the p-value P0(W < 0.9937)
[1] 0.993
> sum(tstar2 < 0.9937)/1000   # once more, based on another bootstrap sample
[1] 0.99

We see: the p-value 0.9904 provided by shapiro.test in R is well estimated by this approach.
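The p-value estimate can be wrapped in a small helper, sketched below together with a rough Monte Carlo standard error that indicates how much two runs with B = 1000 may differ. The helper name boot.pvalue, the sample size n = 50 and the significance level 0.05 are assumptions made for this sketch.

```r
# Sketch: bootstrap p-value for a test that rejects for small values.
# boot.pvalue is a hypothetical helper name.
boot.pvalue <- function(tstar, w_obs) mean(tstar < w_obs)

set.seed(4)
n <- 50     # ASSUMPTION: stand-in for length(dprec)
B <- 1000
tstar <- replicate(B, unname(shapiro.test(rnorm(n))$statistic))

p_hat  <- boot.pvalue(tstar, 0.9937)          # estimate of P0(W < 0.9937)
se_hat <- sqrt(p_hat * (1 - p_hat) / B)       # binomial standard error of p_hat

alpha  <- 0.05                                # assumed significance level
reject <- p_hat < alpha                       # TRUE means H0 is rejected

print(c(p_hat = p_hat, se = se_hat))
print(reject)
```

Applied to the values above, estimated p-values of 0.993 and 0.99 are far larger than any usual significance level, so a test based on them would not reject H0.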

The estimated p-values 0.993 and 0.99 are the p-values of two bootstrap tests.

Do the bootstrap tests reject H0?