Lecture Notes - Towson University


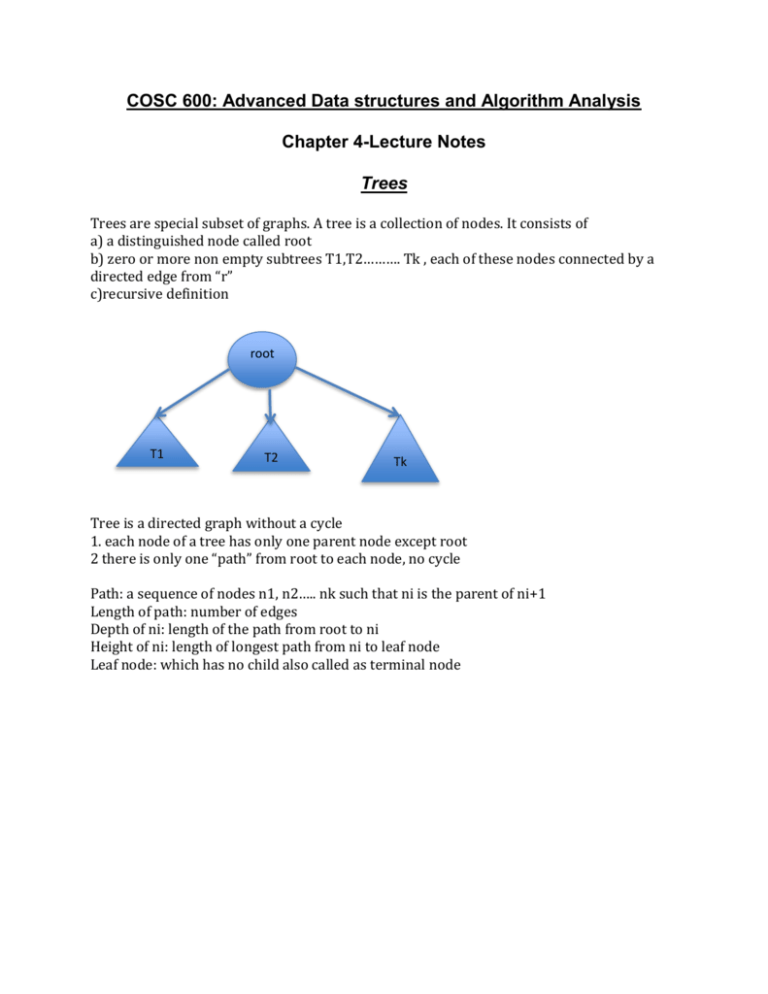

COSC 600: Advanced Data Structures and Algorithm Analysis
Chapter 4 Lecture Notes: Trees

Trees
Trees are a special subset of graphs. A tree is a collection of nodes, defined recursively. It consists of
a) a distinguished node r, called the root, and
b) zero or more nonempty subtrees T1, T2, ..., Tk, each of whose roots is connected by a directed edge from r.
[Figure: root r with subtrees T1, T2, ..., Tk]

A tree is a directed graph without a cycle:
1) each node of a tree has exactly one parent node, except the root;
2) there is exactly one path from the root to each node, so there is no cycle.

Path: a sequence of nodes n1, n2, ..., nk such that ni is the parent of ni+1.
Length of a path: the number of edges on it.
Depth of ni: the length of the path from the root to ni.
Height of ni: the length of the longest path from ni to a leaf node.
Leaf node: a node that has no child, also called a terminal node.

Linked list representation of a tree
Each node stores a value and links to its children nodes. In the linked list implementation, the node layout is: value, child (= root of the first subtree), next sibling.
[Figure: example tree with nodes A to G and its linked list implementation; a slash marks a null link]

Tree search (tree traversal)
1) Depth-first search (DFS): uses a stack
2) Breadth-first search (BFS): uses a queue

Binary tree: a special subset of trees; an ordered tree.
Statement:
1) each son of a vertex is distinguished either as a left son or as a right son;
2) no vertex has more than one left son nor more than one right son.
Equivalently, it is a tree in which no node can have more than two children.
Implementation: the node layout is (left, value, right), a linked structure whose nodes point to their left and right child nodes.
[Figure: example binary tree with nodes A, B, C, E, F; a slash marks a null link]

Note: for N nodes, the average height over all possible binary trees is O(√N).
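The (left, value, right) node layout and the stack-based DFS and queue-based BFS mentioned above can be sketched as follows. This is a minimal illustration, not code from the lecture: the names Node, dfs, and bfs and the small example tree are assumptions chosen for the sketch.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Binary tree node with the (left, value, right) layout."""
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def dfs(root):
    """Depth-first (preorder) visit order, using an explicit stack."""
    order = []
    stack = [root] if root is not None else []
    while stack:
        node = stack.pop()
        order.append(node.value)
        # Push the right child first so the left child is popped first.
        if node.right is not None:
            stack.append(node.right)
        if node.left is not None:
            stack.append(node.left)
    return order


def bfs(root):
    """Breadth-first (level by level) visit order, using a queue."""
    order = []
    queue = deque([root] if root is not None else [])
    while queue:
        node = queue.popleft()
        order.append(node.value)
        if node.left is not None:
            queue.append(node.left)
        if node.right is not None:
            queue.append(node.right)
    return order


# Hypothetical example tree: A has children B and C; B has children D and E.
tree = Node("A", Node("B", Node("D"), Node("E")), Node("C"))
print(dfs(tree))  # ['A', 'B', 'D', 'E', 'C']
print(bfs(tree))  # ['A', 'B', 'C', 'D', 'E']
```

The only difference between the two functions is the container: popping from the end (stack) goes deep first, while popping from the front (queue) finishes one level before starting the next.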
Let F be the number of nodes with two children, H the number of nodes with one child, and L the number of nodes with no children. Then
1) F + H + L = N
2) 2F + H = N - 1 (the number of edges: every node except the root is the child of exactly one node)
Subtracting 2) from 1) gives L - F = 1, that is, F = L - 1.

Traversal methods for a binary tree
1) Preorder: visit + traversal of left subtree + traversal of right subtree
2) Inorder: traversal of left subtree + visit + traversal of right subtree
3) Postorder: traversal of left subtree + traversal of right subtree + visit
Here "visit" means printing the label of the node.

[Figure: binary tree with nodes A to M]
Preorder for the above tree: ABDGJKCEHFILM
Inorder: DJGKBAHECLIMF
Postorder: JKGDBHELMIFCA

Expression tree example:
[Figure: expression tree with root +, whose left subtree is 3 * (2 + 4) and whose right subtree is (8 * 2) / 4]
Postorder: 3 2 4 + * 8 2 * 4 / +
Preorder: + * 3 + 2 4 / * 8 2 4
Inorder: ((3*(2+4))+((8*2)/4))

Problem: give an algorithm to convert postorder to inorder (postfix to infix).
Example: a b + c d e + * *

Algorithm: read the tokens from left to right.
If the token is an operand: create a one-node tree and push it onto a stack.
If the token is an operator: pop two trees T1 and T2 from the stack (T1 first) and form a new tree whose root is the operator and whose left and right children are T2 and T1; push the new tree onto the stack.

Trace for the example (trees written in infix form):
1) The first two symbols are operands, so they are pushed. Stack: a, b
2) Next is the operator +, so a and b are popped and a new tree is formed and pushed. Stack: (a+b)
3) Next c, d, e are read and pushed. Stack: (a+b), c, d, e
4) Next the operator + is read, so the top two trees are merged. Stack: (a+b), c, (d+e)
5) Next the operator * is read, so we pop two trees and form a new tree with * as root. Stack: (a+b), (c*(d+e))
6) Finally the last symbol * is read, the two trees are merged, and the final tree is left on the stack: ((a+b)*(c*(d+e)))

Binary search tree: a subset of binary trees.
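The postfix-to-expression-tree algorithm described earlier in this section can be sketched as below. It is an illustrative sketch under stated assumptions: tokens are separated by spaces, only the binary operators + - * / are supported, and the names ExprNode, postfix_to_tree, infix, and preorder are hypothetical helpers, not part of the lecture.

```python
OPERATORS = {"+", "-", "*", "/"}  # assumed operator set for this sketch


class ExprNode:
    """Expression tree node: operands are leaves, operators are internal."""

    def __init__(self, token, left=None, right=None):
        self.token = token
        self.left = left
        self.right = right


def postfix_to_tree(tokens):
    """Build an expression tree from postfix tokens using a stack of trees."""
    stack = []
    for tok in tokens:
        if tok in OPERATORS:
            t1 = stack.pop()  # popped first: becomes the right child
            t2 = stack.pop()  # popped second: becomes the left child
            stack.append(ExprNode(tok, t2, t1))
        else:
            stack.append(ExprNode(tok))  # one-node tree for an operand
    if len(stack) != 1:
        raise ValueError("malformed postfix expression")
    return stack[0]


def infix(node):
    """Inorder traversal with full parenthesization."""
    if node.left is None and node.right is None:
        return node.token
    return "(" + infix(node.left) + node.token + infix(node.right) + ")"


def preorder(node):
    """Preorder traversal as a list of tokens."""
    if node is None:
        return []
    return [node.token] + preorder(node.left) + preorder(node.right)


tree = postfix_to_tree("a b + c d e + * *".split())
print(infix(tree))  # ((a+b)*(c*(d+e)))

tree2 = postfix_to_tree("3 2 4 + * 8 2 * 4 / +".split())
print(infix(tree2))               # ((3*(2+4))+((8*2)/4))
print(" ".join(preorder(tree2)))  # + * 3 + 2 4 / * 8 2 4
```

Once the tree is on the stack, the infix, prefix, and postfix forms are just the three traversals of the same tree.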
A binary search tree is a binary tree for a set S: a labeled binary tree in which each vertex v is labeled by an element l(v) ∈ S such that
1) for each vertex u in the left subtree of v, l(u) < l(v);
2) for each vertex u in the right subtree of v, l(u) > l(v);
3) for each element a ∈ S, there is exactly one vertex v such that l(v) = a.
In other words, a binary tree is a binary search tree only if left subtree < root < right subtree at every node and no two node values are the same.

Operations: 1) find (search) 2) insert 3) delete 4) findMin/findMax 5) print

Find operation
• Time complexity: O(height of the binary search tree), which is O(N) in the worst case.
• Worst-case example: a tree that degenerates into a chain has height proportional to N, so the order of growth is O(N).
• O(N) is an upper bound no matter how the tree is structured.

Find Max and Find Min
For the Find Max and Find Min operations, the worst case has time complexity O(N).
[Figure: example BST]
• L is the smallest value in this BST.
• I is the largest value in this BST.
• For sorting in ascending order, use the inorder (LVR) method.
• For sorting in descending order, use the reverse inorder (RVL) method.
• Either traversal is O(N).

Traversal and median value
• Inorder traversal can be used to find the median value in the BST.
• It is O(N).
• Using a balanced binary search tree reduces the O(N) worst case of a search to O(log N).
• A full traversal of a BST is O(N), since it visits every node.
• Recursion can be used for the traversal operation.

Insertion operation
Always follow the BST rules while inserting a new node into the tree.
Case 1) The new node always becomes a terminal node (leaf).
Example (2 is the new node): [Figure: BST with keys 5, 7, 4 and the new leaf 2]
Case 2) To find the location of the new node, walk down from the root following the BST rules. In the worst case this takes O(N).
Example (6 is the new node): [Figure: BST with keys 5, 4, 2, 7 and the new leaf 6]
Worst-case time complexity: O(height of the BST).

Delete operation: O(height of the BST), which is O(N) in the worst case
There are three possible cases:
i) Case one: the node to delete has no child (it is a terminal node, a leaf) => just delete it.
ii) Case two: the node to delete has only one child => reconnect the child to the parent of the deleted node.
iii) Case three: the node to delete has two children (two subtrees).
a) Find the smallest node in its right subtree and replace the node to delete with it. Then delete the node that was moved; it has no child or only one child, so case one or case two applies.
b) Alternatively, find the largest node in its left subtree and use it in the same way.

Example: build (construct) a BST for N given elements, 5, 10, 21, 32, 7, by repeated insert operations.
[Figure: BSTs obtained from different insertion orders of 5, 10, 21, 32, 7]
In the worst case the k-th insertion walks past all k - 1 nodes already in the tree, so
T(N) = 0 + 1 + 2 + 3 + ... + (N-1) = N(N-1)/2,
which is O(N^2), not O(N). In the average case an insertion costs O(log N) (this can be the best case too).

The average depth of all nodes in a tree is O(log N), on the assumption that all insertion sequences are equally likely.
Sum of the depths of all nodes ≡ internal path length.

Average case analysis: insert operations applied repeatedly.
[Figure: BSTs built from several insertion orders of the keys 1 to 5]
Randomly generated BST: there are N! insertion sequences.
[Figure: the BSTs produced by the insertion orders of the keys 1, 2, 3]
Binary search tree: Θ(N^2) insert/remove pairs.

Theorem: the expected number of comparisons (≡ depth of the nodes) needed to insert N random elements into an initially empty BST is O(N log N) for N ≥ 1 (on average, roughly).

Balanced Binary Search Tree (AVL Tree)
An AVL tree is a binary search tree with a balance condition. The height of an empty subtree is -1. For every node in the tree, the heights of its left and right subtrees can differ by at most 1. This is the balance condition.

Example: [Figure: tree with keys 5, 8, 2, 1, 7, 4, 3] It is a binary search tree and an AVL tree.
After inserting 10: [Figure: the same tree with 10 added] It is still an AVL tree.
After inserting 9: [Figure: the same tree with 9 added] It is still an AVL tree.
Example: the tree below is not an AVL tree. [Figure: tree with keys 7, 8, 2, 11, 1, 4, 10, 3, 5]

NOTE: Height information is kept for each node. After each insert operation, update the height information of all the nodes on the path from the newly inserted node to the root.
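The effect of insertion order on the shape of a BST, the internal path length, and the AVL balance condition (with the height of an empty subtree taken as -1) can be checked with a short sketch. The names BSTNode, insert, build, height, internal_path_length, and is_avl are assumptions for illustration; the keys are the ones from the build example above.

```python
class BSTNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key following the BST rules; duplicates are ignored."""
    if root is None:
        return BSTNode(key)
    node = root
    while True:
        if key < node.key:
            if node.left is None:
                node.left = BSTNode(key)  # new node is always a leaf
                break
            node = node.left
        elif key > node.key:
            if node.right is None:
                node.right = BSTNode(key)
                break
            node = node.right
        else:
            break  # key already present
    return root


def build(keys):
    """Build a BST by repeated insert operations."""
    root = None
    for key in keys:
        root = insert(root, key)
    return root


def height(node):
    """Height of a subtree; an empty subtree has height -1."""
    if node is None:
        return -1
    return 1 + max(height(node.left), height(node.right))


def internal_path_length(node, depth=0):
    """Sum of the depths of all nodes."""
    if node is None:
        return 0
    return (depth
            + internal_path_length(node.left, depth + 1)
            + internal_path_length(node.right, depth + 1))


def is_avl(node):
    """True if every node's subtree heights differ by at most 1."""
    if node is None:
        return True
    balanced_here = abs(height(node.left) - height(node.right)) <= 1
    return balanced_here and is_avl(node.left) and is_avl(node.right)


given = build([5, 10, 21, 32, 7])      # order used in the notes
chain = build([5, 7, 10, 21, 32])      # sorted input: worst case
bushy = build([10, 5, 21, 7, 32])      # a more favorable order
for name, t in (("given", given), ("chain", chain), ("bushy", bushy)):
    print(name, height(t), internal_path_length(t), is_avl(t))
```

For the sorted input the internal path length is 0 + 1 + 2 + 3 + 4 = 10, which matches the T(N) sum for N = 5. This is_avl recomputes heights at every node for clarity; an AVL implementation stores the height in each node instead.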
NOTE: The minimum number of nodes S(h) in an AVL tree of height h satisfies
S(h) = S(h-1) + S(h-2) + 1, with S(0) = 1 and S(1) = 2,
because the smallest such tree is a root whose two subtrees are minimal AVL trees of heights h-1 and h-2.
S(2) = 4
S(3) = 7
S(4) = 12
S(5) = 20 [S(3) + S(4) + 1]
S(6) = 33 [S(4) + S(5) + 1]
S(7) = 54 [S(5) + S(6) + 1]

All the tree operations are O(log N), except that insertion needs special work called "rotations".

Example: inserting 6.
[Figure: the AVL tree with keys 5, 2, 8, 1, 4, 7, 3 after inserting 6, and the rebalanced tree with keys 5, 7, 2, 1, 4, 6, 8, 3]
Height of each node = max(height of left subtree, height of right subtree) + 1.

NOTE: After one insert operation, only nodes that are on the path from the insertion point to the root might have their balance altered.
=> Follow the path from the inserted node to the root and update the balancing information.

The node that must be rebalanced, that is, the node violating the balancing condition of the AVL tree, is called α (alpha).
CASE 1: an insertion into the left subtree of the left child of α
CASE 2: an insertion into the right subtree of the left child of α
CASE 3: an insertion into the left subtree of the right child of α
CASE 4: an insertion into the right subtree of the right child of α
CASE 1 is symmetric to CASE 4, and CASE 2 is symmetric to CASE 3.
[Figure: sketches of the four cases]

NOTE (single rotation): the new height of the entire subtree is exactly the same as before the insertion.

EXAMPLE
[Figure: AVL tree in which node 6 violates the AVL condition after an insertion, and the AVL tree after rotation]

CASE 1: single rotation [Figure: a node and its left child, with subtrees X, Y, Z, before and after]
CASE 2: double rotation [Figure: three nodes with subtrees A, B, C, D, before and after]
CASE 3: double rotation [Figure: three nodes with subtrees A, B, C, D, before and after]
CASE 4: single rotation [Figure: a node and its right child, with subtrees X, Y, Z, before and after]

EXAMPLE
1) Insert 5
2) Single rotation
3) Single rotation
[Figure: the tree after each step]

SPLAY TREES
* It is a binary search tree, but not a balanced BST.
* Relatively simple.
* Guarantees that any M consecutive tree operations starting from an empty tree take at most O(M log N) time.
* This does not mean O(log N) for a single operation.
* O(log N) "amortized" (on the average) cost per operation.
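Both the AVL rebalancing cases above and the splay operations that follow are built from single rotations, so a compact sketch may help. It assumes a node class with a stored height field and recursive insertion; the names AVLNode, rotate_with_left, rotate_with_right, insert, and min_nodes are hypothetical and not taken from the lecture. The helper min_nodes evaluates the S(h) recurrence.

```python
class AVLNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.height = 0  # a single node has height 0


def h(node):
    """Stored height; an empty subtree has height -1."""
    return -1 if node is None else node.height


def update(node):
    node.height = 1 + max(h(node.left), h(node.right))


def rotate_with_left(k2):
    """Single rotation for case 1: the left child becomes the subtree root."""
    k1 = k2.left
    k2.left = k1.right
    k1.right = k2
    update(k2)
    update(k1)
    return k1


def rotate_with_right(k1):
    """Single rotation for case 4: the right child becomes the subtree root."""
    k2 = k1.right
    k1.right = k2.left
    k2.left = k1
    update(k1)
    update(k2)
    return k2


def insert(node, key):
    """Insert key, then rebalance on the way back up to the root."""
    if node is None:
        return AVLNode(key)
    if key < node.key:
        node.left = insert(node.left, key)
    elif key > node.key:
        node.right = insert(node.right, key)
    else:
        return node  # duplicate keys are ignored
    update(node)
    balance = h(node.left) - h(node.right)
    if balance > 1:  # left side too tall: case 1 or case 2
        if key > node.left.key:  # case 2: double rotation
            node.left = rotate_with_right(node.left)
        return rotate_with_left(node)
    if balance < -1:  # right side too tall: case 3 or case 4
        if key < node.right.key:  # case 3: double rotation
            node.right = rotate_with_left(node.right)
        return rotate_with_right(node)
    return node


def min_nodes(height):
    """S(h) = S(h-1) + S(h-2) + 1 with S(0) = 1 and S(1) = 2."""
    a, b = 1, 2
    if height == 0:
        return a
    for _ in range(height - 1):
        a, b = b, a + b + 1
    return b


root = None
for key in [5, 2, 8, 1, 4, 7, 3, 6]:  # the "inserting 6" example
    root = insert(root, key)
print(root.key, root.right.key, root.right.left.key, root.right.right.key)
print([min_nodes(i) for i in range(8)])
```

In this sketch a double rotation is written as two single rotations, which is one common way to implement cases 2 and 3.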
IDEA
After a node is accessed, it is pushed to the root by a series of AVL rotations.
WHY?
i) It is likely to be accessed again in the near future => locality.
ii) It does not require the maintenance of height/balance information.

METHOD 1
A series of single rotations, bottom up.
[Figure: the accessed node is rotated up to the root one level at a time; subtrees A to F]
EXAMPLE: keys 1, 2, 3, 4, 5, 6, 7
[Figure: the tree after each access]
PROBLEM: another node might end up almost as deep as the accessed node used to be, so a sequence of M operations can still take on the order of M·N time.

METHOD 2
Let X be a (non-root) node on the access path at which we are rotating.
RULE: If the parent of X is the root of the tree => merely rotate X and the root; this is the last rotation along the access path.
Otherwise, X has both a parent (P) and a grandparent (G), and there are two cases.
CASE 1, ZIG-ZAG CASE (X is a right child and P is a left child, or vice versa): double rotation.
[Figure: G, P, X with subtrees A, B, C, D, before and after]
CASE 2, ZIG-ZIG CASE (X and P are both left children or both right children).
[Figure: G, P, X with subtrees A, B, C, D, before and after]

DELETE OPERATION
1) Access the node to be deleted => this pushes the node to the root.
2) Delete it (the root) => two subtrees TL and TR remain.
3) Find the largest element in TL => it becomes the root of TL, without a right child.
4) TR becomes the right subtree of that root.
EXAMPLE: to delete 12.
[Figure: the tree before the deletion, after 12 is accessed, and after 12 is deleted]
After deleting 12, element 10 should be the root.

B-Tree
Main reason why it is needed:
o It reduces the disk access time.
M-ary search trees
o M-way branching: as M increases, the height decreases.
o Each node holds M - 1 keys.
o The M-ary search tree must be kept balanced.
Properties of a B-tree (parameters M and L)
M = number of branches
L = number of records in each terminal node (block of data)
1. Data items are stored at the leaves.
2. Nonleaf nodes store up to M - 1 keys.
a. Key i represents the smallest key in subtree i + 1.
3. The root is either a leaf or has between two and M children.
4. All nonleaf nodes (except the root) have between ⌈M/2⌉ and M children.
5.
All leaves are at the same depth and have between ⌈L/2⌉ and L data items, for some L.

EXAMPLE 1
Assume one block = 8192 bytes (8 KB), each key = 32 bytes, and each link = 4 bytes.
A node of an M-ary B-tree holds M - 1 keys and M links, so
32(M - 1) + 4M <= 8192, that is, 36M <= 8224,
which gives M = 228 (at most).
L = 8192 / record size. In this case the record size is 256 bytes, so L = 8192 / 256 = 32 records per leaf.

EXAMPLE 2
Suppose there are 10 million records.
Each leaf has between 16 and 32 records.
Each internal node (with the exception of the root) has at least 114 branches.
o 10,000,000 / 16 = 625,000 leaves (worst case)
o To calculate the worst-case depth of the B-tree, use logarithms: approximately log_114 10,000,000.
o The actual data is stored in the leaf nodes.
o Time complexity is measured by the height of the B-tree.
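The arithmetic in Examples 1 and 2 can be reproduced with a few lines. This is only a worked check of the numbers above; the constant and variable names are assumptions made for the sketch.

```python
import math

BLOCK_BYTES = 8192   # one disk block
KEY_BYTES = 32       # size of one key
LINK_BYTES = 4       # size of one link (branch pointer)
RECORD_BYTES = 256   # size of one data record

# Largest M with KEY_BYTES * (M - 1) + LINK_BYTES * M <= BLOCK_BYTES.
M = (BLOCK_BYTES + KEY_BYTES) // (KEY_BYTES + LINK_BYTES)
# Records that fit in one leaf block.
L = BLOCK_BYTES // RECORD_BYTES

records = 10_000_000
min_records_per_leaf = math.ceil(L / 2)  # leaves are at least half full
min_branches = math.ceil(M / 2)          # internal nodes are at least half full
worst_case_leaves = records // min_records_per_leaf

# Rough worst-case depth estimate used in the notes: log base 114 of N.
depth_estimate = math.log(records, min_branches)

print(M, L)                                   # 228 32
print(min_records_per_leaf, min_branches)     # 16 114
print(worst_case_leaves)                      # 625000
print(round(depth_estimate, 2))
```

The logarithm comes out between 3 and 4, so only a handful of block reads are needed to reach any of the 10 million records even in the worst case.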