peps_1156_LR - The Department of Psychology

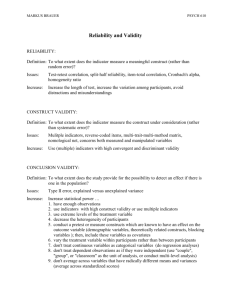

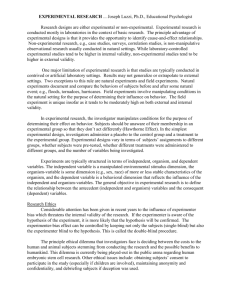
