First we run a proc corr to obtain the correlations between the

advertisement

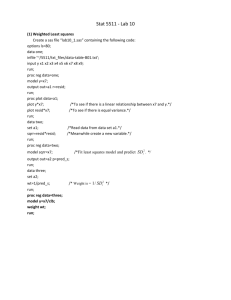

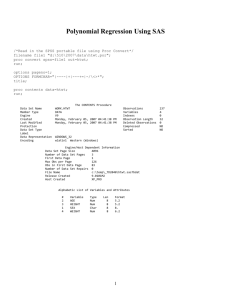

First we run a proc corr to obtain the correlations between the independent variables. This is useful for identifying variables that could cause problems with multicolinearity. We see that the variable pairs, (x1, x2), (x1, x11), and (x3, x8) are all highly correlated, r > .90. This calls into question keeping these variables all in the model. To determine if these should be removed from the model, we look at the variance inflation factor. Variance inflation factors > 30 should be removed. We first see that x11 has the highest VIF at 6793.01987. So we remove x11 from the model and re-run the model looking at the VIF for the remaining variables. proc reg; model y=x1-x10/ vif; run; quit; X3 now stands out with a VIF of 260.91318, so it is dropped from the model and the model is re-run. proc reg; model y=x1-x2 x4-x10/ vif; run; quit; Now, no more variables are left with VIF > 30. So our model will include x1, x2, and x4-x10 for possible inclusion. We now turn to looking for outliers. This can be done with the influence statement. proc reg; model y=x1-x2 x4-x10/ influence vif; output out=leverage h=lev; run; quit; There are several methods for determining outliers, but concentrate on using a combination of the studentized deleted residuals (RSTUDENT) and DFFITS. We will consider a case an outlier if RSTUDENT value is large relative to the others and DFFITS exceeds or is equal to 1. proc reg; model y=x1-x2 x4-x10/ influence vif; output out=leverage; run; quit; We see that observatiosn 25 and 12 immediately stand out with large RSTUDENT values and may be considered for exclusion. However, we verify this with DFFITS. DFFITS value for observations 12 and 25 are large, so we remove these cases from the regression model: proc reg; model y=x1-x2 x4-x10/ influence vif; output out=leverage h=lev; where obs not in(12, 25); /*this deletes 12 and 25*/ run; quit; We see that observatiosn 25 and 12 immediately stand out with the use of proc rsquare and producing the adjusted r-square, CP, and MSE statistics: PROC RSQUARE ; MODEL y=x1-x2 x4-x10 /ADJRSQ CP MSE; run; A good model is parsimonious and has a small Cp value and close to the number of variables in the model, a high adjusted R-square, and a small MSE. Using this criteria, we find that models that include the following variables are good candidates: x1 x2 x5 x6 x7 x8 x9 x10 x1 x2 x5 x7 x8 x9 x10 x1 x2 x4 x5 x6 x7 x8 x10 We obtain the PRESS statistic for these models and the full model now and output the press statistics to datasets: proc reg; model y=x1-x11/ press; where obs not in(12, 25); output out=press_modelfull press=press1; run; quit; proc reg; model y=x1 x2 x5 x6 x7 x8 x9 x10/ press; where obs not in(12, 25); output out=press_model1 press=press; run; quit; proc reg; model y=x1 x2 x5 x7 x8 x9 x10/ press; where obs not in(12, 25); output out=press_model2 press=press; run; quit; proc reg; model y=x1 x2 x4 x5 x6 x7 x8 x10/ press; where obs not in(12, 25); output out=press_model3 press=press; run; quit; All of the press values for all models are now in the press_model3 dataset. We need to square the values and take the sum of these values. The model with the lowest PRESS will be selected: data press_model3; set press_model3; press=press*press; press1=press1*press1; press2=press2*press2; press3=press3*press3; run; proc means data=press_model3 sum; var press press1-press3; run; We see from the proc means output that, excluding the full model (which we have done away with due to high multicolinearity), the best model is model 2, as its PRESS is the lowest at 14.1323646. So the best model includes the terms: x1 x2 x5 x7 x8 x9 x10 To find the equation of the model, we use look at the parameter estimates that proc reg produces: proc reg; model y=x1 x2 x5 x7 x8 x9 x10 ; where obs not in(12, 25); run; quit; We see that the final equation is: Y= 8.14898+(X1)1.18676-(X2)5.23959+(X5)0.18932-(X7)0.07784-(X8)0.01253(X9)0.24136+(X10)0.00135