COM181_cw1_answers_2k14_UUJ

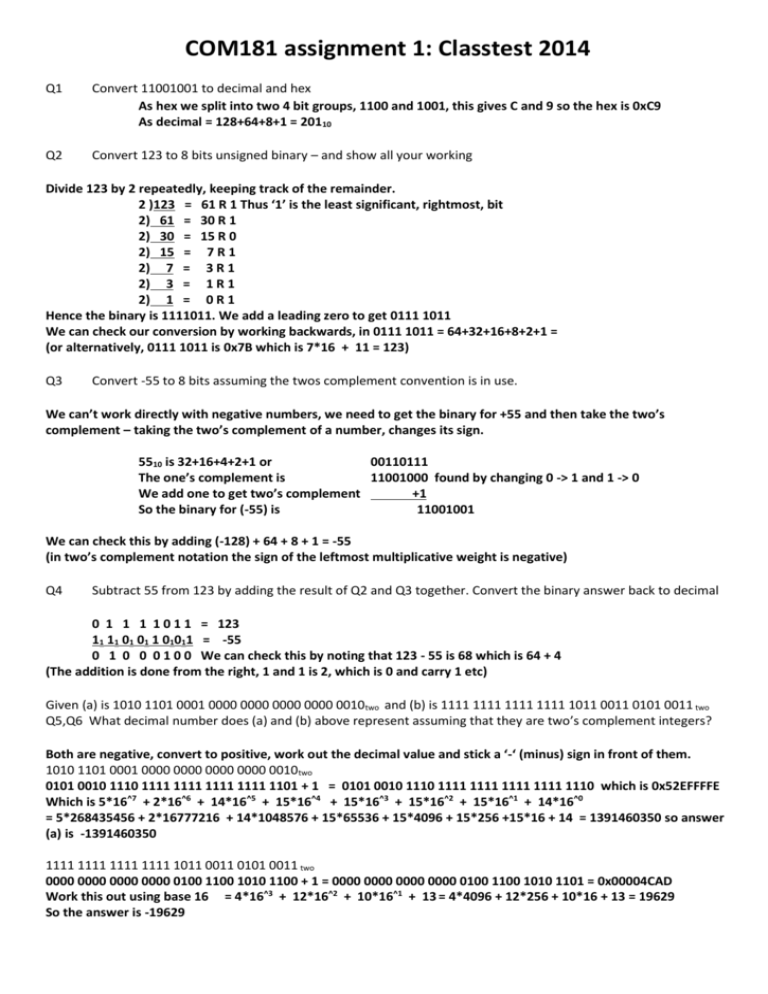

COM181 assignment 1: Classtest 2014

Q1 Convert 11001001 to decimal and hex.

As hex, we split the byte into two 4-bit groups, 1100 and 1001. These give C and 9, so the hex is 0xC9. As decimal, 128 + 64 + 8 + 1 = 201₁₀.

Q2 Convert 123 to 8 bits unsigned binary, and show all your working.

Divide 123 by 2 repeatedly, keeping track of the remainder.

2) 123 = 61 R 1 (this '1' is the least significant, rightmost, bit)
2) 61 = 30 R 1
2) 30 = 15 R 0
2) 15 = 7 R 1
2) 7 = 3 R 1
2) 3 = 1 R 1
2) 1 = 0 R 1

Reading the remainders from the last to the first, the binary is 1111011. We add a leading zero to get 0111 1011.

We can check our conversion by working backwards: 0111 1011 = 64 + 32 + 16 + 8 + 2 + 1 = 123 (or alternatively, 0111 1011 is 0x7B, which is 7*16 + 11 = 123).

Q3 Convert -55 to 8 bits assuming the two's complement convention is in use.

We can't work directly with negative numbers. We need to get the binary for +55 and then take the two's complement; taking the two's complement of a number changes its sign.

55₁₀ is 32 + 16 + 4 + 2 + 1, or 0011 0111.
The one's complement is 1100 1000, found by changing 0 -> 1 and 1 -> 0.
We add one to get the two's complement: 1100 1000 + 1 = 1100 1001.

So the binary for -55 is 1100 1001. We can check this by adding (-128) + 64 + 8 + 1 = -55 (in two's complement notation the weight of the leftmost bit is negative).

Q4 Subtract 55 from 123 by adding the results of Q2 and Q3 together. Convert the binary answer back to decimal.

  0111 1011 = 123
+ 1100 1001 = -55
= 0100 0100 = 68 (the carry out of the leftmost bit is discarded)

The addition is done from the right: 1 and 1 is 2, which is 0 carry 1, and so on. We can check this by noting that 123 - 55 is 68, which is 64 + 4.

Given (a) is 1010 1101 0001 0000 0000 0000 0000 0010 two and (b) is 1111 1111 1111 1111 1011 0011 0101 0011 two

Q5, Q6 What decimal numbers do (a) and (b) above represent, assuming that they are two's complement integers?

Both are negative (the leftmost bit is 1), so convert each to its positive value, work out the decimal value and put a '-' (minus) sign in front of it.
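The two hand methods used so far, repeated division and invert-then-add-one, can be checked with a short Python sketch. The function names `to_base`, `to_twos_complement` and `from_twos_complement` are made up for this illustration and are not part of the test.

```python
def to_base(n: int, base: int) -> str:
    """Convert a non-negative integer by repeated division, as in Q2."""
    digits = "0123456789ABCDEF"
    out = ""
    while n > 0:
        n, remainder = divmod(n, base)
        out = digits[remainder] + out  # the first remainder ends up rightmost
    return out or "0"


def to_twos_complement(n: int, width: int) -> str:
    """Return the width-bit two's complement pattern of n as a bit string."""
    return format(n & ((1 << width) - 1), f"0{width}b")


def from_twos_complement(bits: str) -> int:
    """Decode a bit string: if the leftmost bit is 1, invert, add one, negate."""
    bits = bits.replace(" ", "")
    mask = (1 << len(bits)) - 1
    value = int(bits, 2)
    if bits[0] == "0":
        return value
    magnitude = ((value ^ mask) + 1) & mask  # one's complement, then add one
    return -magnitude


a = "1010 1101 0001 0000 0000 0000 0000 0010"
b = "1111 1111 1111 1111 1011 0011 0101 0011"

print(to_base(123, 2))                        # Q2, before the leading zero
print(to_twos_complement(-55, 8))             # Q3
print(to_twos_complement(123 + (-55), 8))     # Q4
print(from_twos_complement(a))                # Q5
print(from_twos_complement(b))                # Q6
```

Running it should reproduce the hand-worked values for Q2 to Q6, which makes it a convenient cross-check when practising similar conversions.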
(a) 1010 1101 0001 0000 0000 0000 0000 0010 two
Invert: 0101 0010 1110 1111 1111 1111 1111 1101
Add 1: 0101 0010 1110 1111 1111 1111 1111 1110, which is 0x52EFFFFE

This is 5*16^7 + 2*16^6 + 14*16^5 + 15*16^4 + 15*16^3 + 15*16^2 + 15*16^1 + 14*16^0 = 5*268435456 + 2*16777216 + 14*1048576 + 15*65536 + 15*4096 + 15*256 + 15*16 + 14 = 1391460350, so answer (a) is -1391460350.

(b) 1111 1111 1111 1111 1011 0011 0101 0011 two
Invert: 0000 0000 0000 0000 0100 1100 1010 1100
Add 1: 0000 0000 0000 0000 0100 1100 1010 1101 = 0x00004CAD

Working this out using base 16: 4*16^3 + 12*16^2 + 10*16^1 + 13 = 4*4096 + 12*256 + 10*16 + 13 = 19629, so answer (b) is -19629.

Q7, Q8 What decimal numbers do (a) and (b) above represent, assuming that they are unsigned integers?

With such big numbers it makes sense to use base 16 arithmetic (this avoids adding up 32 separate powers of two).

1010 1101 0001 0000 0000 0000 0000 0010 is 0xAD100002, which is 10*16^7 + 13*16^6 + 1*16^5 + 0*16^4 + 0*16^3 + 0*16^2 + 0*16^1 + 2*16^0 = 10*268435456 + 13*16777216 + 1048576 + 2 = 2,903,506,946.

1111 1111 1111 1111 1011 0011 0101 0011 is 0xFFFFB353, i.e. 15*16^7 + 15*16^6 + 15*16^5 + 15*16^4 + 11*16^3 + 3*16^2 + 5*16^1 + 3*16^0 = 4026531840 + 251658240 + 15728640 + 983040 + 45056 + 768 + 80 + 3 = 4,294,947,667.

Q9, Q10 Give the hexadecimal representation of (a) and (b) above.

0xAD100002 and 0xFFFFB353.

Given (c) 7FFFFFFF₁₆ and (d) 1000 ten

Q11, Q12 Convert (c) and (d) above to 32 bits of binary.

(c) is 0111 1111 1111 1111 1111 1111 1111 1111.

For 1000 ten we have to convert to binary: 1000 = 512 + 256 + 128 + 64 + 32 + 8, which gives 0000 0000 0000 0000 0000 0011 1110 1000 (0x000003E8).

Q13 Give the result in binary after performing an OR of (a) and (b) together.

The working for Q13 to Q15 uses the two 32-bit values from Q11 and Q12.

   0111 1111 1111 1111 1111 1111 1111 1111
OR 0000 0000 0000 0000 0000 0011 1110 1000
 = 0111 1111 1111 1111 1111 1111 1111 1111

Q14 Give the result in binary after performing an AND of (a) and (b) together.

    0111 1111 1111 1111 1111 1111 1111 1111
AND 0000 0000 0000 0000 0000 0011 1110 1000
  = 0000 0000 0000 0000 0000 0011 1110 1000

Q15 Give the result in binary after performing an ADD of (a) and (b) together.

  0111 1111 1111 1111 1111 1111 1111 1111
+ 0000 0000 0000 0000 0000 0011 1110 1000
= 1000 0000 0000 0000 0000 0011 1110 0111

This is 0x800003E7 (confirmed by Windows calculator).

Q16 Convert 1234567 to hex by dividing by 16, show your working.

16) 1234567 = 77160 remainder 7 (this is the rightmost, least significant hex digit)
16) 77160 = 4822 remainder 8
16) 4822 = 301 remainder 6
16) 301 = 18 remainder 13 (remember 13 is D in hex)
16) 18 = 1 remainder 2
16) 1 = 0 remainder 1

Hence the hex is 0x12D687 (confirmed by Windows calculator).

Q17 Hence give the binary for Q16.

0x12D687 is 0001 0010 1101 0110 1000 0111.

Q18 Give the binary representation for -1234567 assuming 32 bit two's complement is used.

We need to add leading zeros to the binary answer in Q17 and then invert and add one to change its sign.

+1234567: 0000 0000 0001 0010 1101 0110 1000 0111
Invert: 1111 1111 1110 1101 0010 1001 0111 1000
Add 1: 1111 1111 1110 1101 0010 1001 0111 1001

Assuming the IEEE 754 format for 32 bit floating point numbers is to be used, answer Q19 and Q20 below. IEEE 754 has a mantissa sign bit in b31, an 8 bit exponent in b30 to b23 (biased by adding 127 to the raw value) and a 24 bit number stored in the remaining 23 bits: the number is normalised to 1.xxxx and the leading bit is discarded.

Q19 Convert 1956 to 32 bits of floating point, show your working.

1956 = 111 1010 0100. As a normalised 24 bit number this is 1.11 1010 0100 0000000000000, which is stored without the leading '1'. We need to shift 1.11 1010 0100 left by ten bits to get the original number 111 1010 0100, so the exponent is 10. We add 127 to 10 to get 137 and convert this to binary as 10001001 (128 + 8 + 1).

Hence the floating point number is 0 10001001 11101001000000000000000.

Q20 Convert 0x42280000 to binary and hence to a floating point number.

0x42280000 = 0100 0010 0010 1000 0000 0000 0000 0000. Read as a 32 bit integer, this is normalised as 1.00001000101 and needs to be shifted left 30 bits to get the number above. Hence the exponent is 30. The number is positive, so the sign bit (bit 31, on the left) will be '0'. The exponent has to have 127 added to it, so the 30 becomes 157; as unsigned binary this is 128 + 16 + 8 + 4 + 1 = 10011101.

The floating point number is 0 10011101 00001000101000000000000.

Confirmed using http://www.hschmidt.net/FloatConverter/IEEE754.html
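The encoding steps used in Q19 and Q20 (normalise, bias the exponent by 127, drop the leading 1) can be sketched in Python and compared against the standard `struct` module. The helper names `encode_float32` and `library_fields` are invented for this illustration, and the sketch only handles positive integers that fit exactly in 24 significant bits.

```python
import struct


def encode_float32(n: int) -> str:
    """Hand-encode a positive integer as 'sign exponent fraction' bit fields."""
    if n <= 0:
        raise ValueError("this sketch only handles positive integers")
    bits = format(n, "b")          # binary digits, leading 1 first
    exponent = len(bits) - 1       # places the binary point must move
    fraction, dropped = bits[1:24], bits[24:]
    if "1" in dropped:
        raise ValueError("value needs rounding, which this sketch does not do")
    fraction = fraction.ljust(23, "0")   # leading 1 is implied, pad to 23 bits
    return f"0 {exponent + 127:08b} {fraction}"


def library_fields(x: float) -> str:
    """Same three fields, taken from the bits that struct packs for x."""
    (word,) = struct.unpack(">I", struct.pack(">f", x))
    s = format(word, "032b")
    return f"{s[0]} {s[1:9]} {s[9:]}"


print(encode_float32(1956))               # Q19
print(library_fields(1956.0))             # should match the line above
print(encode_float32(0x42280000))         # Q20, pattern treated as an integer
print(library_fields(float(0x42280000)))  # should match the line above

# A different reading of Q20: treat 0x42280000 itself as an IEEE 754 word.
(as_float,) = struct.unpack(">f", struct.pack(">I", 0x42280000))
print(as_float)
```

The first four lines follow the working in this answer sheet, where 0x42280000 is treated as an integer to be encoded. The final line shows the other possible reading of the question, in which the 32 bits are already an IEEE 754 word; under that reading the pattern decodes to 42.0.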