

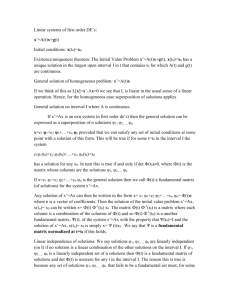

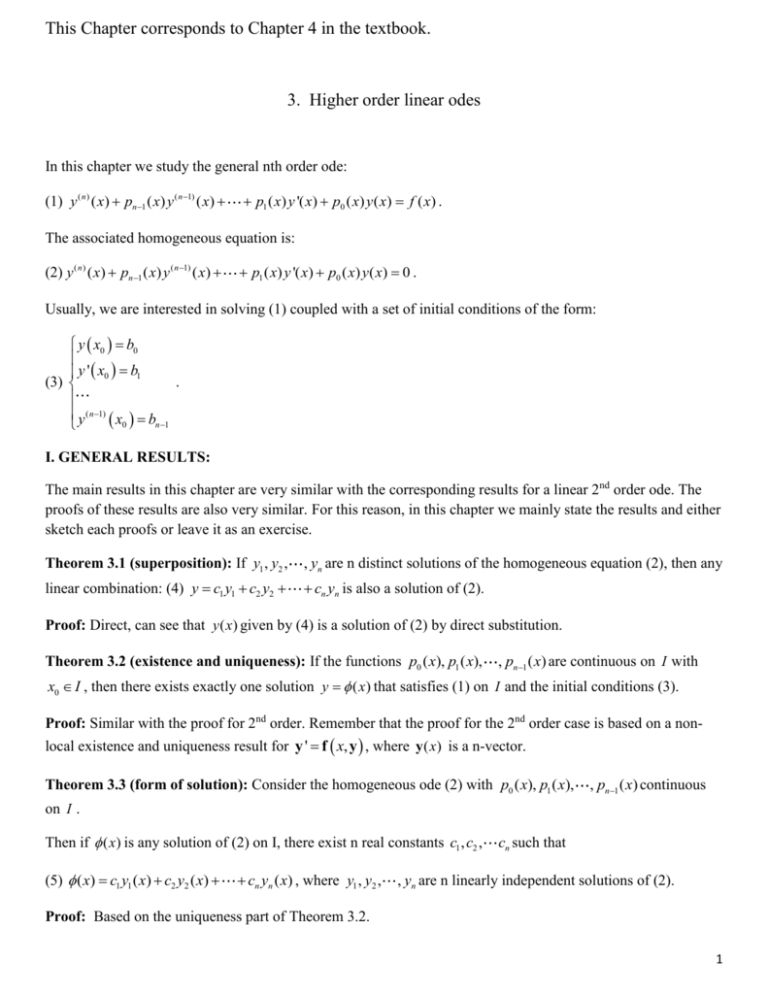

This chapter corresponds to Chapter 4 in the textbook.

3. Higher order linear odes

In this chapter we study the general nth order ode:

$$y^{(n)}(x) + p_{n-1}(x)\,y^{(n-1)}(x) + \cdots + p_1(x)\,y'(x) + p_0(x)\,y(x) = f(x). \tag{1}$$

The associated homogeneous equation is:

$$y^{(n)}(x) + p_{n-1}(x)\,y^{(n-1)}(x) + \cdots + p_1(x)\,y'(x) + p_0(x)\,y(x) = 0. \tag{2}$$

Usually, we are interested in solving (1) together with a set of initial conditions of the form:

$$y(x_0) = b_0, \quad y'(x_0) = b_1, \quad \ldots, \quad y^{(n-1)}(x_0) = b_{n-1}. \tag{3}$$

I. GENERAL RESULTS:

The main results in this chapter are very similar to the corresponding results for a linear 2nd order ode, and so are their proofs. For this reason, in this chapter we mainly state the results and either sketch each proof or leave it as an exercise.

Theorem 3.1 (superposition): If $y_1, y_2, \ldots, y_n$ are n distinct solutions of the homogeneous equation (2), then any linear combination

$$y = c_1 y_1 + c_2 y_2 + \cdots + c_n y_n \tag{4}$$

is also a solution of (2).

Proof: Substitute $y(x)$ given by (4) directly into (2).

Theorem 3.2 (existence and uniqueness): If the functions $p_0(x), p_1(x), \ldots, p_{n-1}(x)$ and $f(x)$ are continuous on an interval $I$ with $x_0 \in I$, then there exists exactly one solution $y(x)$ that satisfies (1) on $I$ and the initial conditions (3).

Proof: Similar to the proof for the 2nd order case. Remember that the proof for the 2nd order case is based on a nonlocal existence and uniqueness result for the first order system $y' = f(x, y)$, where $y(x)$ is an n-vector.

Theorem 3.3 (form of solution): Consider the homogeneous ode (2) with $p_0(x), p_1(x), \ldots, p_{n-1}(x)$ continuous on $I$, and let $y_1, y_2, \ldots, y_n$ be n linearly independent solutions of (2). Then if $\phi(x)$ is any solution of (2) on $I$, there exist n real constants $c_1, c_2, \ldots, c_n$ such that

$$\phi(x) = c_1 y_1(x) + c_2 y_2(x) + \cdots + c_n y_n(x). \tag{5}$$

Proof: Based on the uniqueness part of Theorem 3.2.
Theorem 3.3 states that in order to find the general solution of the homogeneous equation (2), we need only find n linearly independent particular solutions of (2). Theorems 3.2 and 3.3 are very strong results which guide our methods of finding solutions of (1) and (2).

Example 1: Problems 1-6 / Section 4.1 (Boyce and DiPrima).

II. LINEAR INDEPENDENCE AND THE WRONSKIAN:

A. Linear independence of n arbitrary functions:

Definition 3.1: Let $f_1(x), f_2(x), \ldots, f_n(x)$ be any n functions that are (n-1) times differentiable on $I$. Then:

$f_1(x), f_2(x), \ldots, f_n(x)$ are called linearly dependent on $I$ if there exist n constants $k_1, k_2, \ldots, k_n$, not all zero, such that

$$k_1 f_1(x) + k_2 f_2(x) + \cdots + k_n f_n(x) = 0 \quad \text{on } I \tag{6}$$

(that is, at least one function is a linear combination of the other n-1 functions);

$f_1(x), f_2(x), \ldots, f_n(x)$ are called linearly independent on $I$ if the property above does not hold, that is, if (6) implies that $k_1 = k_2 = \cdots = k_n = 0$.

Example 2: Problems 7, 8 / Section 4.1 (Boyce and DiPrima).

Definition 3.2: For n arbitrary (n-1) times differentiable functions $f_1(x), f_2(x), \ldots, f_n(x)$ on $I$, we define their Wronskian as the determinant:

$$W(f_1, f_2, \ldots, f_n)(x) = \begin{vmatrix} f_1(x) & f_2(x) & \cdots & f_n(x) \\ f_1'(x) & f_2'(x) & \cdots & f_n'(x) \\ \vdots & \vdots & & \vdots \\ f_1^{(n-1)}(x) & f_2^{(n-1)}(x) & \cdots & f_n^{(n-1)}(x) \end{vmatrix}. \tag{7}$$

Theorem 3.4: If n arbitrary (n-1) times differentiable functions $f_1(x), f_2(x), \ldots, f_n(x)$ are linearly dependent on $I$, then $W(f_1, f_2, \ldots, f_n)(x) = 0$ on $I$.

Proof: Use Definition 3.1 and the linear algebra result which states that the determinant of a matrix with linearly dependent columns is 0.

Note: We most often use the contrapositive of Theorem 3.4, which is: If there is a point $x_0 \in I$ such that $W(f_1, f_2, \ldots, f_n)(x_0) \neq 0$, then $f_1(x), f_2(x), \ldots, f_n(x)$ are linearly independent on $I$. This gives an easy test for the linear independence of functions.
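The Wronskian test in the note above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the functions are taken to be polynomials stored as coefficient lists (a convention chosen here, not one from the textbook), and the helper names `det`, `poly_derivative`, `poly_eval` and `wronskian` are hypothetical.

```python
from fractions import Fraction

def det(m):
    """Determinant by Laplace expansion along the first row (fine for small n)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def poly_derivative(coeffs):
    """Derivative of c0 + c1*x + c2*x^2 + ... given as [c0, c1, c2, ...]."""
    return [k * c for k, c in enumerate(coeffs)][1:] or [0]

def poly_eval(coeffs, x):
    """Value of the polynomial [c0, c1, ...] at the point x."""
    return sum(c * x ** k for k, c in enumerate(coeffs))

def wronskian(polys, x0):
    """W(f1, ..., fn)(x0): row k of the matrix holds the k-th derivatives at x0."""
    current = [list(p) for p in polys]
    rows = []
    for _ in range(len(polys)):
        rows.append([poly_eval(p, x0) for p in current])
        current = [poly_derivative(p) for p in current]
    return det(rows)

# 1, x, x^2: the Wronskian is nonzero at x0 = 0, so the functions are independent.
w_independent = wronskian([[1], [0, 1], [0, 0, 1]], Fraction(0))
# 1, x, 2 + 3x: the third function is a combination of the first two, so W = 0.
w_dependent = wronskian([[1], [0, 1], [2, 3]], Fraction(5))
print(w_independent, w_dependent)
```

A single point with a nonzero Wronskian is enough to conclude independence, which is why the sketch evaluates the determinant at one chosen point rather than symbolically.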
If, however, $W(f_1, f_2, \ldots, f_n)(x) = 0$ on $I$ for arbitrary functions, we cannot conclude anything about the linear independence of $f_1(x), f_2(x), \ldots, f_n(x)$.

Example 3: Problems 9, 10 / Section 4.1 (Boyce and DiPrima).

B. Linear independence of solutions of (2):

Theorem 3.5 (linear independence and the Wronskian): Consider the homogeneous nth order ode (2) with $p_0(x), p_1(x), \ldots, p_{n-1}(x)$ continuous on $I$ and let $y_1, y_2, \ldots, y_n$ be n arbitrary solutions of (2). Then $y_1, y_2, \ldots, y_n$ are linearly dependent on $I$ if and only if $W(y_1, y_2, \ldots, y_n)(x) = 0$ on $I$. Stated another way: $y_1, y_2, \ldots, y_n$ are linearly independent on $I$ if and only if there exists an $x_0 \in I$ such that $W(y_1, y_2, \ldots, y_n)(x_0) \neq 0$.

Proof: One direction is Theorem 3.4. The converse (that is, $W(y_1, y_2, \ldots, y_n)(x_0) = 0$ for some $x_0 \in I$ implies that $y_1, y_2, \ldots, y_n$ are linearly dependent on $I$) relies on the existence and uniqueness Theorem 3.2.

Example 4: Problems 11 to 26 in Section 4.1 (Boyce and DiPrima). Similar problems in Sections 2.1 and 2.2 in our textbook.

III. nTH ORDER HOMOGENEOUS LINEAR DIFFERENTIAL EQUATIONS WITH CONSTANT COEFFICIENTS:

As a simpler case of the theory presented in I and II, consider now the nth order homogeneous linear equation with constant coefficients:

$$L[y] = a_n y^{(n)} + a_{n-1} y^{(n-1)} + \cdots + a_0 y = 0, \tag{8}$$

where $a_0, a_1, \ldots, a_n$ are real constants. In analogy with the second order case, we look for solutions of (8) of the form

$$y = e^{rx} \tag{9}$$

for suitable values of the constant $r$. Substituting (9) in (8) and dividing by $e^{rx} \neq 0$, we find:

$$Z(r) = a_n r^n + a_{n-1} r^{n-1} + \cdots + a_0 = 0. \tag{10}$$

We know that any polynomial equation of degree n of the form (10) has n roots $r_1, r_2, \ldots, r_n$ in the complex plane, counted with multiplicity. Some of these roots may be complex and/or repeated. Clearly, for each of these roots $r_i$, $y = e^{r_i x}$ is a solution of (8). The polynomial $Z(r)$ in (10) is called the characteristic polynomial of (8), and (10) is called the characteristic equation associated with (8).

A. Real and distinct roots:

Suppose that all roots $r_1, r_2, \ldots, r_n$ of (10) are real and distinct.
In that case we obtain n distinct solutions $y_1(x) = e^{r_1 x}, y_2(x) = e^{r_2 x}, \ldots, y_n(x) = e^{r_n x}$ of the differential equation (8).

Exercise 5: Calculate $W(y_1, y_2, \ldots, y_n)(x)$ for these $y_1, y_2, \ldots, y_n$ to show that they are linearly independent.

Example 6: Find the general solution of $y^{(4)} + y''' - 7y'' - y' + 6y = 0$. Also, find the solution that satisfies the initial conditions $y(0) = 1$, $y'(0) = 0$, $y''(0) = -2$, $y'''(0) = -1$. See also Example 1 / Page 122 in our textbook.

As these examples illustrate, finding a general or particular solution of a linear nth order ode (8) depends on finding the roots of a polynomial of degree n. If initial conditions are prescribed, then a system of n algebraic equations must also be solved. These tasks can be done by hand (if simple enough), or with a calculator or a computer algebra system such as Mathematica (if more complicated).

B. Complex roots:

If (10) has complex roots, then they must occur in conjugate pairs $\lambda \pm i\mu$, since the coefficients $a_i$ are real numbers. Provided none of the roots are repeated, the general solution is still of the form:

$$y = c_1 e^{r_1 x} + c_2 e^{r_2 x} + \cdots + c_n e^{r_n x}. \tag{11}$$

However, as for second order equations, for convenience we replace each pair of complex valued solutions $e^{(\lambda + i\mu)x}$ and $e^{(\lambda - i\mu)x}$ by $e^{\lambda x}\cos \mu x$ and $e^{\lambda x}\sin \mu x$, which are two real valued linearly independent solutions of (8).

Example 7: Find the general solution of $y^{(4)} - y = 0$. Also Example 5 / Page 128 in our textbook.

C. Repeated roots:

If some of the roots of (10) are repeated, then (11) is not the general solution of (8). Recall that if $r_1$ is a repeated root of the characteristic polynomial for

$$a y'' + b y' + c y = 0, \tag{12}$$

then two linearly independent solutions of (12) are $e^{r_1 x}$ and $x e^{r_1 x}$.

For the more general equation (8), if a real root $r_1$ of (10) has multiplicity $s$, then $e^{r_1 x}, x e^{r_1 x}, \ldots, x^{s-1} e^{r_1 x}$ is a set of $s$ linearly independent solutions of (8).
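For real roots, the passage from the characteristic equation (10) to a set of solutions of (8) can be sketched as follows. This is a minimal illustration, not a general root finder: it assumes integer coefficients and only detects rational roots (via the rational root test and synthetic division), and the function names `rational_roots` and `basis_solutions` are hypothetical.

```python
from fractions import Fraction

def horner(coeffs, r):
    """Evaluate a_n r^n + ... + a_0 for coeffs = [a_n, ..., a_0]."""
    value = Fraction(0)
    for a in coeffs:
        value = value * r + a
    return value

def deflate(coeffs, r):
    """Divide the polynomial by (r' - r) by synthetic division; r must be a root."""
    out = [coeffs[0]]
    for a in coeffs[1:-1]:
        out.append(out[-1] * r + a)
    return out

def divisors(m):
    m = abs(m)
    return [d for d in range(1, m + 1) if m % d == 0]

def rational_roots(int_coeffs):
    """Return {root: multiplicity} for the rational roots of an integer polynomial."""
    coeffs = [Fraction(a) for a in int_coeffs]
    roots = {}
    # Each trailing zero coefficient corresponds to one factor r, i.e. the root 0.
    while len(coeffs) > 1 and coeffs[-1] == 0:
        roots[Fraction(0)] = roots.get(Fraction(0), 0) + 1
        coeffs = coeffs[:-1]
    # Rational root test: candidates are +-p/q with p | a_0 and q | a_n.
    candidates = {Fraction(sign * p, q)
                  for p in divisors(int(coeffs[-1]))
                  for q in divisors(int(coeffs[0]))
                  for sign in (1, -1)}
    for r in sorted(candidates):
        while len(coeffs) > 1 and horner(coeffs, r) == 0:
            roots[r] = roots.get(r, 0) + 1
            coeffs = deflate(coeffs, r)
    return roots

def basis_solutions(roots):
    """A root r of multiplicity s contributes exp(r x), x exp(r x), ..., x^(s-1) exp(r x)."""
    labels = []
    for r, s in sorted(roots.items()):
        for k in range(s):
            power = "" if k == 0 else ("x*" if k == 1 else f"x**{k}*")
            labels.append(f"{power}exp({r}*x)")
    return labels

# Characteristic polynomial r^4 + r^3 - 7r^2 - r + 6 (four distinct real roots).
print(basis_solutions(rational_roots([1, 1, -7, -1, 6])))
# Characteristic polynomial r^3 - 3r^2 + 3r - 1 = (r - 1)^3 (one triple root).
print(basis_solutions(rational_roots([1, -3, 3, -1])))
```

Irrational or complex roots are silently missed by this sketch; for those, a numerical root finder or a computer algebra system is the appropriate tool.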
If a complex root $\lambda + i\mu$ is repeated $s$ times as a root of (10), then its complex conjugate $\lambda - i\mu$ is also repeated $s$ times. Corresponding to these $2s$ complex valued solutions, we find the following $2s$ real valued solutions:

$$e^{\lambda x}\cos \mu x,\; x e^{\lambda x}\cos \mu x,\; \ldots,\; x^{s-1} e^{\lambda x}\cos \mu x,$$
$$e^{\lambda x}\sin \mu x,\; x e^{\lambda x}\sin \mu x,\; \ldots,\; x^{s-1} e^{\lambda x}\sin \mu x.$$

The results stated in III are the main difference between an nth order linear ode and a second order linear ode.

Examples 8:
1. Find the general solution of $y^{(4)} + 2y'' + y = 0$.
2. Find the general solution of $y^{(4)} + y = 0$.

Also look at higher order problems from Sections 2.1 to 2.3 in our textbook.

V. The method of undetermined coefficients:

A particular solution of the non-homogeneous nth order linear ode

$$a_n y^{(n)} + a_{n-1} y^{(n-1)} + \cdots + a_0 y = f(x) \tag{13}$$

can be obtained by the method of undetermined coefficients if $f(x)$ is of an appropriate form (exponential, polynomial, sine, cosine, or sums, differences and products of these).

The main difference in using this method for higher order equations comes from the fact that the roots of the characteristic polynomial for the homogeneous equation may have multiplicity greater than 2. Therefore, terms proposed in the guess for a solution of (13) may need to be multiplied by $x^s$ with $s$ greater than 2, in order to make them different from the terms in the solution of the homogeneous equation. As in the 2nd order case, we multiply each such term by $x^s$, where $s$ is the multiplicity of the root generating that particular term.

Examples 9:
1. Find the general solution of $y''' - 3y'' + 3y' - y = 4e^{x}$. Hint: Start by solving the homogeneous equation, then decide on an appropriate form for the guess and find the corresponding coefficients.
2. $y^{(4)} + 2y'' + y = 3\sin x - 5\cos x$
3. $y''' - 4y' = x + 3\cos x + e^{-2x}$

Practice also the higher order problems among Problems 1 to 41 at the end of Section 2.5 in our textbook / page 156.

VI. The method of variation of parameters:

Consider the general non-homogeneous equation:

$$y^{(n)} + p_{n-1}(x)\,y^{(n-1)} + \cdots + p_0(x)\,y = f(x). \tag{14}$$
The method of variation of parameters for finding a particular solution of (14) is a straightforward generalization of the method for second order equations. As before, we must first solve the homogeneous equation (2). This may be difficult unless the coefficients $p_{n-1}(x), \ldots, p_0(x)$ are constants. Even so, the method of variation of parameters is more general than the method of undetermined coefficients, as it solves (14) for any continuous right hand side $f(x)$.

We now present the main steps for finding a particular solution of (14) by variation of parameters. Let $y_1(x), y_2(x), \ldots, y_n(x)$ be n linearly independent solutions of the corresponding homogeneous equation

$$y^{(n)} + p_{n-1}(x)\,y^{(n-1)} + \cdots + p_0(x)\,y = 0, \tag{15}$$

and assume that a particular solution of (14) is of the form

$$y = u_1(x)\,y_1(x) + \cdots + u_n(x)\,y_n(x). \tag{16}$$

Since we have n functions $u_1(x), \ldots, u_n(x)$ to determine, we need to specify n conditions for them. Let us start by calculating successive derivatives of $y(x)$. We have:

$$y'(x) = \left(u_1' y_1 + u_2' y_2 + \cdots + u_n' y_n\right) + \left(u_1 y_1' + u_2 y_2' + \cdots + u_n y_n'\right). \tag{17}$$

As before, in order to simplify calculations, we impose

$$u_1' y_1 + u_2' y_2 + \cdots + u_n' y_n = 0, \tag{18}$$

so that $y'(x)$ reduces to:

$$y'(x) = u_1 y_1' + \cdots + u_n y_n'. \tag{19}$$

Continuing, we find:

$$y''(x) = \left(u_1' y_1' + \cdots + u_n' y_n'\right) + \left(u_1 y_1'' + \cdots + u_n y_n''\right). \tag{20}$$

To simplify, we require

$$u_1' y_1' + \cdots + u_n' y_n' = 0, \tag{21}$$

so that $y''(x)$ becomes:

$$y''(x) = u_1 y_1'' + \cdots + u_n y_n''. \tag{22}$$

We continue in this manner to find

$$y^{(n-1)}(x) = u_1 y_1^{(n-1)} + \cdots + u_n y_n^{(n-1)} \tag{23}$$

if

$$u_1' y_1^{(n-2)} + \cdots + u_n' y_n^{(n-2)} = 0. \tag{24}$$

Note that the conditions (18), (21), ..., (24) already give n-1 conditions for the n functions $u_1, \ldots, u_n$. From (23) we find

$$y^{(n)}(x) = \left(u_1 y_1^{(n)} + \cdots + u_n y_n^{(n)}\right) + \left(u_1' y_1^{(n-1)} + \cdots + u_n' y_n^{(n-1)}\right). \tag{25}$$
Substituting (16), (19), (22), ..., (25) into (14) we find:

$$\left(u_1 y_1^{(n)} + \cdots + u_n y_n^{(n)}\right) + \left(u_1' y_1^{(n-1)} + \cdots + u_n' y_n^{(n-1)}\right) + p_{n-1}\left(u_1 y_1^{(n-1)} + \cdots + u_n y_n^{(n-1)}\right) + \cdots + p_0\left(u_1 y_1 + \cdots + u_n y_n\right) = f(x). \tag{26}$$

Using that $y_1, \ldots, y_n$ are solutions of (15), (26) reduces to:

$$u_1' y_1^{(n-1)} + \cdots + u_n' y_n^{(n-1)} = f(x). \tag{27}$$

In conclusion, the equations (18), (21), ..., (24) and (27) form a system of n equations for the n unknown functions $u_1', \ldots, u_n'$ (compare this system with the 2x2 system found for a second order linear ode). Think of this system as a linear system in matrix form:

$$A u' = b, \quad \text{where } A = \begin{pmatrix} y_1 & y_2 & \cdots & y_n \\ y_1' & y_2' & \cdots & y_n' \\ \vdots & \vdots & & \vdots \\ y_1^{(n-1)} & y_2^{(n-1)} & \cdots & y_n^{(n-1)} \end{pmatrix}, \quad u' = \begin{pmatrix} u_1' \\ u_2' \\ \vdots \\ u_n' \end{pmatrix} \quad \text{and} \quad b = \begin{pmatrix} 0 \\ \vdots \\ 0 \\ f(x) \end{pmatrix}. \tag{28}$$

We solve (28) by Cramer's rule, to find:

$$u_1' = \frac{f(x)\,W_1(x)}{W(x)}, \tag{29}$$

where $W(x) = \det A \neq 0$ is the Wronskian of $y_1(x), \ldots, y_n(x)$ and $W_1(x) = \det A_1$, $A_1$ being the matrix $A$ with column 1 replaced by the vector $c = (0, \ldots, 0, 1)^T$.

In general:

$$u_i' = \frac{f(x)\,W_i(x)}{W(x)}, \quad i = 1, 2, \ldots, n, \tag{30}$$

where $W(x)$ is the Wronskian of $y_1(x), \ldots, y_n(x)$ and $W_i(x) = \det A_i$, $A_i$ being the matrix $A$ with column $i$ replaced by the vector $c$. Explicitly:

$$u_i(x) = \int \frac{f(x)\,W_i(x)}{W(x)}\,dx = \int_{x_0}^{x} \frac{f(s)\,W_i(s)}{W(s)}\,ds, \tag{31}$$

so that

$$y = \sum_{i=1}^{n} u_i(x)\,y_i(x), \tag{32}$$

with $u_i(x)$ given by (31).

Note that formulas (31)-(32) generalize the case n = 2 nicely. If needed, $W(x)$ can be found from a generalization of Abel's formula.

Example: 1/239 in Boyce and DiPrima. Also: end of Section 4.4 in Boyce and DiPrima.
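Formulas (30)-(32) can be checked numerically on a small case. The sketch below is an illustration under stated assumptions, not a general solver: it uses the equation $y''' = 6$ (so $f(x) = 6$, chosen here only for the check) with the homogeneous solutions $1, x, x^2$, evaluates the determinants $W$ and $W_i$ directly, and approximates the integral in (31) by Simpson's rule with $x_0 = 0$. All function names are hypothetical.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (fine for small n)."""
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def basis_matrix(x):
    """Matrix A of (28) for y1 = 1, y2 = x, y3 = x^2 (solutions of y''' = 0)."""
    return [[1.0, x, x * x],
            [0.0, 1.0, 2.0 * x],
            [0.0, 0.0, 2.0]]

def f(x):
    """Right hand side of the assumed test equation y''' = 6."""
    return 6.0

def u_prime(i, x):
    """Formula (30): u_i' = f W_i / W, with column i of A replaced by c = (0, ..., 0, 1)."""
    A = basis_matrix(x)
    n = len(A)
    c = [0.0] * (n - 1) + [1.0]
    A_i = [row[:i] + [c[k]] + row[i + 1:] for k, row in enumerate(A)]
    return f(x) * det(A_i) / det(A)

def simpson(g, a, b, steps=200):
    """Composite Simpson rule on [a, b]; steps must be even."""
    h = (b - a) / steps
    total = g(a) + g(b)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3

def particular_solution(x, x0=0.0):
    """Formulas (31)-(32): y = sum of u_i(x) y_i(x), with u_i integrated from x0."""
    y_values = basis_matrix(x)[0]
    return sum(simpson(lambda s, i=i: u_prime(i, s), x0, x) * y_values[i]
               for i in range(len(y_values)))

# With x0 = 0 the result should agree with x^3, which satisfies y''' = 6.
print(particular_solution(2.0))
```

Taking the lower limit $x_0$ in (31) makes every $u_i(x_0)$ vanish, so this particular solution and its first n-1 derivatives are zero at $x_0$; that is why the comparison is with $x^3$ rather than with $x^3$ plus a homogeneous solution.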