Simple Linear Regression


Handout #5: Simple Linear Regression

Section 5.1 : Understanding the Analysis of Variance Output

Example 5.1: Consider the Home Prices dataset from the course website. This dataset includes a variety

of variables related to the prices of homes in the surrounding area.

Response Variable: CurrentPrice

Predictor Variable: SquareFeet

Assume the following structure for the mean and variance functions:

o 𝐸(𝐶𝑢𝑟𝑟𝑒𝑛𝑡𝑃𝑟𝑖𝑐𝑒|𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡) = 𝛽0 + 𝛽1 ∗ 𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡

o 𝑉𝑎𝑟(𝐶𝑢𝑟𝑟𝑒𝑛𝑡𝑃𝑟𝑖𝑐𝑒|𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡) = 𝜎²

Comments:

The mean function above is called a simple linear regression model.

o Simple implies a single predictor, i.e. SquareFeet.

o Linear implies linear in the parameters, not in the form in which the predictor variables are or are not being used. For example, the following mean function is linear in the parameters, but is not a straight-line model.

𝐸(𝐶𝑢𝑟𝑟𝑒𝑛𝑡𝑃𝑟𝑖𝑐𝑒|𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡) = 𝛽0 + 𝛽1 ∗ 𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡 + 𝛽2 ∗ 𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡²

[Math Stuff]: A linear mean function implies that the partial derivative with respect to any parameter is free of all parameters, including that parameter itself.

The above variance function is simply a constant, generically labeled 𝜎².
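As a small illustration of "linear in the parameters", the following R sketch fits the quadratic mean function with lm(). It uses simulated data rather than the Home Prices dataset; the sample size, coefficients, and noise level are assumptions made only for the example.

```r
# Simulated (hypothetical) data; these are NOT the course's Home Prices data
set.seed(1)
SquareFeet <- runif(100, 800, 3500)
CurrentPrice <- 20000 + 40 * SquareFeet + 0.005 * SquareFeet^2 +
  rnorm(100, sd = 20000)

# The mean function is quadratic in SquareFeet but linear in
# beta0, beta1, beta2, so ordinary least squares via lm() still applies
quad_fit <- lm(CurrentPrice ~ SquareFeet + I(SquareFeet^2))

# Estimates of beta0, beta1, beta2
coef(quad_fit)
```

The I() wrapper tells the formula interface to treat SquareFeet^2 as arithmetic, so the squared term enters the model as one more column.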


Fitting a simple linear regression model in JMP: Select Analyze > Fit Model.

In the Fit Model window, we need to identify the response variable and the predictor variable(s).

Comment: The Fit Model window has many options. These options will be ignored for now. Some of them are necessary when fitting more complicated mean and/or variance functions.


A partial listing of the output given by JMP’s Fit Model procedure is provided here.


Quantity | Value / Description

Sum of Squares for C. Total |

Sum of Squares for Error |

Sum of Squares for Model |

Degrees of Freedom (DF) for C. Total |

DF for Error |

DF for Model |

Mean Square for Error | Note: This is our best estimate for 𝜎², which is the variance in the conditional distribution of CurrentPrice | SquareFeet, i.e. the variability that remains in CurrentPrice that cannot be explained by SquareFeet.

Mean Square for Model |

Mean Square for C. Total (This is the variance in the marginal distribution.) |

Root Mean Square for C. Total (This is the standard deviation in the marginal distribution.) |

Root Mean Square Error | Note: This is our best estimate for √𝜎² = 𝜎, the standard deviation in the conditional distribution of CurrentPrice | SquareFeet.


Quantity | Value / Description

R Square |

Mean of Response |

Observations |

Estimate for Intercept |

Estimate for SquareFeet (i.e. slope) |

Std Error for Intercept |

Std Error for SquareFeet |
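The quantities in the tables above can be computed directly from a fitted model. The following R sketch does so on simulated data (the data-generating values are assumptions, not the Home Prices dataset), using the usual decomposition SS C. Total = SS Model + SS Error.

```r
# Simulated (hypothetical) data standing in for the Home Prices dataset
set.seed(2)
n <- 207
SquareFeet <- runif(n, 800, 3500)
CurrentPrice <- 30000 + 45 * SquareFeet + rnorm(n, sd = 40000)
fit <- lm(CurrentPrice ~ SquareFeet)

# Sums of squares
ss_total <- sum((CurrentPrice - mean(CurrentPrice))^2)  # C. Total
ss_error <- sum(residuals(fit)^2)                       # Error
ss_model <- ss_total - ss_error                         # Model

# Degrees of freedom for a model with one predictor
df_total <- n - 1
df_error <- n - 2
df_model <- 1

# Mean squares
ms_error <- ss_error / df_error  # estimate of sigma^2 (conditional variance)
ms_model <- ss_model / df_model
ms_total <- ss_total / df_total  # variance in the marginal distribution

rmse <- sqrt(ms_error)           # Root Mean Square Error
r_square <- ss_model / ss_total  # R Square
```

These agree with summary(fit)$sigma and summary(fit)$r.squared, which is a convenient check when verifying software output by hand.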


Section 5.2: Understanding Standard Error of Estimated Parameters

A statistical modeling approach has several advantages compared to other approaches to modeling this type of data. These data represent a pseudo-random sample of home prices, so a different sample of homes will produce a slightly different regression line. This raises the question: to what degree will the regression line change from sample to sample? In particular, to what degree will the y-intercept and slope change over repeated sampling?

Section 5.2.1: Standard Error for Estimated Sample Mean

First, let’s consider an easier case: the standard error for the sample mean in the marginal distribution of CurrentPrice.

[Math Stuff] The mathematical formula for the standard error of the sample mean, labeled as Std Err

Mean above, is given by the following.

𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟 𝑀𝑒𝑎𝑛 = 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐷𝑒𝑣𝑖𝑎𝑡𝑖𝑜𝑛 / √𝑛

Verify the calculation for the above Standard Error Mean value of $4,298.83.
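One way to check a calculation like this in R is sketched below. The helper name std_error_mean and the simulated prices are assumptions for illustration; the only handout values used are the standard error $4,298.83 and the sample size n = 207 reported later in this handout.

```r
# Standard error of the sample mean: sd(y) / sqrt(n)
std_error_mean <- function(y) sd(y) / sqrt(length(y))

# Hypothetical prices (NOT the course dataset), just to exercise the function
set.seed(1)
prices <- rnorm(207, mean = 135000, sd = 60000)
se_example <- std_error_mean(prices)

# Working backwards from the handout: the standard deviation implied by
# a standard error of 4298.83 with n = 207 homes
implied_sd <- 4298.83 * sqrt(207)
```

Comparing implied_sd with the Std Dev reported in the JMP summary is one way to complete the verification.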


Consider next a conceptual understanding of the standard error for the mean.

The following graphic was presented in Handout #1. The top plot contains the original data. The

standard deviation is used to measure the variation in the individual data values. The bottom plot

displays the sample mean over repeated samples. The standard error is used to measure the variation

in this plot, i.e. the variation in the sample means over repeated samples.

[Figure: top plot, Individual Data Values (variation measured by the Standard Deviation); bottom plot, Sample Means over repeated samples (variation measured by the Standard Error).]

The advent of simulation software packages, e.g. Tinkerplots, allows us to easily understand the

variation over repeated samples. In the following depiction, a “hat” has been created and contains all

the individual data values, i.e. CurrentPrices of homes. Repeated samples can be taken by simply

clicking Run. The average from that repeated sample is displayed on the graph provided.

The collection of sample means from 1000 repeated samples.


A similar depiction can be obtained in R.

R Code: BootMean()

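############################################
# R function to bootstrap a simple mean
############################################
BootMean=function(y,b=100,delay=0.2){
  # Storage for the b bootstrap sample means
  output.vec=rep(0,b)
  # Random horizontal positions spread the points out for display only
  xstar=runif(length(y),0.25,1)
  plot(xstar,y,axes=FALSE,ylab="",xlab="",xlim=c(0,1),type="n")
  axis(2)
  # Show the original sample and mark its mean on the left axis
  points(xstar,y)
  rug(mean(y),ticksize=0.25,side=2)
  Sys.sleep(delay+2)
  # Erase the original points by overplotting them in white
  points(xstar,y,col="white")
  for(i in 1:b){
    xstar=runif(length(y),0.25,1)
    # Resample the data with replacement and record the mean
    ystar=sample(y,size=length(y),replace=TRUE)
    output.vec[i]=mean(ystar)
    points(xstar,ystar)
    rug(output.vec[i],ticksize=0.25,side=2)
    Sys.sleep(delay)
    points(xstar,ystar,col="white")
  }
  # Return the b bootstrap means
  return(output.vec)
}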

[Figure panels: Original Sample; After 20 repeated samples; After lots of repeated samples; etc…]


Comment: The standard error is simply the standard deviation in the sample means over repeated

sampling. For well-behaved and established quantities, like the sample mean, a mathematical formula

exists for computing the standard error.

This standard error is used in the construction of the theory-based confidence interval. The standard

error quantity does not necessarily need to be computed when the sampling distribution for the sample

mean is obtained via simulation.
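The comparison between the simulated and formula-based standard error can be sketched without any plotting. The R code below is a minimal, non-graphical version of the resampling idea; the data are simulated stand-ins (an assumption), and 2000 resamples is an arbitrary choice.

```r
# Hypothetical data standing in for CurrentPrice
set.seed(3)
y <- rnorm(207, mean = 135000, sd = 60000)

# Means of 2000 resamples drawn with replacement from y
boot_means <- replicate(2000, mean(sample(y, replace = TRUE)))

# Standard error two ways: simulation versus formula
se_simulated <- sd(boot_means)
se_formula <- sd(y) / sqrt(length(y))

# Simulation-based 95% interval: the middle 95% of the resampled means
ci_percentile <- quantile(boot_means, c(0.025, 0.975))
```

The two standard errors should be close, and the percentile interval never requires computing a standard error at all.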

Visual depictions of the 95% confidence interval:

o A 95% confidence interval contains the middle 95% of the sample means over repeated samples.

o The normal-based, i.e. theoretical, approach is a very reasonable approximation.


Formula for the 95% confidence interval for the mean:

o 𝐿𝑜𝑤𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝑆𝑎𝑚𝑝𝑙𝑒 𝑀𝑒𝑎𝑛 − 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟

o 𝑈𝑝𝑝𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝑆𝑎𝑚𝑝𝑙𝑒 𝑀𝑒𝑎𝑛 + 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟

where c is the 97.5th percentile from a t-distribution with degrees of freedom = n − 1.

t-distribution with df = n − 1 = 207 − 1 = 206

In ARC: Select Arc > Calculate quantile…

In Excel:

The output containing the 95% Confidence Interval from JMP.

Verify the calculations for the 95% confidence interval given by JMP.

o 𝐿𝑜𝑤𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝑆𝑎𝑚𝑝𝑙𝑒 𝑀𝑒𝑎𝑛 − 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟

o 𝑈𝑝𝑝𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝑆𝑎𝑚𝑝𝑙𝑒 𝑀𝑒𝑎𝑛 + 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟
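The same percentile and limits can also be obtained in R. This sketch plugs in values quoted in this handout (sample mean $134,894.43, standard error $4,298.83, n = 207); the variable names are illustrative, and the results should be compared against the JMP output rather than taken as a substitute for it.

```r
# Values quoted in the handout
sample_mean <- 134894.43
std_error <- 4298.83
n <- 207

# 97.5th percentile of the t-distribution with n - 1 = 206 df
c_crit <- qt(0.975, df = n - 1)

# 95% confidence limits
lower_limit <- sample_mean - c_crit * std_error
upper_limit <- sample_mean + c_crit * std_error
```

qt() plays the role of the "Calculate quantile" dialog in Arc: it returns the value below which the stated proportion of the t-distribution falls.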

10

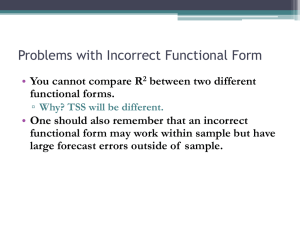

Section 5.2.2: Standard Error for the Slope of the Estimated Mean Function

Now that we have an understanding of the concept of standard error in a simple case, let’s now

consider the estimate of the standard error for the slope of our simple linear regression line. In

particular, we will next investigate the value 3.54 from the output.

Certainly, the estimated mean function will vary from sample to sample; therefore, the slope and y-intercept will necessarily vary from sample to sample. The estimated regression line we obtained is just one of many possible estimated mean functions.

Once again, R can be used to simulate the effect of repeated sampling on the estimated slope of the mean function.

R Code: BootReg()

#######################################################
# Bootstrap Regression
# Note: bootstrapping residuals here
#######################################################
BootReg=function(slr_object,b=100,delay=0){
  # Response and predictor are the first two columns of the model frame
  y=slr_object$model[,1]
  x=slr_object$model[,2]
  resid=slr_object$residuals
  fit=slr_object$fitted.values
  xrange=max(x)-min(x)
  # One row per bootstrap sample: intercept, slope
  output.mat=matrix(0,b,2)
  # Show the original data and fitted line, then erase the points
  plot(x,y,type="n",xlab="SquareFeet",ylab="CurrentPrice")
  points(x,y)
  abline(slr_object)
  Sys.sleep(2+delay)
  points(x,y,col="white")
  for(i in 1:b){
    # Resample residuals with replacement and add them to the fitted values.
    # (Adding them to y instead yields the same coefficients but noisier points.)
    residstar=sample(resid,replace=TRUE)
    ystar=fit+residstar
    lmtemp=lm(ystar~x)
    b0=lmtemp$coefficients[[1]]
    b1=lmtemp$coefficients[[2]]
    points(x,ystar)
    abline(lmtemp,col="grey")
    # Draw a small rise-over-run triangle at a jittered position
    # and label it with the estimated slope
    xjitter1=min(x)+0.67*xrange+runif(1,-0.2*xrange,0.2*xrange)
    xjitter2=xjitter1+0.1*xrange
    segments(xjitter1,b0+xjitter1*b1,xjitter2,b0+xjitter1*b1)
    segments(xjitter2,b0+xjitter1*b1,xjitter2,b0+xjitter2*b1)
    text(xjitter2+0.02*xrange,b0+xjitter1*b1,round(b1,2),cex=0.75)
    Sys.sleep(delay)
    points(x,ystar,col="white")
    output.mat[i,1]=b0
    output.mat[i,2]=b1
  }
  Intercept=output.mat[,1]
  Slope=output.mat[,2]
  return(data.frame(Intercept,Slope))
}

The following graphs show the results from the above R function.

Outcomes from 3 repeated samples

Outcomes from lots of repeated samples


The following histogram contains the slope estimates from 1000 repeated samples.

[Figure: histogram of slope estimates from 1000 repeated samples.]

Task: Give a rough sketch of the 95% confidence interval on this histogram.

Comment: The standard deviation of these slope estimates is 3.56, which is very close to the normal-based, i.e. theoretical, standard error of 3.54 provided by JMP.
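A comparison of this kind can be reproduced without the animation. The R sketch below is a simplified residual bootstrap on simulated data (the data-generating values and the 1000 resamples are assumptions), set against the theory-based standard error reported by summary().

```r
# Simulated (hypothetical) data; not the Home Prices dataset
set.seed(4)
n <- 207
x <- runif(n, 800, 3500)
y <- 30000 + 45 * x + rnorm(n, sd = 40000)
fit <- lm(y ~ x)

# Residual bootstrap: fitted values plus residuals resampled with replacement
boot_slopes <- replicate(1000, {
  ystar <- fitted(fit) + sample(residuals(fit), replace = TRUE)
  coef(lm(ystar ~ x))[[2]]
})

# Standard error of the slope: simulation versus theory
se_boot <- sd(boot_slopes)
se_theory <- summary(fit)$coefficients["x", "Std. Error"]
```

As with the sample mean, the standard deviation of the resampled slopes should land close to the formula-based standard error.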

Similar to what was done for the sample mean, the 95% normal-based confidence interval for the slope parameter of the mean function, i.e. 𝛽1, is given by

o 𝐿𝑜𝑤𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑆𝑙𝑜𝑝𝑒 − 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟

o 𝑈𝑝𝑝𝑒𝑟 𝐿𝑖𝑚𝑖𝑡 = 𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑆𝑙𝑜𝑝𝑒 + 𝑐 ∗ 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟

where c is the 97.5th percentile from a t-distribution with n − 2 degrees of freedom. Note: The df is n − 2 here because n − 2 was used for the denominator of the variance estimate in the conditional distribution.

t-distribution with df = 207 − 2 = 205

Task: Verify the calculations for the 95% confidence

interval for the slope here.

Lower Limit:

Upper Limit:

In Excel:

Give an interpretation of the above confidence interval in layman’s terms.

Note: The 95% confidence interval for the y-intercept, i.e. 𝛽0 , is computed in the same manner.
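The interval formula applies to both coefficients at once, which the following R sketch illustrates. The first part uses simulated data (an assumption) to show that the hand formula matches R's built-in confint(); the last line applies the same formula to the slope estimate 46.66 and standard error 3.54 quoted in this handout.

```r
# Simulated (hypothetical) data; not the Home Prices dataset
set.seed(5)
n <- 207
x <- runif(n, 800, 3500)
y <- 30000 + 45 * x + rnorm(n, sd = 40000)
fit <- lm(y ~ x)

# Estimates and standard errors for the intercept and slope
est <- coef(summary(fit))[, "Estimate"]
se <- coef(summary(fit))[, "Std. Error"]

# 97.5th percentile of the t-distribution with n - 2 df
c_crit <- qt(0.975, df = n - 2)

# Hand-computed limits versus the built-in interval
manual_ci <- cbind(lower = est - c_crit * se, upper = est + c_crit * se)
builtin_ci <- confint(fit, level = 0.95)

# Same formula applied to the handout's slope and standard error (df = 205)
slope_ci <- 46.66 + c(-1, 1) * qt(0.975, df = 205) * 3.54
```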


Section 5.3: Test of Significance

In this section, we will consider the significance test for the slope of the mean function that is provided by most software packages. The quantities associated with this test, i.e. the t Ratio and Prob > |t|, are shown here.

Section 5.3.1: Test of Significance for Estimated Sample Mean

Again, we will first consider an easier case of a significance test for a (population) mean. For simplicity,

suppose the following research question is of interest.

Research Question: Is there enough statistical evidence to say that the average home listing price is different from $150,000?

The statistical hypotheses associated with this research question are given here.

𝐻𝑂 : 𝐴𝑣𝑒𝑟𝑎𝑔𝑒 𝐻𝑜𝑚𝑒 𝐿𝑖𝑠𝑡𝑖𝑛𝑔 𝑃𝑟𝑖𝑐𝑒 = $150,000

𝐻𝐴 : 𝐴𝑣𝑒𝑟𝑎𝑔𝑒 𝐻𝑜𝑚𝑒 𝐿𝑖𝑠𝑡𝑖𝑛𝑔 𝑃𝑟𝑖𝑐𝑒 ≠ $150,000

The average home listing price for the 207 homes in our dataset is about $135,000. The purpose of a significance test is to determine whether or not $135,000 is a likely sample mean if the average home listing price of all homes is indeed $150,000.

Akin to what was done when investigating the standard error, certain software packages can easily simulate the situation of taking repeated samples of size 207 from a population whose average home listing price is set to $150,000. In the following, Tinkerplots has been used to set up such a simulation.


For each trial of the simulation, the estimated sample mean is recorded. The outcomes from lots and

lots of trials are provided here.

Concept of p-value

𝐻𝑂 : 𝐴𝑣𝑒𝑟𝑎𝑔𝑒 𝐻𝑜𝑚𝑒 𝐿𝑖𝑠𝑡𝑖𝑛𝑔 𝑃𝑟𝑖𝑐𝑒 = $150,000

𝐻𝐴 : 𝐴𝑣𝑒𝑟𝑎𝑔𝑒 𝐻𝑜𝑚𝑒 𝐿𝑖𝑠𝑡𝑖𝑛𝑔 𝑃𝑟𝑖𝑐𝑒 ≠ $150,000

P-value = Proportion of the dots that are as extreme or more extreme than the observed sample mean, under the assumption that the average home listing price is $150,000. That is, we assume the null hypothesis is true and compute the probability of observing a sample mean as extreme or more extreme than the one obtained from the actual data.

As extreme or more extreme on left-end

𝑃𝑟𝑜𝑝𝑜𝑟𝑡𝑖𝑜𝑛 ≤ $134,894.43 =

As extreme or more extreme on right-end

𝑃𝑟𝑜𝑝𝑜𝑟𝑡𝑖𝑜𝑛 ≥ $165,105.57 =

Note: $150,000 – $134,894.43 = $15,105.57, so $150,000 + $15,105.57 = $165,105.57 is the equally extreme value on the right side.

P-value = ________ + ________
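The two proportions can be simulated in R along the following lines. This is a sketch under stated assumptions: the prices are simulated rather than taken from the course dataset, and the null population is created by shifting the sample so that its mean is exactly $150,000, which is one common way to mimic the Tinkerplots setup.

```r
# Hypothetical sample standing in for the 207 listing prices
set.seed(6)
prices <- rnorm(207, mean = 135000, sd = 60000)
obs_mean <- mean(prices)
mu0 <- 150000

# Shift the sample so the "population" mean equals the null value
null_pop <- prices - obs_mean + mu0

# Sample means from 5000 repeated samples of size 207 under the null
sim_means <- replicate(5000, mean(sample(null_pop, replace = TRUE)))

# As extreme or more extreme on each end
distance <- abs(obs_mean - mu0)
p_left <- mean(sim_means <= mu0 - distance)
p_right <- mean(sim_means >= mu0 + distance)
p_value <- p_left + p_right
```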


A test of significance for the mean (in the marginal distribution) can easily be obtained in JMP by

selecting Test Mean from the drop down menu after obtaining the standard summaries from Analyze >

Distribution. In the Test Mean window, simply enter the value from the null hypothesis. The following

output is returned.

The normal-based, i.e. theoretical, formula for the Test Statistic is provided next.

𝑇𝑒𝑠𝑡 𝑆𝑡𝑎𝑡𝑖𝑠𝑡𝑖𝑐 = (𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑀𝑒𝑎𝑛 − 𝜇𝑜) / 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟 𝑜𝑓 𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑆𝑎𝑚𝑝𝑙𝑒 𝑀𝑒𝑎𝑛

where 𝜇𝑜 is the value specified by the null hypothesis, here $150,000.

Task: Verify the calculations for the above Test Statistic provided by JMP.

The p-value associated with this quantity is obtained from the t-distribution with df = n − 1. The p-value can be computed directly in a variety of software packages.
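In R, for instance, the test statistic and its two-sided p-value could be computed as sketched here. The inputs are values quoted in this handout (sample mean $134,894.43, standard error $4,298.83, n = 207); the variable names are illustrative.

```r
# Values quoted in the handout
sample_mean <- 134894.43
mu0 <- 150000
std_error <- 4298.83
n <- 207

# Test statistic: distance from the null value in standard-error units
t_stat <- (sample_mean - mu0) / std_error

# Two-sided p-value from the t-distribution with n - 1 = 206 df
p_value <- 2 * pt(-abs(t_stat), df = n - 1)
```

pt() returns the area to the left of its argument, so doubling the lower-tail area at -|t| counts both extremes.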

In Arc, select Arc > Calculate probability…

t-distribution with df = 207 – 1 = 206

In Excel:

Provide a final conclusion, using layman’s language, for this hypothesis test.


Section 5.3.2: Test of Significance for Slope of the Mean Function

The regression output provided by most software packages includes a significance test for the slope and

y-intercept. The JMP output for the significance test of the slope is shown here.

Recall that the assumed mean function for the relationship between the response, i.e. CurrentPrice, and the predictor variable, i.e. SquareFeet, was given by

𝐸(𝐶𝑢𝑟𝑟𝑒𝑛𝑡𝑃𝑟𝑖𝑐𝑒|𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡) = 𝛽0 + 𝛽1 ∗ 𝑆𝑞𝑢𝑎𝑟𝑒𝐹𝑒𝑒𝑡

The significance test of initial importance is to ensure that the response is either positively or negatively influenced (linearly) by the predictor variable under consideration. That is, is the slope statistically different from zero?

𝐻𝑂 : 𝛽1 = 0

𝐻𝐴 : 𝛽1 ≠ 0

If 𝛽1 = 0, the response does not change as a

linear function of the predictor variable.

If 𝛽1 = 0, then SquareFeet is not linearly related to

CurrentPrice. That is, there is no advantage to

considering the conditional distribution CurrentPrice

| SquareFeet.


Conceptually, a test of significance for the slope requires us to consider the likelihood of obtaining the

observed slope, 𝛽̂1 = 46.66, under the null hypothesis, i.e., the true slope is zero.

𝐻𝑂 : 𝛽1 = 0

𝐻𝐴 : 𝛽1 ≠ 0

If 𝛽1 = 0, then the observed pairing for each observation, i.e., (CurrentPrice, SquareFeet) is no more or

less likely than any other pairing. That is, we can essentially scramble the predictor variable to create

new pseudo pairings. The mean function is then fit using the scrambled pairings of the response and

predictor variable.

[Figure panels: Original Data; Scrambling the predictor variable; New pseudo pairings for which the mean function is fit]

The process described above can be repeated many times. The estimated slopes from 100 such repetitions are shown below.

Variation in estimated slopes under the

assumption 𝛽1 = 0

The observed slope is an outlier against estimated

slopes under the 𝛽1 = 0 assumption
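The scrambling procedure can be sketched in R as a permutation test. The data below are simulated (an assumption, not the Home Prices dataset), and 1000 scrambles is an arbitrary choice.

```r
# Simulated (hypothetical) data with a genuine linear relationship
set.seed(7)
n <- 207
SquareFeet <- runif(n, 800, 3500)
CurrentPrice <- 30000 + 45 * SquareFeet + rnorm(n, sd = 40000)

# Slope from the original pairings
observed_slope <- coef(lm(CurrentPrice ~ SquareFeet))[[2]]

# Scramble the predictor to create pseudo pairings, then refit
null_slopes <- replicate(1000, {
  scrambled <- sample(SquareFeet)
  coef(lm(CurrentPrice ~ scrambled))[[2]]
})

# Proportion of scrambled slopes at least as extreme as the observed slope
p_value <- mean(abs(null_slopes) >= abs(observed_slope))
```

Because scrambling breaks any link between the two variables, null_slopes centers near zero; an observed slope far outside that spread is evidence against the null hypothesis.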


The normal-based, i.e. theoretical, formula for the test of significance for the slope is given by

𝑡 𝑅𝑎𝑡𝑖𝑜 = (𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑆𝑙𝑜𝑝𝑒 − 𝛽1) / 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟 𝑜𝑓 𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑆𝑙𝑜𝑝𝑒

where 𝛽1 is the slope value specified by the null hypothesis, here 0.

The p-value, our measure of extremeness of the observed slope under the null hypothesis, is obtained

from a t-distribution with df = n-2.

t-distribution with df = 207 – 2 = 205

Getting p-value in ARC

Verify the calculation of the test statistic provided by JMP.
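A quick way to carry out this verification in R is sketched below, using the slope estimate 46.66 and standard error 3.54 quoted in this handout; the result should be compared with the t Ratio and Prob > |t| in the JMP output.

```r
# Values quoted in the handout
estimated_slope <- 46.66
std_error_slope <- 3.54
n <- 207

# t Ratio under the null hypothesis beta1 = 0
t_ratio <- (estimated_slope - 0) / std_error_slope

# Two-sided p-value from the t-distribution with n - 2 = 205 df
p_value <- 2 * pt(-abs(t_ratio), df = n - 2)
```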

The normal-based significance test for the y-intercept proceeds similarly. The hypotheses and test statistic are given here.

𝐻𝑂 : 𝛽0 = 0

𝐻𝐴 : 𝛽0 ≠ 0

𝑡 𝑅𝑎𝑡𝑖𝑜 = (𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑦 𝑖𝑛𝑡𝑒𝑟𝑐𝑒𝑝𝑡 − 𝛽0) / 𝑆𝑡𝑎𝑛𝑑𝑎𝑟𝑑 𝐸𝑟𝑟𝑜𝑟 𝑜𝑓 𝐸𝑠𝑡𝑖𝑚𝑎𝑡𝑒𝑑 𝑦 𝑖𝑛𝑡𝑒𝑟𝑐𝑒𝑝𝑡

where 𝛽0 is the intercept value specified by the null hypothesis, here 0.


Section 5.4: A Second Example

Example 5.2: Consider the following study that was completed by Niruupama Hikkaduwe Vithanage as part of her capstone requirement. The goal of this study was to investigate the amount of agreement between actual intake values and daily recommended intake values (DRI) for Energy, Vitamin A, Vitamin C, Calcium, and Iron. In this example, we will consider the effect of body mass index (BMI), a generic measure of one’s body fat level, on the level of agreement between Energy and EnergyDRI.

First, simply consider the level of agreement between Actual Energy Intake vs. one’s daily recommended

intake for Energy without regard to one’s body mass index.

Next, consider the relationship between Energy – EnergyDRI vs. Body Mass Index (BMI).


Setup of Simple Linear Regression

Response Variable: Energy Difference = Energy - EnergyDRI

Predictor Variable: BMI

Mean and variance functions:

o 𝐸(𝐸𝑛𝑒𝑟𝑔𝑦 𝐷𝑖𝑓𝑓𝑒𝑟𝑒𝑛𝑐𝑒|𝐵𝑀𝐼) = 𝛽0 + 𝛽1 ∗ 𝐵𝑀𝐼

o 𝑉𝑎𝑟(𝐸𝑛𝑒𝑟𝑔𝑦 𝐷𝑖𝑓𝑓𝑒𝑟𝑒𝑛𝑐𝑒|𝐵𝑀𝐼) = 𝜎²

The standard regression output from our analysis is shown here.

Questions:

1. What is the equation for the estimated mean function?

2. The R² value is fairly small here. What does this imply about the relationship between Energy Difference and BMI? Discuss.

3. What is the estimated slope of the mean function, i.e., 𝛽̂1 ? Give a practical interpretation of this value.

4. What is the estimated y-intercept of the mean function, i.e., 𝛽̂0 ? Does a practical interpretation of this value exist? Why or why not?


5. Consider the mean function and a significance test for the slope for this example.

𝐸(𝐸𝑛𝑒𝑟𝑔𝑦 𝐷𝑖𝑓𝑓𝑒𝑟𝑒𝑛𝑐𝑒|𝐵𝑀𝐼) = 𝛽0 + 𝛽1 ∗ 𝐵𝑀𝐼

𝐻𝑂 : 𝛽1 = 0

𝐻𝐴 : 𝛽1 ≠ 0

The significance test output as provided by JMP.

a. Can we be assured that a relationship exists between Energy Difference and BMI? Explain.

b. What is the practical implication of this significance test? That is, explain the outcome of this test using only layman’s language.

The observed slope is not much of an outlier against repeated samples under 𝐻𝑂 : 𝛽1 = 0, so the p-value will not be very small.

Large amount of variation present in estimated slopes over repeated samples, i.e. large standard error.
