
Supplemental Material for

The Use of Statistics in the High School Chemistry Laboratory

By: Paul S. Matsumoto¹ and Chris Kaegi²

Galileo Academy of Science and Technology; Department of Science¹ or Mathematics²; San Francisco, CA 94109

Given that websites (1), graphing calculators, and Microsoft Excel can perform the various statistical calculations, the reader should strive to gain a conceptual understanding of statistics rather than the ability to do the calculations by hand. Probability is the basis of statistics; both topics will be presented using calculus (2) or algebra (3, 4).

The purpose of this supplement is to provide the reader with a background in statistics. In addition, selected experimental data from my Honors, Advanced Placement, and regular Chemistry classes will be analyzed using various statistical tests.

The presentation of statistics in this supplement will be somewhat mathematically rigorous. The rationale for this level of rigor is analogous to the rationale that a high school Chemistry teacher should have taken more than a first-year college Chemistry course: in both situations, a greater depth of understanding is more likely to produce a good lesson for the students.


Random variables (2) may be either discrete (e.g., the number of heads in 100 flips of a coin) or continuous (e.g., a person’s mass). The probability function describes a discrete random variable and uses algebra, while the probability density function (pdf) describes a continuous random variable and uses calculus. The probability function gives the probability of each specific value of the random variable; the pdf gives a probability density, whose integral over an interval gives the probability that the random variable falls within that interval.

Mean, standard deviation, and standard error of the mean. In an introductory statistics laboratory exercise, students roll a die many times, record the value on the face of the die, and then analyze their results. The value on the face of a die is a discrete random variable, and its probability function is the uniform probability function (2)

[1] $f(x) = \frac{1}{k}$

where k = number of faces of the die = 6, x = number of dots on the face of the die, and f(x) = 0 for x ≠ 1, 2, 3, 4, 5, 6.

In this laboratory exercise, students determine the mean and standard deviation (SD) of their sample. The mean of the sample is

[2] $\text{sample mean} = \frac{\sum_{i=1}^{n} x_i}{n}$

while the SD of the sample is

[3] $\text{sample SD} = \sqrt{\frac{\sum_{i=1}^{n} (\text{mean} - x_i)^2}{n - 1}}$

where n = sample size. The mean of a population containing discrete random variables is

[4] $\text{population mean} = \sum_{x} x\,f(x)$

where, in the case of rolling a die, the theoretical mean is

[5] $\text{mean} = \sum_{x=1}^{6} x\,f(x) = (1 + 2 + 3 + 4 + 5 + 6)\,\frac{1}{6} = 3.5.$


The variance of a population containing discrete random variables is

[6] $\text{population variance} = \sum_{i} (\text{mean} - x_i)^2 f(x_i)$

and

[7] $\text{variance} = \text{SD}^2$ [or $\text{SD} = \sqrt{\text{variance}}$].

In the case of rolling a die, the theoretical SD is

[8] $\text{SD} = \sqrt{\sum_{i=1}^{6} (\text{mean} - x_i)^2 f(x_i)} = \sqrt{\left[(3.5 - 1)^2 + (3.5 - 2)^2 + (3.5 - 3)^2 + (3.5 - 4)^2 + (3.5 - 5)^2 + (3.5 - 6)^2\right]\frac{1}{6}} = 1.71.$
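Equations 4 through 8 can be checked numerically. The short Python sketch below is only an illustration (the function name die_population_stats is hypothetical); it evaluates the population mean and SD of a fair die directly from the uniform probability function of equation 1, using exact fractions so that the mean comes out as exactly 3.5.

```python
import math
from fractions import Fraction

def die_population_stats(k=6):
    """Population mean and SD of a fair k-faced die from its probability function."""
    faces = range(1, k + 1)
    f = Fraction(1, k)                                   # equation 1: f(x) = 1/k
    mean = sum(x * f for x in faces)                     # equation 4
    variance = sum((mean - x) ** 2 * f for x in faces)   # equation 6
    return float(mean), math.sqrt(float(variance))       # equation 7: SD = sqrt(variance)

mean, sd = die_population_stats()
print(f"mean = {mean:.2f}, SD = {sd:.2f}")   # mean = 3.50, SD = 1.71
```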

In addition, the standard error of the mean (SEM) is

[9] $\text{SEM} = \frac{\text{population SD}}{\sqrt{n}}$

where the SEM is a measure of the uncertainty in the value of the mean (2, 3). Equations 2, 3, 7, and 9 are valid for any random variable, including continuous random variables (2, 3). Notice that

[10] $\lim_{n \to \infty} \text{SEM} = 0$

that is, as the sample size increases, the SEM converges to zero. The SEM is reported more often than the SD, since the SEM is smaller than the SD. Nonetheless, the SD is preferred, as it gives a better indication of the variation in the data than the SEM (3).

Figure 1 shows the results of this laboratory exercise from one student group.

Notice that, as the sample size increases, the experimental values of the mean and SD converge to their theoretical values, while the SEM approaches zero [equation 10]. The chi-square “Goodness of fit” test (2, 3, 8) could be used to examine whether the data are described by the uniform probability function.
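The convergence seen in figure 1 can be imitated with a short simulation. This is a sketch, not the students’ procedure: it assumes a fair die simulated with Python’s random module (the seed is arbitrary), and, since the population SD is normally unknown, it uses the sample SD in equation 9 to estimate the SEM. The helper name sample_stats is hypothetical.

```python
import math
import random
import statistics

def sample_stats(values):
    """Sample mean (equation 2), sample SD with n - 1 (equation 3), and SEM (equation 9)."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)   # divides by n - 1, as in equation 3
    sem = sd / math.sqrt(n)         # sample SD used in place of the population SD
    return mean, sd, sem

random.seed(1)  # fixed seed so the run is reproducible
results = {}
for n in (10, 100, 1000, 10000):
    rolls = [random.randint(1, 6) for _ in range(n)]
    results[n] = sample_stats(rolls)
    mean, sd, sem = results[n]
    print(f"n = {n:5d}: mean = {mean:.2f}, SD = {sd:.2f}, SEM = {sem:.3f}")
```

As n grows, the mean and SD settle near 3.5 and 1.71 while the SEM keeps shrinking, as equation 10 predicts.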

[Figure 1 image. Panel A: histogram; x-axis, value on the face of the die (1 to 6). Panel B: mean, SD, and SEM versus sample size (0 to 60).]

Figure 1. A. The histogram of the number of dots on the face of a die. B. The sample mean, sample SD, and sample SEM as a function of sample size.

The Q-test is used to identify an outlier, which is either the smallest or the largest value in the data set (5). The suspect value is deleted if the Q-statistic

[11] $Q = \frac{\left|\text{value of the suspect data} - \text{value of the nearest data}\right|}{\text{value of the largest data} - \text{value of the smallest data}} > Q_c.$

Table 1 contains values of $Q_c$. The Q-test should be used to delete only a single entry from a data set.

Table 1. Q-test critical values (Q_c) for 90% confidence.

N (sample size)   3     4     5     6     7     8     9     10
Q_c               0.94  0.76  0.64  0.56  0.51  0.47  0.44  0.41

For example, is the value of 25 an outlier in the following data set?

10, 11, 13, 14, 25.

In this example,

$Q = \frac{25 - 14}{25 - 10} = 0.73 > Q_c = 0.64$

thus, the value of 25 is an outlier and may be deleted in subsequent data analysis.
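The Q-test can be sketched in a few lines of Python. The function q_test is hypothetical; it assumes the suspect value is whichever extreme (smallest or largest) lies farther from its nearest neighbor, and it only knows the 90% critical values of table 1.

```python
# Critical values Q_c at 90% confidence, copied from table 1 (keys are sample sizes N).
Q_CRIT_90 = {3: 0.94, 4: 0.76, 5: 0.64, 6: 0.56, 7: 0.51, 8: 0.47, 9: 0.44, 10: 0.41}

def q_test(data):
    """Q-test (equation 11) on the more suspect extreme value.

    Returns (suspect value, Q, Q_c, is_outlier)."""
    x = sorted(data)
    n = len(x)
    if n not in Q_CRIT_90:
        raise ValueError("table 1 covers only 3 <= N <= 10")
    spread = x[-1] - x[0]
    if spread == 0:
        raise ValueError("all values are identical")
    q_low = (x[1] - x[0]) / spread     # suspect = smallest value
    q_high = (x[-1] - x[-2]) / spread  # suspect = largest value
    suspect, q = (x[0], q_low) if q_low > q_high else (x[-1], q_high)
    q_c = Q_CRIT_90[n]
    return suspect, q, q_c, q > q_c

suspect, q, q_c, outlier = q_test([10, 11, 13, 14, 25])
print(suspect, round(q, 2), q_c, outlier)   # 25 0.73 0.64 True
```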

Regression analysis is used in “curve fitting” (2, 5, 6), a method to determine the parameters in an equation that describes the data. The method is applicable to nonlinear equations, but for simplicity, we shall demonstrate its use with the equation

[12] y = mx.


The basis of regression analysis using the method of least squares is to minimize the sum of the squares of the differences between the experimental values and the values predicted by the best-fit line. That is, our goal concerning equation 12 is to find the value of m for which

[13] $R = \sum (\text{experimental value} - \text{predicted value})^2 = \sum (y_i - m x_i)^2 = \sum (y_i^2 - 2 m x_i y_i + m^2 x_i^2)$

is a minimum, which occurs at

[14] $\frac{\partial R}{\partial m} = -2 \sum x_i y_i + 2 m \sum x_i^2 = 0$

since $\frac{\partial^2 R}{\partial m^2} = 2 \sum x_i^2 > 0$; thus

[15] $m = \frac{\sum x_i y_i}{\sum x_i^2}$

where there is a single minimum. In cases with multiple minima, choose the absolute (global) minimum. When the equation has more parameters, evaluate the partial derivatives of R with respect to each parameter, set them equal to zero, and solve the resulting system of equations. Both graphing calculators and MS Excel have built-in capabilities to fit a number of functions, so the students may not need to calculate the values of the parameters in the function; they only need to understand its basis. The use of a weighted least-squares regression analysis (4, 7) is beyond the scope of this supplement.
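Equation 15 translates directly into code. In this sketch the function names and the data are made up for illustration; the two assert lines check numerically that R (equation 13) is larger for slopes slightly above or below the least-squares value, consistent with the second-derivative test.

```python
def slope_through_origin(xs, ys):
    """Least-squares slope m for y = m x (equation 15): m = sum(x*y) / sum(x**2)."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and of equal length")
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / sxx

def residual_sum_of_squares(m, xs, ys):
    """R in equation 13 for a trial slope m."""
    return sum((y - m * x) ** 2 for x, y in zip(xs, ys))

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]   # made-up illustrative data, roughly y = 2x
m = slope_through_origin(xs, ys)
# R increases on either side of the least-squares slope
assert residual_sum_of_squares(m, xs, ys) < residual_sum_of_squares(m + 0.01, xs, ys)
assert residual_sum_of_squares(m, xs, ys) < residual_sum_of_squares(m - 0.01, xs, ys)
print(round(m, 4))   # 1.99
```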

Table 2 provides an example of using regression analysis that involves the calibration curve in a Beer’s Law laboratory exercise.


Table 2. Calibration curve for Cu²⁺ at λ = 660 nm.

[Cu²⁺] (mM)   Absorbance
250           1.308
125           0.686
62.5          0.359
31.25         0.186

The concentration of an unknown solution of Cu²⁺ can be calculated using the Beer-Lambert Law

[16] $\text{absorbance} = (\text{molar absorptivity} \times \text{path length}) \times \text{concentration} = \text{constant} \times \text{concentration}.$

The value of the constant, based on the data in table 2 (with concentrations converted to M) and equations 15 and 16, is

[17] $\text{constant} = \frac{\sum \text{concentration} \times \text{absorbance}}{\sum \text{concentration}^2} = \frac{0.25 \times 1.308 + 0.125 \times 0.686 + 0.0625 \times 0.359 + 0.03125 \times 0.186}{0.25^2 + 0.125^2 + 0.0625^2 + 0.03125^2} = 5.313\ \text{M}^{-1}$

thus equation 16 becomes

$\text{absorbance} = 5.313\ \text{M}^{-1}\,[\text{Cu}^{2+}].$
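The arithmetic in equation 17, and the inversion of equation 16 for an unknown, can be checked with the following sketch. It uses the table 2 data with concentrations converted from mM to M; the helper name concentration_from_absorbance is hypothetical.

```python
# Table 2 calibration data; concentrations converted from mM to M.
conc_M = [0.250, 0.125, 0.0625, 0.03125]
absorbance = [1.308, 0.686, 0.359, 0.186]

# Equation 17: least-squares slope through the origin (equation 15 with x = conc, y = absorbance)
constant = sum(c * a for c, a in zip(conc_M, absorbance)) / sum(c * c for c in conc_M)
print(f"constant = {constant:.3f} M^-1")   # constant = 5.313 M^-1

def concentration_from_absorbance(a, k=constant):
    """Invert equation 16: [Cu2+] = absorbance / constant."""
    return a / k

print(f"[Cu2+] = {concentration_from_absorbance(1.0):.3f} M")   # [Cu2+] = 0.188 M
```

For an absorbance of 1.0, the script gives 1.0 / 5.313 M⁻¹ ≈ 0.188 M.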

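For example, if an unknown Cu²⁺ solution has an absorbance of 1.0, then

$[\text{Cu}^{2+}] = \frac{\text{absorbance}}{\text{constant}} = \frac{1.0}{5.313\ \text{M}^{-1}} = 0.188\ \text{M}.$

p-value. A statistical test determines the p-value (P), the probability of error (2, 3, 8), which decides whether there is a “statistically significant difference”. More precisely, P is the probability of obtaining a value of the test statistic at least as extreme as the one observed if the null hypothesis were true. By convention, a difference is considered statistically significant when P < 0.05; when P ≥ 0.05, no statistically significant difference has been detected.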


There are two types of errors in a statistical test (see table 3). The p-values in the rest of this supplement refer to the type I error. The evaluation of the type II error is beyond the scope of this supplement.

Table 3. Types of errors in a statistical test.

                              accept the null hypothesis   reject the null hypothesis
null hypothesis is valid      Correct decision             Type I error
null hypothesis is invalid    Type II error                Correct decision

The p-value depends on three factors. First, it depends on the value of the test statistic, which is a continuous random variable that provides an index of the “difference” in the data being compared in the statistical test. Second, P is equal to a definite integral of the pdf of the test statistic, so P depends on the nature of the pdf. Third, the limits of integration depend upon the null ($H_o$) and alternative ($H_1$) hypotheses.

For the Student-t pdf [equation 22 and figure 2a], there are two types of p-values corresponding to a type I error; which one applies depends on $H_o$ and $H_1$ in the statistical test, and they are known as the 1-sided and 2-sided p-values. The 1-sided (or 1-tail) p-value refers to the case where

[18] $H_o: \bar{X}_1 \geq \bar{X}_2 \quad H_1: \bar{X}_1 < \bar{X}_2 \quad \text{and} \quad P = \int_{-\infty}^{x} f(u)\,du.$

In this situation, $\bar{X}_1$ is the mean of sample 1, $\bar{X}_2$ is the mean of sample 2, x is the value of the test statistic, f is its pdf, and u is the variable of integration. Another 1-sided p-value refers to the case where

[19] $H_o: \bar{X}_1 \leq \bar{X}_2 \quad H_1: \bar{X}_1 > \bar{X}_2 \quad \text{and} \quad P = \int_{x}^{\infty} f(u)\,du.$

The 2-sided (or 2-tail) p-value refers to the case where

$H_o: \bar{X}_1 = \bar{X}_2 \quad H_1: \bar{X}_1 \neq \bar{X}_2$

which could be interpreted as

$H_o: \bar{X}_1 = \bar{X}_2 \quad H_1: \bar{X}_1 < \bar{X}_2 \ \text{or}\ \bar{X}_1 > \bar{X}_2$

and

[20] $P = \int_{-\infty}^{-|x|} f(u)\,du + \int_{|x|}^{\infty} f(u)\,du.$

Notice that if the pdf is symmetric about x = 0, then $\int_{-\infty}^{-|x|} f(u)\,du = \int_{|x|}^{\infty} f(u)\,du$, and P(2-tail) = 2 P(1-tail) for the same value of the test statistic (e.g., see tables 4 and 5).

To further clarify the difference between the 1-tail and 2-tail p-values, examine figure 2a, which shows an example of the pdf for the Student-t distribution. Changing the number of degrees of freedom (df, which depends on the sample size) changes the pdf, but its general shape remains the same. The area under the curve represents the probability. For df = 10, the region where P < 0.05 in the 1-tail case [equation 19] corresponds to the area under the curve in figure 2a from t = 1.8 to infinity, while in the 2-tail case [equation 20] it corresponds to the sum of the areas under the curve from t = 2.2 to infinity and from t = -infinity to -2.2.

That is, for a given P (i.e., area under the curve), the critical value of the test statistic in the 1-tail case is smaller than in the 2-tail case, so it is easier to detect a statistically significant difference using the 1-tail p-value than the 2-tail p-value.

[Figure 2 image. Panel A: f(t) versus t from -6 to 6, with the 1-tail and 2-tail regions marked. Panel B: g(F) versus F from 0 to 6.]

Figure 2. An example of the probability density function for the Student-t distribution (A; df = 10) and the F-distribution (B; df1 = df2 = 6).

t-test.

There are two types of t-tests (2, 3, 8). The 2-sample t-test (also known as an independent-sample or unpaired-sample t-test) compares the means of two independent samples, while the 1-sample t-test (also known as a correlated-sample or paired-sample t-test) usually determines whether the mean difference between paired data, or the mean of a single sample, is zero. Data from independent samples must be analyzed using the 2-sample t-test, while data from paired samples should be analyzed by the 1-sample t-test, but may be analyzed by the 2-sample t-test. For paired data, the 1-sample t-test is usually more likely to detect a difference than the 2-sample t-test.

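The test statistic in the 2-sample t-test is

[21a] $t = \frac{\text{difference between the means}}{\text{variation in the data}} = \frac{\bar{X}_1 - \bar{X}_2}{S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$

where

$S_p^2 = \frac{(n_1 - 1)\,\text{SD}_1^2 + (n_2 - 1)\,\text{SD}_2^2}{n_1 + n_2 - 2}$

and $n_1$ and $n_2$ are the sample sizes of groups 1 and 2, respectively. If $n_1 = n_2 = n$, then

[21b] $t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\frac{\text{SD}_1^2 + \text{SD}_2^2}{n}}}.$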

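The pdf of the test statistic is the Student-t distribution (shown in figure 2a)

[22] $f(t, \nu) = \frac{\Gamma\left(\frac{\nu + 1}{2}\right)}{\sqrt{\nu \pi}\,\Gamma\left(\frac{\nu}{2}\right)} \left(1 + \frac{t^2}{\nu}\right)^{-\frac{\nu + 1}{2}} \quad \text{for } -\infty < t < \infty$

with ν degrees of freedom, where $\nu = n_1 + n_2 - 2$ and the gamma function is

[23] $\Gamma(x) = \int_{0}^{\infty} y^{x - 1} e^{-y}\,dy = (x - 1)!$ (for x = a positive integer)

and

[24] $\Gamma\left(m + \tfrac{1}{2}\right) = \frac{\Gamma(2m + 1)\,2^{-2m}\sqrt{\pi}}{\Gamma(m + 1)}$ (for m = a nonnegative integer).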

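The probability of making a type I error (i.e., of rejecting the null hypothesis when it is true) is, for t ≥ 0,

$P(\text{1-tail}) = \int_{-\infty}^{-t} f(u, \nu)\,du = \int_{t}^{\infty} f(u, \nu)\,du$

where

$\int_{0}^{\infty} f(u, \nu)\,du = \int_{0}^{t} f(u, \nu)\,du + \int_{t}^{\infty} f(u, \nu)\,du = 0.5$

(see figure 2a to visualize this relationship); thus,

[25] $P(\text{1-tail}) = \int_{t}^{\infty} f(u, \nu)\,du = 0.5 - \int_{0}^{t} f(u, \nu)\,du = 0.5 - \frac{\Gamma\left(\frac{\nu + 1}{2}\right)}{\sqrt{\nu \pi}\,\Gamma\left(\frac{\nu}{2}\right)} \int_{0}^{t} \left(1 + \frac{u^2}{\nu}\right)^{-\frac{\nu + 1}{2}} du$

and

$P(\text{2-tail}) = 1 - \frac{2\,\Gamma\left(\frac{\nu + 1}{2}\right)}{\sqrt{\nu \pi}\,\Gamma\left(\frac{\nu}{2}\right)} \int_{0}^{t} \left(1 + \frac{u^2}{\nu}\right)^{-\frac{\nu + 1}{2}} du.$

Notice that both equations 23 and 24 were used to evaluate equation 25.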
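Equation 25 can be evaluated numerically once the pdf of equation 22 is coded. The sketch below uses Python’s math.gamma in place of equations 23 and 24 and integrates with the composite Simpson’s rule, one common numerical method of the kind described in reference 6 (not necessarily the one used to produce the p-values in tables 4 and 5). The function names are hypothetical. As a check, the tabulated critical values for df = 10 (1.812 for the 1-tail case and 2.228 for the 2-tail case, the 1.8 and 2.2 of figure 2a) should return P close to 0.05.

```python
import math

def student_t_pdf(t, nu):
    """Equation 22: Student-t probability density with nu degrees of freedom."""
    coef = math.gamma((nu + 1) / 2) / (math.sqrt(nu * math.pi) * math.gamma(nu / 2))
    return coef * (1 + t * t / nu) ** (-(nu + 1) / 2)

def simpson(f, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def t_test_p_values(t, nu):
    """Equation 25: returns (1-tail P, 2-tail P) for a test statistic t."""
    area = simpson(lambda u: student_t_pdf(u, nu), 0.0, abs(t))  # integral from 0 to |t|
    p_one = 0.5 - area
    return p_one, 2 * p_one

print(t_test_p_values(1.812, 10))   # 1-tail P close to 0.05
print(t_test_p_values(2.228, 10))   # 2-tail P close to 0.05
```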

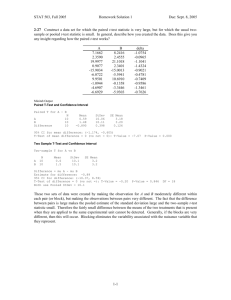

Table 4 provides an example of an experiment analyzed with the 2-sample t-test. The compositions of pre- and post-1982 pennies are different, so their densities may differ.

As P < 0.05 (for either the 1-tail or the 2-tail p-value), there is a statistically significant difference between the (average) densities of pre- and post-1982 pennies.


Table 4. The density of pre- and post-1982 pennies. The 2-sample t-test was used to determine the p-value: P(1-tail) = 0.000383; P(2-tail) = 0.000765.

          Density (g/mL) of pre-1982 pennies   Density (g/mL) of post-1982 pennies
          9.32                                 6.59
          9.69                                 7.57
          9.25                                 7.5
          8.86                                 6.29
X̄ ± SD   9.28 ± 0.34                          6.99 ± 0.65

The test statistic [equation 21b] using the data in table 4 is

$t = \frac{9.28 - 6.99}{\sqrt{\frac{0.34^2 + 0.65^2}{4}}} = 6.24$

and the p-value [equation 25] was determined by using numerical methods (6) to evaluate the definite integral. A description of numerical methods is beyond the scope of this supplement.
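A sketch of the 2-sample t-test (equation 21a) applied to the raw data of table 4 follows; the function name two_sample_t is hypothetical. Because it works from the unrounded data, it gives t ≈ 6.29 rather than the 6.24 obtained from the rounded means and SDs. The resulting t and ν could then be passed to a numerical evaluation of equation 25.

```python
import math
import statistics

def two_sample_t(sample1, sample2):
    """Pooled-variance 2-sample t statistic (equation 21a) and its degrees of freedom."""
    n1, n2 = len(sample1), len(sample2)
    m1, m2 = statistics.mean(sample1), statistics.mean(sample2)
    v1, v2 = statistics.variance(sample1), statistics.variance(sample2)  # n - 1 in denominator
    sp2 = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)                # pooled variance S_p^2
    t = (m1 - m2) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

pre_1982 = [9.32, 9.69, 9.25, 8.86]    # table 4, density in g/mL
post_1982 = [6.59, 7.57, 7.5, 6.29]
t, nu = two_sample_t(pre_1982, post_1982)
print(f"t = {t:.2f} with {nu} degrees of freedom")   # t = 6.29 with 6 degrees of freedom
```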

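The test statistic in the 1-sample t-test is

[26] $t = \frac{\overline{\text{difference}}}{\text{SEM}_{\text{difference}}} = \frac{\overline{\text{difference}}}{\text{SD}_{\text{difference}}/\sqrt{n}}$ or $t = \frac{\text{sample mean}}{\text{sample SD}/\sqrt{n}}$

which is also described by the Student-t distribution, here with ν = n - 1 degrees of freedom, and is used to determine the p-value [equations 22-25].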

The 1-sample t-test was used to analyze the data in table 5. In this experiment, the experimentally determined change in enthalpy for dissolving solid sodium hydroxide in water was compared with its theoretical value. As P < 0.05 (for either the 1-tail or the 2-tail case), there is a statistically significant difference between the experimental and theoretical values.


Table 5. The experimental determination of the change in enthalpy for dissolving solid sodium hydroxide in water. The 1-sample t-test was used to determine the p-value: P(1-tail) = 0.0058; P(2-tail) = 0.012.

          Experimental value (kJ/mol)   Theoretical value (kJ/mol)   Difference (kJ/mol)
          -37.3                         -44.5                        7.2
          -39.5                                                      5.0
          -38.8                                                      5.7
X̄ ± SD   -38.5 ± 1.1                                                5.97 ± 1.12

The test statistic [equation 26] using the data in table 5 is

$t = \frac{5.97}{1.12/\sqrt{3}} = 9.23$

and the p-value [equation 25] was determined by using numerical methods (6) to evaluate the definite integral.
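The same calculation for the 1-sample t-test (equation 26) on the table 5 data can be sketched as follows. Working from the unrounded differences gives t ≈ 9.19; the 9.23 above comes from the rounded mean and SD. The degrees of freedom, n - 1 = 2, are the standard choice for a 1-sample t-test.

```python
import math
import statistics

experimental = [-37.3, -39.5, -38.8]   # table 5, kJ/mol
theoretical = -44.5                    # kJ/mol

differences = [x - theoretical for x in experimental]     # 7.2, 5.0, 5.7 (up to float rounding)
n = len(differences)
mean_diff = statistics.mean(differences)
sem_diff = statistics.stdev(differences) / math.sqrt(n)   # equation 9 with the sample SD
t = mean_diff / sem_diff                                  # equation 26
print(f"t = {t:.2f} with {n - 1} degrees of freedom")     # t = 9.19 with 2 degrees of freedom
```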

In the 2-sample t-test [equation 21] or the 1-sample t-test [equation 26], the value of the test statistic increases with an increase in the difference between the means, an increase in the sample size, or a decrease in the SD. An increase in the value of the test statistic produces a decrease in P [equation 25; see figure 2a], so each of these changes raises the likelihood of detecting a statistically significant effect using either the 1- or 2-sample t-test. As the investigator has limited control over the difference or the SD in the data, the main way to raise the likelihood of detecting a statistically significant effect is to increase the sample size.
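The effect of the sample size can be illustrated by holding the mean difference and SD of table 5 fixed and changing only n in equation 26. This is a hypothetical what-if calculation, not data from the experiment; it shows that quadrupling n doubles t, because t grows as the square root of n. (A larger n also increases the degrees of freedom, which lowers P further; the sketch does not show that part.)

```python
import math

def one_sample_t(mean_diff, sd, n):
    """Equation 26 written in terms of summary statistics."""
    return mean_diff / (sd / math.sqrt(n))

# Hold the mean difference and SD fixed (table 5 summary values) and vary only n
for n in (3, 6, 12, 24):
    print(n, round(one_sample_t(5.97, 1.12, n), 2))
```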

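The F-test is used to compare the variances of two groups of data (2, 3, 8). The test statistic is

[27] $F = \frac{\text{SD}_1^2}{\text{SD}_2^2}$

where $\text{SD}_1^2 \geq \text{SD}_2^2$ (the larger variance is placed in the numerator), with $\nu_1 = n_1 - 1$ and $\nu_2 = n_2 - 1$ degrees of freedom, respectively. Its pdf, the F-distribution, is

[28] $g(F, \nu_1, \nu_2) = \frac{\Gamma\left(\frac{\nu_1 + \nu_2}{2}\right)}{\Gamma\left(\frac{\nu_1}{2}\right)\Gamma\left(\frac{\nu_2}{2}\right)} \left(\frac{\nu_1}{\nu_2}\right)^{\frac{\nu_1}{2}} F^{\frac{\nu_1}{2} - 1} \left(1 + \frac{\nu_1}{\nu_2} F\right)^{-\frac{\nu_1 + \nu_2}{2}}$

for F > 0; otherwise, g(F) = 0. The pdf of the F-statistic is shown in figure 2b. The p-value is

[29] $P = \int_{F}^{\infty} g(w, \nu_1, \nu_2)\,dw = 1 - \frac{\Gamma\left(\frac{\nu_1 + \nu_2}{2}\right)}{\Gamma\left(\frac{\nu_1}{2}\right)\Gamma\left(\frac{\nu_2}{2}\right)} \left(\frac{\nu_1}{\nu_2}\right)^{\frac{\nu_1}{2}} \int_{0}^{F} w^{\frac{\nu_1}{2} - 1} \left(1 + \frac{\nu_1}{\nu_2} w\right)^{-\frac{\nu_1 + \nu_2}{2}} dw$

(see figure 2b to visualize this relationship), where equations 23 and 24 were used to evaluate the gamma functions. Notice that the more the two SDs differ, the larger the value of F and the smaller the value of P, which raises the likelihood of detecting a statistically significant difference between the SDs.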

Analysis of variance (ANOVA) refers to a group of statistical tests that differ in the number of factors that may affect the outcome of the experiment (2, 3, 4, 8). One-factor ANOVA (1-ANOVA) is used to examine one factor that may affect the outcome of an experiment, while two-factor ANOVA (2-ANOVA) examines two such factors. A factor has two or more levels; e.g., the concentration of a drug is a factor, while the different concentrations of the drug are the levels of this factor. Higher-order ANOVA (which examines three or more factors that may affect the outcome of an experiment) and repeated-measures ANOVA (which takes the place of multiple correlated-sample t-tests) are beyond the scope of this supplement.

1-ANOVA is used to compare the means of three or more levels (or experimental conditions). While multiple independent-sample t-tests, one for each pair of levels, could achieve the same result, this approach increases the probability of a type I error (3, 4).

1-ANOVA compares all of the means at once without incurring this increase in the probability of error. 1-ANOVA detects, but does not identify, the presence of pair(s) of means that are different. The specific pair(s) that are different are identified by a post-ANOVA multiple comparison test (3, 4, 8), e.g., the Tukey test.
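The growth in the probability of error with multiple t-tests can be estimated with a one-line formula. This sketch assumes the pair-wise tests are independent, which is only approximately true because they share groups, so the result is an approximation; the function name is hypothetical.

```python
def familywise_error(alpha, m):
    """Probability of at least one type I error in m independent tests, each at level alpha."""
    return 1 - (1 - alpha) ** m

k = 3                      # number of groups (levels)
m = k * (k - 1) // 2       # number of pair-wise t-tests
print(m, round(familywise_error(0.05, m), 3))   # 3 0.143
```

With only three groups, the chance of at least one false positive is already about 14%, not 5%.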

In 1-ANOVA, the test statistic is

[30] $F = \frac{\text{MS(Tr)}}{\text{MSE}}$

where MSE is the “mean square error” and MS(Tr) is the “mean square treatment”. The basis of 1-ANOVA is as follows. MSE and MS(Tr) are two different estimates of the population variance. If there was a treatment effect, then the means of the various experimental conditions are not from the same population (i.e., they are different), so using the SEM to estimate the population variance (the basis of MS(Tr) as an estimate of the variance) is invalid. An assumption in 1-ANOVA is that all experimental conditions, irrespective of a treatment effect, have the same variance, so the MSE, which is the average variance of all groups, is a valid estimate of the variance. The F-test is used to compare these two estimates of the variance. If MS(Tr) and MSE do not differ significantly, then no treatment effect is detected; if MS(Tr) is significantly larger than MSE, then there was a treatment effect.

The MSE is

[31a] $\text{MSE} = \frac{\sum_{i=1}^{n_1} (x_{1i} - \bar{X}_1)^2 + \sum_{i=1}^{n_2} (x_{2i} - \bar{X}_2)^2 + \dots + \sum_{i=1}^{n_k} (x_{ki} - \bar{X}_k)^2}{n_t - k}$

where $\bar{X}_k$ is the mean of the kth group, $x_{ki}$ is the ith sample in the kth group, $n_k$ = number of observations in the kth group, k = number of experimental conditions, and $n_t$ is the total number of observations = $n_1 + n_2 + \dots + n_k$. Equation 31a is simplified by using equations 3 and 7 to obtain

[31b] $\text{MSE} = \frac{(n_1 - 1)\,\text{variance}_1 + (n_2 - 1)\,\text{variance}_2 + \dots + (n_k - 1)\,\text{variance}_k}{n_t - k}.$

For $n_1 = n_2 = \dots = n_k = n$, equation 31b becomes

[31c] $\text{MSE} = \frac{(n - 1)\left[\text{variance}_1 + \text{variance}_2 + \dots + \text{variance}_k\right]}{kn - k} = \frac{\text{variance}_1 + \text{variance}_2 + \dots + \text{variance}_k}{k}.$

Depending on whether the groups have the same sample size, the MSE is the average variance [equation 31c] or the weighted average variance [equation 31b] of the groups.

The MS(Tr) is

[32a] $\text{MS(Tr)} = \frac{\sum_{i=1}^{k} n_i (\bar{X}_i - \bar{X}_T)^2}{k - 1}$

where $\bar{X}_T$ = mean of all observations. For $n_1 = n_2 = \dots = n_k = n$, equation 32a becomes

[32b] $\text{MS(Tr)} = n\,\frac{\sum_{i=1}^{k} (\bar{X}_i - \bar{X}_T)^2}{k - 1}.$

The SEM is the SD of a sample of means ($\text{SD}_{\text{means}}$) from the same population, so using equation 3,

[32c] $\text{SEM} = \text{SD}_{\text{means}} = \sqrt{\frac{\sum_{i=1}^{k} (\bar{X}_i - \bar{X}_T)^2}{k - 1}}.$

Squaring equation 9 and solving for SD²,

[32d] $\text{population SD}^2 = n\,\text{SEM}^2$

then substituting equation 32c into equation 32d, followed by using equation 7,

[32e] $\text{variance} = n\,\frac{\sum_{i=1}^{k} (\bar{X}_i - \bar{X}_T)^2}{k - 1}$

which is an estimate of the variance of all observations. Comparing equations 32b and 32e,

[32f] $\text{MS(Tr)} = \text{variance} = n\,\text{SEM}^2 = n\,\frac{\sum_{i=1}^{k} (\bar{X}_i - \bar{X}_T)^2}{k - 1}$

which shows that the MS(Tr) is an estimate of the variance based on the SEM. In contrast, when the sample sizes are different, the relationship between the MS(Tr) and the SEM is less clear.

Table 6 contains an example of an experiment in which 1-ANOVA and the Tukey test were used in the analysis. This experiment compares the melting points of various organic compounds. As P < 0.05, there was a statistically significant difference between at least one pair of samples. The subsequent Tukey test identifies the differences as being between samples B and C and between samples A and C.


Table 6. The melting points of 3 samples. 1-ANOVA based p-value = 0.00015; Tukey’s test: P > 0.05 for A versus B, while P < 0.01 for A versus C and for B versus C.

                                Sample A    Sample B    Sample C
Experimental data               80          81          55
(melting point; °C)             80          72          58
                                83          77          52
X̄ ± SD                         81 ± 1.7    77 ± 4.5    55 ± 3

Using equation 31c,

$\text{MSE} = \frac{1.7^2 + 4.5^2 + 3^2}{3} = 10.71$

while using equation 2,

$\text{mean} = \frac{80 + 80 + 83 + 81 + 72 + 77 + 55 + 58 + 52}{9} = 71$

and using equation 32f,

$\text{MS(Tr)} = n\,\frac{\sum_{i=1}^{k} (\bar{X}_i - \bar{X}_T)^2}{k - 1} = 3 \times \frac{(81 - 71)^2 + (77 - 71)^2 + (55 - 71)^2}{2} = 588.$

The test statistic [equation 30] is

$F = \frac{\text{variance based on the SEM}}{\text{average variance of the groups}} = \frac{\text{MS(Tr)}}{\text{MSE}} = \frac{588}{10.71} = 54.9$

which was used to calculate the p-value [equation 29] by using numerical methods (6) to evaluate the definite integral.
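The 1-ANOVA calculation can be repeated from the raw data of table 6 with a short sketch (the function name one_way_anova is hypothetical) that uses equations 31b, 32a, and 30. Working from unrounded values gives F ≈ 54.0 rather than the 54.9 above. For the special case ν₁ = k - 1 = 2, the integral in equation 29 has the closed form P = (1 + 2F/ν₂)^(-ν₂/2), so no numerical integration is needed here; the result, P ≈ 0.00015, agrees with table 6.

```python
import statistics

def one_way_anova(groups):
    """1-ANOVA: returns (F, nu1, nu2) using equations 31b, 32a, and 30."""
    k = len(groups)
    n_t = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_t
    # Equation 31b: weighted average of the group variances
    mse = sum((len(g) - 1) * statistics.variance(g) for g in groups) / (n_t - k)
    # Equation 32a: variance estimate from the spread of the group means
    ms_tr = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups) / (k - 1)
    return ms_tr / mse, k - 1, n_t - k

samples = {"A": [80, 80, 83], "B": [81, 72, 77], "C": [55, 58, 52]}  # table 6, degrees C
F, nu1, nu2 = one_way_anova(list(samples.values()))

# Closed form of equation 29, valid only when nu1 = 2
assert nu1 == 2, "the closed form below needs 2 numerator degrees of freedom"
p = (1 + 2 * F / nu2) ** (-nu2 / 2)
print(f"F = {F:.1f} with ({nu1}, {nu2}) df, P = {p:.5f}")  # F = 54.0 with (2, 6) df, P = 0.00015
```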

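The Tukey test uses a q-statistic (4, 8)

$q = \frac{\bar{X}_{\text{big}} - \bar{X}_{\text{small}}}{\sqrt{\frac{\text{MSE}}{2}\left(\frac{1}{n_{\text{big}}} + \frac{1}{n_{\text{small}}}\right)}}$

with k and $n_T - k$ degrees of freedom, where $n_T$ = total number of observations. Unlike the preceding test statistics, the pdf of the q-statistic (the studentized range distribution) has no simple closed form, so values of the q-statistic for a given P and degrees of freedom are found in tables (4) or by using a web-based calculator (8). A significant difference between a pair of means occurs when (8)

[33] $\bar{X}_{\text{big}} - \bar{X}_{\text{small}} \geq q \sqrt{\frac{\text{MSE}}{2}\left(\frac{1}{n_{\text{big}}} + \frac{1}{n_{\text{small}}}\right)}.$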

For example, to compare sample A versus B in table 6, using q(k = 3, $n_T - k$ = 6; P = 0.05) = 4.34 and equation 33,

$81 - 77 \geq 4.34 \sqrt{\frac{10.71}{2}\left(\frac{1}{3} + \frac{1}{3}\right)}\ ?$

$4 \geq 8.2$ is false; thus, there is no significant difference between samples A and B. On the other hand, to compare samples A versus C using q(k = 3, $n_T - k$ = 6; P = 0.01) = 6.32 and equation 33,

$81 - 55 \geq 6.32 \sqrt{\frac{10.71}{2}\left(\frac{1}{3} + \frac{1}{3}\right)}\ ?$

$26 \geq 11.9$ is true; thus, there is a significant difference between samples A and C.
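Equation 33 can be checked with the following sketch. The q values (4.34 at P = 0.05 and 6.32 at P = 0.01, for k = 3 and n_T - k = 6) are taken from the text rather than computed, because the studentized range distribution normally requires tables or specialized software; the function name tukey_min_difference is hypothetical.

```python
import math

def tukey_min_difference(q_crit, mse, n_big, n_small):
    """Right-hand side of equation 33: smallest difference of means that is significant."""
    return q_crit * math.sqrt(mse / 2 * (1 / n_big + 1 / n_small))

means = {"A": 81, "B": 77, "C": 55}   # table 6 group means (n = 3 each)
mse = 10.71                           # from equation 31c
# q values for k = 3 and n_T - k = 6 degrees of freedom, as quoted in the text
for (g1, g2), q_crit, level in [(("A", "B"), 4.34, 0.05), (("A", "C"), 6.32, 0.01)]:
    threshold = tukey_min_difference(q_crit, mse, 3, 3)
    diff = abs(means[g1] - means[g2])
    print(f"{g1} vs {g2}: {diff} >= {threshold:.1f}? {diff >= threshold} (P = {level})")
```

The two printed comparisons reproduce the hand calculation: 4 ≥ 8.2 is false and 26 ≥ 11.9 is true.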

2-ANOVA (2, 4, 8) is similar to 1-ANOVA, but examines the effect of two factors, rather than one, that may affect the outcome of an experiment. In addition, 2-ANOVA identifies any interaction (4) between the two factors, which means that the effects of the two factors are not independent, i.e., the effect of one factor depends on the level of the other factor. A description of multiple comparison tests associated with 2-ANOVA is beyond the scope of this supplement.

The data in table 7 examine the effect of two factors, the [HCl] and the duration of HCl treatment, on the determination of the % zinc in post-1982 pennies, using 2-ANOVA. The basis of this experiment is that these pennies contain zinc and copper, and the selective oxidation of zinc by HCl allows the determination of the % zinc in these pennies. The statistical analysis shows that there was no interaction between the effects of [HCl] and the duration of HCl treatment, which simplifies our conclusions.

Furthermore, the statistical analysis shows that a higher [HCl] increases the oxidation of zinc by HCl, but the duration of the HCl treatment did not have a statistically significant effect on the results.

Table 7. The effects of the concentration of HCl and the duration of the HCl treatment on the experimentally determined % zinc in post-1982 pennies. 2-ANOVA was used in the data analysis: P(column) = 0.0002; P(row) = 0.38; P(interaction) = 0.60.

                          [HCl] = 3.0 M             [HCl] = 6.0 M             X̄ ± SD (row)
1-day HCl treatment       45, 23, 30, 41, 18        74, 73, 82, 74, 54        51 ± 23 %
[X̄ ± SD; n = 5]          [31 ± 12 %]               [71 ± 10 %]
2-day HCl treatment       42, 76, 16, 54, 15, 51    84, 54, 54, 95, 64, 94    58 ± 26 %
[X̄ ± SD; n = 6]          [42 ± 24 %]               [74 ± 19 %]
X̄ ± SD (column)          37 ± 19 %                 73 ± 15 %

The calculation of the test statistics in 2-ANOVA is similar to that in 1-ANOVA, where

$F(\text{row}) = \frac{\text{MS(Tr: row)}}{\text{MSE}}$ and $F(\text{column}) = \frac{\text{MS(Tr: column)}}{\text{MSE}}$

and numerical methods (6) were used to evaluate the definite integral of equation 29 to obtain the p-value. The subsequent calculations in this section use the data in table 7. Using equation 2,

$\text{mean} = \frac{45 + 23 + 30 + \dots\ (\text{add all data in table 7})}{22} = 55.14.$

The MS(Tr: row) and MS(Tr: column) terms were calculated in a manner similar to the 1-ANOVA case [equation 32a],

$\text{MS(Tr)} = \frac{\sum_{i=1}^{k} n_i (\bar{X}_i - \bar{X}_T)^2}{k - 1}$

where k, $n_i$, and $\bar{X}_i$ correspond to the values in the columns or rows (we did not use equation 32f, since the number of samples in each experimental condition is different).

The estimates of the population variance are

$\text{MS(Tr: rows)} = \frac{\sum_{i=1}^{k} n_i (\bar{X}_i - \bar{X}_T)^2}{k - 1} = \frac{10(51.4 - 55.14)^2 + 12(58.25 - 55.14)^2}{2 - 1} = 255.9$

and

$\text{MS(Tr: columns)} = \frac{\sum_{i=1}^{k} n_i (\bar{X}_i - \bar{X}_T)^2}{k - 1} = \frac{11(37.36 - 55.14)^2 + 11(72.91 - 55.14)^2}{2 - 1} = 6951.$

The calculation of the MSE is similar to the 1-ANOVA case, where we shall use equation 31b (we did not use equation 31c, since the number of samples in each experimental condition was different):

$\text{MSE} = \frac{(n_1 - 1)\,\text{variance}_1 + (n_2 - 1)\,\text{variance}_2 + \dots + (n_k - 1)\,\text{variance}_k}{n_t - k} = \frac{4(12^2) + 4(10^2) + 5(24^2) + 5(19^2)}{22 - 4} = 314.5.$

Substituting these values into the test statistics,

$F(\text{row}) = \frac{\text{MS(Tr: row)}}{\text{MSE}} = \frac{255.9}{314.5} = 0.81$ and $F(\text{column}) = \frac{\text{MS(Tr: column)}}{\text{MSE}} = \frac{6951}{314.5} = 22.1$

which were used to calculate the p-values. The calculation of F(interaction) is beyond the scope of this supplement.
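The two main-effect F values can be recomputed from the raw data of table 7. This sketch follows the supplement’s own approach (equation 32a for each factor, equation 31b over the four cells) and, like the text, does not compute the interaction term; the names are hypothetical. Because it uses unrounded SDs, it gives MSE ≈ 310.4, F(column) ≈ 22.4, and F(row) ≈ 0.82 instead of 314.5, 22.1, and 0.81. With unequal cell sizes, statistical packages may partition the sums of squares differently (e.g., Type III sums of squares), so their values can differ somewhat.

```python
import statistics

# Table 7: % zinc, keyed by ([HCl] in M, duration of HCl treatment in days)
data = {
    (3.0, 1): [45, 23, 30, 41, 18],
    (3.0, 2): [42, 76, 16, 54, 15, 51],
    (6.0, 1): [74, 73, 82, 74, 54],
    (6.0, 2): [84, 54, 54, 95, 64, 94],
}

def ms_treatment(groups, grand_mean):
    """Equation 32a applied to the pooled observations at each level of one factor."""
    k = len(groups)
    return sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups) / (k - 1)

by_conc = {}   # "columns" in table 7
by_days = {}   # "rows" in table 7
for (conc, days), cell in data.items():
    by_conc.setdefault(conc, []).extend(cell)
    by_days.setdefault(days, []).extend(cell)

all_obs = [x for cell in data.values() for x in cell]
grand_mean = statistics.mean(all_obs)
n_t, n_cells = len(all_obs), len(data)

# Equation 31b over the four cells
mse = sum((len(c) - 1) * statistics.variance(c) for c in data.values()) / (n_t - n_cells)
F_col = ms_treatment(list(by_conc.values()), grand_mean) / mse
F_row = ms_treatment(list(by_days.values()), grand_mean) / mse
print(f"MSE = {mse:.1f}; F(column, [HCl]) = {F_col:.1f}; F(row, duration) = {F_row:.2f}")
```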

The statistical tests in this supplement are parametric tests, which assume that the data can be described by the normal pdf. Nonparametric tests (2, 3, 8) do not have this requirement and make fewer assumptions about the nature of the data than parametric tests. Nonparametric tests were not used in my Chemistry classes because I did not wish to overwhelm my students with statistics. I chose parametric rather than nonparametric statistical tests because of the robustness and greater power of the parametric tests (3, 4).

Further arguments concerning the use of a parametric versus a nonparametric test, or a description of nonparametric tests, are beyond the scope of this supplement. Ultimately, the choice between a parametric and a nonparametric test is a matter of opinion.

Table 8 is a summary of the situations to use the various statistical tests described in this supplement.


Table 8. Comparison of various statistical tests.

Statistical test          Compare SD   Compare mean   # groups              Ho and H1
F-test                    √                           2                     Ho: SD1 = SD2; H1: SD1 ≠ SD2
1-tail, 1-sample t-test                √              1 or 2                Ho: ∆x ≤ 0, H1: ∆x > 0; or Ho: ∆x ≥ 0, H1: ∆x < 0
2-tail, 1-sample t-test                √              1 or 2                Ho: ∆x = 0; H1: ∆x ≠ 0
1-tail, 2-sample t-test                √              2                     Ho: X̄1 ≤ X̄2, H1: X̄1 > X̄2; or Ho: X̄1 ≥ X̄2, H1: X̄1 < X̄2
2-tail, 2-sample t-test                √              2                     Ho: X̄1 = X̄2; H1: X̄1 ≠ X̄2
1-ANOVA                                √              3 or more; 1 factor   Ho: X̄1 = X̄2; H1: X̄1 ≠ X̄2
2-ANOVA                                √              3 or more; 2 factors  Ho: X̄1 = X̄2; H1: X̄1 ≠ X̄2


References

1. http://faculty.vassar.edu/lowry/VassarStats.html; http://members.aol.com/johnp71/javastat.html (accessed August 2004).
2. Freund, J. E.; Walpole, R. E. Mathematical Statistics, 4th ed.; Prentice Hall: NJ, 1987.
3. Glantz, S. A. Primer of Biostatistics, 4th ed.; McGraw-Hill: NJ, 1997.
4. Kleinbaum, D. J.; Kupper, L. L.; Muller, K. E. Applied Regression Analysis and Other Multivariable Methods, 2nd ed.; PWS-Kent: MA, 1988.
5. Shoemaker, D. P.; Garland, C. W.; Steinfeld, J. I. Experiments in Physical Chemistry, 3rd ed.; McGraw-Hill: NY, 1974.
6. Cheney, W.; Kincaid, D. Numerical Mathematics and Computing, 2nd ed.; Brooks/Cole: CA, 1985.
7. de Levie, R. J. Chem. Educ. 1986, 63, 10-15.
8. http://faculty.vassar.edu/lowry/webtext.html (accessed August 2004).
