Iterative Solution of Linear Equations

advertisement

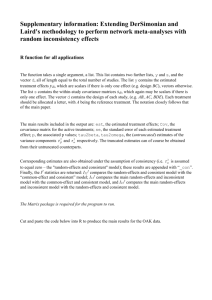

Iterative Solution of Linear Equations Preface to the existing class notes At the risk of mixing notation a little I want to discuss the general form of iterative methods a general level. We are trying to solve a linear system Ax=b, in a situation where cost of direct solution for matrix A is prohibitive. One approach starts by writing A as the sum of two matrices A=E+F. With E chosen so that a linear system in the form Ey=c can be solved easily by direct means. Now we rearrange the original linear equation to the form: Ex = b - Fx Introduce a series of approximations to the solution x starting with an initial guess x (0) and proceeding to the latest available approximation x(j). The next approximation is obtained from the equation: E x(j+1) = b - F x(j) or x(j+1) = E-1 (b - F x(j)). Understand that we never actually generate the inverse of E, just solve the equation for x (j+1). To understand convergence properties of this iteration introduce the residual r (j) = b - A x(j). Using this definition and the definition of the iteration, we get the useful expression: r(j+1) = FE-1 r(j), or r(j) = (FE-1)j r(j). If you know something about linear algebra this tells you that the absolute values of eigenvalues of FE-1 should be less than one. Another approach is to choose a matrix M such that the product of M-1 and A is close to the identity matrix and the solution of an equation like My=c can be obtained without too much effort. The revised problem can be cast either in the form M-1Ax = M-1b Or AM-1 y = b where x = Beginning of normal ME540 Notes a) Introduction i) Procedure – general 1) Assume initial values for the variable field 2) Use the nodal finite difference equations one at a time or in groups to obtain an improved value for the variables. Repeat the procedure until a converged solution is obtained. ii) Advantages Iterative methods are used because: Requires less computer storage. Is reasonably computational fast. There is, however, no guarantee that a converged solution can be obtained. iii) Symbols to be used exact solution of differential equation exact solution of finite difference equations ̂ computer solution to finite difference equations iv) Convergence The solution is said to be converged when ˆ ( j) Limit j where j is an index for the iteration number. b) Procedure – details i) Rearrange coefficient matrix Solution required H d Modify H matrix by taking each equation and dividing it by diagonal coefficient to form a “C” matrix c ij h ij h ii , gi di h ii New Expression C g The C Matrix will have all its diagonal elements with the value 1. ii) Iterative set of equations ( O ) initial value of variable for calculation Cˆ ( j) g residual r ( j) g Cˆ ( j) Introduce identity matrix, I 1 1 "0" 1 1 "0" 1 1 Add ˆ ( j1) to both sides of Cˆ ( j) g ˆ ( j1) to Cˆ ( j) g ˆ ( j1) Transpose Cˆ ( j) and let ˆ ( j1) on the right hand side be ̂ ( j) ˆ ( j1) g ˆ ( j) Cˆ ( j) g (I C)ˆ ( j) B g Bˆ ( j) Thus B IC C IB or The iterative equation can be expressed as ˆ ( j1) g Bˆ ( j) 1 c12 c13 c14 c 21 1 c 23 c 24 c 31 c 32 1 c 34 c 41 c 42 c 43 1 C =I 1 0 c13 c14 0 0 0 1 0 0 c 21 0 c 23 0 1 0 c 31 c 32 0 0 0 0 c 41 c 42 c 43 c 24 0 - 0 0 B c12 c 34 0 Introduce a lower (L) and upper (U) matrix B LU 0 c12 c13 c14 c 21 0 c 23 c 24 c 31 c 32 0 c 34 c 41 c 42 c 43 0 0 0 c 21 0 c 31 c 32 0 c 41 c 42 c 43 ˆ ( j1) g (L U)ˆ ( j) This is known as the Jacobi method Example Iterative equation for node 5 0 c12 c13 c14 0 c 23 c 24 0 c 34 0 T̂5 ( j1) g iii) T̂2 ( j) T̂4 ( j) T̂6 ( j) T8 ( j) 4 Error propagation and convergence 1) Error propagation known ̂ (O) ˆ (1) g Bˆ ( O ) ˆ ( 2 ) g Bˆ (1) g (1 B) B 2 ˆ ( O ) ˆ ( 3) g (1 B B 2 ) B 3 ˆ ( O ) ˆ ( N ) g (1 B B 2 B N 1 ) B N ˆ ( O ) Introduce computational error ( j) ( j) - (g Bˆ (j-1) ) ( - g) - Bˆ (j-1) Note (I B) g So g B B( ˆ ( j1) ) B ( j1) (j-1) B ( j 2 ) ( j 2 ) B ( j3) ( 2 ) B (1) ( 4 ) B (O) Back substitute to obtain ( j) B j (O) Indicating that errors are propagated in an identical manner To the propagation of . 2) Convergence Limit ˆ ( j) j r ( j) g Cˆ ( j) residual Convergence checks L2 Norm Limit R ( j) (r ( j) 2 1 / 2 ) R ( j) 0 (with machine accuracy) j Other tests Maximum (Local) Maximum (Global) ˆ ( j1) ˆ ( j) (ˆ ( j1) ˆ ( j) (ˆ or ( j1) ˆ ( j) ) 2 1/ 2 Will the iterative solution selected converge? Error propagation ( j) B j (O) To answer this question we look at the characteristics roots of the B matrix. B Latent root of “B” matrix vector such that the equality is satisfied Re-arranging (B ) O Non-trivial solution b11 b 21 B O b13 b1N b11 b 23 b 2N b 3N b12 b 31 b 32 b 33 b 34 Expand as a polynomial O 1 2 2 (1) N N N 0 The characteristic root are the (Eigenvalues) Maximum root ’s ( MAX ) is called the spectral radius of the matrix SR . The polynomial will converge to zero if: SR <1 Original expression (page 74 of class notes) 2 ( j1) j ˆ (O) ˆ ( j) g(1 B B B ) B ( I B) 1 if SR <1 B<1 i.e. B B(B) B 2 B 2 so general expression B P P so ˆ ( j) g(I B) 1 B j ˆ ( O ) Limit ˆ ( j) g(I B) 1 j C g (I B) g exact solution g(I - B) -1 so solution converges if Limit ˆ ( j) i.e. j c) i) Influence of search pattern Jacobi ˆ ( j1) g Bˆ ( j) g (L U) ˆ (j) You go through the complete grid pattern with ˆ ( j1) f (ˆ ( j) ) SR < 1 very slow convergence ii) Gauss Seidel Method ˆ The new values of ( j1) Lˆ ( j1) Uˆ ( j) g Where the equations are listed in the order of search. The L and the U depend of the search pattern. Rewrite expression (I L)ˆ ( j1) Uˆ ( j) g ˆ ( j1) (I L) 1 Uˆ ( j) (I L) 1 g Note standard equation So ˆ ( j1) g Bˆ ( j) (I L) 1 U is the “B” matrix for the Gauss Seidel method. i.e. the latent roots of (I – L)-1 U must be less than 1. Also the error propagation is j ( j) (I L) 1 U ( O) Example assume Dx = Dy 1 - 1 4 - 0 - 1 4 1 4 0 1 - - 1 4 0 0 1 4 0 - 1 4 0 1 4 1 0 0 0 0 1 - - 200 4 1 100 2 4 3 100 4 4 5 100 4 6 0 1 4 1 4 0 C IB I-L-U 0 1 4 0 0 0 0 0 0 0 0 0 0 1 4 0 1 4 0 0 0 1 4 0 0 1 4 0 0 0 0 0 0 0 0 0 1 4 0 1 4 0 1 4 0 0 0 0 1 4 0 1 4 0 0 0 0 0 0 1 4 0 0 0 0 1 4 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 L 1 4 0 U ˆ ( j1) Lˆ ( j1) Uˆ ( j) g Equations for Gauss Seidel Node 1 ˆ ( j) ˆ ( j) ( j1) ˆ 1 2 4 50 4 4 Node 5 ˆ ( j1) ˆ ( j1) ( j) ˆ ( j1) 2 4 6 0 5 4 4 4 Change the search pattern 1 , 4 , 5 2, 3 6 ˆ ( j) ˆ ( j) ˆ ( j1) 2 4 50 1 4 4 Node 1 ˆ ( j) ˆ ( j1) ˆ 6 ( j) ˆ 5 ( j1) 2 4 0 4 4 4 Node 5 iii) Extrapolated Liebmann method ˆ ( j1) Lˆ ( j1) Uˆ ( j) g ( j1) ( j) ( j1) ( j) ˆ ˆ ˆ ˆ Subtract L U g ( j) These terms represent the change in ˆ as we go from the jth iteration to the j1th iteration. Multiply that change by (over-under relaxation factor) 0 2 ˆ ( j1) ˆ ( j) Lˆ ( j1) U ( j) g ˆ ( j) ˆ ( j1) (1 )ˆ ( j) Lˆ ( j1) Uˆ ( j) g (I L)ˆ ( j1) (1 )Iˆ ( j) Uˆ ( j) g 1 ˆ ( j1) 1 L 1 I U ˆ ( j) (1 L) 1 g B Matrix SR B 1 Error ( j1) (1 l) 1[(1 )I U] ( j1) ( O) Note If 1 we have the Gauss Seidel method. Example Use original search pattern. 1, 2, 3, 4, 5, 6 (I L)ˆ ( j1) (1 )Iˆ ( j) Uˆ ( j) g 1 1 L ˆ ( j1) (1 ) 1 1 U ˆ ( j) g 1 1 Node 3 ˆ ( j) ˆ ( j1) ˆ 3 ( j1) 2 (1 )ˆ 3 ( j) 6 25 4 4 ( j1) ˆ ( j1) ˆ 5 ˆ 6 ( j1) 3 (1 )ˆ 6 ( j) 0 4 4 Node 6 iv) Other important characteristics of the “C” Matrix 1) Property “A” If Differential equation does not have mixed derivative terms. The region is subdivided using a rectangular grid pattern. And the nodes are labelled in an alternating fashion. Black Nodes White Nodes The matrix will have property “A” if WHITE f ( BLACK ) and BLACK f ( WHITE ) Consistent ordering If one has consistent ordering the values of the Variable field at iteration (j+1) is independent of Search pattern used. Simple test: Five point approximation of T Matrix (“C”) has property A. 2 1. Select a search pattern. 2. Move node by node through the grid following the selected search pattern. Look at the four surrounding nodes. If they are not already connected to the node, draw an arrow from it with the head of the arrow located at the surrounding node. 3. Move around a rectangle created by the grid lines. If you find two arrows going with you and two arrows going against you, the search pattern has consistent ordering. If three arrows are with you and one against (or 3 against and 1 with you) you do not have consistent ordering. If the “C” matrix is consistent and has property “A” a relationship can be found between eigenvalues of the “B” matrix for the Jacobi, Gauss-Seidel and the over-under relaxation method. Poisson Equation 2 f ( x , y) Square grid x y h known on all boundaries Spectral radius for Jacobi method SR Example 1 cos cos 2 P q P4 SR If 10 x 10 mesh q4 1 cos cos 0.707 2 4 4 P = 10 SR cos q = 10 0.95 10 For the Gauss Seidel method SR GS SR 2 Jacobi thus SR 4x4 GS (0.707) 2 0.499 and 10 x 10 SR GS (0.95) 2 0.90 Asympototic rate of convergence ( j1) B ( j) [A] ( j1) B j1 ( O ) BSR so ( j1) SR ( j) ( j1) and SR ( O ) For normal convergence Using equation [A] SR Larger SR Smaller Note SR ( j1) ( j) slower convergence faster convergence It is assumed that j is very large. Asympototic rate of convergence CR C R Ln ( SR ) Look at examples Jacobi CR 4x4 0.346 10 x 10 0.051 Gauss-Seidel 0.695 0.105 Gauss-Seidel method converges twice as fast as the Jacobi method. Note The smaller SR the large CR i.e. the faster the Convergence v) Optimum over-under relaxation factor It has been shown that for an interior node OPT 4 with the smallest root of 2 a 2 4 1 0 a cos where cos P q Example 4x4 a 2 cos 1.4142 4 (1.4142) 2 2 4 1 0 4 16 4(1.4142) 2 2(1.4142) 10 x 10 2 or 1.707 OPT 4(0.293) 1.17 0.293 a 2 cos 1.902 10 (1.902) 2 2 4 1 0 0.7236 or 0.3819 OPT 4 1.528 For large values of P and q 100 x 100 1 1 1 2 2 2 2 2 P q 1.937 1 2 What happens if you do not have only interior nodes or node with property “A” and consistent ordering? Use 2 1 1 SR 2 Jacobi Implementation run program with 1 (Gauss Seidel) Assume SR i.e. Estimate SR Jacobi SR GS 1 2 2 1 1 SR GS GS SR Limit N O ( j1) N O ( j) j N where ( j1) N O ( j) ˆ k ( j) ˆ k k 1 or ( j1) MAX ˆ k ( j) ˆ k or 22 N ˆ k ( j) ˆ k ( j1) k 1 1 number of iterations 60 50 40 30 20 10 0 1 1.2 1.4 1.6 1.8 relaxation factor Figure 1 Influence of relaxation factor on number iterations to converge