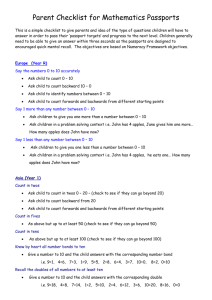

Dynamic Programming Example: Matrix Chain Multiplication

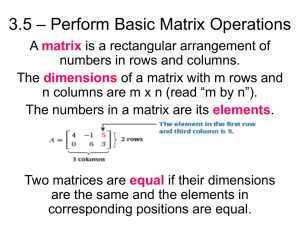

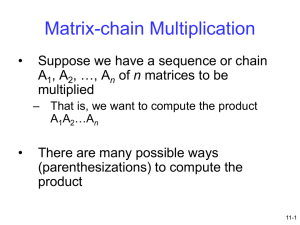

Problem: efficiently multiply a chain of N matrices, i.e. calculate, with the fewest scalar multiplications,

M = M1 M2 M3 … MN.

Each matrix Mk has dimension p_{k-1} x p_k. The dimensions are stored in array

P = < p0 p1 … pN>.

Math behind this problem: matrices can be multiplied only two at a time, and their order in the chain must be preserved (partly because the number of columns of the first matrix must equal the number of rows of the second matrix). In other words, if A and B are matrices, then in general AB ≠ BA. The number of scalar multiplications needed to compute the product of an a x b matrix A and a b x c matrix B equals a*b*c.

For example, M1M2M3 can be calculated as (M1M2)M3 or M1(M2M3). The order of multiplication, i.e. the placement of parentheses, determines the number of scalar multiplications.

Example: M = M1M2M3, P = <10 100 5 50>.

If we calculate M = (M1M2)M3, then the number of scalar multiplications is:

  10*100*5 = 5,000 to multiply K = M1M2. Dimension of K is 10x5.
+ 10*5*50 = 2,500 to multiply M = KM3. Dimension of M is 10x50.

Total: 7,500 scalar multiplications.

If we calculate M = M1(M2M3), then the number of scalar multiplications is:

  100*5*50 = 25,000 to multiply L = M2M3. Dimension of L is 100x50.
+ 10*100*50 = 50,000 to multiply M = M1L. Dimension of M is 10x50.

Total: 75,000 scalar multiplications.
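The arithmetic above can be checked with a few lines of Python. This is only a sketch; the helper name `multiply_cost` is made up for illustration and does not come from the textbook.

```python
def multiply_cost(a, b, c):
    """Scalar multiplications for an (a x b) matrix times a (b x c) matrix."""
    return a * b * c

# P = <10 100 5 50>: M1 is 10x100, M2 is 100x5, M3 is 5x50
p = [10, 100, 5, 50]

# (M1 M2) M3: first K = M1 M2 (10x5), then M = K M3
left_first = multiply_cost(p[0], p[1], p[2]) + multiply_cost(p[0], p[2], p[3])

# M1 (M2 M3): first L = M2 M3 (100x50), then M = M1 L
right_first = multiply_cost(p[1], p[2], p[3]) + multiply_cost(p[0], p[1], p[3])

print(left_first)   # 7500
print(right_first)  # 75000
```

Same three matrices, same result, but one order is ten times cheaper than the other.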

Therefore, the problem boils down to: given a product chain of N matrices, fully parenthesize the chain in order to minimize the number of scalar multiplications.

A solution: try every possible split of the chain (brute force), organized with dynamic programming, i.e. write and implement a dynamic programming formula to solve the problem.

A dynamic programming formula is always recursive. It can be implemented as:

1. plain recursive code (top down)

2. memoized code (i.e. recursive code with storage, top down)

3. iterative code (bottom up)

m[i,j] is the minimum number of scalar multiplications needed to multiply matrices M_i M_{i+1} M_{i+2} … M_j.

Each matrix M_k has dimension p_{k-1} x p_k. Dimensions of all matrices are stored in array P = < p0 p1 … pN>.

For i = 1, …, n and j = 1, …, n:

$$
m[i,j] =
\begin{cases}
0 & \text{if } i = j \\
\min\limits_{i \le k < j} \left( m[i,k] + m[k+1,j] + p_{i-1}\, p_k\, p_j \right) & \text{if } i < j
\end{cases}
$$
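As a check, the formula can be applied by hand to the earlier example P = <10 100 5 50> (so p_0 = 10, p_1 = 100, p_2 = 5, p_3 = 50). The worked lines below are a sketch of that calculation, not part of the textbook:

$$
\begin{aligned}
m[1,2] &= m[1,1] + m[2,2] + p_0\, p_1\, p_2 = 0 + 0 + 10 \cdot 100 \cdot 5 = 5000 \\
m[2,3] &= m[2,2] + m[3,3] + p_1\, p_2\, p_3 = 0 + 0 + 100 \cdot 5 \cdot 50 = 25000 \\
m[1,3] &= \min \begin{cases}
k=1: & m[1,1] + m[2,3] + p_0\, p_1\, p_3 = 0 + 25000 + 50000 = 75000 \\
k=2: & m[1,2] + m[3,3] + p_0\, p_2\, p_3 = 5000 + 0 + 2500 = 7500
\end{cases} \\
&= 7500 \quad (k = 2)
\end{aligned}
$$

The minimum is reached at k = 2, i.e. the split (M1M2)M3, which agrees with the 7,500 found in the example.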

Task: Write the recursive pseudocode which calculates m[1,n] and also records where to put the optimal parentheses.

//calculate m[i,j] for matrix chain P

RecursiveMatrixChain(P, i, j) {

}

Initial call: RecursiveMatrixChain(P,

)

//Iterative bottom-up implementation (textbook, p.336):

MATRIX-CHAIN-ORDER(P) {
    n = length(P) - 1
    for i = 1, n
        m[i,i] = 0
    for L = 2, n
        for i = 1, n - L + 1
            j = i + L - 1
            m[i,j] = ∞
            for k = i, j-1
                q = m[i,k] + m[k+1,j] + p[i-1]*p[k]*p[j]
                if q < m[i,j]
                    m[i,j] = q
                    s[i,j] = k
    return m and s
}

Initial call: ____________________
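For readers who want to run the bottom-up pseudocode, here is one possible Python translation. It is a sketch under a few assumptions: the names `matrix_chain_order` and `parenthesize` are chosen here for illustration, row and column 0 of the tables are left unused so that the indices match the 1-based pseudocode, and `parenthesize` is an added helper (not in the pseudocode above) that reads the split table s back into a parenthesized string.

```python
import math

def matrix_chain_order(p):
    """Bottom-up DP; matrix Mk has dimension p[k-1] x p[k] (k is 1-based)."""
    n = len(p) - 1
    # Index 0 is unused so that m[i][j] and s[i][j] match the pseudocode.
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):            # L in the pseudocode
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j] = q
                    s[i][j] = k
    return m, s

def parenthesize(s, i, j):
    """Illustrative helper: rebuild the parenthesization from split table s."""
    if i == j:
        return f"M{i}"
    k = s[i][j]
    return f"({parenthesize(s, i, k)}{parenthesize(s, k + 1, j)})"

p = [10, 100, 5, 50]
m, s = matrix_chain_order(p)
print(m[1][3])                # 7500
print(parenthesize(s, 1, 3))  # ((M1M2)M3)
```

The three nested loops make the running time grow as n^3, while the tables m and s take n^2 space.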

Order of filling m matrix for n=4 (i.e. m11 is filled first, then m22, then m33, then m44, then m12, etc.). Rows are i, columns are j:

        j=1  j=2  j=3  j=4
i=1      1    5    8   10
i=2           2    6    9
i=3                3    7
i=4                     4
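The fill order can also be generated by walking the same loops as MATRIX-CHAIN-ORDER and numbering each cell as it is assigned. The function name `fill_order` is an assumption made for this sketch.

```python
def fill_order(n):
    """Entry [i][j] is the step at which the bottom-up code fills m[i,j]."""
    order = [[None] * (n + 1) for _ in range(n + 1)]  # index 0 unused
    step = 0
    for i in range(1, n + 1):              # the diagonal m[i,i] comes first
        step += 1
        order[i][i] = step
    for length in range(2, n + 1):         # then chains of length 2, 3, ...
        for i in range(1, n - length + 2):
            j = i + length - 1
            step += 1
            order[i][j] = step
    return order

order = fill_order(4)
for i in range(1, 5):
    print(" ".join(f"{order[i][j] or '':>2}" for j in range(1, 5)))
```

Each diagonal of the table is finished before the next one starts, so every m[i,k] and m[k+1,j] that a cell needs is already available when the cell is filled.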

//Recursive Top Down implementation (textbook, p. 345):

RMC(P, i, j) {
    if i = j
        return 0
    m[i,j] = ∞
    for k = i, j-1
        q = RMC(P, i, k) + RMC(P, k+1, j) + p[i-1]*p[k]*p[j]
        if q < m[i,j]
            m[i,j] = q
            s[i,j] = k
    return m[i,j] and s
}

Initial call: ____________________
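A runnable Python version of the plain recursion might look like the sketch below. Two things here are assumptions rather than part of the textbook pseudocode: the function name `rmc`, and passing the split table s as a dictionary argument instead of returning it.

```python
import math

def rmc(p, i, j, s):
    """Plain top-down recursion for m[i,j]; s[(i, j)] records the best split k."""
    if i == j:
        return 0
    best = math.inf
    for k in range(i, j):
        q = rmc(p, i, k, s) + rmc(p, k + 1, j, s) + p[i - 1] * p[k] * p[j]
        if q < best:
            best = q
            s[(i, j)] = k
    return best

p = [10, 100, 5, 50]
s = {}
print(rmc(p, 1, len(p) - 1, s))  # 7500
print(s[(1, 3)])                 # 2, i.e. split as (M1M2)M3
```

Nothing is stored between calls, so the same subchain is recomputed every time it appears in a split.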

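The following matrix shows the order of calculating values in the m matrix for n=4. The first number in each cell is the order in which m[i,j] is first calculated. The numbers in parentheses (subscripts in the original figure) give the positions of that cell's calls in the sequence of all calls that end up on the stack, regardless of how long they have to wait for their offspring to return. Some of those calls return immediately, e.g. calls where i=j.

        j=1               j=2                j=3                 j=4
i=1     1 (2,13,19,24)    8 (12,23)          9 (18)              10 (1)
i=2                       2 (4,9,14,21,25)   6 (8,20)            7 (3)
i=3                                          3 (6,10,16,22,26)   5 (5,15)
i=4                                                              4 (7,11,17,27)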
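The call numbers can be reproduced mechanically by numbering every call as it starts. The sketch below ignores the costs and only follows the recursion pattern of RMC; the names `trace_calls` and `visit` are made up for this illustration.

```python
from collections import defaultdict

def trace_calls(n):
    """For each (i, j), list the positions of its calls in the overall call sequence."""
    calls = defaultdict(list)
    counter = 0

    def visit(i, j):
        nonlocal counter
        counter += 1
        calls[(i, j)].append(counter)
        for k in range(i, j):     # empty when i == j, so that call returns at once
            visit(i, k)
            visit(k + 1, j)

    visit(1, n)
    return calls, counter

calls, total = trace_calls(4)
print(total)          # 27
print(calls[(1, 1)])  # [2, 13, 19, 24]
print(calls[(3, 4)])  # [5, 15]
```

For n=4 the plain recursion makes 27 calls to fill only 10 distinct cells, which is why the trace is so easy to lose track of by hand.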

Q: i got really messed up in the recursive part too, although memoization wasn't a walk in the park either! all together i think it took me about 6 hours to complete the homework!

it wasn't so much the degree of difficulty as it was just trying to keep track of what you did and not messing up the numbers, i did that a couple of times and had to redo the entire trace all over again!

A: Yes, that's why good bookkeeping is *essential* here. And good bookkeeping depends on understanding the principle of the algorithm. Drawing the recursive calls like the tree in Fig.15.5 is the best.

It is easy when you realize what the pattern is: if there is an i-j piece to deal with, then split it in all possible ways (in order to find the best way).

So, 1-4 gets split into: 1-1, 2-4; 1-2, 3-4; 1-3, 4-4.
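The splitting pattern is short enough to write as a one-line function. This is a sketch; `splits` is a name invented here.

```python
def splits(i, j):
    """All ways to cut the piece i-j into a left piece i-k and a right piece (k+1)-j."""
    return [((i, k), (k + 1, j)) for k in range(i, j)]

print(splits(1, 4))
# [((1, 1), (2, 4)), ((1, 2), (3, 4)), ((1, 3), (4, 4))]
```

A piece i-j has j-i possible splits, and a single matrix (i = j) has none, which is where the recursion stops.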

This is very important to understand; this is the basis of dynamic programming. We will use it later for graph algorithms.

To continue the splitting: the next step is to realize that this is all recursive, so the algorithm goes all the way down the left splits, then comes back and does the right. That's why you must understand recursion very well.

So, to continue this example, I will write it in the order called (indentation shows which call made which):

1-1
2-4
    2-2
    3-4
        3-3
        4-4
    2-3
        2-2
        3-3
    4-4
etc., and then the same for the remaining splits: 1-2, 3-4; 1-3, 4-4.
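The listing shows the same pieces (2-2, 3-3, 4-4) being called again and again. Memoized code, option 2 in the list of implementations, stores each m[i,j] the first time it is computed so that repeats become lookups. The handout gives no pseudocode for it, so the following is only a sketch under stated assumptions: it uses Python's `functools.lru_cache` as the storage, the name `memoized_matrix_chain` is invented here, and the four-matrix dimension list is a hypothetical example (the earlier P extended by one made-up matrix of size 50x20).

```python
from functools import lru_cache

def memoized_matrix_chain(p):
    """Top-down recursion with storage; returns (m[1,n], subproblems evaluated)."""
    evaluated = 0

    @lru_cache(maxsize=None)
    def m(i, j):
        nonlocal evaluated
        evaluated += 1            # the body runs only when (i, j) is not stored yet
        if i == j:
            return 0
        return min(m(i, k) + m(k + 1, j) + p[i - 1] * p[k] * p[j]
                   for k in range(i, j))

    return m(1, len(p) - 1), evaluated

# Hypothetical chain of 4 matrices: 10x100, 100x5, 5x50, 50x20
cost, evaluated = memoized_matrix_chain([10, 100, 5, 50, 20])
print(cost)       # 11000
print(evaluated)  # 10
```

For n=4 the function body runs only 10 times, once per distinct piece i-j, instead of once for each of the 27 calls made by the plain recursion.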