math module B


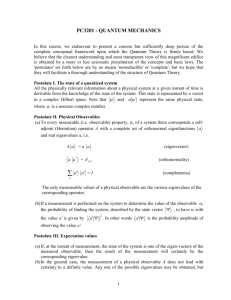

MATH MODULE B: OPERATORS AND OPERATOR ALGEBRA (See also Barrante, Chapter 10)

In mathematics, an operator is a symbolic form that instructs us to carry out a mathematical operation on a function. An operator is usually written with a caret (^) above the character. As an example, take the differentiation operator $\hat{d}/dx$. When $f(x)$ is a differentiable function of $x$,

$$\frac{\hat{d}}{dx} f(x) = \frac{df(x)}{dx} \tag{B.1}$$

Another example is the multiplication operator $\hat{g}$. When $\hat{g}$ operates on a function $f$, the result is the product of $g$ with $f$. For example, if $\hat{g} = 4x + x^2$ and $f = y^2$, then

$$\hat{g}f = 4xy^2 + x^2y^2 \tag{B.2}$$

When an operator operates on a function, the result is generally another function, different from the original one, as in the example above. As a further example, let $\hat{A} = \hat{x} + \dfrac{\hat{d}}{dx}$ and $f = a\sin(bx)$, where $a$ and $b$ are constants. Then

$$\hat{A}\big(a\sin(bx)\big) = \left(\hat{x} + \frac{\hat{d}}{dx}\right) a\sin(bx) = xa\sin(bx) + ab\cos(bx) \tag{B.3}$$

Here the total operator is the sum of two operators (multiplication and differentiation): each one acts on $f$ and the two results are added. The RHS of equation (B.3) is clearly a different function from the one we started with.

In some cases, however, the result of the operation is a function that is proportional to the original function. The function is then termed an EIGENFUNCTION of that operator, and the proportionality constant is called the EIGENVALUE of the operator. If

$$\hat{A}f = af \tag{B.4}$$

then $f$ is an eigenfunction of $\hat{A}$, $a$ (a constant) is its eigenvalue, and the equation is an eigenvalue equation.

The operators of interest in quantum mechanics are linear, defined by the following pair of identities:

$$\hat{P}(f + g) = \hat{P}f + \hat{P}g, \qquad \hat{P}(af) = a\hat{P}f$$

where $f$ and $g$ are functions and $a$ is a constant. You should convince yourself that the derivative operator is linear but that operators such as log and the trigonometric functions are not.

Operators are symbols, but they have an algebra of their own.
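The definitions above can be tried out numerically. The sketch below is a minimal illustration, assuming that an "operator" is modelled as a Python function that takes a function and returns a new function; the helper names `d_dx`, `mult`, `op_sum`, `eigen_test` and `log_op` are hypothetical, and $d/dx$ is approximated by a central difference rather than exact calculus. It checks equation (B.3), tests the eigenvalue condition (B.4) by looking for a constant ratio $(\hat{A}f)/f$, and compares the linear operator $d/dx$ with the non-linear log operator.

```python
import math

# Hypothetical helpers: an "operator" is a Python function mapping a function f(x)
# to a new function. d/dx is approximated by a central difference (an assumption
# standing in for exact differentiation).
H = 1e-5

def d_dx(f):
    """Derivative operator d/dx (numerical central difference)."""
    return lambda x: (f(x + H) - f(x - H)) / (2 * H)

def mult(g):
    """Multiplication operator: returns the operator f -> g*f."""
    return lambda f: (lambda x: g(x) * f(x))

def op_sum(A, B):
    """Sum of operators: (A + B)f = Af + Bf."""
    return lambda f: (lambda x: A(f)(x) + B(f)(x))

x_hat = mult(lambda x: x)      # multiplication by x
A_hat = op_sum(x_hat, d_dx)    # A = x + d/dx, as in (B.3)

# (B.3): A(a sin(bx)) = x a sin(bx) + a b cos(bx)
a, b, x0 = 2.0, 3.0, 0.7
f = lambda x: a * math.sin(b * x)
numeric = A_hat(f)(x0)
analytic = x0 * a * math.sin(b * x0) + a * b * math.cos(b * x0)

def eigen_test(A, f, xs, tol=1e-6):
    """(B.4): f is an eigenfunction if (Af)(x)/f(x) is the same constant at every x."""
    ratios = [A(f)(x) / f(x) for x in xs]
    return all(abs(r - ratios[0]) < tol for r in ratios), ratios[0]

k = 1.5
exp_is_eigen, exp_eigenvalue = eigen_test(d_dx, lambda x: math.exp(k * x), [0.1, 0.5, 1.0])
sin_is_eigen, _ = eigen_test(A_hat, f, [0.1, 0.5, 1.0])   # a sin(bx) is not an eigenfunction of A

# Linearity: d/dx satisfies P(f + g) = Pf + Pg; the log operator does not.
def log_op(f):
    return lambda x: math.log(f(x))

f1, f2, xt = math.exp, (lambda x: x * x + 1.0), 0.3
f1_plus_f2 = lambda x: f1(x) + f2(x)
gap_d = abs(d_dx(f1_plus_f2)(xt) - (d_dx(f1)(xt) + d_dx(f2)(xt)))
gap_log = abs(log_op(f1_plus_f2)(xt) - (log_op(f1)(xt) + log_op(f2)(xt)))
print(numeric, analytic, exp_is_eigen, exp_eigenvalue, sin_is_eigen, gap_d, gap_log)
```

Here $e^{kx}$ passes the eigenvalue test with eigenvalue close to $k = 1.5$, while $a\sin(bx)$ fails it for $\hat{A} = \hat{x} + d/dx$, matching the remark that (B.3) produces a different function.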
We have already seen this in arriving at equation (B.3), where we used a sum of operators. In general,

$$(\hat{A} + \hat{B})f = \hat{A}f + \hat{B}f \tag{B.5}$$

The product of two operators is defined as their successive application to the function, with the operator on the right applied first. Thus if

$$\hat{C} = \hat{A}\hat{B} \tag{B.6}$$

then

$$\hat{C}f = \hat{A}(\hat{B}f) \tag{B.7}$$

i.e. the result of operating with $\hat{B}$ is then operated on by $\hat{A}$, and the overall result is that of operating with the product operator $\hat{C} = \hat{A}\hat{B}$.

Equations like (B.6) that relate one operator to others are called operator equations. The two sides are equal only in the sense that, when both are applied to some function, the two results are the same. Some operator equations are valid only for particular functions.

The difference of two operators is given by

$$\hat{A} - \hat{B} = \hat{A} + (-\hat{1})\hat{B} \tag{B.8}$$

where $-\hat{1}$ is the operator for multiplying by $-1$.

Multiplication of operators

Multiplication of operators is associative, viz.

$$\hat{A}(\hat{B}\hat{C}) = (\hat{A}\hat{B})\hat{C} \tag{B.9}$$

and it is distributive, viz.

$$\hat{A}(\hat{B} + \hat{C}) = \hat{A}\hat{B} + \hat{A}\hat{C} \tag{B.10}$$

It can be commutative, viz.

$$\hat{A}\hat{B} = \hat{B}\hat{A} \tag{B.11}$$

but it is not always so, particularly in some cases of fundamental interest in quantum chemistry. Remember that the operator written on the right is always applied first. If $\hat{A}\hat{B} = \hat{B}\hat{A}$, the operators commute; if not, they do not commute. The commutator of $\hat{A}$ and $\hat{B}$ is denoted by $[\hat{A}, \hat{B}]$ and is defined by

$$[\hat{A}, \hat{B}] = \hat{A}\hat{B} - \hat{B}\hat{A} \tag{B.12}$$

When $[\hat{A}, \hat{B}] = 0$, the operators commute.

Some general observations about commutation: an operator involving multiplication by $x$, or by a function of $x$, will usually not commute with one involving the first derivative with respect to $x$. A pair of multiplication operators will commute. An operator that multiplies by a constant will commute with all other (linear) operators.

Since, from above, $\hat{C} = \hat{A}\hat{B}$, if $\hat{A}$ and $\hat{B}$ are identical then $\hat{C} = \hat{A}\hat{A} = \hat{A}^2$, which means that the operator is applied twice in succession.
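The product rule (B.7), the commutator (B.12) and the general observations can be checked with a short numerical sketch. As before, this assumes operators are modelled as Python functions acting on functions; `d_dx`, `x_hat`, `x2_hat`, `product`, `commutator` and `power` are hypothetical helper names, and $d/dx$ is a central-difference approximation. Working by hand, $[\hat{x}, d/dx]f = x f' - (xf)' = -f$, so $\hat{x}$ and $d/dx$ do not commute and their commutator is the operator $-\hat{1}$; the code confirms this for $f = \sin x$.

```python
import math

# Hypothetical helpers: operators as Python functions acting on functions, with d/dx
# approximated by a central difference (an assumption, not exact calculus).
H = 1e-4

def d_dx(f):
    return lambda x: (f(x + H) - f(x - H)) / (2 * H)

def x_hat(f):
    """Multiplication by x."""
    return lambda x: x * f(x)

def x2_hat(f):
    """Multiplication by x**2."""
    return lambda x: x * x * f(x)

def product(A, B):
    """Operator product AB (B.6, B.7): B is applied first, then A."""
    return lambda f: A(B(f))

def commutator(A, B):
    """[A, B]f = ABf - BAf (B.12)."""
    AB, BA = product(A, B), product(B, A)
    return lambda f: (lambda x: AB(f)(x) - BA(f)(x))

def power(A, m):
    """A^m: apply A to the function m times in succession."""
    def apply(f):
        for _ in range(m):
            f = A(f)
        return f
    return apply

x0 = 1.2
# x(d/dx) and (d/dx)x give different results: x cos(x) versus sin(x) + x cos(x).
xD = product(x_hat, d_dx)(math.sin)(x0)
Dx = product(d_dx, x_hat)(math.sin)(x0)
# [x, d/dx] sin = -sin, i.e. [x, d/dx] is multiplication by -1.
comm_x_d = commutator(x_hat, d_dx)(math.sin)(x0)
# Two multiplication operators commute.
comm_x_x2 = commutator(x_hat, x2_hat)(math.sin)(x0)
# (d/dx)^2 sin = -sin, an example of a power of an operator.
d2_sin = power(d_dx, 2)(math.sin)(x0)
print(xD, Dx, comm_x_d, comm_x_x2, d2_sin)
```

The order of application matters for `xD` and `Dx`, while the two multiplication operators give a commutator of (numerically) zero, in line with the observations above.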
The extension of this is $\hat{A}^m = \hat{A}\hat{A}\hat{A}\cdots\hat{A}$ for $m$ repeats.

Operator division is not defined, but the inverse operation is. Thus $\hat{B}^{-1}$ instructs us to undo what $\hat{B}$ does, hence

$$\hat{A}^{-1}\hat{A} = \hat{1} = \hat{E} \tag{B.13}$$

where $\hat{1}$ is the operator for multiplying by unity. It is usually given the symbol $\hat{E}$ and is called the identity operator. The inverse of a multiplication operator is the operator for multiplying by the reciprocal of the original entity.

Operators are of Major Significance in Quantum Mechanics

One of the postulates of the theory is that all observables (the properties of a system that can be measured: position, momentum, energy, dipole moment, etc.) are represented by operators. The eigenvalues of the operators are the possible values of the observables that result from actual measurements. We shall see later that commuting operators can have a set of eigenfunctions in common, in which case the observables represented by the two commuting operators can simultaneously have precisely determined values. Thus

$$\hat{A}\psi = a\psi \tag{B.14}$$

where $a$ is the eigenvalue for the observable corresponding to the operator $\hat{A}$, and

$$\hat{B}\psi = b\psi \tag{B.15}$$

where $b$ is the eigenvalue for the observable corresponding to the operator $\hat{B}$. Thus, when $[\hat{A}, \hat{B}] = \hat{A}\hat{B} - \hat{B}\hat{A} = 0$, the observables corresponding to $a$ and $b$ can be precisely and simultaneously determined (more on this later). The converse is also true: operators that do not commute represent variables that cannot be specified simultaneously with arbitrary precision. This leads to the concept of the Uncertainty Principle, which is one of the cornerstones of quantum theory. We shall have more to say about this later in the course.

Hermitian Operators

Any measurement of an observable yields a real number (which may be zero, positive, or negative, but not complex). Thus, if operators are to represent real-world observables, they must have real eigenvalues. This is true for a class of operators known as Hermitian.
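Two ideas from this passage can be illustrated in a few lines: the inverse of a multiplication operator (B.13), and commuting operators sharing eigenfunctions (B.14, B.15). The sketch below is only an analogy, assuming that small real symmetric matrices stand in for Hermitian operators acting on a two-function basis; this matrix picture is not part of the text above, and all helper names (`mult`, `matmul`, `commutator`, `eigenvalue_of`, `eigvals_sym2`) are hypothetical. It shows that two commuting matrices share eigenvectors, that a non-commuting one does not share them, and that a real symmetric $2\times 2$ matrix always has real eigenvalues.

```python
import math

# Part 1 (B.13): the inverse of a multiplication operator multiplies by the reciprocal.
def mult(g):
    return lambda f: (lambda x: g(x) * f(x))

g = lambda x: x * x + 1.0              # never zero, so 1/g exists everywhere
A = mult(g)
A_inv = mult(lambda x: 1.0 / g(x))
identity_gaps = [abs(A_inv(A(math.cos))(x) - math.cos(x)) for x in (0.0, 0.5, 2.0)]

# Part 2 (assumption, for illustration only): 2x2 real symmetric matrices stand in
# for Hermitian operators acting on a two-function basis.
def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def commutator(P, Q):
    PQ, QP = matmul(P, Q), matmul(Q, P)
    return [[PQ[i][j] - QP[i][j] for j in range(2)] for i in range(2)]

def eigenvalue_of(P, v, tol=1e-12):
    """Return lam if P v = lam v (within tol), otherwise None."""
    w = [P[i][0] * v[0] + P[i][1] * v[1] for i in range(2)]
    lam = w[0] / v[0]
    return lam if all(abs(w[i] - lam * v[i]) < tol for i in range(2)) else None

def eigvals_sym2(P):
    """Eigenvalues of a real symmetric 2x2 matrix. The square-root argument is a
    sum of squares, so both eigenvalues are real."""
    a, b, d = P[0][0], P[0][1], P[1][1]
    half_gap = math.sqrt(((a - d) / 2) ** 2 + b ** 2)
    return ((a + d) / 2 + half_gap, (a + d) / 2 - half_gap)

A_mat = [[2.0, 1.0], [1.0, 2.0]]
B_mat = [[0.0, 1.0], [1.0, 0.0]]
C_mat = [[1.0, 0.0], [0.0, -1.0]]
s = 1 / math.sqrt(2)
v_plus, v_minus = [s, s], [s, -s]

comm_AB = commutator(A_mat, B_mat)   # zero matrix: A and B commute
comm_AC = commutator(A_mat, C_mat)   # nonzero: A and C do not commute
# (eigenvalue under A, eigenvalue under B) for each shared eigenvector
shared = [(eigenvalue_of(A_mat, v), eigenvalue_of(B_mat, v)) for v in (v_plus, v_minus)]
C_on_v_plus = eigenvalue_of(C_mat, v_plus)   # None: v_plus is not an eigenvector of C
print(identity_gaps, comm_AB, comm_AC, shared, C_on_v_plus, eigvals_sym2(A_mat))
```

Both `v_plus` and `v_minus` are simultaneous eigenvectors of the commuting pair (eigenvalues 3 and 1 under `A_mat`, 1 and -1 under `B_mat`), which is the matrix analogue of a common eigenfunction $\psi$ in (B.14) and (B.15).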
For an operator $\hat{A}$ to be Hermitian, it must satisfy the relation

$$\int u^* \hat{A} v \, d\tau = \int v \,(\hat{A}u)^* \, d\tau \tag{B.16}$$

where the integrals are taken over all space ($d\tau$) and the asterisk denotes the complex conjugate (Barrante, Chapter 1). You can find more detail on the property of Hermiticity in Barrante, page 141.

Complete Set of Functions

In this context, a complete set of functions is the set of all the eigenfunctions of a particular (Hermitian) operator. Any general function can be expressed in terms of a complete set of functions. Thus if

$$\hat{\Omega} f_n = \omega_n f_n \tag{B.17}$$

where $\hat{\Omega}$ is an operator and $\{f_n\}$ is the complete set, then a general function $g$ can be written as

$$g = \sum_n c_n f_n \tag{B.18}$$

i.e. $g$ can be expressed as a linear combination of the complete set of functions. To exemplify this, consider a straight line, $g = ax$, which over a limited interval can be recreated by superimposing an infinite number of sine functions, which are eigenfunctions of the second-derivative operator, or by superimposing an infinite series of exponentials, which are eigenfunctions of the first-derivative operator. For example,

$$g = ax = \sum_{n=1}^{\infty} c_n \sin(nx), \qquad n = 1, 2, 3, \ldots$$

Using this idea, we can find the result of applying an operator $\hat{\Omega}$ to a function $g$ that is not one of its eigenfunctions. We can write the algebraic sequence

$$\hat{\Omega}g = \hat{\Omega}\sum_n c_n f_n = \sum_n c_n \hat{\Omega} f_n = \sum_n c_n \omega_n f_n$$

Thus the result of operating on $g$ with $\hat{\Omega}$ is a linear combination of the eigenfunctions of the complete set, each weighted by its eigenvalue.

An even more interesting result emerges if the functions $f_n$ have degenerate eigenvalues, viz. if

$$\hat{\Omega} f_n = \omega f_n, \qquad n = 1, 2, 3, \ldots, k$$

Then, for a function $g$ built from these $k$ degenerate functions only, we can write the sequence

$$\hat{\Omega}g = \hat{\Omega}\sum_{n=1}^{k} c_n f_n = \sum_{n=1}^{k} c_n \hat{\Omega} f_n = \omega \sum_{n=1}^{k} c_n f_n = \omega g$$

and in this case $g$ becomes an eigenfunction of $\hat{\Omega}$.
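The Hermiticity condition (B.16), the expansion (B.18) and the degenerate case can each be checked numerically. This is a sketch under stated assumptions, not a general method. Part 1 assumes $\hat{A} = -i\,d/dx$ (a one-dimensional momentum-like operator with $\hbar$ omitted), two hand-picked test functions `u` and `v` that vanish at large $|x|$, and a midpoint sum on $[-10, 10]$ standing in for the integral over all space. Part 2 uses the standard Fourier sine coefficients of $x$ on $-\pi < x < \pi$, $c_n = 2a(-1)^{n+1}/n$, for the example $g = ax$. Part 3 applies $d^2/dx^2$ (eigenvalues $-n^2$ for $\sin(nx)$) through a finite expansion and checks that a combination of the degenerate pair $\sin(kx)$, $\cos(kx)$ is itself an eigenfunction. Helper names such as `d2` and `g_partial` are hypothetical.

```python
import cmath
import math

# Part 1: numerical check of the Hermitian condition (B.16) for A = -i d/dx.
# Test functions (assumptions) and their hand-written analytic derivatives:
def u(x):
    return cmath.exp(-x * x) * (1 + 0.5j * x)

def du(x):
    return cmath.exp(-x * x) * (-2 * x * (1 + 0.5j * x) + 0.5j)

def v(x):
    return x * cmath.exp(-x * x / 2)

def dv(x):
    return cmath.exp(-x * x / 2) * (1 - x * x)

N, L = 20000, 10.0
dx = 2 * L / N
xs = [-L + (i + 0.5) * dx for i in range(N)]   # midpoint grid on [-L, L]
lhs = sum(u(x).conjugate() * (-1j * dv(x)) for x in xs) * dx   # integral of u* (A v)
rhs = sum(v(x) * (-1j * du(x)).conjugate() for x in xs) * dx   # integral of v (A u)*

# Part 2: g = a x as a sum of sin(n x) on -pi < x < pi, c_n = 2a(-1)^(n+1)/n.
a = 1.5

def c(n):
    return 2 * a * (-1) ** (n + 1) / n

def g_partial(x, n_terms):
    return sum(c(n) * math.sin(n * x) for n in range(1, n_terms + 1))

err_2000 = abs(g_partial(1.0, 2000) - a * 1.0)   # slow (about 1/N) convergence

# Part 3: d^2/dx^2 applied through the expansion, then the degenerate case.
H = 1e-4

def d2(f):
    """Second-derivative operator (numerical central difference)."""
    return lambda x: (f(x + H) - 2 * f(x) + f(x - H)) / H**2

coeffs = {1: 3.0, 2: 2.0}                       # g = 3 sin(x) + 2 sin(2x)
g = lambda x: sum(cn * math.sin(n * x) for n, cn in coeffs.items())
via_expansion = lambda x: sum(cn * (-n**2) * math.sin(n * x) for n, cn in coeffs.items())
err_expansion = abs(d2(g)(0.8) - via_expansion(0.8))

k = 2.0                                          # sin(kx), cos(kx) share eigenvalue -k^2
h = lambda x: 0.4 * math.sin(k * x) + 1.7 * math.cos(k * x)
ratios = [d2(h)(x) / h(x) for x in (0.1, 0.3, 0.6)]
print(lhs, rhs, err_2000, err_expansion, ratios)
```

The two sides of (B.16) agree to rounding error, and the ratio $(d^2h/dx^2)/h$ is the same constant, $-k^2 = -4$, at every sampled point, which is what makes $h$ an eigenfunction even though it mixes two different functions.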