Statistical Model

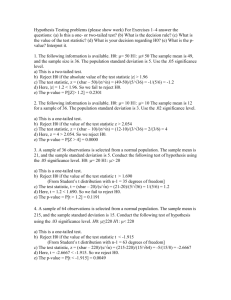

Tools of Inference

3 major tools of inference:
- point estimate (+ s.e.)
- confidence interval (CI), since a point estimate alone may not be enough
- hypothesis testing

In hypothesis testing we don't just want an estimate of the parameter; we suspect it has a particular value and want to test that. Ex: the accepted average life span is 75 years, but scientists figure medicine and quality of life have improved, so perhaps it is 85 years now. They test this hypothesis by taking a random sample and seeing whether the data support it. We are testing a claim about the population; the claim should be made before collecting the data.

New objective: we don't just want to estimate parameters, but want to decide which of 2 contradictory hypotheses about the parameter is true.

A statistical hypothesis is a claim about the value of a parameter of the underlying probability distribution.

Examples
1. Claim about the mean running time of a program, e.g. $\mu = 0.75$
2. Claim $p < 0.1$, where $p$ is the proportion of defective items in a manufacturing facility
3. Claim 2 programs have the same mean running time, i.e. $\mu_1 = \mu_2$
4. Claim the data come from a particular distribution

In hypothesis testing there are two contradictory hypotheses being considered:
- Null hypothesis = assumed true, the current stance, without changing anything
- Alternative hypothesis = what we are trying to prove

e.g. $\mu = 0.75$ vs $\mu \neq 0.75$, $p < 0.1$ vs $p \geq 0.1$, etc.

The objective is to decide which hypothesis is correct (supported by the data).

The logic is analogous to proof by contradiction. Assume a hypothesis is true (the null hypothesis, H0). It usually represents the current situation that we want to disprove, "no change". We look for evidence against the null hypothesis in favour of the alternative hypothesis, Ha or H1. The conclusion is to either accept or reject H0, so we set up the hypotheses so that Ha is what we want to show. The evidence is given by the data: we have evidence against H0 if the probability of observing the data is small under the assumption that H0 is true (i.e. P(data | H0) is small).
Example: Manufacturing

Suppose we want to show that a new manufacturing process reduces the rate of defectives, which was 0.1. We test H0: $p = 0.1$ against Ha: $p < 0.1$, where $p$ is the rate of defectives using the new process.

Take a random sample of size $n = 200$ of products produced with the new process. Let $X$ be the number of defective items in the sample.

If H0 is true, then $X \sim \text{Bin}(200, 0.1)$ and $E[X] = 20$ (expected # of defectives). If Ha is true, we would expect fewer than 20 defective items in the sample.

Let $x$ be the observed value of $X$; we'd reject H0 if $x$ is substantially less than 20. Say reject H0 if $x \le 15$ (we will look at a more formal definition of the cut-off later).

Components of this test procedure:
1. Competing hypotheses, H0 and Ha
2. A Test Statistic: a function of the observed data (the manufacturing example has test statistic $x$)
3. A Rejection Region: the set of all values of the test statistic for which H0 will be rejected (in the example, $x \le 15$)
4. Decision/Conclusion

How to choose a rejection region

To determine the rejection region, we need to consider the errors we might make.
1. Type I Error: reject H0 when it is true. In the example, it is possible that we observe 13 defective items even if $p = 0.1$, in which case H0 would be erroneously rejected.
2. Type II Error: accept H0 when it is false. In the example, it is possible that we observe 18 defective items even if $p < 0.1$, in which case H0 would be erroneously accepted.

We want to minimize the probability of these two errors. Type I error is more crucial than type II, since it takes a lot of time/energy/money to change the current state, whereas if we wrongly don't change anything, we are no worse off than before.
Notation:
- $\alpha$ = P(type I error)
- $\beta$ = P(type II error); $\beta$ depends on the particular value of the parameter in Ha
- $1 - \beta$ is the power of the test = P(H0 rejected when it is false, the ideal situation)

A powerful test has a large probability of rejecting H0 when we should.

090305 R

| | H0 is true | H0 is false (Ha is true) |
|---|---|---|
| Reject H0 | Type I Error | ideal situation |
| FTR H0 | no change | Type II Error |

FTR = Fail to Reject

Problem: if we adjust the rejection region so that $\alpha$ is small, $\beta$ is going to increase.

Solution: the Neyman-Pearson lemma. Fix $\alpha$ and choose a test (rejection region) with tolerable $\beta$.

Back to Manufacturing Example

H0: $p = 0.1$, Ha: $p < 0.1$ ("one-sided alternative" or "left-sided alternative"), $n = 200$

Test statistic: $X \sim \text{Bin}(200, p)$ = # of defective items in the sample; $x$ is the observed value of the test statistic.

Rejection region: $x \le 15$ (what we chose arbitrarily before)

Calculate $\alpha$ and $\beta$:

$$\alpha = P(\text{type I error}) = P(\text{reject H0 when H0 is true}) = P(X \le 15 \text{ when } X \sim \text{Bin}(200, 0.1)) = 0.143$$

In R this is `pbinom(15,200,0.1)`. So when H0 is true, 14.3% of samples of size 200 will lead to a type I error.

We can decrease $\alpha$ by decreasing the size of the rejection region. Suppose we wish to have $\alpha = 0.05$; the rejection region can be found by solving the equation

$$P(X \le k \text{ when } X \sim \text{Bin}(200, 0.1)) = 0.05$$

which gives k = `qbinom(0.05,200,0.1)` = 13. Because $X$ is discrete, the equation generally has no exact solution; `qbinom` returns the smallest $k$ with $P(X \le k) \ge 0.05$, so the level attained with $k = 13$ is at least 0.05 (use $k - 1$ if the level must not exceed 0.05).

To calculate $\beta$ we need to fix a value for $p$ which is less than 0.1, say $p = 0.05$:

$$\beta(0.05) = P(\text{type II error when } p = 0.05) = P(\text{not reject H0 when } p = 0.05) = P(X > 15 \text{ when } X \sim \text{Bin}(200, 0.05)) = 0.044$$

Decreasing the size of the rejection region increases $\beta$. Using a smaller value for $p$ will make type II errors less likely. In general, when $p$ is chosen further away from the null hypothesis' value, there is more power because it is less likely that the two hypotheses are mixed up by the data.

When $p = 0.05$, power = $1 - \beta = 0.956$ (lots of power).

Neyman-Pearson Lemma

Fix $\alpha$ at the largest value that can be tolerated and choose the rejection region accordingly.
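The binomial calculations for the manufacturing example can also be checked outside R. The following is a minimal sketch in Python using only the standard library; the helper names `binom_cdf` and `binom_quantile` are illustrative stand-ins for R's `pbinom` and `qbinom`, not functions from any library.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Bin(n, p); plays the role of R's pbinom(k, n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def binom_quantile(q: float, n: int, p: float) -> int:
    """Smallest k with P(X <= k) >= q; plays the role of R's qbinom(q, n, p)."""
    total = 0.0
    for k in range(n + 1):
        total += comb(n, k) * p**k * (1 - p) ** (n - k)
        if total >= q:
            return k
    return n


n = 200

# Type I error probability for the rejection region x <= 15 under H0: p = 0.1
alpha = binom_cdf(15, n, 0.1)

# Cut-off for a target level of 0.05, and the levels actually attained
k = binom_quantile(0.05, n, 0.1)
level_at_k = binom_cdf(k, n, 0.1)
level_below_k = binom_cdf(k - 1, n, 0.1)

# Type II error probability and power for region x <= 15 when the true p is 0.05
beta = 1 - binom_cdf(15, n, 0.05)
power = 1 - beta

print(f"alpha = {alpha:.3f}")
print(f"k = {k}, P(X <= k) = {level_at_k:.4f}, P(X <= k-1) = {level_below_k:.4f}")
print(f"beta = {beta:.3f}, power = {power:.3f}")
```

Printing the attained levels at `k` and `k - 1` makes the effect of discreteness visible: no cut-off gives a level of exactly 0.05.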
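Typical values of $\alpha$: 0.01, 0.05, 0.1. This is called the "significance level" of the test.

Tests for H0: $\mu = \mu_0$

Ha: three possibilities:
- $\mu < \mu_0$: one-sided alternative
- $\mu > \mu_0$: one-sided alternative
- $\mu \neq \mu_0$: two-sided alternative

090310 T

Significance Tests

Tests for the Mean

Assume $X_1, \dots, X_n \sim N(\mu, \sigma^2)$ with $\sigma$ known. If H0 is true, then $\bar{X} \sim N(\mu_0, \sigma^2/n)$ and

$$Z = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} \sim N(0, 1)$$

Take $\alpha = 0.05$, $\mu_0 = 10$.
- Ha: $\mu < \mu_0$. If $\bar{X} > 10$, don't reject H0; if $\bar{X} \ll 10$ we do want to reject. An observed score of $Z = -1.695$ => reject, since $-1.695 < -z_{0.05} = -1.645$.
- Ha: $\mu > \mu_0$. An observed score of $Z = 1.695$ => reject, since $1.695 > 1.645$.
- Ha: $\mu \neq \mu_0$. Reject only if $|Z| \ge 1.96$ for the two-sided test.

Example: Laptop Batteries

The stated operating temperature is 40°C. A sample of 9 batteries gives $\bar{X} = 41.08$. Assume $\sigma = 1.5$. Does this contradict the claim?

$$Z_{obs} = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} = \frac{41.08 - 40}{1.5/\sqrt{9}} = 2.16$$

If $\alpha = 0.05$, then we reject H0 since $2.16 > 1.96 = z_{0.025}$ (i.e. a value this extreme is less than 5% likely under H0).
If $\alpha = 0.01$, then we don't reject H0 since $2.16 < 2.576 = z_{0.005}$ (i.e. a value this extreme is more than 1% likely under H0).

P-value

Assuming H0 is true, the p-value is the probability of observing values of the test statistic "as or more extreme" than the one observed.

Laptop example: $Z_{obs} = 2.16$. If H0 is true, this is from the N(0,1) distribution.

$$\text{p-value} = P(Z \ge 2.16) + P(Z \le -2.16) = 2 \times 0.0154 = 0.03$$

Smaller p-value => more evidence against H0:
- p-value > 0.1 => no evidence
- 0.05 < p-value < 0.1 => weak evidence
- 0.01 < p-value < 0.05 => moderate evidence
- p-value < 0.01 => strong evidence

Power

Power = $1 - \beta$ = 1 - P(type II error) = P(reject H0 when it is false)

First consider the upper-tailed test for the mean with iid, normally distributed data, $\sigma$ known, significance level $\alpha$: H0: $\mu = \mu_0$ against Ha: $\mu > \mu_0$. Reject if $Z_{obs} \ge z_\alpha$, i.e.

$$\frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} \ge z_\alpha \quad\Longleftrightarrow\quad \bar{X} \ge \mu_0 + z_\alpha \frac{\sigma}{\sqrt{n}}$$

Let $\mu'$ be the true mean of the population. Then

$$\text{Power}(\mu') = P(\text{H0 is rejected when the mean is } \mu') = P\left(\bar{X} \ge \mu_0 + z_\alpha \frac{\sigma}{\sqrt{n}}\right), \quad \text{where } \bar{X} \sim N(\mu', \sigma^2/n) \text{ by Ha}$$

$$= P\left(Z \ge \frac{\mu_0 - \mu'}{\sigma/\sqrt{n}} + z_\alpha\right) = 1 - \Phi\left(z_\alpha + \frac{\mu_0 - \mu'}{\sigma/\sqrt{n}}\right)$$

where $\Phi$ is the standard normal cdf.

How to increase power?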
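- increase $\alpha$, which enlarges the rejection region
- increase $n$
- decrease $\sigma$
- evaluate at values of $\mu'$ further away from $\mu_0$

Exercise: Show that the power is

$$\text{Power}(\mu') = \Phi\left(-z_\alpha + \frac{\mu_0 - \mu'}{\sigma/\sqrt{n}}\right) \quad \text{when Ha: } \mu < \mu_0$$

and

$$\text{Power}(\mu') = 1 - \Phi\left(z_{\alpha/2} + \frac{\mu_0 - \mu'}{\sigma/\sqrt{n}}\right) + \Phi\left(-z_{\alpha/2} + \frac{\mu_0 - \mu'}{\sigma/\sqrt{n}}\right) \quad \text{when Ha: } \mu \neq \mu_0$$

Laptop Example

H0: $\mu = 40$, Ha: $\mu \neq 40$, $\sigma = 1.5$, $n = 9$ (we don't need to know $\bar{X}$).

What is the power when $\alpha = 0.01$, $\mu' = 41$? Here $z_{0.005} = 2.576$, so

$$\text{Power}(41) = 1 - \Phi\left(2.576 + \frac{40 - 41}{1.5/\sqrt{9}}\right) + \Phi\left(-2.576 + \frac{40 - 41}{1.5/\sqrt{9}}\right) = 1 - \Phi(0.576) + \Phi(-4.576) \approx 0.28$$

i.e. about 0.3.

What if $\sigma$ is not known?

Assume the data are realizations from $X_1, \dots, X_n$, iid $N(\mu, \sigma^2)$. We want to test H0: $\mu = \mu_0$.

Estimate $\sigma$ by the sample standard deviation

$$s = \sqrt{\frac{1}{n-1} \sum_{i=1}^{n} (X_i - \bar{X})^2}$$

Under H0, the test statistic

$$t_{obs} = \frac{\bar{X} - \mu_0}{s/\sqrt{n}}$$

is an observation from the t-distribution with $n - 1$ degrees of freedom.

By the CLT, this works well for large $n$ even if $X_1, \dots, X_n$ are not normally distributed (works well => the p-value is approximately accurate).

090312 R

Review

H0: $\mu = \mu_0$. Assume the data are normally distributed (if not, by the CLT $\bar{X}$ is still approximately normal, so the test still works well). The test statistic is $t_{obs} = \dfrac{\bar{X} - \mu_0}{s/\sqrt{n}}$, where $t_{obs} \sim t(n-1)$ if H0 is correct. Calculate the p-value from the t distribution with $n - 1$ degrees of freedom.

Example

In 1996, the US increased the speed limit on interstate highways. Data: percent change in traffic fatalities in the year 1996 in 32 states on these highways.

Let $\mu$ be the mean of the percent change in fatalities. Test H0: $\mu = 0$ vs Ha: $\mu \neq 0$.

Observed $\bar{X} = 13.75$, $n = 32$, $s = 21.33$. The test statistic is

$$t_{obs} = \frac{\bar{X} - \mu_0}{s/\sqrt{n}} = \frac{13.75 - 0}{21.33/\sqrt{32}} = 3.65, \quad t_{obs} \sim t_{31} \text{ under H0}$$

p-value = $P(|T| \ge 3.65)$ with $T \sim t_{31}$:
- from tables: p-value < 2 × 0.0005 = 0.001
- from R: `1 - pt(3.65,31) + pt(-3.65,31)` gives 0.0009; alternatively run `t.test(fatalities.change.increase)` on the data

[Figure: t density with the two tails beyond -3.65 and 3.65 shaded; the shaded area is the p-value]

Conclusion: Strong evidence that the mean of the change in traffic fatalities was not 0.

Test for $\mu$ in any population when n is large

We wish to test H0: $\mu = \mu_0$. The data $X_1, \dots, X_n$ are a random sample from some distribution with mean $\mu$. When $n$ is large we use the Z statistic

$$Z_{obs} = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}}$$

(with $\sigma$ replaced by $s$ when $\sigma$ is unknown), since for large $n$ the t distribution approaches the standard normal.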
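The Z-test calculations in these notes (test statistic, two-sided p-value, and power) follow one pattern, so they are easy to script. The sketch below is in Python rather than R and uses the standard library's `statistics.NormalDist` for $\Phi$ and its inverse. The function names are illustrative assumptions, and the inputs are the laptop battery numbers from earlier in the notes.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # N(0, 1); .cdf is Phi, .inv_cdf gives quantiles


def z_statistic(xbar: float, mu0: float, sigma: float, n: int) -> float:
    """Z = (xbar - mu0) / (sigma / sqrt(n)), with sigma assumed known."""
    return (xbar - mu0) / (sigma / sqrt(n))


def two_sided_p_value(z_obs: float) -> float:
    """P(Z >= |z_obs|) + P(Z <= -|z_obs|) under H0."""
    return 2 * (1 - STD_NORMAL.cdf(abs(z_obs)))


def two_sided_power(mu_true: float, mu0: float, sigma: float, n: int,
                    alpha: float) -> float:
    """Power of the two-sided Z test of H0: mu = mu0 when the true mean is mu_true."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)  # z_{alpha/2}
    shift = (mu0 - mu_true) / (sigma / sqrt(n))
    return 1 - STD_NORMAL.cdf(z_crit + shift) + STD_NORMAL.cdf(-z_crit + shift)


# Laptop battery example: mu0 = 40, sigma = 1.5, n = 9, observed mean 41.08
z_obs = z_statistic(41.08, 40, 1.5, 9)
p_val = two_sided_p_value(z_obs)
power_41 = two_sided_power(41, 40, 1.5, 9, alpha=0.01)

print(f"Z_obs = {z_obs:.3f}")
print(f"two-sided p-value = {p_val:.4f}")
print(f"power at mu' = 41 with alpha = 0.01: {power_41:.3f}")
```

Calling `two_sided_power` with a larger `n`, a larger `alpha`, a smaller `sigma`, or a `mu_true` further from `mu0` shows each of the listed ways of increasing power.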
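Example: Test for p in Bernoulli distribution

Suppose $X_1, \dots, X_n$ are iid Bernoulli($p$) and $n$ is large, with $E(X_i) = \mu = p$ and $\text{Var}(X_i) = p(1-p)$.

We want to test a hypothesis about $p$, e.g. H0: $p = p_0$.

The natural estimate of $p$ is

$$\hat{p} = \frac{1}{n} \sum_{i=1}^{n} X_i = \bar{X}$$

Further, $\text{Var}(\hat{p}) = p(1-p)/n$. Under H0, $\hat{p} \sim N(p_0, p_0(1 - p_0)/n)$ approximately, by the CLT.

Test Statistic:

$$Z_{obs} = \frac{\hat{p} - p_0}{\sqrt{p_0(1 - p_0)/n}} \sim N(0, 1)$$

Calculate the p-value using the N(0,1) distribution.

Example: H0: $p = p_0$ vs Ha: $p > p_0$; p-value = $P(Z > Z_{obs})$

[Figure: shaded tail area = p-value]

Example: Do one on your own.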
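One way to work such an example is to script the large-sample test for a proportion. The sketch below is in Python, standard library only. The function name `proportion_z_test` and the data (29 successes in 200 trials, tested against $p_0 = 0.1$) are made up purely for illustration and do not come from the notes.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_test(successes: int, n: int, p0: float,
                      alternative: str = "greater") -> tuple[float, float]:
    """Large-sample Z test of H0: p = p0 for Bernoulli data.

    Returns (Z_obs, p-value). The normal approximation relies on n being large.
    """
    p_hat = successes / n                          # natural estimate of p
    z_obs = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)  # variance evaluated under H0
    std_normal = NormalDist()
    if alternative == "greater":      # Ha: p > p0
        p_value = 1 - std_normal.cdf(z_obs)
    elif alternative == "less":       # Ha: p < p0
        p_value = std_normal.cdf(z_obs)
    elif alternative == "two-sided":  # Ha: p != p0
        p_value = 2 * (1 - std_normal.cdf(abs(z_obs)))
    else:
        raise ValueError("alternative must be 'greater', 'less' or 'two-sided'")
    return z_obs, p_value


# Hypothetical data: 29 successes out of n = 200, testing H0: p = 0.1 vs Ha: p > 0.1
z_obs, p_value = proportion_z_test(29, 200, 0.1, alternative="greater")
print(f"Z_obs = {z_obs:.3f}, p-value = {p_value:.4f}")
```

The resulting p-value can then be read against the evidence scale given earlier in the notes.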