Link to Speech Notes


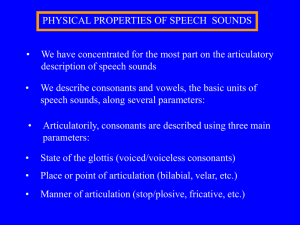

Articulatory Phonetics Links:
http://allegro.sbs.umass.edu/berthier/ArticPhonetics.html
http://hctv.humnet.ucla.edu/departments/linguistics/VowelsandConsonants/course/contents.html - loads of sounds to hear
http://www.indiana.edu/~gasser/L503/phonetics.html
http://cslu.cse.ogi.edu/tutordemos/SpectrogramReading/cse551html/cse551/cse551.html
http://cspeech.ucd.ie/~fred/courses/phonetics/acoustics2.html
http://www.essex.ac.uk/speech/teaching-03/307/307_02.html

Speech Coding:
http://www.eetkorea.com/ARTICLES/2001SEP/2001SEP05_AMD_MSD_AN1.PDF
http://www.otolith.com/otolith/olt/lpc.html - LPC - ADM

Section from Orla Duffner's PhD transfer report attached

Chapter 1 Speech and Music Characteristics

This chapter provides an introduction to the theory and characteristics of human speech. Following the highly abstract and intuitive discussion of the human perception of sound, this chapter is focussed more on scientific fact and provides the building blocks for future chapters, where current and intended work is outlined. Since the aim is to classify audio signals, the characteristics of frequently occurring signals are prime targets for learning about the task involved. One of the most frequently researched audio signals is human speech, which will be central to future work. For these reasons, some background on speech theory is needed before future work can be discussed.

1.1 Speech formation

Sound is a series of acoustic pressure changes in the medium between the sound source and the listener [9]. Speech is the acoustic wave radiated from the human vocal system when air is expelled from the lungs and is disturbed by a constriction somewhere in the vocal tract. Articulation is concerned with the paths the air takes and the degree to which it is impeded by vocal tract constrictions. Figure 1 shows the important features of the human vocal system used to generate speech.
The air flows from the lungs and travels up the trachea, a tube consisting of rings of cartilage [10, p. 39]. The air then continues through the larynx, which acts as a gate between the lungs and the mouth and consists of the glottis, vocal cords and false vocal cords. These are closed during swallowing - to ensure that food is deflected from the windpipe and lungs - and are wide open during normal breathing. From the larynx the air passes through the vocal tract, which consists of the pharynx (the connection from the oesophagus to the mouth) and the mouth or oral cavity, and is about 17 cm long for the average male. The nasal tract begins at the velum and ends at the nostrils. When the velum is lowered, the nasal tract is acoustically coupled to the vocal tract to produce the nasal sounds of speech.

As sound propagates down the vocal and nasal tracts, the frequency spectrum is shaped by the frequency selectivity of these tubes. The resonance frequencies of the vocal tract tube are called formant frequencies, or simply formants. The shape and dimensions of the vocal tract determine the formant spectral properties, and thus the different sounds that are formed.

Figure 1 - The human vocal apparatus [11, p.40]

Speech exhibits an alternating sequence of sounds that have different acoustical properties. These sounds are roughly grouped under the following four headings:

1) Vowels and vowel-like sounds [10, p.43] – These correspond to longer, tonal, quasi-periodic segments with high energy that is concentrated in the lower frequencies. They are produced by forcing air through the glottis, with the tension of the vocal cords adjusted so that they vibrate in a relaxation oscillation, thereby producing quasi-periodic pulses of air that excite the vocal tract. The short-term power spectrum always has “peaks” and “valleys”, as illustrated in Figure 2. These peaks correspond to the formants (i.e. resonances) of the vocal tract, which are described in greater detail in Section 1.3.
Figure 2 – Vowel sound example

Monophthongs and diphthongs represent the two clusters of sound in this group. Monophthongs have a single vowel quality; examples include the stressed vowels in the words beet, bit, bat, boot, but. Diphthongs are vowels that manifest a clear change in quality from start to end, for example the vowels in the words ‘bite’, ‘beaut’ and ‘boat’. The fundamental frequency of the signal corresponds to the pitch of the human voice. The range usually falls between 40-250 Hz for male voices and 120-500 Hz for female voices. The duration of vowels is variable: it depends on speaking style and is usually around 100-200 milliseconds.

2) Fricative consonants – These are noise-like short segments with lower volume, such as 'h', 'f', 'v', ‘th’ and ‘s’, as shown in Figure 3. These mostly unvoiced sounds resemble weak or strong friction noises and are generated by forming a constriction at some point in the vocal tract, usually towards the mouth end, and forcing air through the constriction at a high enough velocity to produce turbulence. This creates a broad-spectrum noise source to excite the vocal tract. Spectral energy is distributed more toward the high frequencies. The duration of these consonants is usually only tens of milliseconds.

Figure 3 - Fricative sound example

3) Stop consonants – These consist of short silent segments followed by a very short transition noise pulse or explosive sound. Also known as plosives, they result from making a complete closure, usually toward the front of the vocal tract, building up pressure behind the closure and abruptly releasing it. Examples include 'p', 't', 'k', 'b', 'd' and 'g'; an illustration of ‘g’ is shown in Figure 4.

Figure 4 - Stop consonant sound example

4) Nasals – The airflow is blocked completely in the oral tract, while a simultaneous lowering of the velum allows a weak flow of energy to pass through the nose. Examples include 'm' in ‘me’, 'n' in ‘new’, and 'ng' as in ‘sing’.
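As a rough illustration of the contrast between headings 1 and 2 above (vowel energy concentrated in the lower frequencies, fricative energy distributed toward the higher ones), the following Python sketch measures the fraction of a frame's spectral energy that lies at or above a cutoff frequency. Everything in it is an assumption made for illustration and is not taken from the report: the function name, the 8 kHz sampling rate, the 2 kHz cutoff, and the two synthetic test frames (a sum of harmonics of 125 Hz standing in for a vowel, and differenced random noise standing in for a fricative).

```python
import cmath
import math
import random

SAMPLE_RATE = 8000   # Hz, assumed for illustration
FRAME_LEN = 256      # samples (32 ms at 8 kHz), assumed
CUTOFF_HZ = 2000     # arbitrary split between "low" and "high" bands


def high_band_energy_ratio(frame, sample_rate, cutoff_hz):
    """Return the fraction of spectral energy at or above cutoff_hz.

    Uses a naive DFT over the bins strictly between DC and Nyquist,
    which is slow but adequate for one short frame.
    """
    n = len(frame)
    total = 0.0
    high = 0.0
    for k in range(1, n // 2):
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        power = abs(coeff) ** 2
        total += power
        if k * sample_rate / n >= cutoff_hz:
            high += power
    return high / total if total > 0 else 0.0


# Hypothetical vowel-like frame: eight harmonics of 125 Hz, amplitude 1/h.
vowel_like = [
    sum(math.sin(2 * math.pi * 125 * h * t / SAMPLE_RATE) / h
        for h in range(1, 9))
    for t in range(FRAME_LEN)
]

# Hypothetical fricative-like frame: first difference of uniform noise,
# which tilts the noise spectrum toward high frequencies.
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(FRAME_LEN + 1)]
fricative_like = [noise[t + 1] - noise[t] for t in range(FRAME_LEN)]

vowel_ratio = high_band_energy_ratio(vowel_like, SAMPLE_RATE, CUTOFF_HZ)
fricative_ratio = high_band_energy_ratio(fricative_like, SAMPLE_RATE, CUTOFF_HZ)
print("vowel-like high-band ratio:", round(vowel_ratio, 3))
print("fricative-like high-band ratio:", round(fricative_ratio, 3))
```

On these synthetic frames the vowel-like signal should give a ratio near zero and the noise-like signal a ratio well above one half. Real speech segments would be far less clear-cut, so a single threshold on this ratio should not be read as a working classifier.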
These sounds alternate and form the regular syllabic structure of speech. Strong temporal variations in the amplitude of speech signals are therefore observed. The average modulation frequency is 4 Hz, which corresponds to the syllabic rate. In addition, short-term spectrum changes over time are observed. Some changes occur very rapidly, such as the spectral transition of stop consonants, whereas other changes are more gradual, like the formant motions of semivowels or diphthongs (further discussed in Section 1.3).

1.2 Phonemes

Linguists classify the speech sounds used in a language into a number of abstract categories called phonemes. English, for example, has about 42 phonemes including vowels, diphthongs, semivowels and consonants, although the number varies according to the dialect of the speaker and the system of the linguist doing the classification. Phonemes are abstract categories that allow us to group together subsets of speech sounds [1, p.310]. Even though no two speech sounds, or phones, are identical, all of the phones classified into one phoneme category are similar enough that they convey the same meaning.

Different languages use different sets of phonemes. In English, for example, a phoneme which is usually called /w/ is the initial phoneme in words such as will, wool and wall, whereas in German there is no /w/ phoneme. A set of symbols has been developed to represent speech sounds not only for English, but for all existing spoken languages. The International Phonetic Alphabet (IPA)¹ is recognized as the international standard for the transcription of phonemes in all of the world's languages.

Each phoneme can be classified as either a continuant or a non-continuant sound. Continuant sounds are produced by a fixed (non-time-varying) vocal tract configuration excited by the appropriate source. The class of continuant sounds includes the vowels, fricatives (both unvoiced and voiced), and nasals.
The remaining sounds (diphthongs, semivowels, stops and affricates) are produced by a changing vocal tract configuration, and are classed as noncontinuants.

¹ More information available at www.arts.gla.ac.uk/IPA/ipachart.html

1.3 Formants

Each phoneme is distinguished by its own unique pattern in a sound spectrogram. For voiced phonemes, the signature involves large concentrations of energy called formants. Within each formant there is a characteristic strengthening and weakening of energy in all frequencies that is the most salient feature of the human voice. This cyclic pattern is caused by the repetitive opening and closing of the vocal cords, which occurs on average 125 times per second (125 Hz) in the adult male and approximately twice as fast (250 Hz) in the adult female, giving rise to the sensation of pitch [12].

Figure 5 – Audio waveform and spectrogram of speech "We were away a year ago". [13]

Formant values may vary widely from person to person, but one can learn to recognize patterns which are independent of particular frequencies and that identify the various phonemes with a high degree of reliability. The blue streaks in Figure 5 display particularly strong formants in the speech signal.

The monophthong vowels have strong stable formants; in addition, these vowels can usually be easily distinguished by the frequency values of the first two or three formants, which are called F1, F2, and F3. For these reasons the monophthong vowels are often used to illustrate the concept of formants, although all voiced phonemes have formants even if they are not as easily recognizable. Voiceless sounds are not usually said to have formants; instead, the plosives are visualized as a large burst of energy across all frequencies occurring after relative silence, while the fricatives may be considered as clouds of relatively smooth energy along both the time and frequency axes.

In the vowels, F1 can vary from 300 Hz to 1000 Hz.
The lower it is, the closer the tongue is to the roof of the mouth. The vowel /i:/ as in the word 'beet' has one of the lowest F1 values - about 300 Hz; in contrast, the vowel /A/ as in the word 'bought' (or 'Bob' in speakers who distinguish the vowels in the two words) has the highest F1 value - about 950 Hz.

F2 can vary from 850 Hz to 2500 Hz; the F2 value is proportional to how far forward or back the highest part of the tongue is in the mouth during the production of the vowel. In addition, lip rounding causes a lower F2 than with unrounded lips. For example, /i:/ as in the word 'beet' has an F2 of 2200 Hz, the highest F2 of any vowel. In the production of this vowel the tongue tip is quite far forward and the lips are unrounded. At the opposite extreme, /u/ as in the word 'boot' has an F2 of 850 Hz; in this vowel the tongue tip is very far back, and the lips are rounded.

F3 is also important in determining the phonemic quality of a given speech sound, and the higher formants such as F4 and F5 are thought to be significant in determining voice quality.

1.4 Pitch Analysis

Pitch estimation is one of the most important problems in speech processing. Pitch detectors are used in voice coders (known as vocoders) and in speaker identification and verification systems. Because of its importance, many solutions have been proposed in numerous papers and books, all of which have their limitations [14, 15]. No presently available pitch detection scheme can be expected to give perfectly satisfactory results across a wide range of speakers, applications and operating environments.

Pitch analysis tries to capture the fundamental frequency of the sound source by analyzing the final speech utterance. The fundamental frequency is the dominating frequency of the sound produced by the vocal cords. This analysis is quite difficult to perform. Often the fundamental frequency is hidden – it is not present in the sound because of a band-limited transmission channel (e.g.
telephone speech), especially when the pitch of the voice is low. We can hear only its higher harmonics and our mind “recovers” the original fundamental frequency. The quality and clarity of the audio signal also affect the performance of pitch analysis.

Several signal processing techniques have proved useful in improving pitch detection. These include low-pass filtering, spectral flattening and correlation, inverse filtering, comb filtering, cepstral processing and high-resolution spectral analysis. It is not intended to give a comprehensive review of existing techniques in this report, but rather to highlight the challenge of pitch analysis by introducing two well-known methods along with their limitations.

1.4.1 Short-term Autocorrelation Function

The autocorrelation function representation of a signal is a convenient way of displaying certain properties of the signal. The autocorrelation function attains a maximum at lags corresponding to multiples of the signal period (0, P, 2P, … where P is the signal period). So regardless of the time origin of the signal, the period can be estimated by finding the location of the first maximum after lag 0 in the autocorrelation function. This property makes the autocorrelation function an attractive basis for estimating periodicities in all sorts of signals, including speech. One of the major limitations of the autocorrelation representation is that it retains too much of the information in the speech signal. To avoid this problem, it is useful to process the speech signal to make the periodicity more prominent while suppressing other distracting features of the signal [10, p.150].

1.4.2 Short-term Fourier Analysis to obtain Harmonic content

In a narrowband time-dependent Fourier representation of speech, sharp peaks called harmonics occur at integer multiples of the fundamental frequency. These harmonics can be used to estimate the pitch even if no peak is detected at the fundamental frequency.
This method has a high noise resistance and is attractive for operation on highpass-filtered speech such as telephone speech, where the fundamental frequency may be lost or difficult to detect using autocorrelation alone [16].

Figure 6 - Speech signal energy contour (axes: time [s], Lm)

There are several problems in trying to decide which parts of the speech signal are voiced and which are not. It is also difficult to decipher the speech signal and determine which oscillations originate from the sound source and which are introduced by the filtering in the mouth. Several algorithms have been developed, but no algorithm has been found which is efficient and correct for all situations. The fundamental frequency is the strongest correlate of how the listener perceives the speaker's intonation and stress.

In summary, speech is a rapidly changing, short-term signal with large and frequent variations in frequency and amplitude. There is low long-term rhythmic content and harmonic content.
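The short-term autocorrelation approach of Section 1.4.1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a robust pitch detector and not the method used in the report: the function name, the 8 kHz sampling rate, the 512-sample frame and the synthetic 125 Hz test tone are all hypothetical, and the 40-500 Hz search range simply spans the pitch values quoted in Section 1.1. As a simplification of "the first maximum", the sketch picks the lag with the largest autocorrelation value inside that search range.

```python
import math

SAMPLE_RATE = 8000  # Hz, assumed for illustration
FRAME_LEN = 512     # samples (64 ms at 8 kHz), assumed


def autocorrelation_pitch(frame, sample_rate, f_min=40.0, f_max=500.0):
    """Estimate the fundamental frequency of one frame in Hz.

    Searches lags between sample_rate / f_max and sample_rate / f_min
    and returns sample_rate divided by the lag with the largest
    short-term autocorrelation, or None if no positive peak exists.
    """
    n = len(frame)
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(int(sample_rate / f_min), n - 1)
    best_lag = None
    best_value = 0.0
    for lag in range(min_lag, max_lag + 1):
        # Short-term autocorrelation at this lag over the available samples.
        value = sum(frame[t] * frame[t + lag] for t in range(n - lag))
        if value > best_value:
            best_lag = lag
            best_value = value
    if best_lag is None:
        return None
    return sample_rate / best_lag


# Hypothetical voiced frame: five harmonics of 125 Hz, amplitude 1/h.
voiced_like = [
    sum(math.sin(2 * math.pi * 125 * h * t / SAMPLE_RATE) / h
        for h in range(1, 6))
    for t in range(FRAME_LEN)
]

estimated_pitch = autocorrelation_pitch(voiced_like, SAMPLE_RATE)
print("estimated pitch [Hz]:", estimated_pitch)
```

With the clean synthetic tone above, the estimate should come out at 125 Hz, since the period is exactly 64 samples. On real speech the limitations described in Section 1.4.1 apply, and some pre-processing to emphasise the periodicity would normally be needed before the peak search.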