Preprocessing, Rayleigh, and scattering angle


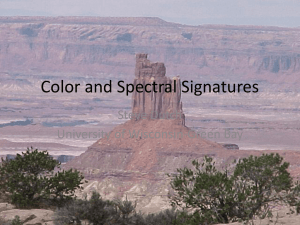

The generation of daily clean surface reflectance data involves four major steps:

1. Preprocessing
2. Rayleigh correction
3. Scattering angle correction
4. Surface image creation

The first three steps process one daily image at a time. The final step relies on temporal analysis of consecutive daily images and is the main focus of this work.

1.1 Preprocessing

Data from the SeaWiFS sensor are available for download in L1A format. L1A data include the raw radiance counts from the sensor's eight bands, plus spacecraft telemetry, calibration data, and navigation data. The L1A data we use come in the form of High Resolution Picture Transmission (HRPT) data. Each HRPT file contains the data received by a particular station while the satellite passed within that station's reception range. Most of the data used in the current analysis came from U.S. receiving stations at the Goddard Space Flight Center in Greenbelt, MD (HNSG), the University of Texas at Austin, TX (HUTX), and the Monterey Bay Aquarium Research Institute in Monterey, CA (HMBR).

The SeaWiFS swath width is 2,800 km (±58.3° from nadir) at the equator. Figure 2 shows three channels (red = 0.67 µm, green = 0.55 µm, blue = 0.41 µm) of raw L1A data over Florida. Covering a large region, such as the conterminous US, requires three to four adjacent spacecraft swaths.

The preprocessing step takes the raw L1A data as downloaded from the NASA Distributed Active Archive Center (DAAC) and produces daily, multi-band, georeferenced images with pixel values expressed in radiance (W cm⁻² sr⁻¹ µm⁻¹). Preprocessing consists of the following five steps:

1. Create geometry files
2. Filter bad pixels
3. Convert L1A to L1B
4. Georeference
5. Splice and crop

1.1.1 Create geometry files

Geometry files are needed for the radiative calculations (the Rayleigh and surface scattering angle corrections). For each swath we create an associated geometry file.
This file contains six channels: latitude, longitude, sensor azimuth, sensor zenith, solar azimuth, and solar zenith. For each pixel in the raw L1A swath, the geometry file therefore provides the associated geolocation and sun-target-sensor angles. These data are used in georeferencing and in the later corrections that have an angular dependence. The geometry file is produced with a procedure built into the ENVI software called seawifs_geometry_hdf_doit. This routine can be run in batch mode and takes the telemetry data from the L1A file as input.

1.1.2 Filter bad pixels

The L1A data occasionally contain bad pixels. Bad pixels tend to occur at the top and bottom of swaths (data granules) collected by a specific receiving station, and they are presumed to be caused by data transmission noise. A bad pixel has a value in one or more of the eight channels that differs substantially from its values in the other channels. Spectral filtering can therefore be used to detect and eliminate bad pixels. Three independent filters are used: a spike filter, a spectral variability filter, and a large jump filter. All three are variations on the same theme of spectral variability.
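The three filters defined below map naturally onto array operations. The following is a minimal Python/NumPy sketch and not the code used in this work. It assumes that the eight channels of each pixel are stored along the last axis of an array of L1A counts. It also assumes that MONOTONIC means every consecutive step ch(n+1) - ch(n) is below 30. The function names `bad_pixel_mask` and `null_bad_pixels` are hypothetical.

```python
import numpy as np

# Spike-filter thresholds for channels 1-8, taken from the spike filter rules.
SPIKE_THRESHOLDS = (200, 200, 200, 200, 170, 300, 300, 200)


def bad_pixel_mask(counts):
    """Return True where a pixel fails the spike, spectral variability, or jump filter.

    counts: array-like of L1A counts with the 8 channels on the last axis.
    """
    c = np.asarray(counts, dtype=float)

    # Spike filter: the end channels are compared with their single neighbour,
    # the interior channels with the mean of their two neighbours.
    spike = np.abs(c[..., 0] - c[..., 1]) > SPIKE_THRESHOLDS[0]
    for n in range(1, 7):
        neighbour_mean = (c[..., n - 1] + c[..., n + 1]) / 2.0
        spike = spike | (np.abs(c[..., n] - neighbour_mean) > SPIKE_THRESHOLDS[n])
    spike = spike | (np.abs(c[..., 7] - c[..., 6]) > SPIKE_THRESHOLDS[7])

    # MONOTONIC flag (assumption: every step ch(n+1) - ch(n) must be below 30).
    steps = np.diff(c, axis=-1)                 # ch2-ch1, ..., ch8-ch7
    monotonic = np.all(steps < 30, axis=-1)

    # The other two filters use only the five steps between channels 1 and 6.
    abs_steps = np.abs(steps[..., :5])
    variability = (abs_steps.mean(axis=-1) > 120) & ~monotonic
    jump = np.any(abs_steps > 350, axis=-1) & ~monotonic

    return spike | variability | jump


def null_bad_pixels(counts):
    """Set every channel of a flagged pixel to NaN (null)."""
    c = np.asarray(counts, dtype=float)
    mask = np.asarray(bad_pixel_mask(c))
    return np.where(mask[..., None], np.nan, c)
```

The sketch uses only per-pixel spectral tests and no neighbouring pixels. This matches the choice, explained below, to avoid spatial filtering.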
Spike filter: A pixel is removed by the spike filter IF:
abs(ch1-ch2) > 200 OR
abs(ch2-(ch1+ch3)/2) > 200 OR
abs(ch3-(ch2+ch4)/2) > 200 OR
abs(ch4-(ch3+ch5)/2) > 200 OR
abs(ch5-(ch4+ch6)/2) > 170 OR
abs(ch6-(ch5+ch7)/2) > 300 OR
abs(ch7-(ch6+ch8)/2) > 300 OR
abs(ch8-ch7) > 200

Spectral variability filter: A pixel is flagged as MONOTONIC IF:
(ch(n+1) - ch(n)) < 30, where n = 1, 2, ..., 7
A pixel is removed by the spectral variability filter IF:
(abs(ch1-ch2) + abs(ch2-ch3) + abs(ch3-ch4) + abs(ch4-ch5) + abs(ch5-ch6))/5 > 120 AND the pixel is NOT MONOTONIC

Jump filter: A pixel is removed by the jump filter IF:
(abs(ch1-ch2) > 350 OR abs(ch2-ch3) > 350 OR abs(ch3-ch4) > 350 OR abs(ch4-ch5) > 350 OR abs(ch5-ch6) > 350) AND the pixel is NOT MONOTONIC

Figure 4 shows example pixel spectra that these filters would eliminate.

Figure 4. Example pixel spectra removed by the various bad-pixel filters (L1A counts vs. wavelength in µm; curves: Good, Spike, Jump, Spectral Variability).

If any of the above filters flags a pixel, its value in all channels is set to null. Because bad pixels mainly appear as isolated speckles, spatial filtering could also be used to remove them. However, we have avoided spatial methods throughout the process so that the algorithm remains usable at different pixel resolutions. For example, we would like to apply the routine to SeaWiFS Global Area Coverage (GAC) data, which samples a single 1 km pixel from each 4 km square.

1.1.3 Convert L1A to L1B

This step converts pixel values from digital counts (L1A) to radiances (L1B) using the sensor calibration formulae. The SeaWiFS instrument is equipped with a bilinear amplifier. High sensitivity is maintained up to about 80% of the digital output range. Beyond that point the gain changes discontinuously, which extends the dynamic range substantially (Figure 5).
This prevents saturation over clouds and bright surfaces. The radiance is further modified by a number of calibration factors.

Figure 5. Gain curves (g_f vs. count) for all eight bands at standard Earth-viewing settings (band gain = 0).

The conversion from L1A digital counts to L1B radiance values can be expressed as

$$L1B_{\mathrm{data}} = g_f\left(L1A'_{\mathrm{data}}\right) K_m K_T K_{sm} K_t + \mathrm{Offset} \qquad (2\text{-}1)$$

where g_f(L1A'_data) is a gain function calculated from the relevant calibration table. L1A'_data is L1A_data corrected for the mean dark value, that is, what the sensor reads in known darkness. The K values are calibration factors that account for sensor variability due to mirror side (K_m), sensor temperature (K_T), the position of the pixel in the scan (scan modulation, K_sm), and time (K_t). Finally, an Offset value is added to the product of these factors (Li and Husar 1999).

The gain function is a bilinear conversion function. It is calculated from the count-to-radiance slopes and zero-offset counts in the calibration table, and these depend on band, detector, and band gain setting. The algorithm for calculating this function is taken from the SeaWiFS Data Analysis System (SeaDAS), specifically the l1bgen subroutine. Figure 5 shows the gain curves for all eight channels. Note that the instrument resolution is highest at the low radiances that occur over the cloud-free ocean.

The SeaWiFS sensor produces counts even in total darkness. This is corrected for by subtracting the mean dark value. On every scan, the sensor takes a reading of an area of known darkness. For each band, the median of these readings over all scans in the image is subtracted from L1A_data to produce L1A'_data.

The scan modulation factor accounts for the sensitivity of the detector to the position of a pixel within a scan.
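Equation (2-1) can be sketched for a single band as follows. The two-segment gain function below is a hypothetical stand-in, and so are its knee, its slopes, and the function names. The actual g_f in this work follows the SeaDAS l1bgen algorithm and the calibration table, and their structure is not reproduced here. The sketch only shows the order of operations: dark subtraction, bilinear gain, K factors, then Offset.

```python
import numpy as np


def bilinear_gain(dn, knee, slope_low, slope_high):
    """Hypothetical two-segment count-to-radiance function g_f.

    Below the knee each count is worth slope_low radiance units; above it the
    slope switches to slope_high, which extends the dynamic range.
    """
    dn = np.asarray(dn, dtype=float)
    below = slope_low * dn
    above = slope_low * knee + slope_high * (dn - knee)
    return np.where(dn <= knee, below, above)


def l1a_to_l1b(l1a, dark_readings, knee, slope_low, slope_high,
               k_m=1.0, k_temp=1.0, k_sm=1.0, k_time=1.0, offset=0.0):
    """Equation (2-1) for one band.

    l1a: counts with scan (pixel) position on the last axis.
    dark_readings: the per-scan dark counts for this band.
    k_sm: scalar or per-pixel array, broadcast along the scan (last) axis.
    """
    dark = np.median(dark_readings)             # one dark value per band and image
    l1a_prime = np.asarray(l1a, dtype=float) - dark
    g = bilinear_gain(l1a_prime, knee, slope_low, slope_high)
    return g * k_m * k_temp * np.asarray(k_sm, dtype=float) * k_time + offset
```

Passing K_sm as a per-pixel array lets the same function apply a scan modulation curve across the scan. Reversing the pixel order of that curve, as discussed below, only changes the array that is passed in.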
K_sm is available directly from the sensor calibration table. For all odd-numbered bands, K_sm decreases from the first to the last pixel. For the even-numbered bands, K_sm decreases towards the center of the image. This trend is seen in Figure 8.

Figure 8. Scan modulation correction factors (gain factor vs. pixel; even and odd bands) as provided by the sensor calibration file.

We found that this correction factor introduced a significant error into the resulting L1B radiances, and into all downstream products, for the odd-numbered bands. The error is clearest at the seams where two swaths are spliced together after being georeferenced and corrected for molecular scattering. It is most pronounced in the blue channel. Figure 9 shows the blue channel image of a seam between two spliced swaths, and the inset shows the RGB X profile of the pixels in the image. The two sides of the seam are significantly mismatched: the right side has much higher reflectance values than the left.

Figure 9. Channel 1 reflectance image at the seam of two spliced swaths and X profile of RGB reflectance.

This problem is greatly reduced by reversing the order of the scan modulation correction factors provided by the calibration table, so that they appear as in Figure 10.

Figure 10. Scan modulation correction factors (gain factor vs. pixel; even and odd bands) reversed from the original L1A order.

The improvement can be seen at the same seam as in Figure 9 when the reversed scan modulation factors are used (Figure 11).

Figure 11. Spliced channel 1 reflectance image and RGB X profile after reversing the scan modulation correction factors.
The cause of this error is not known, but the calibration data may be read backwards from the sensor calibration table. Regardless, the reversal produces much smoother seams and more reliable data. It does overcorrect somewhat at bright values, however, and could be tuned further.

1.1.4 Georeferencing

Proper georeferencing is a key step in our process, because it allows us to compare the same location across different swaths and different days. The SeaWiFS images are georeferenced with built-in ENVI functionality for the L1B data and a custom IDL routine for the geometry data. The built-in method uses triangulation with nearest-neighbor resampling and 200 warp points in both the x and y directions. The georeferenced data use a geographic latitude/longitude coordinate system with a resolution of 0.01667 degrees (1 arc-minute) per pixel in both the x and y directions. This corresponds to a pixel resolution of about 1.6 km.

1.1.5 Splice and Crop

Complete coverage of the conterminous United States requires three or four adjacent swaths, depending on the day. Adjacent swaths overlap somewhat. The images are distorted at extreme sensor zenith angles, that is, at the far left and right edges of each swath. In the overlapping areas, the spliced image therefore takes each pixel from the swath with the smallest sensor zenith angle. As a result, the seam falls where the two swaths have the same sensor zenith angle. Each swath is recorded about 90 minutes before or after its neighbors. Because clouds and haze move in that time, the seam can be visible even if the data are perfectly calibrated for angular dependencies. Once the swaths have been spliced into one large domain, the result is cropped to the desired geographical region (latitude 24° to 52°, longitude -125° to -65°), and the corresponding viewing angle data are appended to the output.
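The splice rule (for each grid cell, keep the swath with the smallest sensor zenith angle) and the crop to the study domain can be sketched with NumPy as follows. The sketch assumes that all swaths have already been georeferenced onto a common latitude/longitude grid, with the sensor zenith set to NaN wherever a swath has no data. The array layout and the function names `splice_swaths` and `crop_to_domain` are hypothetical.

```python
import numpy as np


def splice_swaths(radiance, sensor_zenith):
    """For each grid cell, keep the swath with the smallest sensor zenith angle.

    radiance: (n_swaths, n_bands, rows, cols) on a common lat/lon grid.
    sensor_zenith: (n_swaths, rows, cols), NaN where a swath has no data.
    Returns the spliced (n_bands, rows, cols) array and the chosen swath index.
    """
    zen = np.where(np.isnan(sensor_zenith), np.inf, sensor_zenith)
    best = np.argmin(zen, axis=0)                          # (rows, cols)
    covered = np.isfinite(zen.min(axis=0))                 # at least one swath has data
    spliced = np.take_along_axis(radiance, best[None, None], axis=0)[0].astype(float)
    spliced[:, ~covered] = np.nan                          # no swath covers these cells
    return spliced, best


def crop_to_domain(grid, lat, lon, lat_range=(24.0, 52.0), lon_range=(-125.0, -65.0)):
    """Crop (..., rows, cols) data to the study domain.

    lat, lon: 1-D pixel-centre coordinates of the rows and columns.
    """
    lat = np.asarray(lat)
    lon = np.asarray(lon)
    keep_rows = (lat >= lat_range[0]) & (lat <= lat_range[1])
    keep_cols = (lon >= lon_range[0]) & (lon <= lon_range[1])
    return grid[..., keep_rows, :][..., keep_cols]
```

The returned index `best` can also select the matching zenith and azimuth angles from each swath's geometry data, which keeps the appended angle channels consistent with the chosen radiances. At 1/60° per pixel, the 28° by 60° domain is about 1680 rows by 3600 columns.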
The spliced and cropped output is a self-contained, preprocessed file with 12 channels (radiances at eight wavelengths plus sun and sensor zenith and azimuth angles), georeferenced over the desired region.

1.2 Rayleigh correction

Rayleigh correction removes the effect of air (molecular) scattering from the radiance values. The result is the combined surface and aerosol reflectance of each pixel. The reflectance is calculated with the two-stream approximation proposed by Vermote and Tanré (1992). The correction depends on the optical depth of the atmosphere. Because the atmospheric path length (air mass) is largest near the edges of each swath, the Rayleigh correction is most pronounced near the seams between adjacent swaths. Air scattering is also strongest in the shortest-wavelength (blue) channels and is insignificant above the green (0.55 µm).

Surface elevation reduces the Rayleigh optical depth. The reduction factor P/P0 is approximated by

$$\frac{P}{P_0} = \exp\left(-\frac{z}{H}\right) \qquad (2\text{-}3)$$

where z is the pixel elevation and H is the scale height (8 km) (26). For example, at z = 1.6 km the factor is exp(-0.2) ≈ 0.82. The SeaWiFS sensor does not provide elevation data, so a 1 km resolution digital elevation model (DEM) is used to determine the pixel elevation.

1.3 Scattering angle correction

To compare the same location on two different days, its reflectance must not depend on the sun-target-sensor viewing angles. However, the land surface is non-Lambertian, meaning that its reflectance depends on the sun-target-sensor angles. This angular dependence is described by the Bidirectional Reflectance Distribution Function (BRDF). The angular effects are commonly ascribed to three main types of scattering. Volume scattering is caused by uniformly distributed, nonuniformly inclined scatterers such as the leaves of plant canopies. Occlusion scattering is the effect of shadows cast by objects such as trees. Specular reflectance occurs when the incoming radiation is reflected nearly unchanged (Lucht, Schaaf, and Strahler 2000).
Recent projects to determine surface BRDF often use semi-empirical models that estimate the relative influence of these three scattering types for a specific surface (Jupp and Strahler 1996; Lucht, Schaaf, and Strahler 2000; Cihlar et al. 1996). The results are used to "correct" the surface signal. Here, correction means changing a pixel spectrum to what would have been measured if the sun and sensor geometry had been at fixed standard values (Jupp and Strahler 1996).

To compare pixels from multiple days meaningfully, we normalize all reflectances to a mid-range scattering angle of 150°. The scattering angle Θ is computed from the angles in the geometry file: solar zenith (SolZ), solar azimuth (SolA), sensor zenith (SenZ), and sensor azimuth (SenA):

$$\Theta = 180^\circ - \theta \qquad (2\text{-}4)$$

where

$$\cos\theta = \cos(\mathrm{SolZ})\cos(\mathrm{SenZ}) + \sin(\mathrm{SolZ})\sin(\mathrm{SenZ})\cos(\mathrm{SolA} - \mathrm{SenA}) \qquad (2\text{-}5)$$

With this definition, Θ = 180° when the sensor and sun zenith and azimuth angles coincide, which is exact backscatter.

To isolate the angular effect on reflectance, it must be separated from the effects of varying aerosol loading and surface heterogeneity. To do this, we examine the time series of a single pixel in an area that is known to be relatively aerosol-free and whose surface type does not change over the period studied. Figure 17 shows three-month time series of the red channel reflectance and the scattering angle for such a pixel in the southwestern United States.

Figure 17. Scattering angle time series and 0.67 µm (red; channel 6) reflectance time series, 1 August to 10 October (axes: channel 6 reflectance and scattering angle in degrees).

For the pixel in Figure 17, differences in scattering angle show a near one-to-one correlation with surface reflectance differences. This dependence varies with surface type. Bright reddish surfaces, for example, are more affected by scattering angle than dark green vegetation.
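Equations (2-4) and (2-5) can be evaluated per pixel as follows. This is a minimal sketch. It assumes that all four angles are in degrees and that SolA and SenA use the same azimuth convention, so that a sensor looking straight down the solar beam gives Θ = 180°. The function name `scattering_angle` is hypothetical.

```python
import numpy as np


def scattering_angle(sol_z, sol_a, sen_z, sen_a):
    """Scattering angle in degrees from equations (2-4) and (2-5); inputs in degrees."""
    sz, sa, vz, va = (np.radians(np.asarray(x, dtype=float))
                      for x in (sol_z, sol_a, sen_z, sen_a))
    cos_theta = np.cos(sz) * np.cos(vz) + np.sin(sz) * np.sin(vz) * np.cos(sa - va)
    # Clipping guards against rounding pushing |cos_theta| slightly above 1.
    theta = np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))
    return 180.0 - theta
```

For example, with the sun at 30° zenith and a nadir view (SenZ = 0), cos θ = cos 30°, so Θ = 150°, which is the normalization target used here. The function works elementwise, so whole geometry-file channels can be passed in directly.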
The dependence of surface reflectance on scattering angle was determined by time series analysis. Figure 18 shows the reflectance of a bright soil pixel in the southwestern United States on a number of clean, cloud-free days, plotted against the pixel's scattering angle on each day.

Figure 18. Channel 6 (red) reflectance plotted versus scattering angle.

The reflectance at this location shows a very clear dependence on scattering angle. The functional form of that dependence, however, is not simple. We therefore use a lookup table to normalize all pixels to a scattering angle of 150°.

For bright soil surfaces in the western United States, the scattering angle dependence is relatively easy to determine because a large sample of clean, cloud-free days is usually available. This is not the case for other surfaces, such as darker, dense vegetation. Figure 19 shows reflectance versus scattering angle for a low-reflectance vegetation pixel. It has fewer points than Figure 18, because clouds and haze are much more common over the dark vegetation of the eastern United States. Vegetated areas also change color over the course of the year, so only a relatively short time period can be sampled.

Figure 19. Channel 6 pixel reflectance dependence on scattering angle for dark vegetation.

After sampling and analyzing pixels for a variety of surface types, we found that scattering angle dependence was important for soil and vegetation surfaces but less important for water. The correction method we apply requires only one angular parameter, the scattering angle. This works well in the West, where occlusion scattering dominates because trees are present but do not form dense canopies. The angular dependence there is therefore mostly shadow-driven and can be described adequately by scattering angle alone.
This is less true for densely vegetated areas, which have more volume scattering. Vegetated areas are very dark, however, so their angular dependence is less critical. Even so, Figure 19 shows that a scattering angle dependence exists, so a correction is applied. All pixels are normalized to a scattering angle of 150° through the following process:

1. Classify the pixel as one of:
   a. Vegetation
   b. Soil
   c. Water (correction not applied)
   d. Clouds (correction not applied)
2. Apply a correction factor from the appropriate lookup table, based on the pixel's scattering angle.

Pixels are classified with a supervised classification algorithm built into ENVI. Reference spectra for the four classes are input along with the current pixel spectrum. The pixel is assigned to the class with the most similar spectrum. Similarity is measured by summing, over all channels, the squared differences between the pixel value and the reference value. If the pixel is classified as soil or vegetation, a correction factor from the corresponding lookup table is applied. These lookup tables were generated from the analysis above, and the resulting correction factors are plotted in Figure 20. Figure 21 shows the red reflectance time series from Figure 17 after normalization to a scattering angle of 150°. The correction reduces the variance by about a factor of four (from 0.0010 to 0.00026), although residual correlation remains for this pixel.

Figure 20. Scattering angle correction factors for soil and vegetation pixels (correction factor vs. scattering angle, 110° to 180°).

Figure 21. Original and scattering-angle-corrected 0.67 µm (channel 6) reflectance time series, 1 August to 10 October (uncorrected variance = 0.0010; corrected variance = 0.00026).

The scattering angle correction does not succeed in every case. For soil surfaces at very high scattering angles (greater than 178°), a bright spot remains visible after the correction. This bright spot does not significantly affect our clean surface estimation. That process uses the lowest reflectance values in the time window to represent the clean surface, so the bright spot is ignored. It does, however, reduce the quality of aerosol retrievals.

Steps 1-3 of the overall process produce daily eight-channel reflectances that are georeferenced, Rayleigh-corrected, scattering-angle-normalized, and cropped to the desired domain. These daily files are the input for the final step, which creates the clean surface images.
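The classify-then-correct procedure of Section 1.3 can be sketched as follows. ENVI's classifier is replaced here by a plain minimum sum-of-squared-differences rule that matches the description above. The reference spectra and lookup tables a caller supplies are placeholders and are not the values from this study. The sketch also assumes three things: the correction factor multiplies reflectance, it is linearly interpolated between table entries, and one factor is applied to all channels. The function names are hypothetical.

```python
import numpy as np


def classify(pixel, references):
    """Return the class whose reference spectrum has the smallest sum of squared differences."""
    p = np.asarray(pixel, dtype=float)
    names = list(references)
    sse = [np.sum((p - np.asarray(references[name], dtype=float)) ** 2) for name in names]
    return names[int(np.argmin(sse))]


def normalize_to_150(pixel, scat_angle, references, luts):
    """Classify a pixel, then scale it by its class's lookup-table factor.

    luts maps a class name to (angles_deg, factors); classes without a table
    (here water and clouds) are returned unchanged.
    """
    p = np.asarray(pixel, dtype=float)
    cls = classify(p, references)
    if cls not in luts:
        return p, cls
    angles, factors = luts[cls]
    factor = np.interp(scat_angle, angles, factors)   # linear interpolation (assumed)
    return p * factor, cls
```

Leaving water and clouds out of `luts` is enough to skip their correction, which mirrors steps 1c and 1d of the process above.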