here - BCIT Commons

advertisement

MATH 2441

Probability and Statistics for Biological Sciences

Calculating Probabilities III:

Random Variables and Probability Distributions

A random variable is just a way of assigning a numerical result to the outcome of a random experiment. (In

mathematical terms, a random variable is a function which produces a numerical result when it acts on

elements of the sample space). The "rule" that associates probabilities with specific values or ranges of

values of a random variable is referred to as a probability distribution.

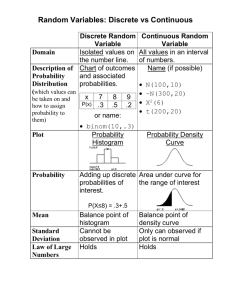

Because of their very different mathematical properties, procedures for working with discrete random

variables (random variables with possible values forming a set of distinct numerical values separated by

gaps) are different in fundamental ways from procedures for working with continuous random variables

(random variables whose possible values form one or more continuous ranges of numerical values.). We

will summarize some of these distinctions near the end of the document. However, there are also some

significant similarities between the way we work with both kinds of random variables (or perhaps "analogies"

is a better word), and you should note these as well as you review the material covered here.

A number of random variables and associated probability distributions arise frequently in applications in

biological sciences. Among the important discrete probability distributions that we will study in this course

are the binomial distribution and the Poisson distribution. We will make extensive use of two

continuous distributions: the normal distribution and the t-distribution. Two other continuous distributions

that will come up in the course are the 2-distribution (or chi-squared distribution) and the Fdistribution. There are certainly dozens, if not hundreds, of other named distributions that arise in more

specialized applications of probability and statistics.

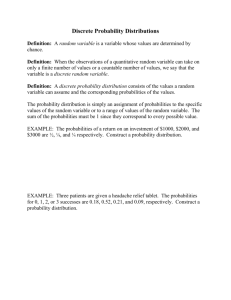

Discrete Random Variables and Probability Distributions

We've already looked at some very simple examples of discrete random variables in the first document on

this series titled "Calculating Probabilities I: Equally-likely Simple Events and Branching Diagrams." The

coin-flipping experiment described there leads to a discrete random variable.

To make things a bit more interesting here, we will consider the experiment in which a fair coin is flipped five

times (or five identical coins are flipped simultaneously). We know from previous work that this experiment

will have 25 = 32 distinct outcomes, each of which can be written as a string of five H's and T's, representing

the sequence of heads and tails observed in the experiment. It's not too hard to set up a branching diagram

to determine that the 32 possible outcomes of this experiment are:

HHHHH

HHHHT

HHHTH

HHTHH

HTHHH

THHHH

HHHTT

HHTHT

HTHHT

THHHT

HHTTH

HTHTH

THHTH

HTTHH

THTHH

TTHHH

TTTHH

TTHTH

THTTH

HTTTH

TTHHT

THTHT

HTTHT

THHTT

HTHTT

HHTTT

HTTTT

THTTT

TTHTT

TTTHT

TTTTH

TTTTT

We've sorted these 32 outcomes into columns, each corresponding to a different number of heads overall.

Now, define:

x = number of heads when a fair coin in flipped 5 times

Then, x is a random variable (because it associates a numerical value with each of the 32 outcomes listed

above), and further, it is a discrete random variable because x can have only the distinct values 0, 1, 2, 3, 4,

David W. Sabo (1999)

Calculating Probabilities III

Page 1 of 8

or 5. The listing above is organized so that the first column gives all the simple events resulting in x = 5, the

second column gives all the simple events resulting in x = 4, the third column gives the simple events

resulting in x = 3, and so on, down to x = 0 in the right-most column.

The probability distribution for x is just some way of specifying the probability of observing each possible

value of x. Since we know that the 32 outcomes listed above are simple events, and the "fair" coin

assumption means they are equally-likely and so each have a probability of 1/32, it is quite easy to work out

the probabilities associated with each of the six possible values of x.

For discrete random variables, the probability distributions are conveniently expressed in one of several

possible ways:

(i) simply tabulate the values: In this case, we can compute the probabilities associated with each

possible value of x by simply counting entries in the columns of the table above. This gives:

x

0

1

2

3

4

5

total:

Pr(x)

1/32 = 0.03125

5/32 = 0.15625

10/32 = 0.3125

10/32 = 0.3125

5/32 = 0.15625

1/32 = 0.03125

32/32 = 1

Notice that since the values, Pr(x), are just probabilities, they must obey the basic properties of probabilities:

(a) they are numbers with values between 0 and 1 inclusive

(b) the sum of the probabilities for all possible values of x must be 1.

(ii) construct a probability histogram: This is not a very practical method for discrete random variables,

but it illustrates what becomes the method of choice (indeed, the only practical method) for computing

probabilities for continuous random variables. You just construct a bar of height Pr(x) and width 1 centered

on the value of x. For the five-coin-toss example, we get:

0.350

0.3125

0.3125

0.300

Pr(x)

0.250

0.200

0.15625

0.15625

0.150

0.100

0.050

0.03125

0.03125

0.000

0

1

2

3

4

5

x

Other than the visual image this representation gives, there's no real new information here that wasn't

present in the tabulation earlier. Because we've drawn the columns for each possible value of x to have a

width of 1 unit, the shaded areas and the probabilities are equivalent.

(iii) use an algebraic formula: In many instances, actual algebraic formulas for Pr(x) can be worked out,

based on the known characteristics of the random experiment giving rise to the values of x. For example,

without going into the details of the derivation here, it is possible to discover that the values of Pr(x) for this

five-coin-toss example are given by the formula:

Page 2 of 8

Calculating Probabilities III

David W. Sabo (1999)

Pr( x )

C 5,x

32

5!

.

32 x! (5 x )!

This may look a bit scary at first, but recall that factorials are just products of whole numbers. Thus, for

example, taking x = 3, we get

Pr( x 3)

5!

5 4 3 2 1

10

0.3125

32 (3! ) (2! ) 32 3 2 1 2 1 32

(When we look at the binomial distribution in greater detail near the end of the course, you'll see that with

very little modification, this sort of formula can be used to cover an experiment with any number of coins or

their equivalent, and any degree of unequal-likelihood between the two possible simple outcomes.)

Use of Cumulative Probabilities

Very early in the course, you encountered cumulative frequencies and cumulative relative frequencies.

Cumulative probabilities are analogous. They are just probabilities that x is less than or equal to some

value. For the five-coin-toss example, we can easily tabulate cumulative probabilities using the probabilities

for the individual values of x in the table above:

k

0

1

2

3

4

5

Pr(x k)

1/32 = 0.03125

6/32 = 0.1875

16/32 = 0.5

26/32 = 0.8125

31/32 = 0.96875

32/32 = 1

Thus, for example

Pr(x 2) = Pr(x = 0) + Pr(x = 1) + Pr(x = 2) = 1/32 + 5/32 + 10/32 = 16/32 =0.5.

Cumulative probabilities are particularly useful for calculating the probability that ranges of values of x occur.

Thus, for example,

Pr(1 x 4) = Pr (x 4) - Pr(x 0)

= 0.96875 - 0.03125 = 0.9375

is a shorter calculation than

Pr(1 x 4) = Pr(x = 1) + Pr(x = 2) + Pr(x = 3) + Pr(x = 4)

= 0.15625 + 0.3125 + 0.3125 + 0.15625 = 0.9375

The difference may not look like much here, but it becomes significant in more complicated applications

where the random variable, x, may have many more possible values than just six. To calculate probabilities

using cumulative probability tables or formulas requires at the most a single subtraction, regardless of how

many possible values x may have.

The notation above is a bit awkward. In the case of actual standard probability distributions, there are

distinct symbols for the probabilities of individual outcomes as opposed to cumulative probabilities.

The detailed procedures for working with cumulative probabilities are little more complicated for discrete

probability distributions than they are for continuous probability distributions. We will look at them in greater

depth later when we deal with the binomial distribution.

David W. Sabo (1999)

Calculating Probabilities III

Page 3 of 8

Expected Values of Discrete Random Variables

Think of performing the five-coin-toss experiment many, many times, recording the number of heads

observed each time the experiment is performed. One important question is: What is the average number

of heads observed per experiment over the long run? This turns out to be quite easy to calculate.

Recall that Pr(k), the probability that you will observe the value x = k when the experiment is performed, is

equivalent to the long run relative frequency of the result x = k. So, knowing, for instance, that in the fivecoin-toss experiment, Pr(x = 1) = 5/32, we know that over the long run, 5/32 of the experiments result in

x = 1. Now, suppose that the experiment is performed N times, where N stands for some very large number.

Then, we can say the following:

x=0

x=1

x=2

x=3

x=4

x=5

will be observed approximately

will be observed approximately

will be observed approximately

will be observed approximately

will be observed approximately

will be observed approximately

(1/32)N times

(5/32)N times

(10/32)N times

(10/32)N times

(5/32)N times

(1/32)N times

Now, it is easy to estimate the sum of all the observed values of x in these N repetitions of the experiment.

The (1/32)N zeros in our list contribute (1/32)N x 0 = 0 to the sum. The (5/32)N ones in the list contribute

(5/32)N x 1 = (5/32)N to the sum. The (10/32)N twos in the sum contribute (10/32)N x 2 to the sum, and so

on. We sum up all the x-values, and divide by N to get the mean of the x values:

1

5

10

10

5

1

N 0

N 1

N 2

N 3

N 4

N 5

32

32

32

32

32

E[ x] 32

N

5

10

10

5

1

1

N

0

1

2

3

4

5

32

32

32

32

32

32

N

1

5

10

10

5

1

0

1

2

3

4

5

32

32

32

32

32

32

0 5 20 30 20 5

2 .5

32

This makes sense. Each time the experiment is done, five coin faces are exposed. Since H and T are

equally likely, it is reasonable that the long run average would have half the exposed faces being H and half

of them being T. Expressed as a number per experiment repetition, this means that an average of 2.5 H's

and 2.5 T's should occur for each repetition.

The notation, E[x], stands for "expected value of x", and is equivalent in meaning to the symbol . The first

line above simply details the sum and divide definition of a mean value. In the second line, we've removed

the common factor N from each term in the numerator, and notice that it will cancel the N in the

denominator. The third line is most revealing, because we see that (as we might have hoped had we

stopped to think deeply), the average value of x does not depend on the value of N as long as you are

contemplating enough repetitions of the experiment to make the first line approximately valid. Further,

notice that the third line is just he sum of the products of the values of x and their probabilities:

E[ x]

x Pr( x )

(RV-1)

all v alues

of x

This formula applies to any discrete random variable and its probability distribution. The value of E[x] can be

interpreted as the long run average value of x when the random experiment is repeated many times. If you

were to construct a frequency or relative frequency histogram of the outcomes of many repetitions of the

random experiment, E[x] would give you the value at x at the mean of that histogram.

Page 4 of 8

Calculating Probabilities III

David W. Sabo (1999)

The mean value of any quantity which depends on the value of x can be computed using a very similar

method, and hence a very similar formula. In general, if f(x) is some function of x, then its mean value over

many repetitions of the experiment is simply

E[f ( x )]

f ( x k ) Pr( x x k )

(RV-2)

all v alues

x k of x

In particular, the quantity

Var ( x) E[(x ) 2 ]

2

( x ) Pr( x)

(RV-3)

all v alues

of x

gives the variance of the observed values of x over the long run, and so, together with its square root (which

would be the standard deviation of the observed values of x over the long run) is a measure of how much

spread there is in the observed values of x. A probability distribution with a small value of Var(x) would give

a very tight pattern of values of x over the long run its probability histogram would be very narrow. On the

other hand, a probability distribution with a larger value of Var(x) would result in a much wider variety of

outcomes its probability histogram would be broader.

For the five-coin-toss experiment,

Var ( x ) (0 2.5) 2

1

5

10

10

5

1

(1 2.5) 2

( 2 2. 5 ) 2

( 3 2 .5 ) 2

( 4 2. 5 ) 2

(5 2.5) 2

32

32

32

32

32

32

= 1.25

Example:

A game of chance consists of flipping a coin five times. The payoff is the square of the number of heads

resulting, in dollars. What is the maximum amount of money you should be willing to pay to play this game?

Solution:

So, what this example says is: if you flip the five coins and get no heads up, you win nothing. You get $1 for

getting one head, $4 for two heads, $9 for three heads, $16 for four heads, and $25 for five heads. We

could regard winnings here as a random variable (for which we can easily work out the probability

distribution), but it is just as easy to consider winnings as a simple mathematical function, f(x) = x 2, of the

random variable x, the number of heads obtained in five flips of the coin.

Now, the less we pay to play this game, the greater our potential benefit. However, the most we should be

willing to pay is the expected value of the winnings. Using formula (RV-2), this is

E[ winnings ] 0

1

5

10

10

5

1

1

4

9

16

25

7.50

32

32

32

32

32

32

Thus, if we played this game many times, the average number of dollars won per play would be $7.50. If the

cost of playing the game is less than $7.50, then over the long run we win more money than it costs to play.

If the cost to play the game is more than $7.50, then over the long run we pay more than we win.

Here, E[winning] = $7.50 is a long run average, though. If you just play one game, you may win $25 that

game, and so would gain even if it cost you $24 to play the game. However, if you play just one game, you

might also win nothing, so that any amount paid to play would be a loss.

David W. Sabo (1999)

Calculating Probabilities III

Page 5 of 8

Continuous Random Variables

The possible values of a discrete random variable form a set of specific, isolated values, to each of which

can be assigned a probability. Even if there is in principle an infinite number of possible values of a discrete

random variable, {x1, x2, x3, …}, it makes sense to speak of Pr(x = xk), the probability of one of those

possible values occurring.

It does not make sense to talk about Pr(x = c) if x is a continuous random variable because such events, x =

c, are impossible to observe. You may think that a randomly selected apple has a mass of 176.8453 g

(pretty precise for an apple!), but how do you know it isn't really 176.84530000000001? While there is the

practical problem of present technology being limited in precision even in measuring quantities that can be

measured to many significant figures, there is a much more fundamental mathematical problem here as

well. As a result, the only meaningful events involving continuous random variables are events in which we

ask for the probability that the value of x falls into some interval of finite length: Pr(a < x < b), where a and b

are two distinct numbers. This is not as limiting in practice as you might think, because we are usually not

interested in questions of whether some quantity has a precise value, but whether its value falls within a

certain range. In fact, even the statement, x = 176.8453 g really implies that our measurement establishes

the value of x rounded to four decimal places is 176.8453 g, that is, the true value of x is somewhere

between 176.84525 g and 176.84535 g, an interval!

The most effective way for computing probabilities for continuous random variable starts with the

development of a so-called probability density function, f(x). For example, in the case of the normal

distribution, the familiar bell-curve, the probability density function can be written as:

f (x)

1

2

e ( x )

2

/ 2 2

where and are constants that would have specific values in specific applications (you should be able to

guess what these values represent!), and is just the mathematical constant 3.141592653…. . In this case,

plotting a graph of the probability density function gives the familiar bell-shape. Other probability density

functions would, of course, give other shapes.

Then, very simply:

Pr(a < x < b) = area under the graph of f(x)

between x = a and x = b.

or, in pictures,

Pr(a < x < b)

y = f(x)

x

a

Page 6 of 8

b

Calculating Probabilities III

David W. Sabo (1999)

Now, areas are expressed mathematically as integrals, and so formally, we can write

b

Pr(a x b) f ( x ) dx

(RV-4)

a

Providing f(x) is simple enough that we know how to work out the integral in this formula, we now have a

very effective way to calculate any probability we need for an event involving x.

There are some probability distributions for which (RV-4) is a practical formula. However, for the normal

distribution, and all of the other continuous distributions that we will use in this course, the integral in (RV-4)

is too impractical to attempt by hand. As a result, numerical tables have been developed to give

approximate values of the integral for most problems of practical interest.

Even though we won't use formula (RV-4) to calculate any actual numbers in this course, it does imply a

number of useful properties, which we will just note here briefly. Actual applications and implementation of

these properties will be illustrated in detail for the normal distribution in the next section of the course.

(i.)

there is no difference between strict inequality and non-strict inequality in calculations involving

continuous probability distributions. That is:

Pr(a < x < b) Pr(a x b) Pr(a x < b) Pr(a < x b)

since the difference between these is just the area above one point on the x-axis, which we know

from our study of calculus to be zero. This is consistent, for example, with the observation that the

statement x > 7.5 is indistinguishable in practice from the statement x 7.5, since x > 7.5 is

satisfied by values of x which are for all practical purposes equal to 7.5. This is not true for

discrete random variables!

Thus, we needn't be too obsessive about using < or > in place of or .

(ii.)

One property that the probability density function must satisfy is

f ( x ) dx 1

since any observation of the value of x must give a value between - and +. Thus, the total area

underneath the graph of the probability density function must always be exactly equal to 1.

area = F(b) = Pr(-< x < b)

(iii.)

Cumulative probability functions can be

defined as

F(a) = Pr(- < x a)

area = F(a) = Pr(-< x < a)

(RV-5)

That is, F(a) is the probability of observing x to

y = f(x)

have a value less than or equal to 'a', which is

the area under the graph of f(x) to the left of x

= a. As in (RV-5), we will represent the

x

generic cumulative probability function by the

a

b

symbol F(), the upper case of f(). If we have a

Pr(a < x < b) = F(b) - F(a)

formula for the cumulative probability, or, as is

more usual, if we have tables giving values of cumulative probability functions, then it is easy to

work out any probability, using the formula:

Pr(a < x < b) = F(b) - F(a)

(RV-6)

This, or some minor variation, is probably the formula you will use most often in the remainder of

the course.

David W. Sabo (1999)

Calculating Probabilities III

Page 7 of 8

(iv.)

Continuous random variables have mean values, variances, and other expected values in the same

way that discrete random variables do, except that now, the summation is replaced by an integral

involving the probability density function. Thus, for a continuous random variable x, with probability

function, f(x), we have:

E[ x] x f ( x ) dx

(RV-7a)

2 Var [ x] ( x ) 2 f ( x) dx

(RV-7b)

and, in general

E[g( x)] g( x) f ( x) dx

(RV-7c)

for some general function, g(x), of x. While we will not use these formulas much (if at all) in the

present course to calculate values of or 2 for various probability distributions, we will state the

values of these quantities in order to convey information about the overall shape of the probability

distribution. As usual, the value of tells us where the "center" of the probability distribution lies,

and the value of or 2 gives a measure of how narrow or broad the probability distribution is.

Page 8 of 8

Calculating Probabilities III

David W. Sabo (1999)