DOC - Ubiquitous Computing Lab

advertisement

09/09/2005

Lecture on Computational Complexity

Overview

Chapter 1 introduces problems, algorithms and their classifications,

problem classification, P, NP, NP-Complete problems. Chapter 2

introduces the Theory of NP-Completeness. Chapter 3 discusses

methods of proving NP completeness. Chapter 4 shows the way

problems can be analyzed using NP-Completeness theory. Chapter 5

show the techniques for proving NP-Completeness. Chapter 6 discusses

other intractable problems. Chapter 7 discusses other relevant issues.

1.1 Computers, Complexity, and Intractability

Consider the following situation:

After exhaustive brainstorming the recently recruited algorithm

designer says to the boss “ I can’t find an efficient algorithm, I guess

I’m just too dumb”

“ I can’t find an efficient algorithm, because no such algorithm is

possible!”

“I can’t find an efficient algorithm, but neither can all these famous

people.”- so recruiting somebody else will not save the employer.

Theory of computational complexity will enable you to assert the last

statement if the problem you have been asked really falls into the

category of difficult problems that well-know experts in the filed of

algorithm could not solved it, and therefore, with a high probability you

are not going to be fired.

1.2 Problems, Algorithms, and Complexity

A general question to be answered usually possessing several

parameters, or free variables, whose values are left unspecified. A

problem is described by giving: 1) a general description of all its

parameters, and 2) a statement of what properties the answer or

solution is required to satisfy.

Example of TSP

Parameters: a finite set C={c1, c2,…, cm} of cities, and for each pair of

cities ci and c j the distance d (ci , c j ) between them. A solution is an

ordering <c (1), c (2), …,c (m)> of the given cities in order to minimize

sum of distances of consecutive cities when they are arranged in a cycle

in this order.

Notion of efficiency is related to use of computing resources- computing

speed and memory. Time requirement is expressed in terms of a single

variable called size of the problem determined by the amount of bits

required to represent the problem in a computer. For TSP m can be

treated as size, again m(m-1) + could also be treated as size. It depends

upon he encoding scheme used.

Time complexity function for an algorithm expresses its time

requirement by giving for each possible input length the largest amount

of time needed by the algorithm to solve a problem instance of that size.

1.3 Polynomial Time algorithms and Intractable Problems

f(n) = O(g(n)) |f(n)| c|g(n)|

A polynomial time algorithm is bounded by a polynomial. If an

algorithm is not polynomial then it is exponential.

Comparison of several polynomial and exponential time algorithms

show fast growing nature of expo algorithms.

Time

Size n

complexity

10

20

30

40

50

60

functions

n

n2

n3

n5

2n

5n

.00001 s

.00002 s

.00003 s

.00004 s

.00005 s

.00006 s

.0001 s

.0004 s

.0009 s

.0016 s

.0025 s

.0036 s

.001 s

.008 s

.027 s

.064 s

.125 s

.216 s

.1 s

3.2 s

24.3 s

1.7 m

5.2 m

13.0 m

.001 s

1.0 s

17.9 m

12.7 d

35.7 y

366 cen

.059 s

58 m

6.5 y

3855cen

2X108 cen

1.3X1013cen

Fig. 1.2: Comparison of polynomial and exponential algorithms

Size of the largest problem instance solvable in 1 hour

Time complexity

function

n

n2

n3

n5

2n

5n

With present computer

With computer 100

times faster

with computer 1000

times faster

100N1

1000N1

N2

10N 2

31.6N2

N3

4.64N3

10N3

N4

2.5N 4

3.98N4

N5

N5 6.64

N5 9.97

N6

N6 4.19

N6 6.29

N1

Fig. 1.3 Effect of improved technology on polynomial and exponential

algorithms

Figure 1.3 shows that even if our computers speed is multiplied the

largest size of the problem that can be handled by the advanced

computers will not be a multiple of the size of the problem the current

computer can handle. This is why Jack Edmonds equated polynomial

algorithms to good algorithms and problems not solvable by poly

algorithms as not well-solved problems or intractable.

There are exceptions: for small sized problems some exponential

algorithm may be better than poly as n5 is slower than 2n for

n 20 Simplex algorithm although exponential performs on the average

very well in practice although Klee and Zadeh were too critical to find

examples where its exponential nature was revealed. Usually we do not

get algorithms requiring n100or1099 n2 time complexity .

Reasonable encoding

Encoding scheme

string

length

v[1]v[2]v[3]v[4](v[1]v[2])(v[2]v[3])

V/E list

(v[2])(v[]v3])(v[2])()

0100 /1010 / 0010 / 0000

4v 10e // 4v 10e (v e) log10 v

2v 8e // 2v 8e 2e log10 v

v 2 v 1// v 2 v 1

16/9/2005

1.4 Provably Intractable Problems

Two types of intractability- exponential time, exponential space. Later

implies former. So we deal with time complexity. TSP with a bound B is

an example of later. Possibly such problems are not well posed.

About 70 years back Alan Turing demonstrated that some problems are

really undecidable- no algorithms exist for solving them.

It is impossible to specify an algorithm which given an arbitrary

computer program and an arbitrary input to that program, can decide

whether or not the program will eventually halt when applied to that

input[1936]

Hilbert’s 10th problem(solvability of polynomial equations in integers)

are examples.

The first results of intractable decidable problems were really

artificially created. In the early 70s real life examples were produced by

Meyer, Fisher, Rabin. These problems were from finite automata theory,

formal language theory, mathematical logic.

1.5 NP-Complete Problems

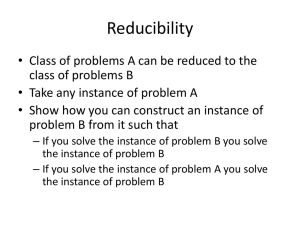

The principal technique used for demonstrating that two problems are

related is that of reducing one problem into another by giving a

constructive transformation.

Many examples are there. Prof Dantzig[1960] reduced combinatorial

optimization problems to 0-1 integer linear programming problem.

Edmonds reduced the graph theoretic problems of covering all edges

with minimum number of vertices and finding a maximum independent

set of vertices to the general set covering problem. Reduction of TSP to

shortest paths with negative edge weights allowed by Dantzig and others.

These early reductions were really isolated. The foundation for the

theory of NP-Completeness were laid down in a paper of Stephen Cook,

presented in 1971 entitled “The Complexity of Theorem proving

procedures”[1971]

First he emphasized the significance of polynomial time reducibility that

is reduction for which the required transformations can be executed

using polynomial time algorithms. Polynomial time reducibility ensures

that a polynomial time algo of one solves the other also in polynomial

time.

Second, he focused attention on the class NP of decision problems that

can be solved in polynomial time by a nondeterministic computer.

Third, he proved that one particular problem in NP,c alled the

satisfiability problem has the property that every other problem in NP

can be polynomially reduced to it. If the satisfiability problem can be

solved in polynomial time then so can be every problem in NP, and if

any problem in NP is intractable then the satisfiability problem must

also be intractable.

Finally Cook suggested that there might be some other problems in NP

which share with the satisfiability problem this property of being the

hardest member in NP.

He immediately showed that the problem “ Does a given graph G

contain a complete subgraph on a given number of vertices. Then Karp

supplied a list of 6 very important problems that formed the core of NP

complete class.

23/9/2005

Chapter 2 The Theory of NP-Completeness

2.1 Decision Problems, Languages and Encoding Scheme

A Decision problem consists of a set D of instances and a subset

Y D of yes-instances.

SUBGRAPH ISOMORPHISM PROBLEM

INSTANCE: Two graphs G1 (V1 , E1 ), G2 (V2 , E2 )

QUESTION: Does G1 contain a subgraph isomorphic to G2 ?

TRAVELLING SALESMAN

INSTANCE: A finite set of cities and non negative distances between

them and a bound B

QUESTION: Is there a tour of length <=B?

While we study decision problems optimization problems can also be

transformed into a series of decision problems. So long as cost functions

are easy to evaluate decision problems are no harder than the

optimization problems. Many decision problems can also be shown to be

not easier than their corresponding optimization problems.

For any finite set of symbols we denote by the set of all finite

strings of the symbol set. If L is a subset of then we call it a

language. Every problem is encoded into a string of symbols. These

strings can be partitioned into 3 subsets- those that are not encoding of

the problem, that are examples of yes instances and that are examples of

no instances.

Standard encoding scheme will map instances into structured strings.

a) The binary representation of an integer is a structured string

b) If x is a structured string so is [x]

c) If x1,x2,…, xn are structured strings representing the objects then

(x1,x2,…,xn) is a structured string representing the sequence

2.2 Deterministic Turing Machines and the Class P

DTM consists of a finite state control, a read-write head, and a tape

made up of a two-way infinite sequence of tape squares -2,-1,0,1,2,…

A program for a DTM specifies the following information

a) A finite set

of tape symbols, including a subset

of input symbols

and a distinguished blank symbol

b) a finite set Q of states, including a distinguished start state q0 and

two distinguished halt states qy and qn.

: (Q (qn, qy )) Q {1, 1}

c) a transition function

Date: 30/9/2005

An NDTM program is specified in exactly the same way as a DTM

programming, including the tape alphabet , input alphabet , blank

symbol b, state set Q, initial state q, halt states qy,qn, and transition

function : (Q (qn, qy)) Q {1, 1} Computation takes in 2 steps:

The first stage is the guessing stage. Input string is written in squares 1

through x and read write head is at 1 the write only head at -1 and finite

state control inactive. The guessing module directs write only head one

step at a time either to write something on the tape square being

scanned and move one sq left or right or stop at which point the

guessing module is inactive and the finite state control is activated at q0.

Notice that NDTM program M will have an infinite # of possible

computations for a given input string x, one for each possible guessed

string from * . We say that NDTM program accepts x if at least one of

these is an accepting computation.

LM {x * : M accepts x}

NP {L : there is a polynomial time NDTM program M for which LM L}

2.4 The Relationship Between P and NP

P NP

Theorem 2.1 If NP, then there is a polynomial p such that can be

solved by a deterministic algorithm having time complexity O(2 p ( n) )

q (n) c1n c2

If A is an algorithm requiring q(n) time then an NDTM need only guess

strings of length at most q(n) to get to the accepted state. Number of

guesses can be as large at most as k q ( n )

2.5 Polynomial Transformations and NP-Completeness

P and NP-P is meaningful if P NP

A polynomial transformation from a language L1 1* to a language L 2 2*

is a function f : 1* 2* that satisfies the following two conditions:

a) There is a polynomial time DTM program that computes f

b) x 1* , x L1 f ( x) L2

Lemma 2.1 If L1L2 , then L2 P implies L1 P

HAMILTONIAN CIRCUIT

INSTANCE: A graph G=(V,E)

QUESTION: Does G contain a Hamiltonian Circuit?

Lemma 2.2 If L1L2 , L2L3 L1L3

Lemma 2.3 If L1 and L2 belong to NP, L1 is No-Complete, and L1 poly

reducible to L2, then L2 id NP-Complete.

Theorem 2.1 (Cook’s Theorem) SATISFIABILITY is NP-Complete.

In NP is obvious. Now every language in NP can be described by a

polynomial time NDTM program that recognizes it.

3. Proving NP-Completeness Results

1) Showing that NP

2) selecting a know NP-Complete problem

3) constructing a polynomial transformation f from to

4) proving that f is a (polynomial) transformation.

3.2 Six Basic NP-Complete Problems

3-SATISFIABILITY (3-SAT)

INSTANCE: Collection C={c1,c2,…,cm} of clauses on a finite set U of

variables such that |ci|=3 for all i

QUESTION: Is there a truth assignment for U that satisfies all the

clauses in C?

3-DIMENSIONAL MATCHING (3DM)

INSTANCE: M W X Y , where W,X,Y are disjoint sets having the

same number q of elements.

QUESTION: Does M contain a matching, that is, a subset M’ in M such

that |M’|=q and no 2 elements of M’ agree in any coordinate?

VERTEX COVER (VC)

INSTANCE: A graph G=(V,E) and a positive integer K<=|V|

QUESTION: Is there a vertex cover of size K or less for G, that is a

subset V’ of V such that |V’|<=K and for each edge (u,v) in E, at least

one of u and v belongs to V’?

CLIQUE

INSTANCE: A graph G=(V,E) and a positive integer J<=|V|

QUESTION: Does G contain a clique of size J or more, that is a subset

of vertices V’ in V such that {V’|>=J and every 2 vertices of V’ are

joined by an edge in E?

HAMILTONIAN CIRCUIT (HC)

INSTANCE: A graph G=(V,E)

QUESTION: Does G contain a HC, that is, an ordering<v1,v2,…,vn> of

the vertices such that all consecutive edges are there including from the

last to the first vertex in G?

PARTITION

INSTANCE: A finite set A and a size s(a) +ve for each a in A.

QUESTION: Is there a subset A’ in A such that sum of elements in both

the partitions are the same?

SATISFIABILITY TO 3SAT TO (3DM AND VC), 3DM TO

PARTITION VC TO (HC AND CLIQUE)

14102005

Proving NP-Completeness Results

3-SATISFIABILITY is a restricted version of satisfiability in which all

instances have exactly three literals per clause.

Theorem 3.1 3-SATISFAIABILITY is NP-Complete.

Proof: it is easy to show that 3SAT NP since a NDTM need only guess a

truth assignment for the variables and check in polynomial time

whether that truth setting satisfies all the given 3-literal clauses.

We transform SAT to 3SAT. Let U {u1 , u2 ,..., un } be a set of variables and

C {c1 , c2, ..., cm } be a set of clauses making up an arbitrary instance of

SAT. We shall construct a collection C of 3-literal clauses on a set U of

variables such that C is satisfiable iff C is satisfiable. Now we have

U U

U j and C C j

Thus we need only to show how C j and U j can be constructed from c j . Let

c j be given by {z1 , z2 ,..., zk } where the zi ’s are all literals derived from the

variables in U . The way these variables are clauses are formed depends

on the value of k

Case 1.

k 1,U j { y j1 , y j 2 }

C j {z1 , y j1 , y j 2 },{z1 , y j1 , y j 2 },{z1 , y j1 , y j 2 },{z1, y j1 , y j 2 }

Case 2. k 2,U j { y j1}, Cj {{z1, z2 , y j1},{z1, z2 , y j1}

Case 3. k 3, U j , C j {{c j }}

Case 4. k 3, k 3, U j { y j i :1 i k 3}

Cj {{z1 , z2 , y j1}} {{ y j i , zi 2 , y j i 1}:1 i k 4}

{{ y j k 3 , zk 1 , zk }}

To prove that this is indeed a transformation, we must show that the set C is satisfiable

iff C is satisfiable. It is very easy to show that transformations are polynomial and that if

the sets of clauses are simultaneously satisfied.

3.1.2 3-DIMENSIONAL MATCHING

INSTANCE: M W X Y , where W,X,Y are disjoint sets having the

same number q of elements.

QUESTION: Does M contain a matching, that is, a subset M in M such

that | M | q | and no 2 elements of M agree in any coordinate?

Theorem 3.2 3-DIMENSIONAL MATCHING is NP-Complete.

It is easy to show that 3DM NP since a nondeterministic algorithm

need only guess a subset q | W || X || Y | triples from M and check in

polynomial time that no two of the guessed triples agree in any

coordinate.

We will transform 3SAT to 3DM. Let U {u1 , u2 ,..., un } be the set of variables

and C {c1 , c2 ,..., cm} be the set of clauses in an arbitrary instance of 3SAT.

We must construct disjoint sets W,X and Y, with | W || X || Y | and a set

M W X Y such that M contains a matching iff C is satisfiable.

The set of ordered triples can be partitioned into 3 separate classes,

grouped according to their intended functions: “truth-setting and fanout(TSFO)”, satisfaction-testing(SF)”, or “garbage collection(GC)”.

Each TSFO corresponds to a single variable u U , and its structure

depends on the total number m of clauses in C. In general TSFO

component for a variable ui involves “internal” elements

ai j [ X ]and bi j [Y ],1 j m , which will not occur in any triples outside of this

component, and “external” elements ui [ j ], ui [ j ] W ,1 j m , which will

occur in other triples. The triples making up this component can be

divided into 2 sets:

Ti t {ui [ j ], ai [ j ], bi [ j ]:1 j m}

Ti f {ui [ j ], ai [ j ], bi [ j ]:1 j m} Ti t {(ui [m], ai [1], bi [m])}

Since none of the internal elements ai j [ X ]and bi j [Y ],1 j m will appear in

nay triples outside of Ti Ti t Ti f , it is easy to see that any matching

M will have to include exactly m triples from Ti , either all from

Ti t or all from Ti f . So this will be forcing a matching to make a choice

between setting ui true or false.

Each ST component in M corresponds to a single clause c j C . It

involves only 2 internal elements s1[ j ] X and s2 [ j ] Y and external

elements from ui [ j ], ui [ j ] W ,1 i n , determined by which literals occur

in clause c j . The set of triples making this component is defined as

follows:

C j {(ui [ j ], s1[ j ], s2 [ j ]) : ui c j } {(ui [ j ], s1[ j ], s2 [ j ]) : ui c j }

Thus any matching M M will have to choose exactly one triple from

C j . This can only be done, however, if some ui [ j ]or ui [ j ] does not occur in

triples Ti M , which will be the case if and only if truth setting

determined by M satisfies clause c j .

The construction is completed by means of one large GC component G,

involving internal elements g1[k ] X and g 2 [k ] Y ,1 k m(n 1) and

external elements of the form ui [ j ]or ui [ j ] W . It consists of the following

triples:

G {(ui [ j ], g1[k ], g2 [k ]),(ui [ j ], g1[k ], g 2[k ]) :1 k m(n 1),1 i n,1 j m}

Thus each pair g1[k ] X and g2 [k ] Y must be matched with a unique

ui [ j ]or ui [ j ] that does not occur in any triples M G. There are exactly

m( n 1) such uncovered external elements.

To summarize we set

W {ui [ j ], ui [ j ]:1 i n,1 j m}

X A S1 G1 , where A {ai [ j ]:1 i n,1 j m}

S1 {s1[ j ]:1 j m}, G1 {g1[ j ],1 j m(n 1)}

Y B S2 G2 , where B {bi [ j ]:1 i n,1 j m}

S2 {s2 [ j ],1 j m}, G2 {g 2 [ j ]:1 j m(n 1)}

and M ( Ti )

( Cj ) G

Notice that M W X Y as required. Furthermore since M contains only

2mn 3m 2m2 n(n 1) triples the construction is polynomial.

It is easily verifiable that M cannot contain a matching unless C is

satisfiable. We now show that the existence of a satisfying truth

assignment for C implies that M contains a matching.

Let t : U {T , F } be any satisfying truth assignment for C. We construct

a matching M M as follows: For each clause c j C , let

z j {ui , ui ,1 i n} c j be a literal that is set true by t(one must exist). We

then set

M (T t i ) (T f i ) ({( z j [ j ], s1[ j ], s2[ j ])}) G, where G is appropriately

chosen subcollection of G that includes all g1[k ], g2[k ] and remaining u

variables.

EXACT COVER BY 3-SETS(X3C)

INSTANCE: A finite set X with |X|=3q and a collection of 3-element

subsets of X/

QUESTION: Does C contain an exact cover for X, that is, a

subcollection C C such that every element of X occurs in exactly one

member of C ?

Note that every instance of 3DM can be viewed as an instance of X3C

simply by regarding it as an unordered subset of W X Y . Thus 3DM is

just a restricted version of X3C.

21102005

***************************

VERTEX COVER and CLIQUE

Despite the fact that VERTEX COVER and CLIQUE are

independently useful for proving NP-Completeness results, they are just

really different ways of looking at the same problem. To see this, it is

convenient to consider them in conjunction with a third problem, called

INDEPENDENT SET.

An independent set in a graph G=(V,E) is a subset

V V | u, v V (u, v) E . The INDEPENDENT SET problem asks, for a

given graph G=(V,E), and a positive integer J | V | whether G contains

an independent set V having | V | J . The following relationships between

independent sets, cliques, and vertex covers are easy to verify,

Lemma 3.1 For any graph G=(V,E), and subset V V , the following

statements are equivalent:

a) V is a vertex cover for G

b) V V is an independent set for G.

c) V V is a clique in the complement G c of G, where

G c (V , E c ) with E c {{u, v}: u, v V and {u, v} E}

Thus we see that in a strong sense these three problems might be

regarded as “different versions” of one another.

Theorem 3.3 VERTEX COVER is NP-Complete.

Proof: It is easy to see that VC NP since a nondeterministic algorithm

need only guess a subset of vertices and check in polynomial time

whether that subset contains at least one endpoint of every edge and has

the appropriate size.

We

transform

3SAT

to

VERTEX

COVER.

Let

U {u1 , u2 ,..., un }and C {c1 , c2 ,..., cm} be any instance of 3SAT. We must

construct a graph G=(V,E) and a positive integer K | V | such that G has

a vertex cover of size K or less iff C is satisfiable.

The construction is as follows:

For each variable ui U , there is a truth setting component

Ti (Vi , Ei ) with Vi {ui , ui } and Ei {{ui , ui }} Note that any vertex cover will

have to contain at least one of the vertices ui , ui

For each clause c j C , there is a satisfaction testing component

S j (V j, E j ) , consisting of 3 vertices and 3 edges joining them to form a

triangle:

V j {a1[ j ], b1[ j ], a3[ j ]}

Ej {{a1[ j ], a2 [ j ]},{a1[ j ], a3[ j ]},{a2 [ j ], a3[ j ]}}

Note that any vertex cover will have to contain at least 2 vertices from

V j in order to cover all the edges of the triangle.

The only part of the construction that depends on which literals occur in

which clauses is the collection of communication edges. These are best

viewed from the vantage point of satisfaction testing components. For

each clause c j C , let the 3 literals be denoted by x j , y j , and z j . Then the

communication edges emanating from S j are given by:

E j {{a1[ j ], x j },{a2 [ j ], y j },{a3[ j ], z j }}

The construction of our instance of VC is completed by setting

K n 2m and G (V , E ), where

V ( Vi ) ( V j)

E ( Ei ) ( E j ) ( E j )

It is easy to see how the construction can be accomplished in polynomial

time. All that remains to be shown is that C is satisfiable iff G has a

vertex cover of size K or less.

First, suppose that V V is a vertex cover of G with | V | K . By our

previous remarks, V must contain at least one vertex from each Ti and

at least 2 vertices from each S j . Since this gives a total of at least

n 2m K vertices, V must in fact contain exactly one vertex from each

Ti and exactly 2 vertices from each S j . Thus we can use the way in

which V intersects each truth setting component to obtain a truth

t : U {T , F }

assignment

.

We

merely

set

t (ui ) T if ui V and t (ui ) F if ui V .

To see that this truth assignment satisfies each of the clause c j C ,

consider the 3 edges in E j . Only 2 of these edges can be covered by

vertices in V j V , so one of them must be covered by a vertex from

Vi that belongs to V . But that implies that the corresponding literal

ui or ui from clause c j is true under the truth assignment t, it follows that

t is satisfying truth assignment for C.

Conversely suppose that t : U {T , F } is a satisfying truth assignment for

C. The corresponding vertex cover V includes one vertex from each Ti

and 2 vertices from each S j . The vertex from Ti in V is ui if

t (ui ) T and ui if t (ui ) F . This ensures that at least one of the 3 edges from

each set E j is covered, because t satisfies each clause c j . Therefore we

need only include in V the endpoints from S j of the other 2 edges in E j .

HAMILTONIAN CIRCUIT(HC)

For convenience whenever v1 , v2 ,..., vn is a Hamiltonian circuit, we

shall refer to {vi , vi 1},1 i n,and {vn , v1} as the edges in the circuit.

Theorem 3.4 HAMILTONIAN CIRCUIT is NP-Complete.

Proof: it is easy to see that HC NP , because a nondeterministic

algorithm need only guess an ordering of the vertices and check in

polynomial time that all the required edges belong to the edge set of a

given graph.

We transform VERTEX COVER to HC. Let an arbitrary instance of

VC be given by the graph G=(V,E) and the positive integer K | V | . We

must construct a graph G (V , E ) such that G has a HC if G has a

vertex cover of size K or less.

Once more our construction can be viewed in terms of components

connected together by communication links. First, the graph G has K

selector vertices a1 , a2 ,..., ak which will be used to select K vertices from

the vertex set V of G. Second for each edge in E, G contains a cover

testing component that will be used to ensure that at least one endpoint

of that edges is among the selected K vertices. The component for

e {u , v} E has 12 vertices Ve {(u, e, i),(v, e, i):1 i 6} and 14 edges

Ee {{(u, e, i),(u, e, i 1)},{(v, e, i),(v, e, i 1)}:1 i 5}

{{(u, e,3),(v, e,1)},{(v, e,3),(u, e,1)}} {{(u, e,6),(v, e, 4)},{(v, e,6),(u, e, 4)}}

In the completed construction, the only vertices from this cover-testing

component that will be involved in any additional edges are

(u,e,1),(v,e,1), (u,e,6) and (v,e,6).

It may be verified that any HC will have to meet the edges in Ee in

exactly one of 3 configurations. This for example, if the circuit enters

this component at (u,e,1) it will have to exit at (u,e,6) and visit wither all

12 vertices in the component or just the 6 vertices (u,e,i), 1<=i<=6, or

(u,e,1)-(u,e,2)-(u,e,3)- (v,e,1)-(v,e,2)-(v,e,3)- (v,e,4)-(v,e,5)-(v,e,6)- (u,e,4)(u,e,5)-(u,e,6)

Additional edges in our construction will serve to join pairs of covertesting components or to join a cover-testing component to a selector

vertex. For each vertex v of V, let the edges incident on v be ordered as

ev[1] , ev[2] ,..., ev[deg( v )]

Ev {{(v, ev[i ] , 6), (v, ev[i 1] ,1)}:1 i deg(v)}

The final connecting edges in G join the first and last vertices from

each of these paths to every one of the selector vertices a1 , a2 ,..., ak . These

edges are specified as follows:

E {{ai , (v, ev[1] ,1},{ai , (v, ev[deg( v )] , 6)}:1 i K , v V } The completed graph

G (V , E ) has

V {ai :1 i K } (Ve) and

E ( Ee ) ( Ev ) E

It is not hard to see that G can be constructed from G and K in

polynomial time.

We claim that G has a HC iff G has a vertex cover of size K or less.

3.1.5 PARTITION

Theorem 3.5 PARTITION is NP-Complete

Proof: It is easy to see that PARTITION∈NP, since a non-deterministic

algorithm need only guess a subset A of A and check in polynomial

time that the sum of the sizes of the elements in A is the same as that fro

the elements remaining in A A

We transform 3DM to PARTITION. Let the sets W,X,Y, with , and

M W X Y be an arbitrary instance of 3DM. Let the elements of these

sets be denoted by

W {w1 , w2 ,..., wq }

X {x1 , x2 ,..., xq }

Y { y1 , y2 ,..., yq }

And

M {m1 , m2 ,..., mk }

Where |M|=k. We must construct a set A, and a size s(a) Z for each

a A such that A contains a subset A satisfying

s(a) s(a) iff M contains a matching.

aA

aA A

The set A will contain a total of k+2 elements and will be constructed in

two steps. The first k elements of A are {ai :1 i k} , where the element

ai is associated with the triple mi M . The size s(ai ) of ai will be specified

by giving its binary representation in terms of strings of 0’s and 1’s

divided into 3q “zones” of p log2 (k 1) each of these zones is labeled by

an element of W X Y

The representation for s(ai ) depends on the corresponding triple. It has

a 1 in the rightmost bit position of the zones labeled by w f (i ) , xg (i ) , yh (i ) and

0’s everywhere else. Alternatively we can write

s(ai ) 2 p (3q f (i )) 2 p (2 q g (i )) 2 p ( q h (i ))

Since each s(ai ) can be expressed in binary with no more than 3pq bits,

it is clear that s(a) can be constructed from the given 3DM instance in

polynomial time.

The important thing to observe about this part of construction is that, if

we sum up all the entries in any zone, over all elements of {ai :1 i k} ,

thee total number never exceed k 2 p 1 . Hence in adding up s(a) for

aA

any subset A {ai :1 i k} there will be never any carries from one zone

to the other. It follows that if we let

3q 1

B 2 pj then any subset A {ai :1 i k} will satisfy

j 0

s(a) B .

aA

The final step of the construction specifies the last 2 elements of A.

These are denoted by b1 and b2 and have sizes defined by

k

s(b1 ) 2( s(ai )) B

i 1

k

s(b2 ) ( s(ai )) B

i 1

Both of these can be specified in binary with no more that 3pq+1 bits

and thus can be constructed in polynomial time of the size of 3DM.