Lecture Notes for Algorithms (Department of Information Management)

Department of Information Management, National Sun Yat-sen University

黃三益

2001/9/25

Chapter 1, 2 Algorithm Analysis

Analyze the insertion sort algorithm

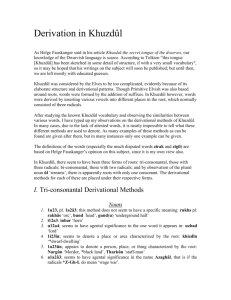

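INSERTION-SORT(A)

| Statement | Cost | Times |
| --- | --- | --- |
| for j ← 2 to length[A] | $c_1$ | $n$ |
| &emsp;key ← A[j] | $c_2$ | $n-1$ |
| &emsp;/* insert A[j] into the sorted sequence A[1..j-1] */ | 0 | $n-1$ |
| &emsp;i ← j-1 | $c_4$ | $n-1$ |
| &emsp;while i > 0 and A[i] > key | $c_5$ | $\sum_{j=2}^{n} t_j$ |
| &emsp;&emsp;A[i+1] ← A[i] | $c_6$ | $\sum_{j=2}^{n} (t_j - 1)$ |
| &emsp;&emsp;i ← i-1 | $c_7$ | $\sum_{j=2}^{n} (t_j - 1)$ |
| &emsp;A[i+1] ← key | $c_8$ | $n-1$ |

Here $t_j$ is the number of times the while-loop test is executed for that value of $j$. Summing cost × times over all statements gives

$$T(n) = c_1 n + c_2 (n-1) + c_4 (n-1) + c_5 \sum_{j=2}^{n} t_j + c_6 \sum_{j=2}^{n} (t_j - 1) + c_7 \sum_{j=2}^{n} (t_j - 1) + c_8 (n-1)$$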

In the best case, where the input array is already sorted, $t_j = 1$ for $j = 2, 3, \ldots, n$.

Therefore the running time is

$$T(n) = c_1 n + c_2 (n-1) + c_4 (n-1) + c_5 (n-1) + c_8 (n-1) = (c_1 + c_2 + c_4 + c_5 + c_8)\,n - (c_2 + c_4 + c_5 + c_8)$$

We call T(n) a linear function of n.

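In the worst case, where the input array is in descending order, $t_j = j$ for $j = 2, 3, \ldots, n$. Since

$$\sum_{j=2}^{n} j = \frac{n(n+1)}{2} - 1, \qquad \sum_{j=2}^{n} (j-1) = \frac{n(n-1)}{2},$$

the running time becomes

$$T(n) = c_1 n + c_2 (n-1) + c_4 (n-1) + c_5 \left(\frac{n(n+1)}{2} - 1\right) + c_6 \left(\frac{n(n-1)}{2}\right) + c_7 \left(\frac{n(n-1)}{2}\right) + c_8 (n-1)$$

$$= \left(\frac{c_5}{2} + \frac{c_6}{2} + \frac{c_7}{2}\right) n^2 + \left(c_1 + c_2 + c_4 + \frac{c_5}{2} - \frac{c_6}{2} - \frac{c_7}{2} + c_8\right) n - (c_2 + c_4 + c_5 + c_8)$$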

We call T(n) a quadratic function of n.
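The best-case and worst-case counts can be checked empirically. The following is a minimal Python sketch, not part of the original notes: the function name `insertion_sort_counting` and the dictionary `t` are illustrative choices, and the code uses 0-based indices, so list index `j` corresponds to position `j + 1` in the pseudocode.

```python
def insertion_sort_counting(items):
    """Sort a copy of `items` and record t_j, the number of while-loop tests
    performed for each j = 2..n (1-based, as in the notes)."""
    a = list(items)
    t = {}
    for j in range(1, len(a)):        # 0-based index j is position j + 1 in the notes
        key = a[j]
        i = j - 1
        tests = 1                     # counts the final test, which ends the loop
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]           # shift the larger element one slot to the right
            i -= 1
            tests += 1                # one more test after every completed iteration
        a[i + 1] = key
        t[j + 1] = tests
    return a, t


n = 6
_, best = insertion_sort_counting(range(1, n + 1))     # already sorted input
_, worst = insertion_sort_counting(range(n, 0, -1))    # descending input
print(sum(best.values()), n - 1)                       # the two values agree
print(sum(worst.values()), n * (n + 1) // 2 - 1)       # the two values agree
```

On sorted input every $t_j$ is 1, and on descending input $t_j = j$, which matches the two sums used in the analysis.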

In most cases, only the worst case is considered. Sometimes, we also consider

the average-case or the expected running time of an algorithm. For the average

case analysis, it is commonly assumed that all inputs of a given size are equally

likely.

Q: What is the average running time of INSERTION-SORT?

Merge Sort

It uses the divide-and-conquer approach:

Divide: Divide the n-element sequence into two subsequences of n/2 elements each.

Conquer: Sort the two subsequences recursively using merge sort.

Combine: Merge the two sorted subsequences to produce the sorted answer.

The pseudo-code is as follows:

MERGE-SORT(A, p, r)

if p < r

    then q ← ⌊(p + r)/2⌋

        MERGE-SORT(A, p, q)

        MERGE-SORT(A, q + 1, r)

        MERGE(A, p, q, r)
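A runnable version of the pseudocode might look like the Python sketch below. The notes do not spell out MERGE, so the `merge` helper here is an assumption: one common way to combine two sorted runs using temporary copies. Indices are 0-based and inclusive, unlike the 1-based arrays of the pseudocode.

```python
def merge(a, p, q, r):
    """Merge the sorted runs a[p..q] and a[q+1..r] (inclusive bounds) in place."""
    left, right = a[p:q + 1], a[q + 1:r + 1]   # temporary copies of both runs
    i = j = 0
    k = p
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:                # <= keeps equal keys in their original order
            a[k] = left[i]
            i += 1
        else:
            a[k] = right[j]
            j += 1
        k += 1
    a[k:r + 1] = left[i:] + right[j:]          # exactly one of these leftovers is non-empty


def merge_sort(a, p, r):
    """Sort a[p..r] in place by divide and conquer."""
    if p < r:
        q = (p + r) // 2                       # floor of the midpoint
        merge_sort(a, p, q)
        merge_sort(a, q + 1, r)
        merge(a, p, q, r)


data = [5, 2, 4, 7, 1, 3, 2, 6]
merge_sort(data, 0, len(data) - 1)
print(data)
```

Copying the two runs costs extra memory proportional to the range being merged. That keeps the merge step linear in the number of elements, which is the cost the recurrence for T(n) relies on.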

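The running time T(n) is given below:

$$T(n) = \begin{cases} 1 & \text{if } n = 1, \\ 2T(n/2) + n & \text{if } n > 1. \end{cases}$$

Q: Show that if $n = 2^k$, then $T(n) = n \lg n + n$, which is dominated by the $n \lg n$ term.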
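Before attempting a proof, the recurrence can be evaluated directly for small powers of two. The sketch below is only a numerical check under the base case T(1) = 1 stated above, and the function name `T` is chosen to mirror the notes. With that base case the values come out as $n \lg n + n$, whose leading term is $n \lg n$.

```python
def T(n):
    """Evaluate T(1) = 1, T(n) = 2*T(n/2) + n for n a power of two."""
    return 1 if n == 1 else 2 * T(n // 2) + n


for k in range(6):
    n = 2 ** k
    # Columns: n, T(n), and the closed form n*lg(n) + n with lg(n) = k
    print(n, T(n), n * k + n)
```

A check like this does not replace the induction argument, but it shows what the closed form has to be.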

Θ-notation

$\Theta(g(n))$ is a set of functions as defined below:

$\Theta(g(n))$ = {$f(n)$: there exist positive constants $c_1$, $c_2$, and $n_0$ such that $0 \le c_1 g(n) \le f(n) \le c_2 g(n)$ for all $n \ge n_0$}.

It is obvious that $\Theta(n^2)$ includes many quadratic functions, such as $5n^2$, $0.5n^2 - 3n$, and $0.5n^2 + 9n + 3$. [Q: what are the values for $c_1$, $c_2$, and $n_0$ in each case?]
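Candidate constants can be sanity-checked numerically. The Python sketch below tests the definition over a finite range only, so it can refute a bad choice but cannot prove a good one. The helper name `theta_witness_holds` and the cutoff `n_max` are illustrative assumptions, and the constants $c_1 = 1/14$, $c_2 = 1/2$, $n_0 = 7$ for $0.5n^2 - 3n$ are one valid choice among many.

```python
from fractions import Fraction


def theta_witness_holds(f, g, c1, c2, n0, n_max=10_000):
    """Check 0 <= c1*g(n) <= f(n) <= c2*g(n) for every integer n in [n0, n_max]."""
    return all(0 <= c1 * g(n) <= f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


def f(n):
    return Fraction(1, 2) * n * n - 3 * n      # 0.5 n^2 - 3n, computed exactly


def g(n):
    return n * n


print(theta_witness_holds(f, g, Fraction(1, 14), Fraction(1, 2), n0=7))
print(theta_witness_holds(f, g, Fraction(1, 14), Fraction(1, 2), n0=6))
```

The second call fails because $f(6) = 0$, which is below $c_1 g(6)$. So for this choice of $c_1$ the threshold $n_0$ cannot be smaller than 7.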

Strictly speaking, we should write $2n^2 + 9n + 3 \in \Theta(n^2)$. However, by convention, equality (=) is abused to denote set membership. That is, we usually write $2n^2 + 9n + 3 = \Theta(n^2)$.

We say that $g(n)$ is an asymptotically tight bound for $f(n)$ if $f(n) = \Theta(g(n))$.

More precisely, the running time T(n) of merge sort should be written as

$$T(n) = \begin{cases} \Theta(1) & \text{if } n = 1, \\ 2T(n/2) + \Theta(n) & \text{if } n > 1. \end{cases}$$

O-notation

O-notation deals with only an asymptotic upper bound. It is formally defined as

below:

$O(g(n))$ = {$f(n)$: there exist positive constants $c$ and $n_0$ such that $0 \le f(n) \le c\,g(n)$ for all $n \ge n_0$}.

Similarly, to denote that a function f(n) is a member of O(g(n)), we write f(n) =

O(g(n)).

O-notation is mainly used to analyze the worst-case running time of an

algorithm.

Ω-notation

In contrast to O-notation, Ω-notation provides an asymptotic lower bound. It is formally defined as below:

$\Omega(g(n))$ = {$f(n)$: there exist positive constants $c$ and $n_0$ such that $0 \le c\,g(n) \le f(n)$ for all $n \ge n_0$}.

Ω-notation is mainly used to analyze the best-case running time of an algorithm.

o-notation

o-notation denotes an upper bound that is not asymptotically tight. That is,

$o(g(n))$ = {$f(n)$: for any positive constant $c > 0$, there exists a constant $n_0 > 0$ such that $0 \le f(n) < c\,g(n)$ for all $n \ge n_0$}.

That is,

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} = 0.$$
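As a quick illustration of the limit criterion for o-notation, consider two functions compared against $n^2$. The functions are chosen here only as examples and do not come from the notes.

```latex
\lim_{n\to\infty} \frac{2n}{n^2} = \lim_{n\to\infty} \frac{2}{n} = 0
\quad\Longrightarrow\quad 2n = o(n^2),
\qquad\qquad
\lim_{n\to\infty} \frac{2n^2}{n^2} = 2 \neq 0
\quad\Longrightarrow\quad 2n^2 \neq o(n^2).
```

Both functions are $O(n^2)$, but only the first is $o(n^2)$, because the bound $n^2$ is tight for the second.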

ω-notation

By analogy, ω-notation is used to denote a lower bound that is not asymptotically tight. It is formally defined as below:

$\omega(g(n))$ = {$f(n)$: for any positive constant $c > 0$, there exists a constant $n_0 > 0$ such that $0 \le c\,g(n) < f(n)$ for all $n \ge n_0$}.

That is,

$$\lim_{n \to \infty} \frac{f(n)}{g(n)} = \infty.$$

Exercises

2.1-1 and 2.1-4

Other common functions

(monotonically increasing): A function f(n) is monotonically increasing if $m \le n$ implies $f(m) \le f(n)$.

(strictly increasing): A function f(n) is strictly increasing if $m < n$ implies $f(m) < f(n)$.

(monotonically decreasing): A function f(n) is monotonically decreasing if $m \le n$ implies $f(m) \ge f(n)$.

(strictly decreasing): A function f(n) is strictly decreasing if $m < n$ implies $f(m) > f(n)$.

Floors and ceilings

$$x - 1 < \lfloor x \rfloor \le x \le \lceil x \rceil < x + 1.$$
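The floor and ceiling bounds are easy to spot-check with exact rational arithmetic. The sketch below uses Python's `math.floor` and `math.ceil`; the helper name and the sample values are arbitrary choices. It also checks the related fact, not stated in the notes, that $\lfloor n/2 \rfloor + \lceil n/2 \rceil = n$ for integer $n$, which is why splitting a range at a floored midpoint loses no elements.

```python
import math
from fractions import Fraction


def floor_ceiling_bounds_hold(x):
    """Check x - 1 < floor(x) <= x <= ceil(x) < x + 1 for a single value x."""
    return x - 1 < math.floor(x) <= x <= math.ceil(x) < x + 1


samples = [Fraction(7, 2), Fraction(-7, 2), Fraction(5), Fraction(1, 3)]
print(all(floor_ceiling_bounds_hold(x) for x in samples))

# For an integer n, n // 2 is floor(n/2) and -((-n) // 2) is ceil(n/2).
print(all(n // 2 + -((-n) // 2) == n for n in range(100)))
```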

A polynomial in $n$ of degree $d$ is a function of the form

$$p(n) = \sum_{i=0}^{d} a_i n^i,$$

where $a_0, a_1, \ldots, a_d$ are called coefficients.

$$a^0 = 1, \quad a^1 = a, \quad a^{-1} = \frac{1}{a}, \quad (a^m)^n = a^{mn} = (a^n)^m, \quad a^m a^n = a^{m+n}.$$

$$\lim_{n \to \infty} \frac{n^b}{a^n} = 0$$

for all constants $a > 1$ and $b$. That is, any exponential function with a base greater than 1 grows faster than any polynomial.
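The decay of $n^b / a^n$ can be observed numerically. In the sketch below the values $a = 2$ and $b = 10$ are arbitrary illustrative choices, and `poly_over_exp` is a hypothetical helper name. The ratio can rise for small $n$ before it falls, so the sample points start at $n = 10$ and spread out quickly.

```python
def poly_over_exp(n, a, b):
    """Return n**b / a**n; with integer arguments Python computes the powers exactly."""
    return n ** b / a ** n


ratios = [poly_over_exp(n, 2, 10) for n in (10, 50, 100, 200, 400)]
for n, ratio in zip((10, 50, 100, 200, 400), ratios):
    print(n, ratio)
```

The printed ratios shrink rapidly toward zero, which is consistent with the limit even though a finite table is not a proof.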

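$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots = \sum_{i=0}^{\infty} \frac{x^i}{i!}$$

[How is it computed? Hint: use Taylor's expansion.]

$1 + x \le e^x \le 1 + x + x^2$ when $|x| \le 1$. [Can you show it?]

$$\lim_{n \to \infty} \left(1 + \frac{x}{n}\right)^n = e^x$$

[Can you show it? Hint: use Taylor's expansion.]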
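Both facts about $e^x$ can be explored numerically before proving them. The following Python sketch is only an experiment: the value $x = 1.5$, the grid of test points, and the helper name `compound` are arbitrary assumptions.

```python
import math


def compound(x, n):
    """Return (1 + x/n)**n, which should approach e**x as n grows."""
    return (1 + x / n) ** n


x = 1.5
errors = [abs(compound(x, n) - math.exp(x)) for n in (10, 100, 1000, 10000)]
print(errors)   # the gap to e**x shrinks as n increases

# Spot-check 1 + x <= e**x <= 1 + x + x**2 on a grid of points with |x| <= 1.
grid = [k / 10 for k in range(-10, 11)]
print(all(1 + v <= math.exp(v) <= 1 + v + v * v for v in grid))
```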

Logarithms

$\lg n = \log_2 n$

$\ln n = \log_e n$

$\lg^k n = (\lg n)^k$

$\lg \lg n = \lg(\lg n)$

$a = b^{\log_b a}$

$\log_c (ab) = \log_c a + \log_c b$

$\log_b a^n = n \log_b a$

$\log_b a = \dfrac{\log_c a}{\log_c b}$

$\log_b (1/a) = -\log_b a$

$\log_b a = \dfrac{1}{\log_a b}$

$a^{\log_b n} = n^{\log_b a}$
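The logarithm identities can be spot-checked in floating point with Python's `math.log(x, base)`. The values of `a`, `b`, `c`, and `n` below are arbitrary positive numbers chosen for illustration, and `math.isclose` absorbs rounding error. The sketch tests a single set of values and proves nothing in general.

```python
import math

a, b, c, n = 8.0, 2.0, 10.0, 5.0

checks = {
    "a = b^(log_b a)": math.isclose(a, b ** math.log(a, b)),
    "log_c(ab) = log_c a + log_c b": math.isclose(
        math.log(a * b, c), math.log(a, c) + math.log(b, c)),
    "log_b(a^n) = n log_b a": math.isclose(math.log(a ** n, b), n * math.log(a, b)),
    "change of base": math.isclose(math.log(a, b), math.log(a, c) / math.log(b, c)),
    "log_b(1/a) = -log_b a": math.isclose(math.log(1 / a, b), -math.log(a, b)),
    "log_b a = 1 / log_a b": math.isclose(math.log(a, b), 1 / math.log(b, a)),
    "a^(log_b n) = n^(log_b a)": math.isclose(a ** math.log(n, b), n ** math.log(a, b)),
}
for name, ok in checks.items():
    print(name, ok)
```

The last identity is the one that lets an expression such as $a^{\log_b n}$ be rewritten as a power of $n$.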

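$$\ln(1 + x) = x - \frac{x^2}{2} + \frac{x^3}{3} - \frac{x^4}{4} + \frac{x^5}{5} - \cdots,$$

when $|x| < 1$. [Can you prove it?]

$$\frac{x}{1 + x} \le \ln(1 + x) \le x$$

for $x > -1$.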

Factorials

The following is Stirling's approximation:

$$n! = \sqrt{2 \pi n} \left(\frac{n}{e}\right)^n \left(1 + \Theta\!\left(\frac{1}{n}\right)\right)$$

$n! = o(n^n)$

$n! = \omega(2^n)$

$\lg(n!) = \Theta(n \lg n)$
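Stirling's approximation can be compared against exact factorials. The sketch below drops the $(1 + \Theta(1/n))$ factor and prints the ratio of $n!$ to the remaining expression. The function name `stirling` and the sample sizes are illustrative choices.

```python
import math


def stirling(n):
    """Leading term of Stirling's approximation: sqrt(2*pi*n) * (n/e)**n."""
    return math.sqrt(2 * math.pi * n) * (n / math.e) ** n


for n in (1, 5, 10, 50, 100):
    ratio = math.factorial(n) / stirling(n)
    # ratio - 1 plays the role of the Theta(1/n) term, so n * (ratio - 1) stays bounded
    print(n, ratio, n * (ratio - 1))
```

The ratio stays slightly above 1 and approaches 1 as $n$ grows, while $n$ times the excess stays nearly constant, which is what a relative error of order $1/n$ looks like.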

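Fibonacci numbers:

$F_0 = 0$, $F_1 = 1$, $F_i = F_{i-1} + F_{i-2}$.

$$F_i = \frac{\phi^i - \hat{\phi}^i}{\sqrt{5}}, \quad \text{where } \phi = \frac{1 + \sqrt{5}}{2} \text{ (golden ratio)}, \quad \hat{\phi} = \frac{1 - \sqrt{5}}{2}.$$

The Fibonacci numbers grow exponentially. (Why?)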
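The closed form can be compared with the recurrence for small indices. The following Python sketch uses floating-point arithmetic, so it is only trustworthy for modest values of $i$ (the range below stops at 40). The names `fib_iterative` and `fib_closed_form` are illustrative.

```python
import math

SQRT5 = math.sqrt(5)
PHI = (1 + SQRT5) / 2        # golden ratio
PHI_HAT = (1 - SQRT5) / 2    # its conjugate, with absolute value below 1


def fib_iterative(i):
    """Compute F_i from the recurrence F_0 = 0, F_1 = 1, F_i = F_{i-1} + F_{i-2}."""
    a, b = 0, 1
    for _ in range(i):
        a, b = b, a + b
    return a


def fib_closed_form(i):
    """Compute F_i from (PHI**i - PHI_HAT**i) / sqrt(5), rounded to absorb float error."""
    return round((PHI ** i - PHI_HAT ** i) / SQRT5)


print(all(fib_iterative(i) == fib_closed_form(i) for i in range(40)))
print([fib_iterative(i) for i in range(10)])
```

Because the conjugate term has absolute value below 1, its powers shrink toward zero and the $\phi^i$ term dominates, which hints at the exponential growth.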

Exercises

2.2-5.