Algorithms Homework – Fall 2000

1.2-2 Consider linear search again (see Exercise 1.1-3). How many elements of the

input sequence need to be checked on the average, assuming that the element being

searched for is equally likely to be any element in the array? How about in the

worst case?

What are the average-case and worst-case running times of linear search in Θ-notation? Justify

your answers.

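Average case: about n/2 elements need to be checked (exactly (n + 1)/2, since finding the element at position i takes i checks and every position is equally likely).

Worst case: n elements need to be checked.

Both the average-case and the worst-case running times are Θ(n).

Justification: Let C1 = ¼, C2 = 2, and n0 = 2. Then for all n ≥ n0:

Since ¼n < ½n, C1 = ¼ works for the average-case lower bound.

Since 2n > n, C2 = 2 works for the worst-case upper bound.

0 ≤ ¼n ≤ average ≤ worst ≤ 2n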
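
The average count can be checked with a small experiment. The following is a minimal Python sketch, not part of the original solution; the function names `linear_search_checks` and `average_checks` are made up for illustration, and the array is assumed to hold distinct values.

```python
def linear_search_checks(a, v):
    """Return how many elements are examined before v is found.

    If v is absent, every element is examined, so len(a) is returned.
    """
    for checks, item in enumerate(a, start=1):
        if item == v:
            return checks
    return len(a)


def average_checks(n):
    """Average number of checks when each of the n elements is equally likely."""
    a = list(range(n))
    return sum(linear_search_checks(a, v) for v in a) / n
```

For n = 9 the average is (1 + 2 + … + 9)/9 = 5 = (n + 1)/2, which grows linearly in n.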

1.2-3 Consider the problem of determining whether an arbitrary sequence

(x1, x2, …, xn) of n numbers contains repeated occurrences of some number. Show

that this can be done in Θ(n lg n) time.

Sort the elements in Θ(n lg n) time using merge sort. Then go through the array one

element at a time, comparing each element to the next; this can be done in Θ(n) time. If there

are any matches, then there are repeated occurrences. The total time is Θ(n lg n) + Θ(n) = Θ(n lg n).
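
A minimal Python sketch of this sort-then-scan idea follows. It is an illustration only: the name `has_duplicates` is hypothetical, and Python's built-in `sorted` (Timsort, also O(n lg n) in the worst case) stands in for merge sort.

```python
def has_duplicates(xs):
    """Return True if any value occurs more than once in xs."""
    s = sorted(xs)  # O(n lg n); stands in for merge sort
    # After sorting, equal values are adjacent, so one linear pass suffices.
    return any(s[i] == s[i + 1] for i in range(len(s) - 1))
```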

1.2-4 Express the function n^3/1000 – 100n^2 – 100n + 3 in terms of Θ-notation.

Θ(n^3)

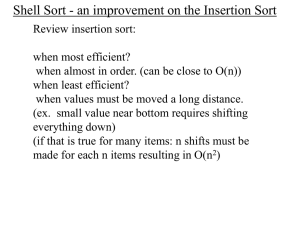

1.3-3 Use mathematical induction to show that the solution of the recurrence

T(n) = 2 if n = 2, or

T(n) = 2T(n/2) + n if n = 2^k, k > 1

is T(n) = n lg n.

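Base case: n = 2. T(2) = 2 and 2 lg 2 = 2, so the claim holds.

Hypothesis: T(n/2) = (n/2) lg (n/2) for n = 2^k, k > 1.

Induction:

T(n) = 2T(n/2) + n

= 2 * ((n/2) lg (n/2)) + n

= 2 * ((n/2) * (lg n – lg 2)) + n

= 2 * ((n/2) * (lg n – 1)) + n

= 2 * ((n/2) lg n – n/2) + n

= n lg n – n + n

= n lg n

Proved: T(n) = n lg n for every n that is a power of 2, n ≥ 2.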
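
As a sanity check on the induction (not a substitute for it), the recurrence can be evaluated directly for small powers of 2. This is a minimal Python sketch; the function name `T` simply mirrors the recurrence, and n is assumed to be an exact power of 2.

```python
def T(n):
    """Evaluate the recurrence T(2) = 2, T(n) = 2T(n/2) + n for n = 2^k."""
    if n == 2:
        return 2
    return 2 * T(n // 2) + n


# For n = 2^k, n lg n is exactly n * k, so integers can be compared directly.
matches = all(T(2 ** k) == (2 ** k) * k for k in range(1, 11))
```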

1.3-4 Insertion sort can be expressed as a recursive procedure as follows. In order

to sort A[1 … n], we recursively sort A[1 … n – 1] and then insert A[n] into the

sorted array A[1 … n – 1]. Write a recurrence for the running time of this recursive

version of insertion sort.

T(n) = Θ(1) if n = 1, or

T(n) = T(n – 1) + Θ(n) if n > 1

1.3-5 Referring back to the searching problem (see Exercise 1.1-3), observe that if

the sequence A is sorted, we can check the midpoint of the sequence against v and

eliminate half of the sequence from further consideration. Binary search is an

algorithm that repeats this procedure, halving the size of the remaining portion of

the sequence each time. Write pseudocode, either iterative or recursive, for binary

search. Argue that the worst-case running time of binary search is Θ(lg n).

BinarySearch (A, i, j, v)

    if i > j

        then return NIL

    c = i + ⌊(j – i)/2⌋

    if v < A[c]

        then return BinarySearch (A, i, c – 1, v)

    else if v > A[c]

        then return BinarySearch (A, c + 1, j, v)

    else return c

The portion of the array under consideration is halved on every call until the element is found or

determined not to exist in the array. This determination occurs by the time the portion

is of size 1. An array of size n can be halved only about lg n times before reaching size 1, and each

call does a constant number of comparisons.

Θ(1) * lg n = Θ(lg n)
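
For comparison, here is an iterative version as a minimal Python sketch. It assumes a 0-indexed list sorted in increasing order; the name `binary_search` and the choice to return `None` for a missing value are illustrative assumptions, not part of the original solution.

```python
def binary_search(a, v):
    """Return an index of v in the sorted list a, or None if v is absent."""
    lo, hi = 0, len(a) - 1
    while lo <= hi:
        mid = lo + (hi - lo) // 2  # midpoint of the remaining range
        if v < a[mid]:
            hi = mid - 1           # discard the upper half
        elif v > a[mid]:
            lo = mid + 1           # discard the lower half
        else:
            return mid
    return None                    # range is empty: v is not present
```

Each pass through the loop at least halves `hi - lo + 1`, so the loop body runs at most about lg n + 1 times.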

1.3-6 Observe that the while loop of lines 5 – 7 of the Insertion-Sort procedure in

Section 1.1 uses a linear search to scan (backward) through the sorted subarray

A[1 … j – 1]. Can we use a binary search (see Exercise 1.3-5) instead to improve the

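overall worst-case running time of insertion sort to Θ(n lg n)?

No. The subarray A[1 … j – 1] is sorted, so binary search can find the insertion position in Θ(lg j) time, but the elements greater than A[j] must still be shifted one position to the right, which takes Θ(j) time in the worst case. The overall worst-case running time remains Θ(n^2).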
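
One way to see where the time goes is to use binary search for the position and count the element moves separately. The sketch below is illustrative only: `binary_insertion_sort` is a hypothetical name, and the standard-library `bisect_right` plays the role of the binary search.

```python
from bisect import bisect_right


def binary_insertion_sort(a):
    """Insertion sort that finds each position by binary search.

    Returns (sorted copy, number of element shifts performed).
    """
    a = list(a)
    shifts = 0
    for j in range(1, len(a)):
        key = a[j]
        # O(lg j) comparisons to locate the slot within the sorted prefix a[0:j].
        pos = bisect_right(a, key, 0, j)
        # Shifting a[pos:j] one slot right still moves j - pos elements.
        shifts += j - pos
        a[pos + 1:j + 1] = a[pos:j]
        a[pos] = key
    return a, shifts
```

On a reverse-sorted input of length n the shift count is n(n – 1)/2, so the running time stays quadratic even though the comparisons drop to O(n lg n).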

1.3-7 Describe a Θ(n lg n) time algorithm that, given a set S of n real numbers and

another real number x, determines whether or not there exist two elements in S

whose sum is exactly x.

Use merge sort to sort the elements in Θ(n lg n) time. Let i be the first index and j the last index.

While i < j, add the element at index i to the element at index j. If the sum equals x, return

true. If the sum is greater than x, decrement j, since the element at j is too large to pair with any

remaining element. If the sum is less than x, increment i, since the element at i is too small to pair

with any remaining element. If i and j meet without a matching sum, return false.

Each step moves i or j by one position, so the scan takes Θ(n) time and the total is

Θ(n lg n) + Θ(n) = Θ(n lg n).
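
The sort-then-scan-from-both-ends approach can be sketched in Python as follows. This is a minimal illustration: the name `has_pair_with_sum` is hypothetical, `sorted` stands in for merge sort, and exact equality is only reliable for integers or exactly representable floats.

```python
def has_pair_with_sum(s, x):
    """Return True if two distinct elements of s sum to exactly x."""
    a = sorted(s)                # O(n lg n); stands in for merge sort
    i, j = 0, len(a) - 1
    while i < j:
        total = a[i] + a[j]
        if total == x:
            return True
        if total < x:
            i += 1               # a[i] is too small for any remaining partner
        else:
            j -= 1               # a[j] is too large for any remaining partner
    return False
```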

1.4-1 Suppose we are comparing implementations of insertion sort and merge sort

on the same machine. For inputs of size n, insertion sort runs in 8n^2 steps, while

merge sort runs in 64n lg n steps. For which values of n does insertion sort beat

merge sort? How might one rewrite the merge sort pseudocode to make it even

faster on small inputs?

Insertion sort beats merge sort for inputs of the following size:

2 ≤ n ≤ 43

(8n^2 < 64n lg n exactly when n < 8 lg n, which holds for n = 2 through n = 43.)

Merge sort can be improved by calling insertion sort on subproblems whose size is within these

bounds.
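
The range can be confirmed by evaluating both step counts directly. A minimal Python sketch, with the hypothetical helper name `insertion_wins` and the step counts 8n^2 and 64n lg n taken from the exercise:

```python
from math import log2


def insertion_wins(n):
    """True if 8n^2 steps (insertion sort) is fewer than 64 n lg n (merge sort)."""
    return 8 * n * n < 64 * n * log2(n)


# Sizes in 1..99 where insertion sort is strictly faster.
winners = [n for n in range(1, 100) if insertion_wins(n)]
```

Under these constants `winners` is the contiguous range 2 through 43.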

1.4-2 What is the smallest value of n such that an algorithm whose running time is

100n^2 runs faster than an algorithm whose running time is 2^n on the same machine?

n = 15 (100 * 15^2 = 22,500 < 2^15 = 32,768, while 100 * 14^2 = 19,600 > 2^14 = 16,384)
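
A direct search gives the same value. This is a small Python sketch; `smallest_n` is an illustrative name, and the search starts at n = 1.

```python
def smallest_n():
    """Smallest n >= 1 for which 100n^2 is strictly less than 2^n."""
    n = 1
    while 100 * n * n >= 2 ** n:
        n += 1
    return n
```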

PROBLEMS

1-2

Insertion sort on small arrays in merge sort

Although merge sort runs in Θ(n lg n) worst-case time and insertion sort runs in

Θ(n^2) worst-case time, the constant factors in insertion sort make it faster for small

n. Thus, it makes sense to use insertion sort within merge sort when subproblems

become sufficiently small. Consider a modification to merge sort in which n/k

sublists of length k are sorted using insertion sort and then merged using the

standard merging mechanism, where k is a value to be determined.

a.

Show that the n/k sublists, each of length k, can be sorted by insertion sort in

Θ(nk) worst-case time.

There are n/k sublists, and insertion sort works in Θ(k^2) worst-case time on k elements.

Therefore the total worst-case time to sort is (n/k) * Θ(k^2) = Θ(nk).

b.

Show that the sublists can be merged in Θ(n lg (n/k)) worst-case time.

Merging the n/k sorted sublists pairwise halves the number of sublists at each level, so there are

lg (n/k) levels of merging. Each level takes Θ(n) time in total, so the worst case for merging is Θ(n lg (n/k)).

c.

Given that the modified algorithm runs in Θ(nk + n lg (n/k)) worst-case time,

what is the largest asymptotic (Θ-notation) value of k as a function of n for which

the modified algorithm has the same asymptotic running time as standard merge

sort?

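We need Θ(nk + n lg (n/k)) = Θ(n lg n).

Θ(nk + n lg (n/k)) = Θ(nk + n lg n – n lg k)

If k grows faster than lg n, the nk term grows faster than n lg n, so k must be O(lg n).

With k = Θ(lg n): Θ(n lg n + n lg n – n lg lg n) = Θ(n lg n), since n lg lg n is of lower order than n lg n.

So the largest value is k = Θ(lg n).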

d.

How should k be chosen in practice?

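In practice, k should be the largest sublist length for which insertion sort is still faster than merge sort on the machine in question. This depends on the constant factors, so it is best found by timing both sorts on small inputs. (With k = 1, insertion sort does no work and the algorithm is plain merge sort.)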
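
The modified algorithm can be sketched in Python as below. This is an illustration under stated assumptions, not the textbook procedure: the names `insertion_sort`, `merge`, and `hybrid_merge_sort` are hypothetical, the default cutoff `k=16` is an arbitrary placeholder to be tuned by measurement, and the recursion splits top-down until a piece has at most k elements.

```python
def insertion_sort(a):
    """Sort the list a in place."""
    for j in range(1, len(a)):
        key = a[j]
        i = j - 1
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key


def merge(left, right):
    """Merge two sorted lists into one sorted list."""
    out = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            out.append(left[i])
            i += 1
        else:
            out.append(right[j])
            j += 1
    out.extend(left[i:])
    out.extend(right[j:])
    return out


def hybrid_merge_sort(a, k=16):
    """Merge sort that hands pieces of length <= k (k >= 1) to insertion sort."""
    if len(a) <= k:
        piece = list(a)
        insertion_sort(piece)
        return piece
    mid = len(a) // 2
    return merge(hybrid_merge_sort(a[:mid], k), hybrid_merge_sort(a[mid:], k))
```

Timing `hybrid_merge_sort` for several values of k on representative inputs is one way to pick the cutoff.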

1-3

Inversions

Let A[1…n] be an array of n distinct numbers. If i < j and A[i] > A[j], then the pair

(i, j) is called an inversion of A.

a.

List the five inversions of the array {2, 3, 8, 6, 1}.

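Written as pairs of values: (2, 1), (3, 1), (8, 1), (6, 1), and (8, 6).

Written as index pairs (i, j), as in the definition: (1, 5), (2, 5), (3, 5), (4, 5), and (3, 4).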

b.

What array with elements from the set {1, 2, …, n} has the most inversions?

How many does it have?

An array in reverse sorted order, {n, n – 1, …, 1}, has the most inversions. It has

Σ_{i=1}^{n–1} (n – i) = n(n – 1)/2 inversions.
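
Both answers can be checked by brute force. The following Python sketch uses a hypothetical function `inversions` that tests every pair in Θ(n^2) time and reports 1-based index pairs to match the definition.

```python
def inversions(a):
    """Return all 1-based index pairs (i, j) with i < j and a[i] > a[j]."""
    n = len(a)
    return [(i + 1, j + 1)
            for i in range(n)
            for j in range(i + 1, n)
            if a[i] > a[j]]


# A reverse-sorted array of length 6 has 6 * 5 / 2 = 15 inversions.
reverse_count = len(inversions(list(range(6, 0, -1))))
```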

c.

What is the relationship between the running time of insertion sort and the

number of inversions in the input array? Justify your answer.

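The running time of insertion sort grows linearly with the number of inversions in the input array:

each iteration of the inner while loop shifts one element and removes exactly one inversion, so with d

inversions the running time is Θ(n + d). The more inversions there are, the more time insertion sort takes.

The best case is increasing sorted order (0 inversions, Θ(n) time); the worst case is decreasing sorted order (n(n – 1)/2 inversions, Θ(n^2) time).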
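
The link between shifts and inversions can be observed by instrumenting insertion sort. A minimal Python sketch, with the hypothetical name `insertion_sort_shifts`; it sorts a copy and returns only the number of shifts made by the inner loop.

```python
def insertion_sort_shifts(a):
    """Run insertion sort on a copy of a and return the number of element shifts."""
    a = list(a)
    shifts = 0
    for j in range(1, len(a)):
        key = a[j]
        i = j - 1
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]   # each shift removes exactly one inversion
            i -= 1
            shifts += 1
        a[i + 1] = key
    return shifts
```

For {2, 3, 8, 6, 1} the count is 5, the number of inversions listed in part a.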

d.

Give an algorithm that determines the number of inversions in any

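permutation on n elements in Θ(n lg n) worst-case time. (Hint: Modify merge sort).

Put a counter in merge sort. During merging, whenever an element from the right half is copied before the remaining elements of the left half, add the number of elements remaining in the left half to the counter, since each of them forms an inversion with that element. The counter's final value is the number of inversions, and the extra work per merge step is constant, so the running time stays Θ(n lg n).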
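
A minimal Python sketch of a counting merge sort follows. The name `count_inversions` and the choice to return both the sorted copy and the count are illustrative assumptions.

```python
def count_inversions(a):
    """Return (sorted copy of a, number of inversions in a)."""
    if len(a) <= 1:
        return list(a), 0
    mid = len(a) // 2
    left, left_count = count_inversions(a[:mid])
    right, right_count = count_inversions(a[mid:])
    merged = []
    count = left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            # right[j] precedes every remaining left element: one inversion each.
            merged.append(right[j])
            j += 1
            count += len(left) - i
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, count
```

On {2, 3, 8, 6, 1} this returns the sorted array together with a count of 5.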