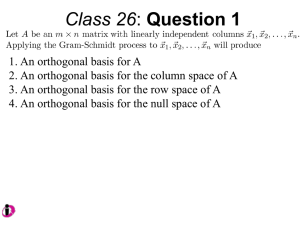

Vectors and Vector Operations


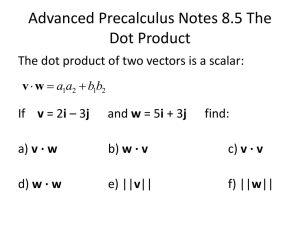

7.2 Projection on a Plane

In the previous section we looked at projections on a line. In this section we extend this to projections on planes.

Problem. Given a plane $P$ through the origin and a point $v$, find the point $w$ on $P$ closest to $v$.

[Figure: a plane $P$ through the origin, a point $v$, and the point $w$ on $P$ closest to $v$.]

A plane through the origin can be described as all linear combinations of any two vectors lying in the plane that do not lie on the same line through the origin. So the problem can be restated as follows.

Problem. Given $u_1$, $u_2$ and $v$, find numbers $c_1$ and $c_2$ so that

$$|v - (c_1u_1 + c_2u_2)| \le |v - (a_1u_1 + a_2u_2)|$$

for all numbers $a_1$ and $a_2$.

As in the case of the same problem for a line instead of a plane, it turns out that the vector from $w$ to $v$ is perpendicular to $P$, and $w$ is called the orthogonal projection of $v$ on $P$.

Proposition 1. Let $u_1$, $u_2$ and $v$ be given vectors. If $c_1$ and $c_2$ are numbers such that $v - (c_1u_1 + c_2u_2)$ is orthogonal to $u_1$ and $u_2$, then

$$|v - (c_1u_1 + c_2u_2)| \le |v - (a_1u_1 + a_2u_2)|$$

for all numbers $a_1$ and $a_2$.

Proof. We apply the Pythagorean theorem, (1) in the previous section, with $y = v - (c_1u_1 + c_2u_2)$ and $x = (a_1u_1 + a_2u_2) - (c_1u_1 + c_2u_2)$. By hypothesis $y$ is orthogonal to $u_1$ and $u_2$, i.e. $y \cdot u_1 = 0$ and $y \cdot u_2 = 0$.

[Figure: the right triangle with sides $x$, $y$ and $y - x$.]

One has

$$y \cdot x = y \cdot \big((a_1 - c_1)u_1 + (a_2 - c_2)u_2\big) = (a_1 - c_1)(y \cdot u_1) + (a_2 - c_2)(y \cdot u_2) = 0$$

so $x$ and $y$ are orthogonal. Then $y - x = v - (a_1u_1 + a_2u_2)$ and (1) of the previous section becomes

$$|v - (a_1u_1 + a_2u_2)|^2 = |(a_1u_1 + a_2u_2) - (c_1u_1 + c_2u_2)|^2 + |v - (c_1u_1 + c_2u_2)|^2$$

The right side is greater than or equal to $|v - (c_1u_1 + c_2u_2)|^2$, so taking square roots we get $|v - (c_1u_1 + c_2u_2)| \le |v - (a_1u_1 + a_2u_2)|$. //

It turns out that finding $c_1$ and $c_2$ so that $v - (c_1u_1 + c_2u_2)$ is orthogonal to $u_1$ and $u_2$ is equivalent to solving a system of equations.

Proposition 2. Let $u_1$, $u_2$ and $v$ be given vectors. Then $v - (c_1u_1 + c_2u_2)$ is orthogonal to $u_1$ and $u_2$ if and only if

$$\begin{aligned}(u_1 \cdot u_1)\,c_1 + (u_1 \cdot u_2)\,c_2 &= u_1 \cdot v\\ (u_2 \cdot u_1)\,c_1 + (u_2 \cdot u_2)\,c_2 &= u_2 \cdot v\end{aligned}\tag{1}$$

Proof.
$v - (c_1u_1 + c_2u_2)$ is orthogonal to $u_1$ $\iff$ $u_1 \cdot (v - (c_1u_1 + c_2u_2)) = 0$ $\iff$ $u_1 \cdot v - (c_1(u_1 \cdot u_1) + c_2(u_1 \cdot u_2)) = 0$ $\iff$ the first equation in (1) holds. Similarly, $v - (c_1u_1 + c_2u_2)$ is orthogonal to $u_2$ $\iff$ the second equation in (1) holds. //

Example 1. Find the orthogonal projection of $\begin{pmatrix}0\\0\\3\end{pmatrix}$ on the plane of all linear combinations of $\begin{pmatrix}1\\-1\\1\end{pmatrix}$ and $\begin{pmatrix}-1\\-1\\1\end{pmatrix}$.

In this case

$$u_1 = \begin{pmatrix}1\\-1\\1\end{pmatrix},\qquad u_2 = \begin{pmatrix}-1\\-1\\1\end{pmatrix},\qquad v = \begin{pmatrix}0\\0\\3\end{pmatrix}.$$

One has $u_1 \cdot u_1 = 3$, $u_1 \cdot u_2 = 1$, $u_2 \cdot u_2 = 3$, $u_1 \cdot v = 3$ and $u_2 \cdot v = 3$, so the equations (1) become

$$\begin{aligned}3c_1 + c_2 &= 3\\ c_1 + 3c_2 &= 3\end{aligned}$$

Multiply the first equation by three and subtract the second equation to get $8c_1 = 6$. So $c_1 = 3/4$. Substitute into the first equation and solve for $c_2$ to get $c_2 = 3/4$. So

$$w = c_1u_1 + c_2u_2 = \tfrac34\begin{pmatrix}1\\-1\\1\end{pmatrix} + \tfrac34\begin{pmatrix}-1\\-1\\1\end{pmatrix} = \begin{pmatrix}0\\-3/2\\3/2\end{pmatrix}.$$

Another Point of View.

Suppose we wanted to find $c_1$ and $c_2$ to satisfy all three of the equations

$$\begin{aligned}c_1 - c_2 &= 0\\ -c_1 - c_2 &= 0\\ c_1 + c_2 &= 3\end{aligned}\tag{2}$$

Clearly we can't do it: the first two equations force $c_1 = c_2 = 0$, which contradicts the third. However, for a given pair of values of $c_1$ and $c_2$ let

$$\begin{aligned}e_1 &= 0 - (c_1 - c_2) = \text{error in the first equation}\\ e_2 &= 0 - (-c_1 - c_2) = \text{error in the second equation}\\ e_3 &= 3 - (c_1 + c_2) = \text{error in the third equation}\end{aligned}$$

$$S = e_1^2 + e_2^2 + e_3^2 = (0 - (c_1 - c_2))^2 + (0 - (-c_1 - c_2))^2 + (3 - (c_1 + c_2))^2$$

So $S$ is the sum of the squares of the errors in the equations (2) for given values of $c_1$ and $c_2$. It is a measure of how far $c_1$ and $c_2$ are from a solution of (2). As a substitute for an exact solution of (2) we might ask to find $c_1$ and $c_2$ to minimize $S$. Such a pair $c_1$, $c_2$ is called a least squares solution to (2). Note that $S$ can be written as

$$S = \left|\begin{pmatrix}0 - (c_1 - c_2)\\ 0 - (-c_1 - c_2)\\ 3 - (c_1 + c_2)\end{pmatrix}\right|^2 = \left|\begin{pmatrix}0\\0\\3\end{pmatrix} - \left(c_1\begin{pmatrix}1\\-1\\1\end{pmatrix} + c_2\begin{pmatrix}-1\\-1\\1\end{pmatrix}\right)\right|^2 = |v - (c_1u_1 + c_2u_2)|^2$$

So finding $c_1$ and $c_2$ to minimize $S$ is just what we did to find the orthogonal projection of $v$ on the plane through $u_1$ and $u_2$. We saw that $c_1 = 3/4$ and $c_2 = 3/4$.

An Equivalent Way of Finding the Orthogonal Projection of v on P.

When one writes the equations (1) in vector form and uses the fact that $x \cdot y = x^Ty$ one gets

$$\begin{pmatrix}u_1^Tu_1 & u_1^Tu_2\\ u_2^Tu_1 & u_2^Tu_2\end{pmatrix}\begin{pmatrix}c_1\\c_2\end{pmatrix} = \begin{pmatrix}u_1^Tv\\ u_2^Tv\end{pmatrix}\tag{3}$$

Let $A$ be the matrix whose columns are $u_1$ and $u_2$. Then $A^T$ is the matrix whose rows are $u_1^T$ and $u_2^T$. Then

$$A^TA = \begin{pmatrix}u_1^Tu_1 & u_1^Tu_2\\ u_2^Tu_1 & u_2^Tu_2\end{pmatrix},\qquad A^Tv = \begin{pmatrix}u_1^Tv\\ u_2^Tv\end{pmatrix}$$

since the entries of $A^TA$ are rows of $A^T$ times the columns of $A$ and the entries of $A^Tv$ are rows of $A^T$ times $v$. So (3) becomes

$$A^TA\begin{pmatrix}c_1\\c_2\end{pmatrix} = A^Tv\tag{4}$$

If $u_1$ and $u_2$ are linearly independent, then $A^TA$ is invertible and (4) can be written as

$$\begin{pmatrix}c_1\\c_2\end{pmatrix} = (A^TA)^{-1}A^Tv$$

The orthogonal projection $w$ of $v$ on the plane of all linear combinations of $u_1$ and $u_2$ is $w = c_1u_1 + c_2u_2 = A\begin{pmatrix}c_1\\c_2\end{pmatrix}$. So

$$w = A(A^TA)^{-1}A^Tv = Qv \qquad\text{where}\qquad Q = A(A^TA)^{-1}A^T$$

$Q$ is called the orthogonal projection on the plane of all linear combinations of $u_1$ and $u_2$.

Example 2. Let $P$ be the plane of all linear combinations of $\begin{pmatrix}1\\-1\\1\end{pmatrix}$ and $\begin{pmatrix}-1\\-1\\1\end{pmatrix}$. Find the orthogonal projection $Q$ on $P$ and use it to find the closest point of $P$ to $\begin{pmatrix}0\\0\\3\end{pmatrix}$.

As in Example 1, one has $u_1 = \begin{pmatrix}1\\-1\\1\end{pmatrix}$, $u_2 = \begin{pmatrix}-1\\-1\\1\end{pmatrix}$ and $v = \begin{pmatrix}0\\0\\3\end{pmatrix}$. So

$$A = \begin{pmatrix}1 & -1\\ -1 & -1\\ 1 & 1\end{pmatrix},\qquad A^T = \begin{pmatrix}1 & -1 & 1\\ -1 & -1 & 1\end{pmatrix},\qquad A^TA = \begin{pmatrix}3 & 1\\ 1 & 3\end{pmatrix}.$$

It is not hard to see that $(A^TA)^{-1} = \dfrac18\begin{pmatrix}3 & -1\\ -1 & 3\end{pmatrix}$. Then

$$Q = A(A^TA)^{-1}A^T = \frac18\begin{pmatrix}1 & -1\\ -1 & -1\\ 1 & 1\end{pmatrix}\begin{pmatrix}3 & -1\\ -1 & 3\end{pmatrix}\begin{pmatrix}1 & -1 & 1\\ -1 & -1 & 1\end{pmatrix} = \frac14\begin{pmatrix}1 & -1\\ -1 & -1\\ 1 & 1\end{pmatrix}\begin{pmatrix}2 & -1 & 1\\ -2 & -1 & 1\end{pmatrix} = \frac12\begin{pmatrix}2 & 0 & 0\\ 0 & 1 & -1\\ 0 & -1 & 1\end{pmatrix}$$

$$w = Qv = \frac12\begin{pmatrix}2 & 0 & 0\\ 0 & 1 & -1\\ 0 & -1 & 1\end{pmatrix}\begin{pmatrix}0\\0\\3\end{pmatrix} = \begin{pmatrix}0\\-3/2\\3/2\end{pmatrix}$$

Least Squares Curve Fitting.

Just as with the case of finding the orthogonal projection of a point on a line, the process of finding the orthogonal projection of a point on a plane has applications to curve fitting. We illustrate this with an example.

Example 3. (taken from Linear Algebra with Applications, 3rd edition, by Gareth Williams, p. 380) A study was made of traffic fatalities and the age of the driver. The table below shows the data that was collected on the number $y$ of fatalities and the age $x$ of the driver.

| Age of driver, x | Number of fatalities, y |
|---|---|
| 20 | 101 |
| 25 | 115 |
| 30 | 92 |
| 35 | 64 |

[Figure: plot of the data, with age $x$ on the horizontal axis and number of fatalities $y$ on the vertical axis.]

Often we would like to summarize a set of data values by a linear equation $y = mx + b$. Usually there will be no linear equation that fits the data exactly. Instead we find the linear equation that fits the data best in the sense of least squares.

In general we have a set $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ of data values. In our example $n = 4$. We want to find a linear function $y = mx + b$ that describes the data best in some sense. We use the following measure of how well a particular linear function $y = mx + b$ fits the data. For each pair of data values $(x_j, y_j)$ we measure the vertical distance from $(x_j, y_j)$ to the line $y = mx + b$. This signed distance is $y_j - (mx_j + b)$. We square this distance: $(y_j - (mx_j + b))^2$. This squared value is a measure of how well the line $y = mx + b$ fits the pair of data values $(x_j, y_j)$. For example, for the first pair $(20, 101)$ of data values in the above example this squared value would be $(101 - (20m + b))^2$.

We add up all these squared values.

$$S = \sum_{j=1}^{n} (y_j - (mx_j + b))^2$$

$S$ is a measure of how well the line $y = mx + b$ fits the set $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$ of data values; the smaller $S$, the better the fit. We want to find $m$ and $b$ so as to minimize $S$. The resulting line is called the line that fits the data best in the sense of least squares.
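The following is a minimal Python sketch of how the system (1) from Proposition 2 could be solved numerically; it is an illustration, not part of the original notes. The helper names `dot`, `normal_equation_coefficients` and `project` are hypothetical. The sketch assumes that the line-fitting sum $S$ is the squared distance from the vector of $y$ values to $m$ times the vector of $x$ values plus $b$ times a vector of ones, so that the same 2-by-2 system applies with $u_1$ the ages and $u_2$ all ones. The fitted $m$ and $b$ it prints are computed here and do not appear in the text above.

```python
from fractions import Fraction


def dot(x, y):
    """Dot product of two equal-length sequences, in exact arithmetic."""
    return sum(Fraction(a) * Fraction(b) for a, b in zip(x, y))


def normal_equation_coefficients(u1, u2, v):
    """Solve the 2x2 system (1) for c1, c2 by Cramer's rule."""
    a11, a12, a22 = dot(u1, u1), dot(u1, u2), dot(u2, u2)
    b1, b2 = dot(u1, v), dot(u2, v)
    det = a11 * a22 - a12 * a12
    if det == 0:
        # u1 and u2 lie on one line through the origin, so they do not span a plane.
        raise ValueError("u1 and u2 are linearly dependent")
    c1 = (b1 * a22 - a12 * b2) / det
    c2 = (a11 * b2 - a12 * b1) / det
    return c1, c2


def project(u1, u2, v):
    """Orthogonal projection w = c1*u1 + c2*u2 of v on the plane spanned by u1, u2."""
    c1, c2 = normal_equation_coefficients(u1, u2, v)
    return [c1 * a + c2 * b for a, b in zip(u1, u2)]


# Examples 1 and 2: projection of v on the plane spanned by u1 and u2.
u1, u2, v = [1, -1, 1], [-1, -1, 1], [0, 0, 3]
c1, c2 = normal_equation_coefficients(u1, u2, v)
w = project(u1, u2, v)
print(c1, c2)  # 3/4 3/4
print(w)       # the vector (0, -3/2, 3/2) as Fractions

# Example 3: fit y = m*x + b (assumption: u1 = ages, u2 = vector of ones).
ages = [20, 25, 30, 35]
fatalities = [101, 115, 92, 64]
ones = [1] * len(ages)
m, b = normal_equation_coefficients(ages, ones, fatalities)
print(float(m), float(b))  # -2.68 166.7
```

Exact fractions are used so the output can be compared directly with the hand computation in Example 1; for larger data sets a floating-point linear algebra routine would be the more usual choice.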