Notes11_16

advertisement

Algebraic representation of extreme points

If there is an optimal solution for an LP problem, we know (from the Extreme Point theorem) that it must occur

at an extreme point of the feasible region. Since the graphic method of solution won’t take us past three-variable

problems, we need an algebraic method for representing and working with the extreme points. In the best of all

possible worlds, we could obtain a test for boundedness, or a representation for the direction vectors, at the same

time.

The requirements of the standard form are designed to make this possible.

Standard form requires that

We seek to minimize the objective function

All constraints have positive right – hand sides.

All constraints are equality constraints – slack variables (how much our left–side value is below the allowed

maximum) are added in “≤” constraints and surplus variables (how much our left-side value is above the

required minimum) are subtracted in “≥” constraints.

All variables (including slack/surplus) are required to be non-negative.

The standard form of a problem will have more variables than the original form – so it would be harder to graph

(but is easier to manipulate algebraically).

The development of the ideas occurs in two steps:

I: Extreme points of the feasible region in the “natural” form (inequality constraints) correspond directly

to extreme points of the feasible region for the standard form (all equality constraints):

[See pages 92 - 97 in the text]

Discussion is in terms of constraints in “≤” form – details of general case (with any or all of ≤, =, and ≥ forms)

are notationally more involved but conceptually similar.

We have started with a problem with n variables and m constraints (assuming all variables have been converted

to “nonnegative”)

Minimize f (X) CT X subject to

AX b

X0

Here X is a variable n-vector, b is a constant m-vector and A is an m x n matrix.

Putting this in standard form gives:

ˆ)C

ˆ TX

ˆ

Minimize fˆ (X

subject to

ˆ b

Aˆ X

ˆ 0

X

Here we have added slack variables (s1, s2, . . ., sm) – (one for each constraint) with

s1

s2

si bi ai1x1 ai2 x2 ain xn so we have an m – vector of slack variables S b AX .

sm

ˆ X X .

ˆ are the entries of X followed by the slack variables- that is X

The entries of X

S b AX

Algebraic Representation of Extreme points p.2

ˆ

The matrix A expands A by adding a column for each slack variable (with entry 1 in the appropriate row, 0’s

ˆ A I ) The coefficient vector C

ˆ T expands C T by adding 0 coefficients for the

in the other rows) (so A

m

slack variables (m of them) .

Each inequality ai1x1 ai2 x2 ain xn bi is equivalent to thecorresponding combination

ai1x1 ai2 x2

ain xn si bi , si 0 , so that each vector X in the feasible region for the original problem

ˆ in the feasible region for the standard-form problem, and conversely (each

corresponds to exactly one vector X

ˆ matches up with some feasible X). Although more variables have been added, they are not

feasibleX

independent variables, so the dimension of the feasible region has not been increased.

ˆ X , which represents the extension of our

In addition, the function : Rn Rn m given by (X) X

b AX

problem, preserves convex combinations; that is, ai Xi ai (Xi ) if all ai 0 and ai 1.

(Quick proof

–from p. 94 of text); *With notation shown,

a X

a X

i i

i i (definition of and linearity of matrix multiplication)

ai Xi

iAX i

b a

b A ai Xi

aiXi aiXi (remember that a 1, so a b b )

i

i

aib aiAX i

ai b AX i

Xi

ai

ai Xi , as claimed)

b AX i

ˆ (X) is

Theorem 3.2.2 The vector X is an extreme point of F (the original feasible region) if and only if X

an extreme point of Fˆ (the feasible region for the standard-form problem). [Proof is an exercise – use the

property above and the definition of “extreme point” (it’s based on convexity) – you’ll want a contrapositive

argument]

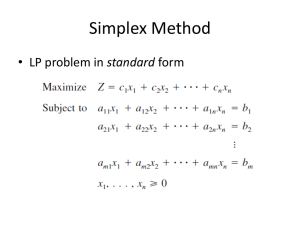

standard-form problem

Thus: solving the

ˆ)C

ˆ TX

ˆ

Minimize fˆ (X

subject to

ˆ b

Aˆ X

ˆ 0

X

is equivalent to solving the original LP problem

Minimize f (X) CT X subject to

AX b

X0

Extreme points of the (standard-form) feasible region correspond to certain square submatrices (“basis

II

ˆ

matrices”) of A

We get an algebraic criterion for identifying extreme points of Fˆ .

ˆ

ˆ

ˆ

Theorem

3.2.4 If X is an extreme point of F , then the columns of A corresponding to the nonzero

ˆ form a linearly independent set (of column vectors).

components of X

ˆ (say A , A , , A ) and if

Conversely, if we let B be any linearly independent set of columns of A

j1

j2

jm

Algebraic Representation of Extreme points p.3

X* is the unique solution to BX = b and if X* ≥ 0, then the vector X given by

x i if i { j1, j2 , , j m }

( i 1,2, ,n m ) is an extreme point of Fˆ .

x i

0 otherwise

The matrix B is calleda basis matrix and the variables corresponding

to the columns chosen to form B are the

basic variables; the others (which are set to 0) are non-basic variables. The vector X obtained by filling in

0’s (appropriately) to “fill in” all the coordinates is a basic solution. If the vector X* also satisfies X ≥ 0 (no

negative entries) it is a basic feasible solution and gives an extreme point of Fˆ - corresponding (just drop

off the slack/surplus variables) to an extreme point of F. The language basis, basic variable, basic solution,

etc. is always used with reference to a particular basis matrix [sometimes it is used to refer to the basis

matrix for the optimal solution – but we aren’t there yet]

ˆ to a modified

The columns of a basis matrix are the columns on which we could pivot to reduce the matrix A

echelon form (allowing the pivots to be out of order – row 4 pivot might to left of row 2 pivot, etc.). The

basic variables for a given basis matrix are the dependent variables (from the pivots) in the solution. We get

a unique solution by setting all the independent variables (the non-basic variables) to 0. For many of the

possible basis matrices, this will make one of the basic variables take a negative

value – then that basic

solution is not a feasible solution [the “nonnegative solutions” criterion is critical, here].

In fact, each basic solution corresponds to an intersection of m of the hyperplanes that bound the feasible

region. If that intersection is on the wrong side of some other bounding hyperplane, then some variable

(decision or slack/surplus variable)will have a negative value, telling us the intersection does not give an

extreme point (because it’s not a feasible solution).

The example on p. 97 implements the following method for searching for an optimal solution under the

assumption that the objective function is bounded below on the feasible region. The intersections of the

boundary hyperplanes (= “lines” in dimension 2) are listed in the column labeled X.

I. Find the basis matrices and the basic solutions. Eliminate the infeasible solutions and evaluate the basic

feasible solutions [table 3.1 on p. 97]

ˆ [there will be C(n+m,m) such sets]

For each set of m columns of A

1. Form the m x m matrix B consisting of these columns [keep track of which columns]

2. Solve the matrix equation BX=b to obtain a vector X* . If the solution is not unique (columns of B not

independent), or if any entries in X* are negative, discard B and X*, take the next set of columns and go to

1

3. From the [unique] solution X* with nonnegative entries, form the vector X . The entries corresponding to

the chosen columns are the entries from X*, other entries are 0.

ˆ T X and put this value and the vector X in a list of candidates.

4. Evaluate C

II. Select the optimal solution

The smallest value obtained is the minimum value. The corresponding vector X is the optimal solution

This isa refinement of the method you ere asked to criticize in question 3 of Activity 9. It eliminates (by taking

advantage of the “nonnegative” requirement) the intersections that don’t give extreme points, but still does

not deal with the boundedness problem. That will require the simplex algorithm.

We need and will use the ideas of basis, basic feasible solution, basic variable to develop the simplex

algorithm and show that it works.