Linear Independence of Vector Functions


Chapter 3 Outline continued

Section 3.4: Determinants

Determinant of a Matrix

The determinant of a square matrix A is a number |A| associated with the matrix.

The determinant of a two-by-two matrix is the product of the diagonal elements minus the product of the off-diagonal elements:

$$\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21}.$$
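A one-line Python version of the formula, with sample entries chosen for illustration (`det2` is a hypothetical name):

```python
def det2(a11, a12, a21, a22):
    """Determinant of the 2x2 matrix [[a11, a12], [a21, a22]]."""
    # product of the diagonal entries minus product of the off-diagonal entries
    return a11 * a22 - a12 * a21

print(det2(3, 1, 4, 2))  # 3*2 - 1*4 = 2
print(det2(1, 2, 2, 4))  # 1*4 - 2*2 = 0
```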

Before calculating the determinant of an arbitrary $n \times n$ matrix, we need some preliminaries. We use a recursive approach: the determinant of an $n \times n$ matrix is expressed in terms of determinants of $(n-1) \times (n-1)$ matrices. The concepts needed to calculate determinants are outlined below.

Associated with the entry $a_{ij}$ of the $n \times n$ matrix $A = [a_{ij}]$ is a matrix of size $(n-1) \times (n-1)$ called the minor of $a_{ij}$ and denoted $M_{ij}$. The minor $M_{ij}$ is obtained from A by deleting the ith row and the jth column. For each minor we calculate a number called the cofactor of $a_{ij}$, $C_{ij} = (-1)^{i+j}|M_{ij}|$. The determinant of an $n \times n$ matrix can be expressed in terms of the cofactors of any particular row or column. (It doesn't matter which one, because they all give the same answer.)

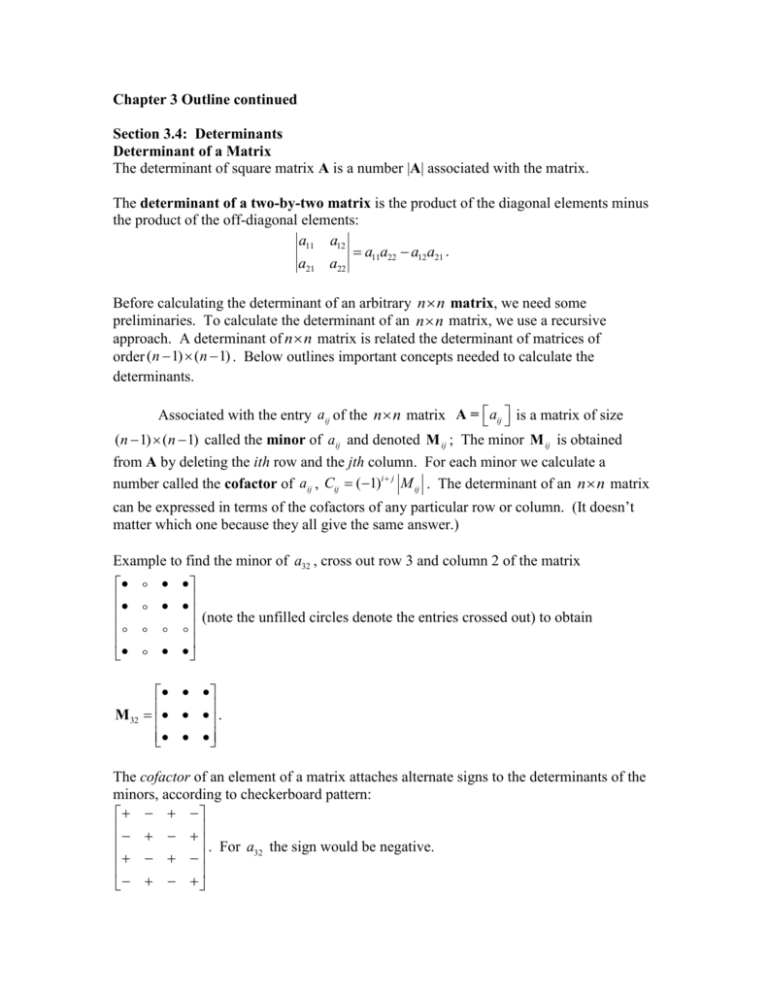

Example: To find the minor of $a_{32}$, cross out row 3 and column 2 of the matrix (the unfilled circles denote the entries crossed out) to obtain $M_{32}$.

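The cofactor of an element of a matrix attaches alternating signs to the determinants of the minors, according to the checkerboard pattern

$$\begin{bmatrix} + & - & + & \cdots \\ - & + & - & \cdots \\ + & - & + & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{bmatrix}.$$

For $a_{32}$ the sign would be negative, since $(-1)^{3+2} = -1$.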
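A small Python sketch of the minor and cofactor definitions, using 1-based indices to match $a_{ij}$; the helper names and the sample matrix are illustrative assumptions:

```python
def minor(A, i, j):
    """M_ij: the submatrix left after deleting row i and column j (1-based, as in the notes)."""
    return [row[:j - 1] + row[j:] for k, row in enumerate(A, start=1) if k != i]

def sign(i, j):
    """Checkerboard sign (-1)^(i+j): + in the top-left corner, alternating from there."""
    return (-1) ** (i + j)

def cofactor_3x3(A, i, j):
    """C_ij = (-1)^(i+j) |M_ij| for a 3x3 matrix A (its minors are 2x2)."""
    M = minor(A, i, j)
    return sign(i, j) * (M[0][0] * M[1][1] - M[0][1] * M[1][0])

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 10]]
print(minor(A, 3, 2))         # [[1, 3], [4, 6]]
print(sign(3, 2))             # -1
print(cofactor_3x3(A, 3, 2))  # -(1*6 - 3*4) = 6
```

For $a_{32}$ this reproduces the negative checkerboard sign.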

Determinant of an $n \times n$ Matrix A: Choose any row or column and expand by the appropriate cofactor formula, using either expansion by the ith row

$$|A| = \sum_{j=1}^{n} a_{ij} C_{ij}$$

or, equivalently, expansion by the jth column:

$$|A| = \sum_{i=1}^{n} a_{ij} C_{ij}.$$

Repeat this process, obtaining smaller matrices at each step. The definition is completed with the $2 \times 2$ case.

NOTE: To shorten the amount of calculation, the strategy is to choose the row or column

for expansion that has the most zero entries!
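The recursive definition translates almost line for line into code. A minimal sketch, assuming exact integer (or `Fraction`) entries and always expanding along the first row; the function name `det` is our own:

```python
def det(A):
    """Determinant by cofactor expansion along the first row (recursive).

    Base cases: 1x1, and the 2x2 formula a11*a22 - a12*a21.
    """
    n = len(A)
    if n == 1:
        return A[0][0]
    if n == 2:
        return A[0][0] * A[1][1] - A[0][1] * A[1][0]
    total = 0
    for j in range(n):                                  # j is 0-based here
        if A[0][j] == 0:
            continue                                    # zero entries contribute nothing
        M = [row[:j] + row[j + 1:] for row in A[1:]]    # minor M_1j
        total += (-1) ** j * A[0][j] * det(M)           # (-1)^(1 + (j+1)) = (-1)^j
    return total

print(det([[1, 2, 3], [4, 5, 6], [7, 8, 10]]))                          # -3
print(det([[2, 0, 0, 0], [1, 3, 0, 0], [4, 5, 6, 0], [7, 8, 9, 1]]))    # 36
```

The `continue` on zero entries is where the NOTE pays off: a first row with many zeros spawns few recursive calls. Because cofactor expansion costs on the order of n! operations, this sketch is meant for hand-sized matrices only.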

Row Operations and Determinants:

Let A be a square matrix.

If two rows of A are interchanged to produce matrix B, then $|B| = -|A|$.

If one row of A is multiplied by a constant k and then added to another row to produce matrix B, then $|B| = |A|$.

If one row of A is multiplied by a constant k to produce matrix B, then $|B| = k|A|$.
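The three rules can be spot-checked numerically. This sketch uses a hand-written $3 \times 3$ cofactor formula and a sample matrix chosen for illustration; one example illustrates the rules but does not prove them:

```python
def det3(A):
    """3x3 determinant by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = A
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]   # |A| = -3

# Rule 1: interchange rows 1 and 2, so the sign flips.
B_swap = [A[1], A[0], A[2]]
# Rule 2: add k times row 1 to row 3 (k = -7), so the determinant is unchanged.
k = -7
B_add = [A[0], A[1], [x + k * y for x, y in zip(A[2], A[0])]]
# Rule 3: multiply row 2 by 5, so the determinant is multiplied by 5.
B_scale = [A[0], [5 * x for x in A[1]], A[2]]

print(det3(A), det3(B_swap), det3(B_add), det3(B_scale))  # -3 3 -3 -15
```

Rule 2 is the one used most in practice: it lets row reduction create zeros without changing the determinant.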

Invertible Matrix Characterization (Using Determinants):

Let A be an $n \times n$ matrix. The following statements are equivalent:

A is an invertible matrix

$|A| \neq 0$
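For instance, with two $2 \times 2$ matrices chosen here for illustration:

$$\begin{vmatrix} 1 & 2 \\ 2 & 4 \end{vmatrix} = (1)(4) - (2)(2) = 0, \qquad \begin{vmatrix} 3 & 1 \\ 4 & 2 \end{vmatrix} = (3)(2) - (1)(4) = 2 \neq 0,$$

so the first matrix is not invertible (its second row is twice its first) and the second is invertible.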

Properties of Determinants

For $n \times n$ matrices A and B:

$|AB| = |A|\,|B|$

$|A^T| = |A|$

If $|A| \neq 0$, then $|A^{-1}| = \dfrac{1}{|A|}$.

If A is an upper triangular or lower triangular matrix, then $|A| = \prod_{i=1}^{n} a_{ii}$, i.e., the determinant is the product of the diagonal elements.

If one row or column of A consists entirely of zeros, then $|A| = 0$.

If two rows or two columns of A are equal, then $|A| = 0$.
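These properties can be spot-checked on small examples. A Python sketch using $2 \times 2$ matrices chosen for illustration and exact `Fraction` arithmetic for the inverse; the helper names are hypothetical, and agreement on one example illustrates the properties but does not prove them:

```python
from fractions import Fraction

def det2(M):
    """2x2 determinant: a11*a22 - a12*a21."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def matmul(A, B):
    """Matrix product AB, with matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def inverse2(M):
    """Inverse of a 2x2 matrix, (1/|M|) [[a22, -a12], [-a21, a11]]; requires |M| != 0."""
    d = Fraction(det2(M))
    if d == 0:
        raise ValueError("|M| = 0, so M is not invertible")
    (a11, a12), (a21, a22) = M
    return [[a22 / d, -a12 / d], [-a21 / d, a11 / d]]

A = [[3, 1], [4, 2]]   # |A| = 2
B = [[1, 4], [2, 6]]   # |B| = -2

print(det2(matmul(A, B)), det2(A) * det2(B))    # -4 -4
print(det2(transpose(A)), det2(A))              # 2 2
print(det2(inverse2(A)), Fraction(1, det2(A)))  # 1/2 1/2
print(det2([[0, 0], [3, 4]]))                   # 0 (a row of zeros)
print(det2([[1, 2], [1, 2]]))                   # 0 (two equal rows)
print(det2([[5, 7], [0, -3]]))                  # -15 (upper triangular: 5 * -3)
```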

Section 3.5 Vector Spaces and Subspaces

Vector Space: A vector space V is a nonempty collection of objects called vectors for which there are defined two operations, vector addition, denoted by $\mathbf{x} + \mathbf{y}$, and scalar multiplication (multiplication by a real constant), denoted by $c\mathbf{x}$, that satisfy the following 10 properties. ($\mathbb{R}$ denotes the set of real numbers.)

Closure Properties:

C1. $\mathbf{x} + \mathbf{y} \in V$ whenever $\mathbf{x}, \mathbf{y} \in V$

C2. $c\mathbf{x} \in V$ whenever $\mathbf{x} \in V$ and $c \in \mathbb{R}$

Addition Properties:

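A1. There is a zero vector, denoted by $\mathbf{0}$, in V such that for all $\mathbf{x} \in V$, $\mathbf{x} + \mathbf{0} = \mathbf{x}$. (Additive Identity)

A2. For every vector $\mathbf{x} \in V$, there is a vector in V called its negative, denoted $-\mathbf{x}$, such that $\mathbf{x} + (-\mathbf{x}) = \mathbf{0}$. (Additive Inverse)

A3. $(\mathbf{x} + \mathbf{y}) + \mathbf{z} = \mathbf{x} + (\mathbf{y} + \mathbf{z})$, for all $\mathbf{x}, \mathbf{y}, \mathbf{z} \in V$. (Associativity)

A4. $\mathbf{x} + \mathbf{y} = \mathbf{y} + \mathbf{x}$, for all $\mathbf{x}, \mathbf{y} \in V$. (Commutativity)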

Scalar Multiplication Properties:

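S1. $1\mathbf{x} = \mathbf{x}$, for all $\mathbf{x} \in V$. (Scalar Multiplicative Identity)

S2. $c(\mathbf{x} + \mathbf{y}) = c\mathbf{x} + c\mathbf{y}$, for all $\mathbf{x}, \mathbf{y} \in V$ and $c \in \mathbb{R}$. (First Distributive Property)

S3. $(c + d)\mathbf{x} = c\mathbf{x} + d\mathbf{x}$, for all $\mathbf{x} \in V$ and $c, d \in \mathbb{R}$. (Second Distributive Property)

S4. $c(d\mathbf{x}) = (cd)\mathbf{x}$, for all $\mathbf{x} \in V$ and $c, d \in \mathbb{R}$. (Associativity)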

Note that one can check the two closure requirements at once by verifying the following property, called closure under linear combination:

C0. $c\mathbf{x} + d\mathbf{y} \in V$ whenever $\mathbf{x}, \mathbf{y} \in V$ and $c, d \in \mathbb{R}$.

Definition

Vector spaces that are important in DEs (as well as other branches of mathematics) are function spaces. Here the “vectors” are functions defined on an interval I, and they are added and multiplied in the usual way:

$$(f + g)(t) = f(t) + g(t) \quad \text{and} \quad (cf)(t) = c\,f(t), \quad \text{for all } t \in I.$$
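Treating functions as vectors can be mirrored directly in code: both operations take functions and return a new function defined pointwise. A minimal sketch; `add` and `scale` are hypothetical helper names:

```python
import math

def add(f, g):
    """Pointwise sum: (f + g)(t) = f(t) + g(t)."""
    return lambda t: f(t) + g(t)

def scale(c, f):
    """Scalar multiple: (c f)(t) = c * f(t)."""
    return lambda t: c * f(t)

# h = sin + 3 cos, built only from the two vector-space operations
h = add(math.sin, scale(3.0, math.cos))
print(h(0.0))  # 3.0
```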

Review Examples 1-6 on pp. 167-168 to see how one would verify whether something is a vector space. Any of these problems would make a good true/false question, e.g.:

True/false: The set V of all solutions of the first-order linear homogeneous DE $y' + p(t)y = 0$, defined on some interval I, where p(t) is a continuous function on I, is a vector space.

Note that the DE must be linear for its solutions to form a vector space, and it must also be homogeneous!
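One way to see why the true/false statement above is true, and why both conditions matter, is to test closure property C0 directly. Suppose $y_1$ and $y_2$ solve $y' + p(t)y = 0$ on I and $c, d \in \mathbb{R}$:

$$\begin{aligned} (cy_1 + dy_2)' + p(t)(cy_1 + dy_2) &= c\big(y_1' + p(t)y_1\big) + d\big(y_2' + p(t)y_2\big) \\ &= c \cdot 0 + d \cdot 0 = 0. \end{aligned}$$

The first step uses the linearity of the DE in y. If instead $y_1, y_2$ solved the nonhomogeneous equation $y' + p(t)y = f(t)$, the same computation gives $(y_1 + y_2)' + p(t)(y_1 + y_2) = 2f(t)$, which equals $f(t)$ only where $f(t) = 0$, so the sum is generally not a solution.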

Prominent Vector Spaces

$\mathbb{R}^2$: space of all ordered pairs (or 2-vectors)

$\mathbb{R}^3$: space of all ordered triples (or 3-vectors)

$\mathbb{R}^n$: space of all ordered n-tuples (or n-vectors)

$\mathbb{P}$: space of all polynomials

$\mathbb{P}_n$: space of all polynomials of degree less than or equal to n

$\mathbb{M}_{mn}$: space of all $m \times n$ matrices

$C(I)$: space of all continuous functions on the interval I (I may be an open or closed interval, finite or infinite).

$C^n(I)$: space of all functions on the interval I having n continuous derivatives (I may be an open or closed interval, finite or infinite).

$\mathbb{C}^n$: space of all ordered n-tuples of complex numbers $(a + bi, c + di, \dots)$

Vector Subspaces

Often a vector space V has a subset W that is itself a vector space; such a W is called a subspace of V. Most of the properties of addition and scalar multiplication are “inherited” from V, so checking whether a subset is a subspace boils down to checking for closure.

Vector Subspace Theorem: A nonempty subset W of a vector space V is a subspace of V if it is closed under addition and scalar multiplication, that is,

i) If $\mathbf{u}, \mathbf{v} \in W$, then $\mathbf{u} + \mathbf{v} \in W$.

ii) If $\mathbf{u} \in W$ and $c \in \mathbb{R}$, then $c\mathbf{u} \in W$.

An easy check for W being nonempty is to see if 0 is in W . If it is not, then W is not a

subspace.

As noted earlier, it is often efficient to check both closure properties at once by verifying closure under “linear combinations”:

If $\mathbf{u}, \mathbf{v} \in W$ and $a, b \in \mathbb{R}$, then $a\mathbf{u} + b\mathbf{v} \in W$.
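A brute-force Python spot check of closure under linear combinations, for two hypothetical subsets of $\mathbb{R}^2$: the line $y = 2x$ and the line $y = 2x + 1$. Finding a counterexample proves a subset is not a subspace; finding none over a finite sample is only evidence, not a proof:

```python
import itertools

def in_W1(v):
    """Hypothetical subset W1 = {(x, y) : y = 2x}, a line through the origin."""
    x, y = v
    return y == 2 * x

def in_W2(v):
    """Hypothetical subset W2 = {(x, y) : y = 2x + 1}, a line missing the origin."""
    x, y = v
    return y == 2 * x + 1

def lin_comb(a, u, b, v):
    """a*u + b*v for 2-vectors stored as tuples."""
    return (a * u[0] + b * v[0], a * u[1] + b * v[1])

def closure_counterexample(member, samples, scalars):
    """Search sampled u, v in W and scalars a, b for a*u + b*v outside W.

    Returns (a, u, b, v) for the first failure found, or None if none is found.
    """
    pts = [p for p in samples if member(p)]
    for u, v in itertools.product(pts, repeat=2):
        for a, b in itertools.product(scalars, repeat=2):
            if not member(lin_comb(a, u, b, v)):
                return (a, u, b, v)
    return None

grid = [(x, y) for x in range(-3, 4) for y in range(-7, 8)]   # integer sample points
scalars = [-2, -1, 0, 1, 3]
print(in_W1((0, 0)), in_W2((0, 0)))                               # True False
print(closure_counterexample(in_W1, grid, scalars))               # None
print(closure_counterexample(in_W2, grid, scalars) is not None)   # True
```

The zero-vector check alone already rules out $W_2$, matching the quick test described above.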

Note: the only subspaces of $\mathbb{R}^2$ are the following:

The zero subspace $\{(0,0)\}$ (the zero vector alone)

Lines passing through the origin

$\mathbb{R}^2$ itself

Since the set consisting of the zero vector alone and the set V itself are always

subspaces, we call them trivial subspaces.

Note: the only subspaces of $\mathbb{R}^3$ are the following:

The zero subspace $\{(0,0,0)\}$ (the zero vector alone)

Lines and planes that contain the origin

$\mathbb{R}^3$ itself

Section 3.6 Basis and Dimension

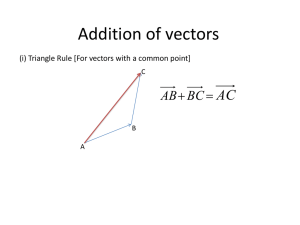

Given vectors $\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n$, we can form a new vector $\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$, where $c_1, c_2, \dots, c_n$ are scalars, called a linear combination of the vectors. The set of all vectors we can make in this way from a given set of vectors is called its span.

Definition

Span: The span of a set $\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ of vectors in a vector space V, denoted $\operatorname{Span}\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$, is the set of all linear combinations of these vectors.

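The vectors

$$\mathbf{e}_1 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad \mathbf{e}_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad \mathbf{e}_3 = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix},$$

which are the columns of the identity matrix $I_3$, are called the standard basis vectors of $\mathbb{R}^3$.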

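Definition

Spanning Sets in $\mathbb{R}^n$: A vector $\mathbf{b}$ in $\mathbb{R}^n$ is in $\operatorname{Span}\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$, where $\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n$ are vectors in $\mathbb{R}^n$, provided that there is at least one solution of the matrix-vector equation $A\mathbf{x} = \mathbf{b}$, where A is the matrix whose column vectors are $\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n$.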
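In practice, "at least one solution of $A\mathbf{x} = \mathbf{b}$" can be tested by row reduction: the system is consistent exactly when appending $\mathbf{b}$ as an extra column does not create a new pivot. A minimal sketch with exact `Fraction` arithmetic; `rank` and `in_span` are hypothetical helper names and the vectors are illustrative:

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots found by Gaussian elimination (exact Fraction arithmetic)."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0                                   # index of the next pivot row
    for c in range(len(M[0])):
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue                        # no pivot in column c
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):      # clear the entries below the pivot
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def in_span(vectors, b):
    """True iff A x = b has at least one solution, where A has the given vectors as columns."""
    A = [list(row) for row in zip(*vectors)]        # column j of A is vectors[j]
    Ab = [row + [bi] for row, bi in zip(A, b)]      # augmented matrix [A | b]
    return rank(A) == rank(Ab)                      # consistent iff b's column adds no pivot

v1, v2 = (1, 0, 1), (0, 1, 1)
print(in_span([v1, v2], (2, 3, 5)))  # True: (2, 3, 5) = 2*v1 + 3*v2
print(in_span([v1, v2], (0, 0, 1)))  # False
```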

Span Theorem: For a set of vectors $\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ in vector space V, $\operatorname{Span}\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ is a subspace of V.

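Definition

Column Space: The column space of an $m \times n$ matrix A, denoted Col A, is the span of the column vectors of A. Since each column vector has m entries, the Span Theorem shows that Col A is a subspace of $\mathbb{R}^m$.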

Linear Dependence and Independence

Definition

Linear Independence: A set $\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ in vector space V is linearly independent if no vector of the set can be written as a linear combination of the others. Otherwise it is linearly dependent.

A convenient criterion for checking whether a set of vectors is linearly independent is to show that if a linear combination of the $\mathbf{v}_i$ is the zero vector, then all the coefficients must be zero:

$$c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n = \mathbf{0} \;\Longrightarrow\; c_1 = c_2 = \cdots = c_n = 0.$$

Note: If any set of vectors includes 0, the zero vector, then the set is linearly dependent.

Linear Independence of Column Vectors:

The column vectors of the coefficient matrix A are linearly independent if and only if $\mathbf{x} = \mathbf{0}$ is the unique solution of $A\mathbf{x} = \mathbf{0}$.
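A sketch of this test: $\mathbf{x} = \mathbf{0}$ is the unique solution exactly when elimination finds a pivot in every column, leaving no free variables. The helper names and example vectors are illustrative assumptions:

```python
from fractions import Fraction

def num_pivots(A):
    """Number of pivot columns of A, found by Gaussian elimination in exact arithmetic."""
    M = [[Fraction(x) for x in row] for row in A]
    r = 0
    for c in range(len(M[0])):
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue          # column c has no pivot, so x_c would be a free variable
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def columns_independent(vectors):
    """True iff A x = 0 has only x = 0, where the given vectors are the columns of A."""
    A = [list(row) for row in zip(*vectors)]
    return num_pivots(A) == len(vectors)     # every column is a pivot column

print(columns_independent([(1, 2, 3), (4, 5, 6), (7, 8, 10)]))  # True
print(columns_independent([(1, 2, 3), (4, 5, 6), (7, 8, 9)]))   # False: (7,8,9) = 2(4,5,6) - (1,2,3)
print(columns_independent([(1, 0), (0, 0)]))                    # False: contains the zero vector
```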

Linear Independence of Vector Functions

Linear Independence and Dependence of Vectors: The set of vectors $\{\mathbf{v}_1(t), \mathbf{v}_2(t), \dots, \mathbf{v}_n(t)\}$ in a vector space V is linearly dependent on an interval I if there are scalars $c_1, c_2, \dots, c_n$, not all zero, such that $c_1\mathbf{v}_1(t) + c_2\mathbf{v}_2(t) + \cdots + c_n\mathbf{v}_n(t) = \mathbf{0}$ for all t in I. Otherwise the set is linearly independent.

The Wronskian

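Wronskian of Functions $f_1, f_2, \dots, f_n$ on I:

$$W[f_1, f_2, \dots, f_n](t) = \begin{vmatrix} f_1(t) & f_2(t) & \cdots & f_n(t) \\ f_1'(t) & f_2'(t) & \cdots & f_n'(t) \\ \vdots & \vdots & & \vdots \\ f_1^{(n-1)}(t) & f_2^{(n-1)}(t) & \cdots & f_n^{(n-1)}(t) \end{vmatrix},$$

defined on I (each $f_i$ must have $n-1$ derivatives on I).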
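A numerical sketch of the definition: the caller supplies each function together with its first $n-1$ derivatives as Python callables (an assumption of this sketch; nothing is differentiated automatically), and the determinant is taken by cofactor expansion. The names `wronskian` and `det` are hypothetical:

```python
import math

def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def wronskian(derivs, t):
    """W[f1, ..., fn](t), where derivs[k] = [f_k, f_k', ..., f_k^(n-1)] (hand-supplied).

    Row r of the matrix holds the r-th derivatives of all n functions at t.
    """
    n = len(derivs)
    return det([[derivs[k][r](t) for k in range(n)] for r in range(n)])

# f1 = e^t, f2 = e^(2t):  W = e^t * 2e^(2t) - e^(2t) * e^t = e^(3t)
f1 = [math.exp, math.exp]
f2 = [lambda t: math.exp(2 * t), lambda t: 2 * math.exp(2 * t)]
print(wronskian([f1, f2], 0.0))  # 1.0

# 1, t, t^2 with their first two derivatives
g = [[lambda t: 1, lambda t: 0, lambda t: 0],
     [lambda t: t, lambda t: 1, lambda t: 0],
     [lambda t: t * t, lambda t: 2 * t, lambda t: 2]]
print(wronskian(g, 5))  # 2
```

Evaluating at a single point only samples W; a nonzero value at any one t already shows that W is not the zero function on I.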

The Wronskian and Linear Independence:

If $W[f_1, f_2, \dots, f_n](t) \not\equiv 0$ (that is, the Wronskian is not the zero function on I), where $f_1, f_2, \dots, f_n$ are defined on I, then $\{f_1, f_2, \dots, f_n\}$ is a linearly independent set of functions on I.
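As a worked illustration (an example chosen here, not from the text), take $f_1 = 1$, $f_2 = t$, $f_3 = t^2$ on any interval I. The Wronskian matrix is upper triangular, so its determinant is the product of the diagonal entries:

$$W[1, t, t^2](t) = \begin{vmatrix} 1 & t & t^2 \\ 0 & 1 & 2t \\ 0 & 0 & 2 \end{vmatrix} = (1)(1)(2) = 2.$$

Since W is not the zero function, $\{1, t, t^2\}$ is linearly independent on I.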

Basis of a Vector Space

Definition

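Basis of a Vector Space: The set $\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ is a basis for vector space V provided

i) $\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\}$ is linearly independent

ii) $\operatorname{Span}\{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_n\} = V$.

The Standard Basis for $\mathbb{R}^n$: The column vectors of the identity matrix $I_n$ are denoted by $\mathbf{e}_1, \mathbf{e}_2, \dots, \mathbf{e}_n$, where

$$\mathbf{e}_1 = \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix}, \quad \mathbf{e}_2 = \begin{bmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{bmatrix}, \quad \dots, \quad \mathbf{e}_n = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix}.$$

The standard basis for $\mathbb{R}^n$ is $\{\mathbf{e}_1, \mathbf{e}_2, \dots, \mathbf{e}_n\}$.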
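For n vectors in $\mathbb{R}^n$, the determinant gives a quick basis test: by the invertible matrix characterizations in these notes, $|A| \neq 0$ exactly when the columns of A are linearly independent and span $\mathbb{R}^n$. A minimal sketch; `is_basis_of_Rn` is a hypothetical name and the vectors are illustrative:

```python
def det(A):
    """Determinant by cofactor expansion along the first row (exact for integers)."""
    n = len(A)
    if n == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(n) if A[0][j] != 0)

def is_basis_of_Rn(vectors):
    """n vectors in R^n form a basis iff the matrix with them as columns has |A| != 0."""
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        return False          # a basis of R^n needs exactly n vectors, each in R^n
    A = [list(row) for row in zip(*vectors)]
    return det(A) != 0

e = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]                     # standard basis of R^3
print(is_basis_of_Rn(e))                                  # True (|I_3| = 1)
print(is_basis_of_Rn([(1, 1, 0), (0, 1, 1), (1, 0, 1)]))  # True (|A| = 2)
print(is_basis_of_Rn([(1, 1, 0), (0, 1, 1), (1, 2, 1)]))  # False: third = first + second
```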

Dimension of a Vector Space

Note that the number of vectors in a basis is always the same for a particular vector space. This fact allows us to define the dimension of a vector space.

The dimension of a (finite-dimensional) vector space is the number of vectors in any basis.

A vector space so large that no finite set of vectors spans it is called infinite-dimensional.

The Dimension of the Column Space of a Matrix

Column Space of a Matrix:

The pivot columns of a matrix A form a basis for Col A. The dimension of the column space, denoted dim(Col A), is the number of pivot columns in A; it is called the rank of A, denoted rank A.
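A sketch of finding pivot columns by row reduction to echelon form (exact `Fraction` arithmetic; the function name and the example matrix are illustrative). Note that the basis for Col A is taken from the columns of the original A, not of the reduced matrix:

```python
from fractions import Fraction

def pivot_columns(A):
    """Return the 0-based indices of the pivot columns of A.

    Row-reduces a copy of A to echelon form; a column is a pivot column
    if it receives a leading entry.
    """
    M = [[Fraction(x) for x in row] for row in A]
    pivots, r = [], 0
    for c in range(len(M[0])):
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue                      # no leading entry in this column
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
    return pivots

A = [[1, 2, 3, 4],
     [2, 4, 7, 9],
     [3, 6, 10, 13]]
piv = pivot_columns(A)
basis = [[row[c] for row in A] for c in piv]   # columns of the ORIGINAL A
print(piv, len(piv))   # [0, 2] 2   (so rank A = 2)
print(basis)           # [[1, 2, 3], [3, 7, 10]]
```

Here column 2 is twice column 1 and column 4 is the sum of columns 1 and 3, which is why only two columns become pivot columns.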

Invertible Matrix Characterization (Basis for Col A):

Let A be an $n \times n$ matrix. The following statements are equivalent:

A is invertible

The column vectors of A are linearly independent

Every column of A is a pivot column

The column vectors of A form a basis for Col A

rank A = n