Simple Linear Regression, Part 2


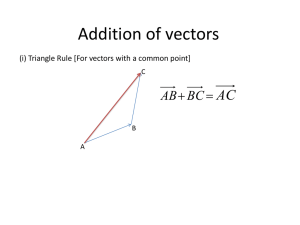

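Simple Linear Regression - Part Deux

Just the Essentials of Linear Algebra

Vector: A set of ordered numbers, written, by default, as a column. Ex.,

$$\mathbf{v} = \begin{bmatrix} 2 \\ 5 \\ -1 \\ 3 \end{bmatrix}$$

Transpose of a Vector: The transpose of vector $\mathbf{v}$, written $\mathbf{v}^T$, is defined as the corresponding ordered row of numbers. Ex., the transpose of the vector $\mathbf{v}$ above is $\mathbf{v}^T = \begin{bmatrix} 2 & 5 & -1 & 3 \end{bmatrix}$.

Vector Addition: Addition is defined componentwise. Ex., for vectors $\mathbf{v}$ and $\mathbf{u}$,

$$\mathbf{u} + \mathbf{v} = \mathbf{v} + \mathbf{u} = \begin{bmatrix} 2 \\ 5 \\ -1 \\ 3 \end{bmatrix} + \begin{bmatrix} -1 \\ -3 \\ 2 \\ 4 \end{bmatrix} = \begin{bmatrix} 2 - 1 \\ 5 - 3 \\ -1 + 2 \\ 3 + 4 \end{bmatrix} = \begin{bmatrix} 1 \\ 2 \\ 1 \\ 7 \end{bmatrix}$$

Vector Multiplication: The inner product (or dot product) of vectors $\mathbf{v}$ and $\mathbf{u}$ is defined as

$$\mathbf{v}^T\mathbf{u} = \begin{bmatrix} 2 & 5 & -1 & 3 \end{bmatrix} \begin{bmatrix} -1 \\ -3 \\ 2 \\ 4 \end{bmatrix} = (2)(-1) + (5)(-3) + (-1)(2) + (3)(4) = -2 - 15 - 2 + 12 = -7$$

Note 1: The inner product of two vectors is a number!

Note 2: $\mathbf{v}^T\mathbf{u} = \mathbf{u}^T\mathbf{v}$

Orthogonal: Vectors $\mathbf{v}$ and $\mathbf{u}$ are orthogonal if $\mathbf{v}^T\mathbf{u} = 0$. The geometrical interpretation is that the vectors are perpendicular. Orthogonality of vectors plays a big role in linear models.

Length of a Vector: The length of vector $\mathbf{v}$ is given by $\|\mathbf{v}\| = \sqrt{\mathbf{v}^T\mathbf{v}}$. This extends the Pythagorean theorem to vectors with an arbitrary number of components. Ex., $\|\mathbf{v}\| = \sqrt{\mathbf{v}^T\mathbf{v}} = \sqrt{39}$.

Matrices: $\mathbf{A}$ is an m by n matrix if it is an array of numbers with m rows and n columns. Ex.,

$$\mathbf{A} = \begin{bmatrix} 2 & 1 \\ 1 & 4 \\ 1 & 3 \\ 3 & 2 \end{bmatrix}$$

is a 4 x 2 matrix.

Matrix Addition: Matrix addition is componentwise, as for vectors. Note: A vector is a special case of a matrix with a single column (or single row in the case of its transpose).

Matrix Multiplication: If $\mathbf{A}$ is m x n and $\mathbf{B}$ is n x p, the product $\mathbf{AB}$ is defined as the m x p array of all inner products of row vectors of $\mathbf{A}$ with column vectors of $\mathbf{B}$. Ex., if $\mathbf{A}$ is 4 x 2 and $\mathbf{B}$ is 2 x 3, then $\mathbf{AB}$ is the 4 x 3 matrix whose entry in row i and column j is the inner product of row i of $\mathbf{A}$ with column j of $\mathbf{B}$.

Transpose of a Matrix: The transpose of the m x n matrix $\mathbf{A}$ is the n x m matrix $\mathbf{A}^T$ obtained by swapping rows for columns in $\mathbf{A}$. Ex., if

$$\mathbf{A}_{4 \times 2} = \begin{bmatrix} 2 & 1 \\ 1 & 4 \\ 1 & 3 \\ 3 & 2 \end{bmatrix}, \quad \text{then} \quad \mathbf{A}^T_{2 \times 4} = \begin{bmatrix} 2 & 1 & 1 & 3 \\ 1 & 4 & 3 & 2 \end{bmatrix}$$

Transpose of a Product: $(\mathbf{AB})^T = \mathbf{B}^T\mathbf{A}^T$

Symmetric Matrix: The square matrix $\mathbf{A}_{n \times n}$ is symmetric if $a_{ij} = a_{ji}$ for all $1 \le i \le n$, $1 \le j \le n$. Ex., in

$$\mathbf{A}_{3 \times 3} = \begin{bmatrix} 1 & -3 & 1 \\ -3 & 7 & -2 \\ 1 & -2 & 5 \end{bmatrix}$$

$a_{12} = a_{21} = -3$; $a_{13} = a_{31} = 1$; $a_{23} = a_{32} = -2$.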
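The vector and matrix operations reviewed above can be checked numerically. The following is a minimal sketch in plain Python (no external libraries), using v = (2, 5, -1, 3) and u = (-1, -3, 2, 4) as in the vector examples. The helper names (`add`, `dot`, `length`, `transpose`, `matmul`) and the small matrices `A` and `B` are hypothetical, chosen only for illustration.

```python
import math

def add(v, u):
    """Componentwise sum of two vectors of equal length."""
    if len(v) != len(u):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(v, u)]

def dot(v, u):
    """Inner product v^T u, which is a single number."""
    if len(v) != len(u):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(v, u))

def length(v):
    """Length of v, the square root of v^T v."""
    return math.sqrt(dot(v, v))

def transpose(A):
    """Swap rows for columns in a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Product AB: all inner products of rows of A with columns of B."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must match rows of B")
    B_cols = transpose(B)
    return [[dot(row, col) for col in B_cols] for row in A]

v = [2, 5, -1, 3]
u = [-1, -3, 2, 4]
v_plus_u = add(v, u)   # componentwise sum
v_dot_u = dot(v, u)    # inner product
v_len_sq = dot(v, v)   # squared length of v

# Hypothetical 3 x 2 and 2 x 4 matrices, used only to illustrate the rules.
A = [[1, 2], [0, 1], [3, -1]]
B = [[2, 0, 1, 4], [1, -1, 0, 2]]
AB = matmul(A, B)                               # 3 x 4 product
lhs = transpose(AB)                             # (AB)^T
rhs = matmul(transpose(B), transpose(A))        # B^T A^T
AtA = matmul(transpose(A), A)                   # 2 x 2 and symmetric
```

Comparing `lhs` with `rhs` checks the transpose-of-a-product rule, and comparing `AtA` with its own transpose checks that it is symmetric.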
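(Note: If $\mathbf{A}_{n \times n}$ is symmetric, then $\mathbf{A}^T = \mathbf{A}$.)

Theorem: For any m x n matrix $\mathbf{A}$, $\mathbf{A}^T\mathbf{A}$ is an n x n symmetric matrix, and $\mathbf{A}\mathbf{A}^T$ is an m x m symmetric matrix.

Linear Independence: The set of n vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots, \mathbf{v}_n$ form a linearly independent set if the linear combination $c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + c_3\mathbf{v}_3 + \cdots + c_n\mathbf{v}_n = \mathbf{0}$ implies that all of the constant coefficients, $c_i$, equal zero.

rank(A): The rank of matrix $\mathbf{A}$, rank($\mathbf{A}$), is the number of linearly independent columns (or rows) in the matrix. A matrix with all columns, or all rows, independent is said to be "full rank." Full rank matrices play an important role in linear models.

Theorem about Rank: rank($\mathbf{A}^T\mathbf{A}$) = rank($\mathbf{A}\mathbf{A}^T$) = rank($\mathbf{A}$). This theorem will be used in regression.

Inverse of a Matrix: The inverse of the n x n square matrix $\mathbf{A}$ is the n x n square matrix $\mathbf{A}^{-1}$ such that $\mathbf{A}\mathbf{A}^{-1} = \mathbf{A}^{-1}\mathbf{A} = \mathbf{I}$, where $\mathbf{I}$ is the n x n "Identity" matrix,

$$\mathbf{I}_{n \times n} = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix}_{n \times n}$$

Theorem: The n x n square matrix $\mathbf{A}$ has an inverse if and only if it is full rank, i.e., rank($\mathbf{A}$) = n.

Theorem: The invertible 2 x 2 matrix $\mathbf{A}_{2 \times 2} = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$ has 2 x 2 inverse

$$\mathbf{A}^{-1} = \frac{1}{ad - bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$

Ex., if $\mathbf{A} = \begin{bmatrix} 2 & 4 \\ 3 & 1 \end{bmatrix}$, then

$$\mathbf{A}^{-1} = \frac{1}{(2)(1) - (4)(3)} \begin{bmatrix} 1 & -4 \\ -3 & 2 \end{bmatrix} = -\frac{1}{10} \begin{bmatrix} 1 & -4 \\ -3 & 2 \end{bmatrix} = \frac{1}{10} \begin{bmatrix} -1 & 4 \\ 3 & -2 \end{bmatrix}$$

You should carry out the products $\mathbf{A}\mathbf{A}^{-1}$ and $\mathbf{A}^{-1}\mathbf{A}$ to confirm that they equal $\mathbf{I}_{2 \times 2} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$.

Solving a System of n Linear Equations in n Unknowns

The system of n linear equations in n unknowns,

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\;\;\vdots \\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n \end{aligned}$$

can be represented by the matrix equation $\mathbf{Ax} = \mathbf{b}$, where

$$\mathbf{A}_{n \times n} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{bmatrix}, \quad \mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}, \quad \text{and} \quad \mathbf{b} = \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{bmatrix}$$

The system has a unique solution if and only if $\mathbf{A}$ is invertible, i.e., $\mathbf{A}^{-1}$ exists. In that case, the solution is given by $\mathbf{x} = \mathbf{A}^{-1}\mathbf{b}$.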
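As a quick check of the 2 x 2 inverse formula and of the solution x = A^{-1}b, here is a minimal sketch in plain Python. It uses exact fractions so that the products come out as exact identities. The function names and the right-hand side `b_vec` are hypothetical, and the example matrix is taken to have rows (2, 4) and (3, 1).

```python
from fractions import Fraction

def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] as (1 / (ad - bc)) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = A
    det = Fraction(a) * d - Fraction(b) * c
    if det == 0:
        raise ValueError("matrix is not full rank, so it has no inverse")
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def matvec(A, x):
    """Product of a matrix with a vector stored as a flat list."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A = [[2, 4], [3, 1]]
A_inv = inverse_2x2(A)

# Both products should equal the 2 x 2 identity matrix.
left = matmul(A, A_inv)
right = matmul(A_inv, A)

# Hypothetical right-hand side: solve 2*x1 + 4*x2 = 10 and 3*x1 + x2 = 5.
b_vec = [10, 5]
x = matvec(A_inv, b_vec)
```

Substituting `x` back into the two equations (via `matvec(A, x)`) should reproduce `b_vec`.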
Representing the Simple Linear Regression Model as a Matrix Equation

For a sample of n observations on the bivariate distribution of the variables X and Y, the simple linear regression model $Y = \beta_0 + \beta_1 X + \varepsilon$ leads to the system of n equations

$$\begin{aligned} Y_1 &= \beta_0 + \beta_1 X_1 + \varepsilon_1 \\ Y_2 &= \beta_0 + \beta_1 X_2 + \varepsilon_2 \\ &\;\;\vdots \\ Y_n &= \beta_0 + \beta_1 X_n + \varepsilon_n \end{aligned}$$

which can be written

$$\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix} = \begin{bmatrix} 1 & X_1 \\ 1 & X_2 \\ \vdots & \vdots \\ 1 & X_n \end{bmatrix} \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix} + \begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_n \end{bmatrix}$$

The shorthand for this is $\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon}$, where the n x 2 matrix $\mathbf{X}$ is called the "design" matrix because the values of the variable X are often fixed by the researcher in the design of the experiment used to investigate the relationship between the variables X and Y.

Note: Since $\mathbf{X}$ refers to the design matrix above, we will use $\mathbf{x}$ to refer to the vector of values for X, $\mathbf{x}^T = \begin{bmatrix} X_1 & X_2 & \cdots & X_n \end{bmatrix}$.

Fitting the Best Least Squares Regression Line to the n Observations

Ideally, we would solve the matrix equation $\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon}$ for the vector of regression coefficients $\boldsymbol{\beta}$, i.e., the true intercept $\beta_0$ and true slope $\beta_1$ in the model $Y = \beta_0 + \beta_1 X + \varepsilon$. In practice, however, this is never possible because the equation $\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon}$ has no solution! (Why?) When faced with an equation that we cannot solve, we do what mathematicians usually do: we find a related equation that we can solve.

First, since we don't know the errors in our observations, we forget about the vector of the errors $\boldsymbol{\varepsilon}$. (Here, it is important that we not confuse the errors $\varepsilon_i$, which we never know, with the residuals $e_i$ associated with our estimated regression line.) Unable to determine the parameters $\beta_0$ and $\beta_1$, we look to estimate them, i.e., solve for $b_0$ and $b_1$ in the matrix equation $\mathbf{Y} = \mathbf{Xb}$, but the system involves n equations in the two unknowns $b_0$ and $b_1$. If you remember your algebra, overdetermined systems of linear equations rarely have solutions.

What we need is a related system of linear equations that we can solve. (Of course, we'll have to show that the solution to the new system has relevance to our problem of estimating $\beta_0$ and $\beta_1$.)
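Finally (drum roll please), the system we'll actually solve is

$$\mathbf{X}^T\mathbf{X}\mathbf{b} = \mathbf{X}^T\mathbf{Y} \tag{1.1}$$

Next, we show what the system above looks like, and, as we go, we'll see why it's solvable and why it's relevant.

$$\mathbf{X}^T\mathbf{X} = \begin{bmatrix} 1 & 1 & \cdots & 1 \\ X_1 & X_2 & \cdots & X_n \end{bmatrix} \begin{bmatrix} 1 & X_1 \\ 1 & X_2 \\ \vdots & \vdots \\ 1 & X_n \end{bmatrix} = \begin{bmatrix} n & \sum X_i \\ \sum X_i & \sum X_i^2 \end{bmatrix} \tag{1.2}$$

$$\mathbf{X}^T\mathbf{X}\mathbf{b} = \begin{bmatrix} n & \sum X_i \\ \sum X_i & \sum X_i^2 \end{bmatrix} \begin{bmatrix} b_0 \\ b_1 \end{bmatrix} = \begin{bmatrix} n b_0 + b_1 \sum X_i \\ b_0 \sum X_i + b_1 \sum X_i^2 \end{bmatrix} \tag{1.3}$$

$$\mathbf{X}^T\mathbf{Y} = \begin{bmatrix} 1 & 1 & \cdots & 1 \\ X_1 & X_2 & \cdots & X_n \end{bmatrix} \begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix} = \begin{bmatrix} \sum Y_i \\ \sum X_i Y_i \end{bmatrix} \tag{1.4}$$

Now we're in a position to write out the equations for the system $\mathbf{X}^T\mathbf{X}\mathbf{b} = \mathbf{X}^T\mathbf{Y}$,

$$\begin{aligned} n b_0 + b_1 \sum X_i &= \sum Y_i \\ b_0 \sum X_i + b_1 \sum X_i^2 &= \sum X_i Y_i \end{aligned} \tag{1.5}$$

If these equations look familiar, they are the equations derived in the previous notes by applying the Least Squares criterion for the best fitting regression line. Thus, the solution to matrix equation (1.1) is the least squares solution to the problem of fitting a line to data! (Although we could have established this directly using theorems from linear algebra and vector spaces, the calculus argument made in Part I of the regression notes is simpler and probably more convincing.)

Finally, the system of two equations in the two unknowns $b_0$ and $b_1$ has a solution! To solve the system $\mathbf{X}^T\mathbf{X}\mathbf{b} = \mathbf{X}^T\mathbf{Y}$, we note that the 2 x 2 matrix $\mathbf{X}^T\mathbf{X}$ has an inverse. We know this since rank($\mathbf{X}^T\mathbf{X}$) = rank($\mathbf{X}$) = 2, and full rank square matrices have inverses. (Note: rank($\mathbf{X}$) = 2 because the two columns of the matrix $\mathbf{X}$ are linearly independent, which holds as long as the values $X_i$ are not all equal.)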
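The normal equations (1.5) are easy to assemble and solve numerically. The sketch below, in plain Python with exact fractions, builds the entries of X^T X and X^T Y from the sums in (1.2) and (1.4) and then solves the 2 x 2 system with the 2 x 2 inverse formula from the linear algebra review. The function names and the small data set are hypothetical (the points were chosen to lie exactly on the line y = 1 + 2x so the answer is easy to verify).

```python
from fractions import Fraction

def normal_equations(xs, ys):
    """Return (XtX, XtY) for the simple linear regression design matrix."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    xs = [Fraction(x) for x in xs]
    ys = [Fraction(y) for y in ys]
    n = len(xs)
    sum_x = sum(xs)
    sum_x2 = sum(x * x for x in xs)
    sum_y = sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    XtX = [[n, sum_x], [sum_x, sum_x2]]   # equation (1.2)
    XtY = [sum_y, sum_xy]                 # equation (1.4)
    return XtX, XtY

def solve_normal_equations(XtX, XtY):
    """Solve XtX b = XtY for (b0, b1) using the 2 x 2 inverse formula."""
    (a, b), (c, d) = XtX
    det = a * d - b * c
    if det == 0:
        # Happens when all x values are equal, so rank(X) < 2.
        raise ValueError("XtX is not invertible")
    b0 = (d * XtY[0] - b * XtY[1]) / det
    b1 = (-c * XtY[0] + a * XtY[1]) / det
    return b0, b1

# Hypothetical data lying exactly on y = 1 + 2x.
xs = [0, 1, 2, 3]
ys = [1, 3, 5, 7]
XtX, XtY = normal_equations(xs, ys)
b0, b1 = solve_normal_equations(XtX, XtY)
```

Because these points fall exactly on a line, the least squares estimates recover that line's intercept and slope; with noisy data the same two functions return the best fitting line instead.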
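The solution has the form

$$\mathbf{b} = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y} \tag{1.6}$$

where

$$\left(\mathbf{X}^T\mathbf{X}\right)^{-1} = \frac{1}{n\sum X_i^2 - \left(\sum X_i\right)^2} \begin{bmatrix} \sum X_i^2 & -\sum X_i \\ -\sum X_i & n \end{bmatrix} = \frac{1}{n\sum \left(X_i - \bar{X}\right)^2} \begin{bmatrix} \sum X_i^2 & -\sum X_i \\ -\sum X_i & n \end{bmatrix}$$

and, after a fair amount of algebra, equation (1.6) returns the estimated intercept $b_0$ and slope $b_1$ given in Part I of the regression notes:

$$b_1 = \frac{\sum X_i Y_i - \frac{1}{n}\sum X_i \sum Y_i}{\sum X_i^2 - \frac{1}{n}\left(\sum X_i\right)^2} = \frac{\sum \left(X_i - \bar{X}\right)\left(Y_i - \bar{Y}\right)}{\sum \left(X_i - \bar{X}\right)^2} \tag{1.7}$$

$$b_0 = \bar{Y} - b_1\bar{X} \tag{1.8}$$

Example

Although you will have plenty of opportunities to use software to determine the best fitting regression line for a sample of bivariate data, it might be useful to go through the process once by hand for the "toy" data set:

| x | y |
|---|---|
| 1 | 8 |
| 2 | 4 |
| 4 | 6 |
| 5 | 2 |

Rather than using the equations (1.7) and (1.8), start with $\mathbf{X}^T\mathbf{X}\mathbf{b} = \mathbf{X}^T\mathbf{Y}$ and find (a) $\mathbf{X}^T\mathbf{X}$, (b) $\mathbf{X}^T\mathbf{Y}$, (c) $\left(\mathbf{X}^T\mathbf{X}\right)^{-1}$ (using only the definition of the inverse of a 2 x 2 matrix given in the linear algebra review), and (d) $\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y}$. Perform the analysis again using software or a calculator to confirm the answer.

The (Least Squares) Solution to the Regression Model

The equation $\mathbf{Y} = \mathbf{Xb} + \mathbf{e}$ is the estimate to the simple linear regression model $\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\varepsilon}$, where $\mathbf{b} = \begin{bmatrix} b_0 \\ b_1 \end{bmatrix}$ is the least squares estimate of the intercept and slope of the true regression line $\mathbf{Y} = \mathbf{X}\boldsymbol{\beta}$ given by equations (1.8) and (1.7), respectively, and $\mathbf{e}$ is the n x 1 vector of residuals. Now, $\mathbf{Y} = \mathbf{Xb} + \mathbf{e}$ can be written $\mathbf{Y} = \hat{\mathbf{Y}} + \mathbf{e}$, where $\hat{\mathbf{Y}} = \mathbf{Xb}$ is the n x 1 vector of predictions made for the observations used to construct the estimate $\mathbf{b}$. The n points $\left(X_i, \hat{Y}_i\right)$ will, of course, all lie on the final regression line.

The Hat Matrix

A useful matrix that shows up again and again in regression analysis is the n x n matrix $\mathbf{H} = \mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T$, called the "hat" matrix. To see how it gets its name, note that $\hat{\mathbf{Y}} = \mathbf{Xb} = \mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y} = \mathbf{HY}$. Thus the matrix $\mathbf{H}$ puts a "hat" on the vector $\mathbf{Y}$.

The hat matrix $\mathbf{H}$ has many nice properties. These include:

- $\mathbf{H}$ is symmetric, i.e., $\mathbf{H}^T = \mathbf{H}$, as is easily proven.
- $\mathbf{H}$ is idempotent, which is a fancy way of saying that $\mathbf{HH} = \mathbf{H}$. The shorthand for this is $\mathbf{H}^2 = \mathbf{H}$. This is also easily proven.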
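- The matrix $\mathbf{I} - \mathbf{H}$, where $\mathbf{I}$ is the n x n identity matrix, is both symmetric and idempotent.

The Error Sum of Squares, SSE, in Matrix Form

From the equation $\mathbf{Y} = \hat{\mathbf{Y}} + \mathbf{e}$, we have $\mathbf{e} = \mathbf{Y} - \hat{\mathbf{Y}}$. Using the hat matrix, this becomes $\mathbf{e} = \mathbf{Y} - \mathbf{HY} = \left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y}$, where we have right-factored the vector $\mathbf{Y}$. The error sum of squares, SSE, is just the squared length of the vector of residuals, i.e., $SSE = \sum e_i^2 = \mathbf{e}^T\mathbf{e}$. In terms of the hat matrix this becomes

$$SSE = \mathbf{Y}^T\left(\mathbf{I} - \mathbf{H}\right)^T\left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y} = \mathbf{Y}^T\left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y}$$

where we have used the symmetry and idempotency of the matrix $\mathbf{I} - \mathbf{H}$ to simplify the result.

The Geometry of Least Squares

$$\mathbf{Y} = \hat{\mathbf{Y}} + \mathbf{e} \tag{1.9}$$

One of the attractions of linear models, and especially of the least squares solutions to them, is the wealth of geometrical interpretations that spring from them. The n x 1 vectors $\mathbf{Y}$, $\hat{\mathbf{Y}}$, and $\mathbf{e}$ are vectors in the vector space $\mathbb{R}^n$ (an n-dimensional space whose components are real numbers, as opposed to complex numbers). From equation (1.9) above, we know that the vectors $\hat{\mathbf{Y}}$ and $\mathbf{e}$ sum to $\mathbf{Y}$, but we can show a much more surprising result that will eventually lead to powerful conclusions about the sums of squares SSE, SSR, and SST.

First, we have to know where each of the vectors $\mathbf{Y}$, $\hat{\mathbf{Y}}$, and $\mathbf{e}$ "live":

- The vector of observations $\mathbf{Y}$ has no restrictions placed on it and therefore can lie anywhere in $\mathbb{R}^n$.
- $\hat{\mathbf{Y}} = \mathbf{Xb} = b_0 \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix} + b_1 \begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_n \end{bmatrix}$ is restricted to the two-dimensional subspace of $\mathbb{R}^n$ spanned by the columns of the design matrix $\mathbf{X}$, called (appropriately) the column space of $\mathbf{X}$.
- We will shortly show that $\mathbf{e}$ lives in a subspace of $\mathbb{R}^n$ orthogonal to the column space of $\mathbf{X}$.

Next, we derive the critical result that the vectors $\hat{\mathbf{Y}}$ and $\mathbf{e}$ are orthogonal (perpendicular) in $\mathbb{R}^n$. (Remember: vectors $\mathbf{v}$ and $\mathbf{u}$ are orthogonal if and only if $\mathbf{v}^T\mathbf{u} = 0$.)

$$\hat{\mathbf{Y}}^T\mathbf{e} = \left(\mathbf{HY}\right)^T\left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y} = \mathbf{Y}^T\mathbf{H}^T\left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y} = \mathbf{Y}^T\left(\mathbf{H} - \mathbf{HH}\right)\mathbf{Y} = \mathbf{Y}^T\left(\mathbf{H} - \mathbf{H}\right)\mathbf{Y} = 0$$

where we made repeated use of the fact that $\mathbf{H}$ and $\mathbf{I} - \mathbf{H}$ are symmetric and idempotent. The same kind of calculation gives $\mathbf{X}^T\mathbf{e} = \mathbf{X}^T\left(\mathbf{I} - \mathbf{H}\right)\mathbf{Y} = \left(\mathbf{X}^T - \mathbf{X}^T\right)\mathbf{Y} = \mathbf{0}$, so $\mathbf{e}$ is orthogonal to both columns of $\mathbf{X}$. Therefore, $\mathbf{e}$ is restricted to an n - 2 dimensional subspace of $\mathbb{R}^n$ (because it must be orthogonal to every vector in the column space of $\mathbf{X}$).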
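The properties of the hat matrix and the orthogonality of the fitted values and residuals can be verified numerically on the toy data set from the Example (x = 1, 2, 4, 5 and y = 8, 4, 6, 2). This is a minimal sketch in plain Python with exact fractions, not the output of any particular statistics package; the helper names are hypothetical.

```python
from fractions import Fraction

def transpose(A):
    """Swap rows for columns."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def inverse_2x2(A):
    """Inverse of a 2 x 2 matrix via the (1 / (ad - bc)) formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

xs = [1, 2, 4, 5]
ys = [8, 4, 6, 2]
n = len(xs)

X = [[Fraction(1), Fraction(x)] for x in xs]   # n x 2 design matrix
Y = [[Fraction(y)] for y in ys]                # n x 1 column vector
Xt = transpose(X)

XtX_inv = inverse_2x2(matmul(Xt, X))
b = matmul(XtX_inv, matmul(Xt, Y))             # equation (1.6)

H = matmul(matmul(X, XtX_inv), Xt)             # hat matrix, n x n
I = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
M = [[I[i][j] - H[i][j] for j in range(n)] for i in range(n)]  # I - H

Y_hat = matmul(H, Y)                           # fitted values HY
e = matmul(M, Y)                               # residuals (I - H)Y

SSE = matmul(transpose(Y), matmul(M, Y))[0][0] # Y^T (I - H) Y
Yhat_dot_e = matmul(transpose(Y_hat), e)[0][0] # should be zero
Xt_e = matmul(Xt, e)                           # should be the zero vector
```

Checking `H == transpose(H)` and `matmul(H, H) == H` confirms symmetry and idempotency for this data set, and `b` gives the answer to part (d) of the Example.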
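Combining the facts that the vectors $\hat{\mathbf{Y}}$ and $\mathbf{e}$ sum to $\mathbf{Y}$ and are orthogonal to each other, we conclude that $\hat{\mathbf{Y}}$ and $\mathbf{e}$ form the legs of a right triangle (in $\mathbb{R}^n$) with hypotenuse $\mathbf{Y}$. By the Pythagorean Theorem for right triangles,

$$\|\hat{\mathbf{Y}}\|^2 + \|\mathbf{e}\|^2 = \|\mathbf{Y}\|^2 \tag{1.10}$$

or equivalently, $\hat{\mathbf{Y}}^T\hat{\mathbf{Y}} + \mathbf{e}^T\mathbf{e} = \mathbf{Y}^T\mathbf{Y}$.

A slight modification of the argument above shows that the vectors $\hat{\mathbf{Y}} - \bar{\mathbf{Y}}$ and $\mathbf{e}$ sum to $\mathbf{Y} - \bar{\mathbf{Y}}$ and are orthogonal to each other, where $\bar{\mathbf{Y}}$ denotes the n x 1 vector whose components all equal the sample mean $\bar{Y}$. We conclude that $\hat{\mathbf{Y}} - \bar{\mathbf{Y}}$ and $\mathbf{e}$ form the legs of a right triangle (in $\mathbb{R}^n$) with hypotenuse $\mathbf{Y} - \bar{\mathbf{Y}}$. By the Pythagorean Theorem for right triangles,

$$\left(\hat{\mathbf{Y}} - \bar{\mathbf{Y}}\right)^T\left(\hat{\mathbf{Y}} - \bar{\mathbf{Y}}\right) + \mathbf{e}^T\mathbf{e} = \left(\mathbf{Y} - \bar{\mathbf{Y}}\right)^T\left(\mathbf{Y} - \bar{\mathbf{Y}}\right) \tag{1.11}$$

or equivalently, SSR + SSE = SST. This last equality is the famous one involving the three sums of squares in regression.

We've actually done more than just derive the equation involving the three sums of squares. It turns out that the dimensions of the subspaces the vectors live in also determine their "degrees of freedom," so we've also shown that SSE has n - 2 degrees of freedom because the vector of residuals, $\mathbf{e}$, is restricted to an n - 2 dimensional subspace of $\mathbb{R}^n$. (The term "degrees of freedom" is actually quite descriptive because the vector $\mathbf{e}$ is only "free" to assume values in this n - 2 dimensional subspace.)

The Analysis of Variance (ANOVA) Table

The computer output of a regression analysis typically contains a table of the sums of squares SSR, SSE, and SST. The table is called an analysis of variance, or ANOVA, table for reasons we will see later in the course. The table has the form displayed below. (Note: The mean square of the sum of squared residuals is the "mean squared error" MSE, the estimate of the variance $\sigma^2$ of the error variable in the regression model.)

| Source | Degrees of Freedom (df) | Sums of Squares (SS) | Mean Square (MS) = SS/df |
|---|---|---|---|
| Regression | 1 | $\sum \left(\hat{Y}_i - \bar{Y}\right)^2$ | $\sum \left(\hat{Y}_i - \bar{Y}\right)^2$ |
| Residual | n - 2 | $\sum \left(Y_i - \hat{Y}_i\right)^2$ | $\dfrac{\sum \left(Y_i - \hat{Y}_i\right)^2}{n - 2}$ |
| Total | n - 1 | $\sum \left(Y_i - \bar{Y}\right)^2$ | |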
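To make the table concrete, the sketch below computes the ANOVA quantities for the toy data set from the Example (x = 1, 2, 4, 5 and y = 8, 4, 6, 2) in plain Python with exact fractions. The function name `anova_table` and the dictionary layout are hypothetical, chosen only to mirror the rows and columns of the table above; real regression software will format its output differently.

```python
from fractions import Fraction

def anova_table(xs, ys):
    """Sums of squares, df, and mean squares for simple linear regression."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    if n < 3:
        raise ValueError("need at least 3 observations so that n - 2 > 0")
    xs = [Fraction(x) for x in xs]
    ys = [Fraction(y) for y in ys]
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n

    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal, so the slope is undefined")

    # Least squares estimates from equations (1.7) and (1.8).
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    b0 = y_bar - b1 * x_bar
    fitted = [b0 + b1 * x for x in xs]

    ssr = sum((f - y_bar) ** 2 for f in fitted)          # regression
    sse = sum((y - f) ** 2 for y, f in zip(ys, fitted))  # residual
    sst = sum((y - y_bar) ** 2 for y in ys)              # total

    return {
        "Regression": {"df": 1, "SS": ssr, "MS": ssr / 1},
        "Residual": {"df": n - 2, "SS": sse, "MS": sse / (n - 2)},
        "Total": {"df": n - 1, "SS": sst},
    }

table = anova_table([1, 2, 4, 5], [8, 4, 6, 2])
ssr = table["Regression"]["SS"]
sse = table["Residual"]["SS"]
sst = table["Total"]["SS"]
mse = table["Residual"]["MS"]
```

For any data set the function accepts, `ssr + sse` should equal `sst`, which is the identity SSR + SSE = SST, and the two degrees of freedom 1 and n - 2 add up to n - 1.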