Review 2


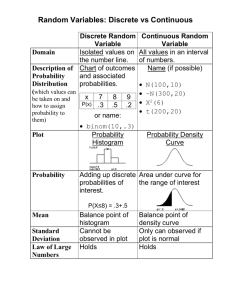

Chapter 5 Summarizing Bivariate Data

1. Scatter plots and residual plots
(1) A scatter plot is a picture of bivariate numerical data in which each observation (x, y) is represented as a point on a rectangular coordinate system. It can reveal the relationship between x and y.
(2) A residual plot is a scatter plot of the (x, residual) pairs.
Note: The predicted (fitted) values are $\hat{y}_1 = a + bx_1, \hat{y}_2 = a + bx_2, \ldots, \hat{y}_n = a + bx_n$, and the residuals from the least squares line are $y_1 - \hat{y}_1, y_2 - \hat{y}_2, \ldots, y_n - \hat{y}_n$.
(i) Isolated points or a pattern of points in the residual plot indicate potential problems. A desirable plot is one that exhibits no particular pattern. An unusual residual may indicate some type of unusual behavior.
(ii) It is also important to look for unusual points in the scatter plot, especially influential observations. One way to assess the impact of such an isolated point on the fit is to delete it from the data set and then recompute the best-fit line.

2. The least squares line (sample regression line) is the line $\hat{y} = a + bx$ that gives the best fit to the data, where
$$b = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sum (x - \bar{x})^2} = \frac{\sum xy - (\sum x)(\sum y)/n}{\sum x^2 - (\sum x)^2/n} = r\frac{s_y}{s_x}, \qquad a = \bar{y} - b\bar{x}.$$
Properties of the least squares line (the sample regression line):
1. The least squares line passes through $(\bar{x}, \bar{y})$.
2. b and r have the same sign, since $b = r\frac{s_y}{s_x}$ and both standard deviations are positive.
3. The least squares line can be rewritten as $\hat{y} = \bar{y} + r\frac{s_y}{s_x}(x - \bar{x})$. When $x = \bar{x} + ks_x$, $\hat{y} = \bar{y} + r\frac{s_y}{s_x}(\bar{x} + ks_x - \bar{x}) = \bar{y} + rks_y$. That is, an x value k standard deviations above $\bar{x}$ is predicted to have a y value rk standard deviations (of y) above $\bar{y}$.

3. Pearson's sample correlation coefficient and its properties
Pearson's sample correlation coefficient is
$$r = \frac{\sum z_x z_y}{n - 1} = \frac{\sum \left(\frac{x - \bar{x}}{s_x}\right)\left(\frac{y - \bar{y}}{s_y}\right)}{n - 1}.$$
Properties of r:
(1) The value of r does not depend on the unit of measurement for either variable.
(2) The value of r does not depend on which of the two variables is labeled x.
(3) $-1 \le r \le 1$. An r near 1 indicates a substantial positive linear relationship, whereas an r close to -1 suggests a prominent negative linear relationship.
The strength of the linear relationship based on r can be summarized as follows.
Figure: the strength of linear relationship based on r
  Strong: r between -1 and -0.8, or between 0.8 and 1
  Moderate: r between -0.8 and -0.5, or between 0.5 and 0.8
  Weak: r between -0.5 and 0.5
(4) r = 1 only when all the points in a scatter plot lie exactly on a straight line that slopes upward. Similarly, r = -1 only when all the points lie exactly on a downward-sloping line.
(5) The value of r is a measure of the strength of the linear relationship between x and y. A value of r close to zero does not rule out a strong relationship of some other (nonlinear) form between x and y.

4. The total sum of squares
$$\text{SSTo} = (y_1 - \bar{y})^2 + (y_2 - \bar{y})^2 + \cdots + (y_n - \bar{y})^2 = \sum (y - \bar{y})^2 = \sum y^2 - \frac{(\sum y)^2}{n}$$
is interpreted as a measure of the total variation in y. The residual sum of squares (sometimes referred to as the error sum of squares)
$$\text{SSResid} = (y_1 - \hat{y}_1)^2 + (y_2 - \hat{y}_2)^2 + \cdots + (y_n - \hat{y}_n)^2 = \sum (y - \hat{y})^2 = \sum y^2 - a\sum y - b\sum xy$$
measures the amount of variation in y that cannot be attributed to the linear relationship between x and y.
The coefficient of determination
$$r^2 = 1 - \frac{\text{SSResid}}{\text{SSTo}}$$
gives the proportion of variation in y that can be attributed to an approximate linear relationship between x and y. Multiplying $r^2$ by 100 gives the percentage of y variation attributable to the approximate linear relationship; the closer this percentage is to 100%, the more successful the linear relationship is in explaining variation in y. The coefficient of determination equals the square of Pearson's sample correlation coefficient.
The standard deviation about the least squares line is
$$s_e = \sqrt{\frac{\text{SSResid}}{n - 2}}.$$
Roughly speaking, $s_e$ is the typical amount by which an observation deviates from the least squares line.

Chapter 6 Probability

5. Important concepts
The probability of an outcome, denoted by P(outcome), is interpreted as the long-run relative frequency of the outcome when the experiment is performed repeatedly under identical conditions.
Independent outcomes: Two outcomes are independent if the probability that one outcome occurs is not affected by knowledge of whether the other has occurred. More than two outcomes are independent if knowledge that some of the outcomes have occurred does not change the probabilities that any of the other outcomes occur.
Dependent outcomes: If the occurrence of one outcome changes the probability that the other outcome occurs, the outcomes are dependent.

6. Basic properties of probability
1) $0 \le P(\text{any outcome}) \le 1$.
2) (The addition rule) If no two of the outcomes $A_1, A_2, \ldots, A_k$ can occur simultaneously, then $P(A_1 \text{ or } A_2 \text{ or } \cdots \text{ or } A_k) = P(A_1) + P(A_2) + \cdots + P(A_k)$.
3) (The complement rule) The probability that an outcome A will not occur equals 1 minus the probability that it will occur: $P(\text{not } A) = 1 - P(A)$.
4) (The multiplication rule) If two outcomes $A_1$ and $A_2$ are independent, the probability that both occur is the product of the individual outcome probabilities: $P(A_1 \text{ and } A_2) = P(A_1)P(A_2)$. More generally, if k outcomes $A_1, \ldots, A_k$ are mutually independent, then $P(A_1 \text{ and } A_2 \text{ and } \cdots \text{ and } A_k) = P(A_1)P(A_2)\cdots P(A_k)$.

Chapter 7 Population Distribution

7. A population distribution is the distribution of all the values of a numerical variable or all the categories of a categorical variable. It provides important information about the population. The population distribution of a categorical variable or a discrete numerical variable can be summarized by a relative frequency histogram, whereas a density histogram is used to summarize the distribution of a continuous numerical variable. Further, a population distribution for a continuous variable is represented by a simple smooth curve that approximates the actual population distribution. Such a curve is called a continuous probability distribution (or a density curve).

8. Properties of continuous probability distributions
(1) The total area under the curve is equal to 1.
(2) The area under the curve and above any particular interval is interpreted as the probability of observing a value in that interval when an individual or object is selected at random from the population.
(3) For a continuous numerical variable x and any particular numbers a and b,
$$P(x \le a) = P(x < a), \qquad P(x \ge a) = P(x > a), \qquad P(a < x < b) = P(a \le x \le b).$$

9. Important discrete distributions
(i) Bernoulli distribution
x = 1 with probability (proportion) $\pi$, and x = 0 with probability $1 - \pi$.
Mean: $\mu = \pi$. Variance: $\sigma^2 = \pi(1 - \pi)$. Standard deviation: $\sigma = \sqrt{\pi(1 - \pi)}$.
(ii) Binomial distribution
$$P(X = x) = \binom{n}{x}\pi^x(1 - \pi)^{n - x}, \quad x = 0, 1, \ldots, n,$$
where $\binom{n}{x} = \frac{n!}{x!\,(n - x)!}$ and $m! = m(m - 1)\cdots 2 \cdot 1$ (read "m factorial"), with $0! = 1$.
Mean: $\mu = n\pi$. Variance: $\sigma^2 = n\pi(1 - \pi)$. Standard deviation: $\sigma = \sqrt{n\pi(1 - \pi)}$.

10. Important continuous distributions
1) Uniform distributions
A continuous distribution is called the uniform distribution on [a, b] if its density curve is
$$f(x) = \begin{cases} \dfrac{1}{b - a}, & a \le x \le b, \\ 0, & \text{otherwise.} \end{cases}$$
Mean: $\mu = (a + b)/2$. Variance: $\sigma^2 = (b - a)^2/12$. Standard deviation: $\sigma = (b - a)/\sqrt{12}$.
2) Normal distributions
The density curve of a normal distribution with mean $\mu$ and standard deviation $\sigma$ is
$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad -\infty < x < \infty,$$
where here $\pi \approx 3.14159$ is the mathematical constant.
Find probabilities
i) For the standard normal distribution, various probabilities can be found from Appendix Table 2 on pages 730-731.
ii) If x has a normal distribution with mean $\mu$ and standard deviation $\sigma$, probabilities related to x can be found by standardizing:
$$P(x < b) = P\left(\frac{x - \mu}{\sigma} < \frac{b - \mu}{\sigma}\right) = P\left(z < \frac{b - \mu}{\sigma}\right)$$
$$P(a < x) = P\left(\frac{a - \mu}{\sigma} < \frac{x - \mu}{\sigma}\right) = P\left(\frac{a - \mu}{\sigma} < z\right)$$
$$P(a < x < b) = P\left(\frac{a - \mu}{\sigma} < \frac{x - \mu}{\sigma} < \frac{b - \mu}{\sigma}\right) = P\left(\frac{a - \mu}{\sigma} < z < \frac{b - \mu}{\sigma}\right)$$
Identify extreme values
i) For the standard normal distribution, the three types of extreme values can be identified from Appendix Table 2.
ii) For a normal distribution with mean $\mu$ and standard deviation $\sigma$, first solve the corresponding problem for the standard normal distribution and then translate the answer into one for the normal distribution of interest using $x^* = \mu + z^*\sigma$.
Check for normality by a normal probability plot
A normal probability plot is a scatter plot of the (normal score, observation) pairs. A substantially linear pattern in a normal probability plot suggests that population normality is plausible; otherwise, normality is suspect.
3) t distributions
A continuous distribution is called the t distribution with d degrees of freedom if its density curve is
$$f(x) = \frac{\Gamma\left(\frac{d + 1}{2}\right)}{\Gamma\left(\frac{d}{2}\right)} \cdot \frac{1}{\sqrt{d\pi}} \cdot \left(1 + \frac{x^2}{d}\right)^{-(d + 1)/2}, \quad -\infty < x < \infty.$$
Mean: $\mu = 0$ (d > 1). Variance: $\sigma^2 = \frac{d}{d - 2}$ (d > 2). Standard deviation: $\sigma = \sqrt{\frac{d}{d - 2}}$ (d > 2).
Important properties of t distributions
1. The t curve corresponding to any fixed number of degrees of freedom is continuous, bell-shaped, symmetric, and centered at zero (just like the standard normal (z) curve).
2. Each t curve is more spread out than the z curve.
3. As the number of degrees of freedom increases, the spread of the corresponding t curve decreases.
4. As the number of degrees of freedom increases, the corresponding sequence of t curves approaches the z curve.
Probabilities related to a t distribution can be found from Appendix Table 4 on pages 733-735.

11. Important examples
Important examples in the notes: Examples 5.2, 5.4, 6.4, 6.7, 7.2, 7.5, 7.6, 7.7, 7.10.
Important exercises in the homework: Exercises 5.60, 6.13, 6.20, 7.25.
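For checking the Chapter 5 formulas (least squares line, Pearson's r, SSTo, SSResid, $r^2$ and $s_e$) numerically, here is a minimal sketch in Python 3 using only the standard library. The function names, the `strength` helper, and the five data points are hypothetical illustrations, not from the notes. The treatment of r values exactly at the 0.5 and 0.8 cutoffs is also an assumption, because the strength figure does not say which label applies at a boundary.

```python
import math
import statistics


def least_squares(xs, ys):
    """Intercept a and slope b of the least squares line y-hat = a + b*x."""
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    a = y_bar - b * x_bar  # forces the line through (x-bar, y-bar)
    return a, b


def pearson_r(xs, ys):
    """Pearson's r = sum(z_x * z_y) / (n - 1)."""
    n = len(xs)
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    s_x, s_y = statistics.stdev(xs), statistics.stdev(ys)  # sample SDs (n - 1 divisor)
    return sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y)
               for x, y in zip(xs, ys)) / (n - 1)


def fit_summary(xs, ys):
    """Residuals, SSTo, SSResid, r^2 = 1 - SSResid/SSTo and s_e for the fitted line."""
    a, b = least_squares(xs, ys)
    y_bar = statistics.mean(ys)
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    ss_to = sum((y - y_bar) ** 2 for y in ys)
    ss_resid = sum(e ** 2 for e in residuals)
    return {
        "a": a,
        "b": b,
        "residuals": residuals,  # the (x, residual) pairs form the residual plot
        "SSTo": ss_to,
        "SSResid": ss_resid,
        "r2": 1 - ss_resid / ss_to,
        "s_e": math.sqrt(ss_resid / (len(xs) - 2)),
    }


def strength(r):
    """Label r with the cutoffs 0.5 and 0.8 from the strength figure.

    Which label applies exactly at a cutoff is an assumption of this sketch.
    """
    if abs(r) >= 0.8:
        return "strong"
    if abs(r) > 0.5:
        return "moderate"
    return "weak"


# Hypothetical illustration data, not taken from the notes.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
summary = fit_summary(xs, ys)
r = pearson_r(xs, ys)
print(f"y-hat = {summary['a']:.2f} + {summary['b']:.2f}x")
print(f"r = {r:.3f} ({strength(r)}), r^2 = {summary['r2']:.3f}, s_e = {summary['s_e']:.3f}")
```

For these made-up data the hand calculation agrees with the shortcut formulas: $\sum xy = 66$, $\sum x^2 = 55$, $\sum y^2 = 86$, so b = 0.6, a = 2.2, SSTo = 6, SSResid = 2.4, $r^2 = 0.6$ and $s_e = \sqrt{0.8} \approx 0.894$. Also, $r = 6/\sqrt{60} \approx 0.775$, which falls in the moderate band, and the residuals sum to zero.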
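The Chapter 6 rules (complement, addition, multiplication, and dependence) can be checked exactly on a small equally likely sample space. The following Python sketch assumes two rolls of a fair die as the illustrative experiment (this example is not from the notes) and uses `fractions.Fraction` so that the rules hold exactly rather than approximately. The seeded simulation at the end illustrates the long-run relative frequency interpretation of probability. All names are hypothetical.

```python
import random
from fractions import Fraction
from itertools import product

# Sample space for two rolls of a fair die: 36 equally likely (first, second) pairs.
SPACE = list(product(range(1, 7), repeat=2))


def prob(event):
    """Exact probability of an event, given as a predicate on (first, second) pairs."""
    return Fraction(sum(1 for w in SPACE if event(w)), len(SPACE))


def first_is_six(w):
    return w[0] == 6


def second_is_even(w):
    return w[1] % 2 == 0


def total_is(k):
    """Event 'the two rolls add up to k'."""
    return lambda w: w[0] + w[1] == k


def total_at_least_ten(w):
    return w[0] + w[1] >= 10


# Complement rule: P(not A) = 1 - P(A).
p_not_six = prob(lambda w: not first_is_six(w))

# Addition rule: a total of 2 and a total of 3 cannot occur simultaneously.
p_two_or_three = prob(lambda w: total_is(2)(w) or total_is(3)(w))

# Multiplication rule: outcomes of the first and second roll are independent.
p_six_and_even = prob(lambda w: first_is_six(w) and second_is_even(w))

# Dependent outcomes: a first-roll six changes the chance that the total is at least 10.
p_six_and_high = prob(lambda w: first_is_six(w) and total_at_least_ten(w))


def relative_frequency_of_six(n_trials, seed=0):
    """Relative frequency of a six in n_trials simulated rolls (seeded for repeatability)."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_trials) if rng.randint(1, 6) == 6)
    return hits / n_trials


print(p_not_six, p_two_or_three, p_six_and_even, p_six_and_high)
print(prob(first_is_six) * prob(total_at_least_ten))
print(relative_frequency_of_six(100_000))
```

Here P(first roll is 6 and total is at least 10) = 1/12, while P(first roll is 6) times P(total is at least 10) = (1/6)(1/6) = 1/36, so those two outcomes are dependent. By contrast, P(first roll is 6 and second roll is even) = 1/12 = (1/6)(1/2), as the multiplication rule requires for independent outcomes. The simulated relative frequency of a six should land close to 1/6.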
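The Chapter 7 formulas can also be checked with Python 3.8+ standard-library tools, as in the following sketch. It assumes that `statistics.NormalDist` stands in for the Appendix Table 2 lookups: `cdf` gives left-tail areas and `inv_cdf` gives $z^*$. It also assumes that a plain midpoint-rule integral, truncated at $\pm 200$, is accurate enough to confirm that the t density has total area 1 and variance $d/(d - 2)$. The function names and the example parameters (n = 10, $\pi = 0.3$; $\mu = 100$, $\sigma = 15$; d = 5) are hypothetical.

```python
import math
from statistics import NormalDist


def binomial_pmf(x, n, pi):
    """P(X = x) for a binomial distribution with n trials and success probability pi."""
    return math.comb(n, x) * pi ** x * (1 - pi) ** (n - x)


def binomial_mean_var(n, pi):
    """Mean and variance computed directly from the pmf, to compare with n*pi and n*pi*(1 - pi)."""
    probs = [binomial_pmf(x, n, pi) for x in range(n + 1)]
    mean = sum(x * p for x, p in enumerate(probs))
    var = sum((x - mean) ** 2 * p for x, p in enumerate(probs))
    return mean, var


def uniform_prob(c, d, a, b):
    """P(c < x < d) for x uniform on [a, b]: overlap length times the height 1/(b - a)."""
    overlap = max(min(d, b) - max(c, a), 0)
    return overlap / (b - a)


def normal_prob_between(lo, hi, mu, sigma):
    """P(lo < x < hi) for a normal x, by standardizing to z = (x - mu) / sigma."""
    z = NormalDist()  # standard normal: mean 0, standard deviation 1
    return z.cdf((hi - mu) / sigma) - z.cdf((lo - mu) / sigma)


def normal_percentile(p, mu, sigma):
    """Value x* with proportion p below it, via x* = mu + z* * sigma."""
    z_star = NormalDist().inv_cdf(p)
    return mu + z_star * sigma


def t_pdf(x, d):
    """Density of the t distribution with d degrees of freedom (log-gamma avoids overflow)."""
    log_c = math.lgamma((d + 1) / 2) - math.lgamma(d / 2) - 0.5 * math.log(d * math.pi)
    return math.exp(log_c) * (1 + x * x / d) ** (-(d + 1) / 2)


def integrate(f, lo, hi, steps=40_000):
    """Midpoint-rule approximation of the area under f between lo and hi."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


mean, var = binomial_mean_var(10, 0.3)  # compare with 10*0.3 and 10*0.3*0.7
p_within_one_sd = normal_prob_between(85, 115, 100, 15)
x_star = normal_percentile(0.95, 100, 15)
area_t5 = integrate(lambda x: t_pdf(x, 5), -200, 200)  # total area, near 1
var_t5 = integrate(lambda x: x * x * t_pdf(x, 5), -200, 200)  # near 5 / (5 - 2)
print(mean, var, p_within_one_sd, x_star, area_t5, var_t5)
```

The Bernoulli distribution is the case n = 1 of `binomial_mean_var`, which returns mean $\pi$ and variance $\pi(1 - \pi)$. The normal example reduces to $P(-1 < z < 1) \approx 0.6827$. The t checks also illustrate two of the listed properties: the t curve with 5 degrees of freedom has heavier tails than the z curve, and with very many degrees of freedom its density is nearly indistinguishable from the z density.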