Vectors and Matrices


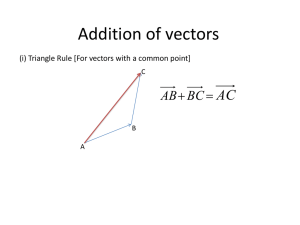

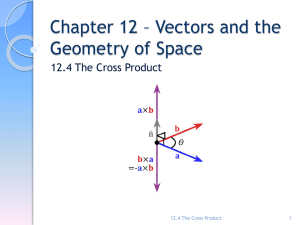

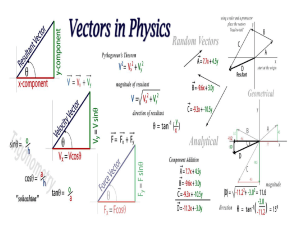

Johns Hopkins University
What is Engineering?
M. Karweit
5/2/02
Vector and matrix mathematics
There are many branches of mathematics. Each focuses on a method of representation and manipulation to address a particular set of problems. In science and engineering, almost all of them are important. You’ve heard of statistics, which deals with problems of uncertainty, and differential equations, which describe the rates of change of physical systems. In this section you will learn about two more: vector analysis and matrix algebra. We discuss the two subjects together because they share some of the same terminology and operations.
Vector analysis is the mathematics of representing physical variables in terms of coordinate systems. If we say that the sky is falling, we are implicitly defining a coordinate system; at the least, we’re defining which way is down. When we speak of velocity or acceleration or position, these quantities are almost always prescribed with respect to some frame of reference, i.e., a coordinate system. Vector analysis gives us the tools to prescribe and manipulate such variables with respect to a frame of reference. Vector analysis is part of a broader discipline called tensor analysis.
Matrix algebra is the mathematics of ordered sets, or arrays, of data and variables. These items could be engineering measurements, the coefficients of a set of algebraic equations, or the scores that students received on the last physics test. Matrix algebra defines how such information can be represented and operated upon with very efficient notation, but it does not necessarily deal with coordinate systems.
First, we have to explain what a vector is and what a matrix is. In general, a vector is nothing more than a one-dimensional, ordered array of quantities denoted by a single name, say, $\underline{t}$. Note the underscore: it is one way to distinguish a vector variable from a simple variable. The elements within the array are identified by subscripts. Thus, $t_3$ is the third element in the array $\underline{t}$. (Here we don’t need the underscore, because the subscript already implies an array.) $\underline{t}$ may be an array of hourly temperatures, or exam grades, or the dates of the past ten Easter Sundays. Using the notation of vectors is simply a convenient way of referring to information.
That’s the simple explanation of a vector. However, when vectors are used to represent quantities that have direction or position in physical space, they have characteristics that can be manipulated and interpreted with a mathematics called vector analysis. In this case, vectors describe the direction along which the variable acts. Since physical space has three independent dimensions, these vectors are typically only three elements long, each element corresponding to a coordinate direction. We’ll get more into this later. For now, just be aware that the term “vector” is used in several different ways.
The same thing is true of matrices. A matrix is a two-dimensional array of quantities denoted by a single name, say, T, whose elements are identified by two subscripts. $T_{1,3}$, for example, might be the temperature in the third hour of the first day of the month; the subscripts identify the row and the column in which the information resides. A spreadsheet is a good example of a matrix: it’s a way to organize data.
Just like vectors, matrices can also be used to prescribe variables associated with coordinate systems. For example, they can define how a vector in one coordinate system would be represented in another coordinate system. We’ll see in our discussion of robotics that it is convenient to attach a separate coordinate system to each segment of, say, a three-segment robotic arm. So, if you refer to a point with respect to segment #3, where is that point with respect to segment #2, or with respect to a “world” coordinate system? That’s where matrices come in.
There’s another important application. The coordinate system need not refer to physical space; it could be a “parameter” space. For example, if you had five algebraic equations in five unknowns v, w, x, y, z, you would be looking for a solution, a single point in v-w-x-y-z “space”, that satisfies all five equations. That set of algebraic equations can be represented by a matrix equation and solved using matrix algebra. So matrices are not only spreadsheets; they’re much more to the engineer.
The important point to remember right now is that the words “vector” and “matrix” can be used in several different ways. Let’s now see how vectors and matrices are actually used in science and engineering.
Vector analysis
Attributes of physical variables
Think about some physical variables: say, mass, velocity, speed, pressure, position. Are they all qualitatively the same? No. Some of them, like mass and pressure, can be specified with only a single value, a magnitude: 20 kg or 150 p.s.i. Others, like velocity and position, require you to specify how the variable relates to a frame of reference, a coordinate system. These variables are said to have magnitude and direction. Velocity and speed are often used interchangeably, but they are entirely different kinds of variables. Speed tells how fast; velocity tells how fast and in what direction.
Physical variables fall into three classes: scalars, vectors, and tensors. All three are denoted differently and have quite different characteristics.
Scalars are variables whose value can be expressed purely as a magnitude. Mass, pressure, density, and distance are all scalars, and they’re represented mathematically by symbols like m, p, ρ, d.
Vectors, as we described above, are linearly ordered arrays of quantities denoted by a single name. Here we want to consider how they’re used in reference to a coordinate system.
Consider the variables velocity, acceleration, and force. Each of these requires a prescription of strength, or magnitude, and also of the direction in which it’s acting. That’s the concept of a physical vector. Variables such as pressure and mass require only a prescription of magnitude: the pressure in the vessel is 140 p.s.i. Pressure is a scalar.
Vectors convey magnitude and direction by giving the projection of the vector variable onto each of the coordinate directions. The force vector $\mathbf{F} = (3, 4, 5)$ defines a force that acts 3 newtons in the x-direction, 4 newtons in the y-direction, and 5 newtons in the z-direction.
There are several different notations for vectors: $\mathbf{F}$, $\underline{a}$, $u_i$. The boldface $\mathbf{F}$ and the underlined $\underline{a}$ are exactly equivalent. Each represents a triplet of values, one for the projection of the variable onto each of the coordinate directions. The subscripted $u_i$ also represents a vector, but is slightly more specific. It suggests that there are three coordinate directions, 1, 2, 3 (corresponding to x, y, z); $u_i$ denotes the component of the vector $\mathbf{u}$ (or $\underline{u}$) in the $i$th direction. Or we can be more specific and write $u_2$ for the component of the $\mathbf{u}$ vector in the “2”, or y, direction. When we refer to variables that are vector quantities, we simply say “force vector”, “displacement vector”, or “velocity vector”.
Tensors are variables whose values are matrices or collections of vectors and that require several subscripts for their specification. An example is $\varepsilon_{ij}$, the strain tensor, which describes the deformation of a material. Because materials are composed of an organized collection of atoms, a deformation in one direction, say the x-direction, may be accompanied by deformations in both the y- and z-directions as well. And a deformation in the y-direction could produce yet different deformations in the x- and z-directions. To characterize this behavior we need three values for each of three directions, so we describe it in terms of the tensor $\varepsilon_{ij}$.
This tensor requires 3 x 3 = 9 values to define it, one for each possible combination of i and j. (Notice that this tensor is just a particular form of a matrix.) Tensors can get arbitrarily complex because they can have many more subscripts. An example is the elasticity (stiffness) tensor $C_{ijkl}$, which requires 3 x 3 x 3 x 3 = 81 values for its prescription. It describes the relationship between the stress tensor $\sigma_{ij}$ and the strain tensor $\varepsilon_{ij}$. A tensor with two subscripts is called a second-order tensor. (Guess what a tensor with four subscripts is called!) In fact, one can view all physical variables as tensors: a vector, with one subscript, is a first-order tensor, and a scalar, with no subscripts, is a zeroth-order tensor. We will not use tensors at all in this course, and since they can get complicated very quickly, we will not discuss them further. We introduce them only for completeness.
Vectors
Back to vectors. . .
Vectors are extremely important in science. Not only are they necessary for describing physical variables, but they are also necessary for describing coordinate systems, the framework within which physical things are described.
So, what is a physical vector? What are its properties? There are four:
1) It has magnitude.
2) It has direction.
3) It acts along the line of its direction.
4) It has no fixed origin.
The best way to visualize this is with an arrow. The length of the arrow represents the magnitude of the vector; the orientation of the arrow with respect to a coordinate system represents the direction of the vector. Suppose you are in a room (a coordinate system) and you’re physically holding an arrow in some position. You can walk around the room holding the arrow in the same orientation with respect to the room, and it would still be the same vector. Finally, if the arrow represented, say, velocity, the velocity would be in the direction of the arrow.
Although we can talk about vectors without referring to a coordinate system, we almost always define and use vectors within a coordinate system. So let’s discuss coordinate systems a little. You are all familiar with the Cartesian coordinate system x, y, z. The axes are mutually orthogonal, which means that they are at 90° angles to one another. Any point in space can be defined in terms of its distance along each of the three axes. That’s the idea of a coordinate system: to provide a frame of reference from which to measure and define things.
Let’s begin with a two-dimensional example because it’s easier to sketch. We then have simply an x, y coordinate system. Suppose we begin with a vector V given in terms of its magnitude |V| and its direction θ, the angle it makes with the x-axis. This vector can be represented in terms of its x, y components by geometrically projecting the length of the vector onto each of the two axes:
[Figure: in Cartesian coordinates, the vector V drawn at angle θ to the x-axis, with its projection onto the x-direction marked.]
$$V_x = |V|\cos\theta, \qquad V_y = |V|\sin\theta$$
So the components, or projections, of V onto the x and y axes are the magnitudes $V_x$ and $V_y$. But this is a little awkward to deal with mathematically. There’s a more convenient way.
Since each of the axes of a coordinate system specifies a direction, each axis could itself be represented as a vector. It turns out that any vector U can be completely represented as (or decomposed into) a sum of vectors in any three independent directions. This means that a vector U can be expressed directly in terms of vectors representing the coordinate directions. In a Cartesian coordinate system these vectors are denoted by the unit vectors i, j, k. (A unit vector has magnitude one.) Then U might be represented as $a\mathbf{i} + b\mathbf{j} + c\mathbf{k}$, where a, b, c are the magnitudes of U in the i, j, k directions. The magnitude of U is
$$|U| = \sqrt{a^2 + b^2 + c^2}.$$
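The projection formulas and the magnitude formula above can be sketched in Python with only the standard library. This is a minimal illustration, not part of the original notes: the function names `components_2d` and `magnitude` are hypothetical, and the angle θ is assumed to be given in degrees, measured from the x-axis.

```python
import math

def components_2d(length, theta_deg):
    """Project a 2-D vector of magnitude |V| at angle theta (degrees, from the
    x-axis) onto the axes: Vx = |V| cos(theta), Vy = |V| sin(theta)."""
    theta = math.radians(theta_deg)
    return length * math.cos(theta), length * math.sin(theta)

def magnitude(*components):
    """Magnitude from Cartesian components, e.g. |U| = sqrt(a^2 + b^2 + c^2)."""
    return math.sqrt(sum(c * c for c in components))

# A hypothetical vector V with |V| = 10 at 30 degrees from the x-axis.
vx, vy = components_2d(10.0, 30.0)
print(f"Vx = {vx:.3f}, Vy = {vy:.3f}")    # Vx = 8.660, Vy = 5.000

# Going back from the components to the magnitude recovers |V|.
print(f"|V| = {magnitude(vx, vy):.3f}")   # |V| = 10.000

# Three components, e.g. a = 3, b = 4, c = 5 for U = 3i + 4j + 5k.
print(f"|U| = {magnitude(3, 4, 5):.3f}")  # |U| = 7.071
```

The same `magnitude` function works for two or three components, which mirrors how the two-dimensional example extends to three dimensions.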
In the example above, V can now be described in terms of component vectors as $V = V_x\mathbf{i} + V_y\mathbf{j}$. The magnitudes $V_x$ and $V_y$ scale the unit vectors i, j to represent V.
What can we do with vectors? For one, we can add them and subtract them. Since vectors prescribe magnitude and direction, their addition and subtraction are geometric. That is, to add two vectors A and B, move one of the vectors, say B (but keep its orientation), so that its tail is at the head of A. The arrow from the tail of A to the head of B is a new vector $C = A + B$. Subtraction is similar, except that to get $A = C - B$ one moves B with respect to C so that their heads (or tails) come together. Graphically, here’s what it looks like:
[Figure: two vector triangles built from A, B, and C; one labeled C = A + B, the other labeled A = C − B.]
Notice that both of these figures look exactly the same. That’s because the vector operations of addition and subtraction behave just like algebra: $C = A + B$ is exactly the same equation as $A = C - B$. Notice above we said that for subtracting B from C, either their heads or their tails should be brought together. Sketch this to convince yourself that you get the same answer. These diagrams are presented in only two dimensions, but the same rules apply in three dimensions.
There’s another way to add two vectors A and B together, and that’s to consider their individual components. You simply add the corresponding components. So, if
$$A = a_1\mathbf{i} + a_2\mathbf{j} + a_3\mathbf{k}, \qquad B = b_1\mathbf{i} + b_2\mathbf{j} + b_3\mathbf{k},$$
then
$$C = A + B = (a_1 + b_1)\mathbf{i} + (a_2 + b_2)\mathbf{j} + (a_3 + b_3)\mathbf{k}.$$
You should look carefully at both the graphical method and the component method to understand why they give the same result. In practice, sometimes one method is more convenient, sometimes the other.
Let’s look at a few more examples.
[Figure: two diagrams, each built from five vectors A, B, C, D, E, and each asking F = A + B + C + D + E = ?]
In the first example, what’s F?
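The component method for $C = A + B$ (and $A = C - B$) can be sketched in Python as follows. It is a minimal illustration under stated assumptions: the helper names `add` and `sub` and the sample components are hypothetical, and vectors are stored as tuples of components. Giving A through E components of your own choosing is one way to check your answers to the two examples.

```python
def add(a, b):
    """C = A + B: add corresponding components, (a1 + b1, a2 + b2, a3 + b3)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    return tuple(x + y for x, y in zip(a, b))

def sub(c, b):
    """A = C - B: subtract corresponding components."""
    if len(c) != len(b):
        raise ValueError("vectors must have the same number of components")
    return tuple(x - y for x, y in zip(c, b))

# Hypothetical components (a1, a2, a3) and (b1, b2, b3).
A = (1.0, 2.0, 0.0)
B = (3.0, -1.0, 2.0)

C = add(A, B)
print(C)               # (4.0, 1.0, 2.0)

# C = A + B and A = C - B are the same equation.
print(sub(C, B) == A)  # True
```

The length check matters because Python’s `zip` silently stops at the shorter input, which would hide a mismatched vector.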
Remember, the resulting vector is the oriented line from the tail of the first vector to the head of the last vector. What about example two? This one you should be able to figure out in parts.
Multiplication and division of one vector by another, in the ordinary algebraic sense, are not defined. However, two other vector operations are: the vector dot product $A \cdot B$ and the vector cross product $A \times B$. Since neither of these will be used in this course, we will not discuss them further; just be aware that they exist. If you’re curious, their definitions can be found in any elementary book on vectors.
The answers: in the first example, F = 0, because the head of E returns to the tail of A, so the distance between them is zero. In the second, F = E: A and B are the negatives of one another, so together they contribute zero, and the same is true for C and D. That leaves E.
That’s enough of vector analysis. The important thing to remember is that vectors can always be decomposed into three component vectors, usually in the directions of the axes of the coordinate system. Now let’s turn to the other branch of mathematics that deals with vectors: matrix algebra.
Matrix algebra
Science and engineering often entail sets of equations and coordinate-system transformations. If one had to write out each individual element in a derivation or a presentation, discussions would be tediously long, and the essential ideas could be swamped by details. To present these ideas more practically we can use the more compact notation of vectors and matrices. And with this compact notation we can perform mathematical operations using matrix algebra.
First, what is a vector in matrix algebra? This will be a slightly different definition from before. A vector is an ordered list of elements. There need not be any reference to a physical coordinate system. The list could be 10 pieces of recorded data, the 15 unknowns in a set of 15 simultaneous equations, or the 4 coefficients of an equation.
What is important is that the list is in some order, and the elements of the list can be identified by their location within the list. In each of these cases we can represent the information by a vector name, say a, and an index. Then the vector, or array of information, might be a = (1, 3, 5, 2). So the third piece of recorded data could be identified as $a_3$ (here, $a_3 = 5$), and any piece of data could be referred to as $a_i$.
No one will misunderstand us if we identify an array or vector as $a_i$. But in matrix algebra, if we want to perform mathematical operations, we must be more specific and distinguish between two types of vectors: column vectors and row vectors. Either type could contain our list of data, so it might seem unnecessary to have two types to hold the same information. But later we will define some rules of matrix algebra that require us to distinguish between row and column vectors. Using the array a above, a would be represented as a column vector
$$\mathbf{a} = \begin{pmatrix} 1 \\ 3 \\ 5 \\ 2 \end{pmatrix}.$$
As a row vector, a would be represented as $\mathbf{a}^T = (1\ \ 3\ \ 5\ \ 2)$. Notice the “transpose” sign, the superscript T. It means that the rows in vector a become the columns in vector $\mathbf{a}^T$. So we can change the representation of an array a from a column vector a to a row vector $\mathbf{a}^T$ just by adding the superscript T.
Now, a matrix. Sometimes we will need to represent a two-dimensional array of information, for example, the 4 coefficients of each equation in a set of 4 simultaneous equations. A two-dimensional array is called a matrix. Just as with a vector, we give it a name, say C. For matrices, we’ll use upper-case, bold letters. (However, sometimes you will also see the notation $\underline{\underline{C}}$.
Just as a vector might have a single underline, a matrix could be identified with a double underline.)
And, like a tensor, since a matrix holds a two-dimensional array of values rather than a linear array, an individual entry is identified using two subscripts: the first designates the row, the second the column. So $C_{3,4}$ would be the element in row 3, column 4. An example of C might be
$$\mathbf{C} = \begin{pmatrix} 1 & 3 & 6 & 4 \\ 5 & 4 & 4 & 9 \\ 8 & 4 & 2 & 3 \\ 2 & 1 & 8 & 2 \end{pmatrix}.$$
Here, $C_{3,4} = 3$.
A matrix need not be “square”, i.e., have the same number of rows as columns. It can be 4 x 2, or 200 x 3, or even 100 x 1, but then it would be a matrix representation of a column vector. Sometimes a column vector is called a column matrix, just to remind us that a vector is a special case of a matrix. Note that a matrix is similar to a second-order tensor. The difference is that a matrix can refer to almost any information, whereas a tensor refers only to information relative to a coordinate system. We identify the size of a matrix with a phrase such as “a matrix of order 3 x 4”.
Matrices can also be transposed. This means that the rows are interchanged with the columns and vice versa; it is the same operation that can be carried out with vectors. For example, if
$$\mathbf{A} = \begin{pmatrix} 2 & 1 & 9 \\ 3 & 4 & 6 \end{pmatrix}, \quad\text{then}\quad \mathbf{A}^T = \begin{pmatrix} 2 & 3 \\ 1 & 4 \\ 9 & 6 \end{pmatrix}.$$
Certain matrices are given special names. Several of the more important ones are:
1) square matrix: the number of rows equals the number of columns, e.g.,
$$\mathbf{A} = \begin{pmatrix} 2 & 1 & 2 \\ 5 & 2 & 6 \\ 7 & 3 & 6 \end{pmatrix} \quad (3 \text{ rows}, 3 \text{ columns})$$
2) symmetric matrix: a matrix in which $A_{i,j} = A_{j,i}$, e.g.,
$$\mathbf{A} = \begin{pmatrix} 2 & 1 & 4 \\ 1 & 0 & 3 \\ 4 & 3 & 3 \end{pmatrix}$$
3) diagonal matrix: a matrix whose only non-zero elements lie along the main diagonal, i.e., $A_{i,j} = 0$ for $i \neq j$. The main diagonal consists of the elements $A_{i,i}$. E.g.,
$$\mathbf{A} = \begin{pmatrix} 2 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 4 \end{pmatrix}$$
4) unit, or identity, matrix: a diagonal matrix with 1s down the main diagonal,
$$\mathbf{I} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$
The letter I is always reserved for the identity matrix.
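The definitions above (column and row vectors, the transpose, two-subscript indexing, and the special matrices) can be sketched in Python, with each matrix stored as a list of rows. This is a minimal, hypothetical illustration using only the standard library; the helper names are made up for the example. Python counts from 0, so the helper `entry` converts the text’s 1-based subscripts: $C_{3,4}$ is `C[2][3]`.

```python
def transpose(m):
    """Swap rows and columns: entry (i, j) moves to (j, i)."""
    return [list(col) for col in zip(*m)]

def entry(m, i, j):
    """1-based entry m_{i,j} (row i, column j); Python itself counts from 0."""
    return m[i - 1][j - 1]

def is_square(m):
    return all(len(row) == len(m) for row in m)

def is_symmetric(m):
    return is_square(m) and m == transpose(m)

def is_diagonal(m):
    n = len(m)
    return is_square(m) and all(m[i][j] == 0
                                for i in range(n) for j in range(n) if i != j)

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

# The array a = (1, 3, 5, 2) as a 4 x 1 column vector, and its transpose.
a = [[1], [3], [5], [2]]
print(transpose(a))        # [[1, 3, 5, 2]]
print(entry(a, 3, 1))      # a_3 = 5

C = [[1, 3, 6, 4], [5, 4, 4, 9], [8, 4, 2, 3], [2, 1, 8, 2]]
print(entry(C, 3, 4))      # C_{3,4} = 3

A = [[2, 1, 9], [3, 4, 6]]
print(transpose(A))        # [[2, 3], [1, 4], [9, 6]]

print(is_symmetric([[2, 1, 4], [1, 0, 3], [4, 3, 3]]))  # True
print(is_diagonal([[2, 0, 0], [0, 1, 0], [0, 0, 4]]))   # True
print(identity(3))         # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Transposing twice returns the original matrix, which is one quick way to see that $\mathbf{a}^T$ and $\mathbf{a}$ hold the same information.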
The one other concept we need for matrix algebra is the “scalar”, identical to that in vector analysis. It is just a simple number or magnitude, like 3. In fact, all these representations of information (two-dimensional arrays, one-dimensional arrays, and simple numbers) can be considered different levels of matrices. A two-dimensional array is a second-order matrix, i.e., it requires two subscripts to identify a particular value; a one-dimensional array is a first-order matrix; and a scalar is a zeroth-order matrix. Sound familiar?
Unfortunately, the word “order” is used in many different ways in mathematics. “An ordered set of elements” refers to the organization of information in an array. “A 2nd-order matrix” is one that requires two subscripts for specification. “A matrix of order 5 x 3” is a matrix with 5 rows and 3 columns. “A 3rd-order polynomial” has four terms (sic). You get used to it. . .
Now we need a set of rules for how to carry out mathematics with scalars, vectors, and matrices.
Equality:
1. a = b if and only if a and b are the same length and the corresponding elements are identical, i.e., $a_i = b_i$. The same rule applies to row vectors: $\mathbf{a}^T = \mathbf{c}^T$ if and only if $a_i = c_i$.
2. D = F if and only if D and F are the same size and the corresponding elements are identical, i.e., $D_{i,j} = F_{i,j}$. For example, if
$$\mathbf{A} = \begin{pmatrix} 1 & 4 \\ 3 & 2 \end{pmatrix} \quad\text{and}\quad \mathbf{B} = \begin{pmatrix} 1 & 4 \\ 3 & 2 \end{pmatrix},$$
then A = B.
Matrix operations:
1. Addition, subtraction. These operations are carried out on corresponding elements of like vectors/matrices, i.e., vectors of the same length or matrices of the same size. So,
$$\mathbf{c} = \mathbf{a} + \mathbf{b} \iff c_i = a_i + b_i$$
$$\mathbf{c}^T = \mathbf{a}^T - \mathbf{b}^T \iff c_i = a_i - b_i$$
$$\mathbf{G} = \mathbf{D} + \mathbf{F} \iff G_{i,j} = D_{i,j} + F_{i,j}$$
2. Multiplication. To obtain the product G = D F, the number of columns in D (the first matrix) must equal the number of rows in F (the second matrix). If D is of order M x N and F is of order N x P, then G will be of order M x P:
$$\mathbf{D}_{(M \times N)}\,\mathbf{F}_{(N \times P)} = \mathbf{G}_{(M \times P)}$$
Note: multiplication does not commute. That is, F D will not, in general, give the same result as D F.
In fact, F D may not even be a permissible operation.
Multiplication is defined by the operation
$$G_{i,j} = \sum_{k=1}^{N} D_{i,k}\,F_{k,j}.$$
We can also represent this operation as follows:
$$\begin{pmatrix} D_{1,1} & D_{1,2} & D_{1,3} \\ D_{2,1} & D_{2,2} & D_{2,3} \\ D_{3,1} & D_{3,2} & D_{3,3} \end{pmatrix} \begin{pmatrix} F_{1,1} & F_{1,2} \\ F_{2,1} & F_{2,2} \\ F_{3,1} & F_{3,2} \end{pmatrix}: \qquad G_{2,2} = D_{2,1}F_{1,2} + D_{2,2}F_{2,2} + D_{2,3}F_{3,2}$$
That is, $G_{2,2}$ is formed from row 2 of D and column 2 of F. To obtain all the elements of G, we must carry out this process for each combination of a row of D with a column of F. In this case, the result G will be of order 3 x 2. Note that in this example F D is not a defined calculation, because the number of columns in F (2) does not equal the number of rows in D (3).
The multiplication operation is valid for vectors as well. A row vector can be construed as a matrix of order 1 x N; a column vector can be construed as a matrix of order M x 1. So matrix/vector multiplication is defined, provided the number of columns in the first matrix/vector equals the number of rows in the second matrix/vector. Some examples: let a be a 3 x 1 column vector, let b be a 4 x 1 column vector, and let D be a 4 x 3 matrix. Then the following multiplication operations are valid:
$\mathbf{D}\mathbf{a} = \mathbf{c}$, a 4 x 1 column vector
$\mathbf{b}^T\mathbf{D} = \mathbf{e}^T$, a 1 x 3 row vector
$\mathbf{a}\mathbf{a}^T = \mathbf{E}$, a 3 x 3 matrix
$\mathbf{b}^T\mathbf{b} = s$, a 1 x 1 matrix, i.e., a scalar
Multiplication of a scalar times a matrix/vector is also possible. If s is a scalar, then $(s\mathbf{D})_{i,j} = s\,D_{i,j}$ for all i and j, i.e., every element of the matrix is multiplied by s. The same rule applies to vectors.
3. Inverse. If a matrix D is square, then it may have an inverse $\mathbf{D}^{-1}$. D will have an inverse if the “determinant” of D is nonzero, $|\mathbf{D}| \neq 0$. The inverse is defined as follows: suppose there is a square matrix F such that F D = I, the identity matrix. Then F is said to be the inverse of D, written $\mathbf{D}^{-1}$. If matrices were simple variables, we would write $\frac{1}{D}D = 1$. But, since matrix division does not exist, we write $\mathbf{D}^{-1}\mathbf{D} = \mathbf{I}$, using $\mathbf{D}^{-1}$ rather than $\frac{1}{\mathbf{D}}$ to represent the “inverse”, or reciprocal, of D.
4. Determinant.
Its definition is beyond the scope of this discussion, but we can offer an approximate meaning. First, determinants apply only to square matrices. If the rows (or the columns) of a matrix D are thought of as a collection of vectors, then the determinant is, roughly, an indicator of how independent those vectors are: the more nearly they point in the same direction, the closer to zero the determinant tends to be. If two rows are identical, or if one is just a constant multiple of another, then the determinant is exactly zero. The determinant of D is written |D| and is a single value. How the determinant is actually calculated will not be presented. We introduce it here only because we want to refer to the inverse matrix, say $\mathbf{D}^{-1}$, and $\mathbf{D}^{-1}$ does not exist if |D| = 0. In the next section, we will relate the determinant to systems of linear equations.
5. Matrix operations on equations. Suppose we have the matrix equation Ax = b, where A is 3 x 3, x is 3 x 1, and b is 3 x 1. That is, each side reduces to a 3 x 1 matrix (or vector). To keep this equation valid, any operation we carry out on the left-hand side of the equation we must also carry out on the right-hand side, no different than in regular algebra. However, in matrix algebra multiplication is not commutative. So we can’t say “multiply both sides of the equation by the 3 x 3 matrix D.” We must be more specific. We must say “premultiply both sides of the equation. . .” or “post-multiply both sides of the equation. . .” These would lead, respectively, to DAx = Db and AxD = bD. (In this particular case only premultiplication is permissible: Ax and b are 3 x 1, and a 3 x 1 matrix cannot be post-multiplied by a 3 x 3 matrix.)
This is pretty heavy stuff, and we’ve given almost no examples. How is all of this matrix notation and its associated mathematics useful to us? In this course, we will use it primarily to represent systems of equations.
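Since the rules above come with few examples, here is a small Python sketch of them, with matrices stored as lists of rows. It assumes hypothetical values: a is a 3-element column vector (3 x 1), b a 4-element column vector (4 x 1), and D a matrix with 4 rows and 3 columns, shapes for which the products Da, bᵀD, aaᵀ and bᵀb are all defined. The text deliberately does not show how a determinant is computed; `det` below swaps in cofactor (Laplace) expansion, one standard method that is fine for small matrices but becomes very slow for large ones. All function names are hypothetical.

```python
def shape(m):
    """(rows, columns) of a non-empty rectangular matrix stored as a list of rows."""
    return len(m), len(m[0])

def transpose(m):
    """Rows become columns: entry (i, j) moves to (j, i)."""
    return [list(col) for col in zip(*m)]

def add(d, f):
    """G = D + F element by element; D and F must be the same size."""
    if shape(d) != shape(f):
        raise ValueError("addition needs matrices of the same size")
    return [[x + y for x, y in zip(rd, rf)] for rd, rf in zip(d, f)]

def scale(s, d):
    """s D: every element of D multiplied by the scalar s."""
    return [[s * x for x in row] for row in d]

def matmul(d, f):
    """G = D F with G[i][j] = sum over k of D[i][k] * F[k][j]."""
    m, n = shape(d)
    n2, p = shape(f)
    if n != n2:
        raise ValueError(f"cannot multiply {m}x{n} by {n2}x{p}")
    return [[sum(d[i][k] * f[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]

def det(m):
    """Determinant by cofactor (Laplace) expansion along the first row."""
    n, cols = shape(m)
    if n != cols:
        raise ValueError("determinants apply only to square matrices")
    if n == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(n))

# Equality: same size and identical elements (== on nested lists checks both).
print([[1, 4], [3, 2]] == [[1, 4], [3, 2]])      # True

# Addition and scalar multiplication, element by element.
print(add([[1, 4], [3, 2]], [[0, 1], [2, -1]]))  # [[1, 5], [5, 1]]
print(scale(2, [[1, 4], [3, 2]]))                # [[2, 8], [6, 4]]

# Hypothetical a (3 x 1), b (4 x 1), D (4 x 3).
a = [[1], [2], [3]]
b = [[1], [0], [2], [1]]
D = [[1, 0, 2], [0, 1, 1], [2, 1, 0], [1, 1, 1]]
c = matmul(D, a)                # 4 x 1 column vector
eT = matmul(transpose(b), D)    # 1 x 3 row vector
E = matmul(a, transpose(a))     # 3 x 3 matrix
s = matmul(transpose(b), b)     # 1 x 1 matrix, i.e., a scalar
print(c, eT, s)                 # [[7], [5], [4], [6]] [[6, 3, 3]] [[6]]

# a D is not defined: a has 1 column but D has 4 rows.
try:
    matmul(a, D)
except ValueError as err:
    print(err)                  # cannot multiply 3x1 by 4x3

# Multiplication does not commute, even for square matrices.
P, Q = [[1, 2], [0, 1]], [[1, 0], [3, 1]]
print(matmul(P, Q), matmul(Q, P))  # [[7, 2], [3, 1]] [[1, 2], [3, 7]]

# Identical rows, or one row a multiple of another, give a zero determinant.
print(det([[1, 2, 3], [1, 2, 3], [4, 5, 6]]))  # 0
print(det([[1, 2, 3], [2, 4, 6], [4, 5, 7]]))  # 0
```

The shape check in `matmul` is exactly the rule “columns of the first must equal rows of the second”; everything else follows from the summation formula for $G_{i,j}$.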
Consider the following set of simultaneous linear algebraic equations, where X, Y, Z have nothing to do with coordinate systems:
$$\begin{aligned} 3X + 5Y + 7Z &= 15 \\ 2X - 4Y - 3Z &= 9 \\ X + 2Y + Z &= 1 \end{aligned}$$
Now, consider the following matrix and vectors:
$$\mathbf{A} = \begin{pmatrix} 3 & 5 & 7 \\ 2 & -4 & -3 \\ 1 & 2 & 1 \end{pmatrix}, \qquad \mathbf{x} = \begin{pmatrix} X \\ Y \\ Z \end{pmatrix}, \qquad \mathbf{b} = \begin{pmatrix} 15 \\ 9 \\ 1 \end{pmatrix}.$$
Then the matrix equation Ax = b is exactly equivalent to the three simultaneous equations above. A is the coefficient matrix, x is the vector of unknowns, and b is the right-hand-side vector. (With all of the specialized vocabulary in mathematics, one would have thought that a better term would have been available to describe the right-hand side of equations.)
Each side of Ax = b is a 3 x 1 column vector. Since the two sides are equal, i.e., the two column vectors are equal, each corresponding element of the two vectors must be equal. Row one of the left-hand side is 3X + 5Y + 7Z, and row one of the right-hand side is 15. But that is the statement that 3X + 5Y + 7Z = 15, the first of the three equations. Rows two and three produce the remaining two equations.
So now we have a way to represent a system of equations. How do we solve it? Using the matrix representation, we can presume that A has an inverse $\mathbf{A}^{-1}$. This will be true if $|\mathbf{A}| \neq 0$, and |A| will be nonzero if the three equations are independent, i.e., if none of the equations is a linear combination of the others. Now, let’s premultiply each side of our equation by $\mathbf{A}^{-1}$:
$$\mathbf{A}\mathbf{x} = \mathbf{b} \;\Rightarrow\; \mathbf{A}^{-1}\mathbf{A}\mathbf{x} = \mathbf{A}^{-1}\mathbf{b}.$$
But, since $\mathbf{A}^{-1}\mathbf{A} = \mathbf{I}$, we have
$$\mathbf{I}\mathbf{x} = \mathbf{A}^{-1}\mathbf{b} \;\Rightarrow\; \mathbf{x} = \mathbf{A}^{-1}\mathbf{b}.$$
We have solved for x, the vector of unknowns: its solution is the product of the inverse of the coefficient matrix A and the right-hand-side vector b.
There is much more to matrix algebra than what we have presented here.
But, even knowing only these few elements, we’ll be able to use matrix algebra as a convenient tool for representing and solving many engineering problems.
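As a check on x = A⁻¹b for the three simultaneous equations in X, Y, Z above, here is a minimal Python sketch. The text does not say how A⁻¹ is found; this sketch swaps in Gauss-Jordan elimination, one standard method, and uses Python’s `fractions` module so the arithmetic is exact. The function names `inverse` and `matvec` are hypothetical.

```python
from fractions import Fraction

def inverse(a):
    """Invert a square matrix by Gauss-Jordan elimination on [A | I], using exact
    fractions. Raises ValueError if A is singular (|A| = 0)."""
    n = len(a)
    aug = [[Fraction(x) for x in row] + [Fraction(1 if i == j else 0) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        # Pick a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular (|A| = 0); no inverse exists")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot becomes 1.
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    # The augmented matrix is now [I | A^-1]; return the right half.
    return [row[n:] for row in aug]

def matvec(m, v):
    """Matrix times column vector (the vector stored as a flat list)."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

A = [[3, 5, 7], [2, -4, -3], [1, 2, 1]]
b = [15, 9, 1]

A_inv = inverse(A)
x = matvec(A_inv, b)        # x = A^-1 b
print(x)                    # [Fraction(124, 37), Fraction(-88, 37), Fraction(89, 37)]
print(matvec(A, x) == b)    # True: Ax reproduces b
```

That is, X = 124/37, Y = −88/37, Z = 89/37, and |A| = 37 is nonzero, as the argument requires. Numerical libraries usually solve Ax = b directly by elimination rather than forming A⁻¹ explicitly; the inverse is formed here only to mirror x = A⁻¹b.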