Approximating Sinusoidal Functions with Polynomials

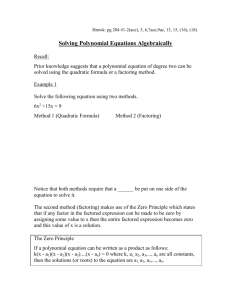

Approximating Sinusoidal Functions with Interpolating Polynomials

When most mathematicians think about approximating the values of transcendental functions, particularly the sine and cosine functions, what typically comes to mind are the Taylor polynomial approximations. For instance,

$$\sin x \approx x = T_1(x),$$

$$\sin x \approx x - \frac{x^3}{3!} = T_3(x),$$

$$\sin x \approx x - \frac{x^3}{3!} + \frac{x^5}{5!} = T_5(x),$$

and so forth. Note that $x$ must be in radians. These polynomials have the following properties:

1. They agree perfectly with the function at $x = 0$;
2. The closer that $x$ is to the origin, the better the approximation; the further that $x$ is from the origin, the poorer the approximation;
3. The higher the degree of the approximating polynomial, the better the approximation, meaning that one gets more accuracy over a larger interval centered at the origin.

We show the graphs of the sine function along with the first three Taylor polynomial approximations on the interval $[0, \pi/4]$ in Figure 1a. The linear approximation is in red; the sine curve and the cubic and fifth degree polynomials are essentially indistinguishable. We zoom in on the right-hand portion of the interval in Figure 1b. From these graphs, it is fairly obvious that these three properties do hold. The authors have provided a dynamic Excel spreadsheet [4] to allow readers and their students to investigate the use of Taylor polynomials to approximate the sine and cosine, as well as the exponential and logarithmic functions. Gordon [2] demonstrates how these Taylor approximation formulas can be found based on simple data analysis without any reference to calculus.

However, it turns out that using Taylor polynomials to approximate the sine and cosine is not necessarily the most effective approach. Instead, we look at a different approach, the idea of polynomial interpolation. There are two primary forms of the interpolating polynomial.
One of them was developed by Isaac Newton, and the other, which is attributed to Lagrange, was actually discovered by Edward Waring in 1779 and separately by Euler a few years later. Interpolation is based on the problem of finding a polynomial that passes through a set of $n + 1$ data points; in general, $n + 1$ points determine a polynomial of degree $n$ (or possibly lower if the points happen to fall onto a lower degree curve). In this article, we consider the Lagrange interpolating formula, which we introduce later. (In contrast, regression analysis seeks to find a polynomial or other function that captures a trend in a set of data, but may not pass through any of the points.) Before discussing Lagrange interpolation, however, we first consider several important issues.

Figure 1a: Sine function and its first three Taylor approximations

Figure 1b: Sine function and its first three Taylor approximations on $[0.78, \pi/4]$

Using Sinusoidal Behavior

First, although we can theoretically obtain any desired degree of accuracy on any finite interval with Taylor polynomials simply by increasing the degree sufficiently, in reality it is not quite so simple. If we want to approximate a function at a point very far from the center at $x = 0$, we need a very high degree polynomial, and computations with such an approximating polynomial may not be trivial. Moreover, there is a major issue in trying to decide what degree polynomial would be needed to achieve a given level of accuracy, say four decimal places or ten decimal places, at all points within a given interval. If we proceed unthinkingly, we likely would start by essentially picking a degree $n$ at random, checking how accurate or inaccurate the results are, and then likely having to increase the degree continually until we reach the desired level of accuracy.
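The trial-and-error search described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `taylor_sin`, `max_abs_error`, and `smallest_degree`, the 500-point sampling grid, and the tolerance are all hypothetical choices, not part of the authors' spreadsheet.

```python
import math

def taylor_sin(x, degree):
    """Taylor polynomial of sin about 0, using odd powers up to `degree`."""
    total = 0.0
    term = x          # current term, starting with x^1 / 1!
    k = 1             # power of the current term
    while k <= degree:
        total += term
        # Next odd-power term: multiply by -x^2 / ((k+1)(k+2)).
        term *= -x * x / ((k + 1) * (k + 2))
        k += 2
    return total

def max_abs_error(degree, a, b, samples=500):
    """Largest |sin x - T_degree(x)| over uniformly spaced points in [a, b]."""
    xs = [a + (b - a) * i / (samples - 1) for i in range(samples)]
    return max(abs(math.sin(x) - taylor_sin(x, degree)) for x in xs)

def smallest_degree(a, b, tol, max_degree=99):
    """Raise the (odd) degree until the sampled error drops below tol."""
    degree = 1
    while degree <= max_degree:
        if max_abs_error(degree, a, b) < tol:
            return degree
        degree += 2
    return None  # tolerance not reached within max_degree

print(smallest_degree(0.0, math.pi / 4, 1e-4))  # short interval near the center
print(smallest_degree(0.0, 10.0, 1e-4))         # interval reaching far from the center
```

Running the search on an interval that extends far from the origin shows how quickly the required degree grows, which is the difficulty the paragraph above describes.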
It is certainly preferable to decide on the desired level of accuracy and then be able to determine the degree of the polynomial that gives that accuracy.

We can circumvent much of this problem by using the periodicity and symmetry properties of the sinusoidal functions. Since both the sine and cosine are periodic with period $2\pi$, all we really need is a polynomial that gives the desired level of accuracy on an interval of length $2\pi$, or better, on the interval from $-\pi$ to $\pi$ that is centered at $x = 0$; the value of either $\sin x$ or $\cos x$ for any $x$ outside this interval can then be found. Moreover, since the sine curve on the left of the origin is the upside-down mirror image of the portion on the right, all we actually need do is find a sufficiently accurate approximating polynomial on $[0, \pi]$. Furthermore, because the portion of the sine curve on this interval is symmetric about $x = \pi/2$, we really only need something that is sufficiently accurate on $[0, \pi/2]$. Finally, because the values of the sine function between $\pi/4$ and $\pi/2$ are the same as the values of $\cos x$ from $x = \pi/4$ to $x = 0$ (that is, $\sin x = \cos(\pi/2 - x)$), we really only need an approximation that is sufficiently accurate on the fairly small interval $[0, \pi/4]$. The comparable reasoning applies to the cosine function; all that is needed is a sufficiently accurate approximation on $[0, \pi/4]$.

The Error in an Approximation

Second, we need to be able to assess just how good an approximation is. We begin by defining the error in an approximation as the difference between the function and its approximating polynomial. For example, with the cubic Taylor approximation to the sine, the error is $\sin x - (x - x^3/3!)$. The graph of the error function $\sin x - (x - x^3/3!)$ on $[0, \pi/4]$ is shown in Figure 2a; we observe that it is actually quite small across the interval. In fact, visually, the maximum error is about 0.0024 and it occurs at the right endpoint.
Similarly, Figure 2b shows the error function associated with the fifth degree approximation, and we observe that its maximum, in absolute value, is about 0.000036.

Figure 2a: Error between the sine function and its cubic Taylor approximation

Figure 2b: Error between the sine function and its fifth degree Taylor approximation

To measure how closely an approximation matches a function on an interval such as $[0, \pi/4]$, we use the maximum absolute value of the error. This is equivalent to finding the maximum deviation between the function and the approximation on the entire interval. Effectively, the maximum absolute value of the error provides information on the "worst case scenario" of the approximation.

At a simplistic level, we can use technology to create a table of values of the error function; for instance, if we use 500, say, uniformly spaced $x$-values and then identify the largest value of the error in absolute value at these points, we find that the maximum absolute value of the cubic's error is roughly 0.002454. This means that the cubic approximation agrees with the sine function to at least two decimal places on $[0, \pi/4]$. (Obviously, it is conceivable that there might be some intermediate point(s) where the error becomes significantly larger, but this is quite unlikely. One can also apply optimization methods from calculus to find the actual maximum absolute value of the error, if desired, but that would not be appropriate at an algebra or precalculus level.) If we wanted greater accuracy, we would use a higher degree polynomial. Thus, the maximum error with the fifth degree Taylor polynomial at the same 500 points is $3.62646 \times 10^{-5}$, so the fifth degree Taylor polynomial and the sine function agree to at least four decimal places on $[0, \pi/4]$.
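The table-of-values approach just described can be reproduced with a short Python sketch. The function names `t3`, `t5`, and `max_abs_error` are illustrative, and the grid is assumed to be 500 uniformly spaced points that include both endpoints of $[0, \pi/4]$; the article does not specify exactly how its grid was laid out.

```python
import math

def t3(x):
    """Cubic Taylor polynomial of sin about 0."""
    return x - x**3 / 6.0

def t5(x):
    """Fifth degree Taylor polynomial of sin about 0."""
    return x - x**3 / 6.0 + x**5 / 120.0

def max_abs_error(approx, a, b, samples=500):
    """Largest |sin x - approx(x)| over uniformly spaced points in [a, b]."""
    xs = [a + (b - a) * i / (samples - 1) for i in range(samples)]
    return max(abs(math.sin(x) - approx(x)) for x in xs)

err3 = max_abs_error(t3, 0.0, math.pi / 4)
err5 = max_abs_error(t5, 0.0, math.pi / 4)
print(f"cubic Taylor error:        {err3:.6f}")
print(f"fifth degree Taylor error: {err5:.6e}")
```

Because both errors grow steadily away from the origin, the largest sampled value is attained at the right endpoint, so the result is not sensitive to the number of sample points.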
We leave it to the interested reader to conduct a comparable investigation to see how accurate the successive Taylor polynomial approximations

$$\cos x \approx 1 - \frac{x^2}{2!},$$

$$\cos x \approx 1 - \frac{x^2}{2!} + \frac{x^4}{4!},$$

$$\cos x \approx 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \frac{x^6}{6!}$$

are to the cosine function on the interval $[0, \pi/4]$.

The Lagrange Interpolating Polynomial

As discussed in [3], the Lagrange interpolating polynomial of degree 1 that passes through the two points $(x_0, y_0)$ and $(x_1, y_1)$ is

$$L_1(x) = y_0 \frac{x - x_1}{x_0 - x_1} + y_1 \frac{x - x_0}{x_1 - x_0}.$$

For instance, if the points are $(1, 5)$ and $(2, 3)$, then

$$L_1(x) = y_0 \frac{x - x_1}{x_0 - x_1} + y_1 \frac{x - x_0}{x_1 - x_0} = 5\,\frac{x - 2}{1 - 2} + 3\,\frac{x - 1}{2 - 1} = -5(x - 2) + 3(x - 1).$$

You can easily show that this is equivalent to the point-slope form by multiplying it out. Similarly, the Lagrange interpolating polynomial of degree 2 that passes through the three points $(x_0, y_0)$, $(x_1, y_1)$, and $(x_2, y_2)$ is

$$L_2(x) = y_0 \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)} + y_1 \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)} + y_2 \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)}.$$

Notice that this expression is composed of the sum of three distinct quadratic functions. Each component function contains two of the three possible linear factors $(x - x_0)$, $(x - x_1)$, and $(x - x_2)$, so that each component contributes zero to the sum at two of the three interpolating points $x = x_0$, $x = x_1$, and $x = x_2$. At the third point, each component contributes, respectively, $y = y_0$, $y = y_1$, and $y = y_2$. That is, at $x = x_0$, the second and third terms are zero, so that the only contribution is from the first term, which contributes $y_0$ to the sum, so $L_2(x_0) = y_0$, and so on for the other two points.

Figure 3: Lagrange interpolating polynomial $L_2(x)$ through the three points $(1, 2)$, $(3, 8)$, and $(6, 4)$, and its three component quadratic functions $f_0(x) = \frac{1}{5}(x - 3)(x - 6)$, $f_1(x) = -\frac{4}{3}(x - 1)(x - 6)$, and $f_2(x) = \frac{4}{15}(x - 1)(x - 3)$
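The Lagrange formulas above generalize directly to any number of points. The following Python sketch is one way to evaluate them; the function name `lagrange` and the closure-based design are illustrative choices, not something taken from the article or its spreadsheets.

```python
def lagrange(points):
    """Return a function evaluating the Lagrange polynomial through `points`.

    `points` is a list of (x_i, y_i) pairs with distinct x_i.
    """
    def poly(x):
        total = 0.0
        for i, (xi, yi) in enumerate(points):
            # Basis term: product of (x - x_j) / (x_i - x_j) over all j != i.
            basis = 1.0
            for j, (xj, _) in enumerate(points):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return poly

# The two worked examples from the text.
line = lagrange([(1, 5), (2, 3)])
quad = lagrange([(1, 2), (3, 8), (6, 4)])

for x in (1, 3, 6):
    print(x, quad(x))   # reproduces the y-values at the interpolating points
```

Each basis term equals 1 at its own point and 0 at every other interpolating point, which is why the sum passes through all of the data.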
See Figure 3 for the graph of the Lagrange interpolating polynomial through the points $(1, 2)$, $(3, 8)$, and $(6, 4)$, as well as the three component quadratic functions. The authors have created an interactive spreadsheet [5] to allow interested readers and their students to investigate the Lagrange interpolating polynomial, as well as its components, in a dynamic way for any choice of interpolating points.

In general, the $n + 1$ points $(x_0, y_0)$, $(x_1, y_1)$, …, $(x_n, y_n)$ determine a unique polynomial of degree at most $n$. The Lagrange formula for this polynomial consists of a sum of $n + 1$ polynomial terms of degree $n$, each involving $n$ of the possible $n + 1$ linear factors $(x - x_0)$, $(x - x_1)$, …, $(x - x_n)$.

We now apply these ideas to approximate the values of the sine function on the interval $[0, \pi/4]$ using a quadratic Lagrange polynomial. To do so, consider the three points $(0, 0)$, $(\pi/8, \sin(\pi/8))$, and $(\pi/4, \sqrt{2}/2)$. (Using $\pi/8$ has the added advantage that $\sin(\pi/8)$ can be calculated exactly using the half-angle formula.) We construct the associated quadratic interpolating polynomial

$$L_2(x) = 0 \cdot \frac{(x - \pi/8)(x - \pi/4)}{(0 - \pi/8)(0 - \pi/4)} + \sin\!\left(\frac{\pi}{8}\right) \frac{(x - 0)(x - \pi/4)}{(\pi/8 - 0)(\pi/8 - \pi/4)} + \frac{\sqrt{2}}{2}\,\frac{(x - 0)(x - \pi/8)}{(\pi/4 - 0)(\pi/4 - \pi/8)}$$

$$\approx 0.382683\,\frac{x(x - 0.785398)}{(0.392699)(-0.392699)} + 0.707107\,\frac{x(x - 0.392699)}{(0.785398)(0.392699)}$$

$$= -2.481532\,x(x - 0.785398) + 2.292637\,x(x - 0.392699),$$

rounded to six decimal places. We show the graph of this quadratic function (in red) along with the sine curve (in blue) on $[0, \pi/4]$ in Figure 4 and observe that the two are essentially indistinguishable.

A more informative view is the associated error function shown in Figure 5. Notice that the error function appears to oscillate in a somewhat sinusoidal pattern that ranges from about $-0.0035$ to about $0.0035$. In fact, using the same 500 points as before, the largest negative error is $-0.003638$ and the largest positive error is $0.003467$, rounded to six decimal places.
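The quadratic through $(0, 0)$, $(\pi/8, \sin(\pi/8))$, and $(\pi/4, \sqrt{2}/2)$ and its signed error can be checked numerically. The sketch below is hedged: the helper names `interpolate` and `grid` are hypothetical, and the 500-point grid is assumed to be uniform and to include both endpoints.

```python
import math

def interpolate(nodes, f):
    """Lagrange interpolating polynomial of f at the given nodes."""
    ys = [f(x) for x in nodes]
    def poly(x):
        total = 0.0
        for i, xi in enumerate(nodes):
            basis = 1.0
            for j, xj in enumerate(nodes):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += ys[i] * basis
        return total
    return poly

def grid(a, b, samples=500):
    """Uniformly spaced points on [a, b], endpoints included."""
    return [a + (b - a) * i / (samples - 1) for i in range(samples)]

nodes = [0.0, math.pi / 8, math.pi / 4]
L2 = interpolate(nodes, math.sin)

# Signed error sin x - L2(x) at each grid point.
errors = [math.sin(x) - L2(x) for x in grid(0.0, math.pi / 4)]
largest_negative = min(errors)
largest_positive = max(errors)
print(f"largest negative error: {largest_negative:.6f}")
print(f"largest positive error: {largest_positive:.6f}")
```

Keeping the sign of the error, rather than only its absolute value, makes the oscillation between the interpolating points visible in the numbers as well as in the graph.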
Therefore, the maximum deviation is 0.003638. Note that this is only slightly larger (i.e., a slightly worse approximation) than what we achieved with the cubic Taylor approximation. On the other hand, this is accomplished with a quadratic approximation, so the accuracy is quite impressive. In fact, we have two decimal place accuracy comparable to what we had with the cubic Taylor polynomial.

We could certainly improve on this level of accuracy by moving to a cubic interpolating polynomial, but will not do so here. Instead, we will attempt to improve on the accuracy by a more insightful approach rather than increasing the level of computation by using a higher degree polynomial. Notice, also from Figure 5, that the error is zero at each of the three interpolating points, as should be expected, and that the error is very small on either side of these points. This suggests that we are essentially wasting the excellent accuracy just to the left of the origin and just to the right of $x = \pi/4$. In turn, this suggests that it might be helpful to choose slightly different interpolating points for a quadratic interpolating polynomial that give us the advantage of the very small errors on either side of the endpoints. For example, suppose we choose the points $x = 0.05$ and $x = 0.75$ along with $x = \pi/8$ at the center. (Different results will occur with other choices of the two points.) The resulting quadratic interpolating polynomial is

$$L_2(x) = \sin(0.05)\,\frac{(x - \pi/8)(x - 0.75)}{(0.05 - \pi/8)(0.05 - 0.75)} + \sin(\pi/8)\,\frac{(x - 0.05)(x - 0.75)}{(\pi/8 - 0.05)(\pi/8 - 0.75)} + \sin(0.75)\,\frac{(x - 0.05)(x - \pi/8)}{(0.75 - 0.05)(0.75 - \pi/8)}$$

$$\approx 0.049979\,\frac{(x - 0.392699)(x - 0.75)}{(0.05 - 0.392699)(0.05 - 0.75)} + 0.382683\,\frac{(x - 0.05)(x - 0.75)}{(0.392699 - 0.05)(0.392699 - 0.75)} + 0.681639\,\frac{(x - 0.05)(x - 0.392699)}{(0.75 - 0.05)(0.75 - 0.392699)}.$$

As before, the graphs of this function and the sine curve are indistinguishable between 0 and $\pi/4$.
However, as seen in Figure 6, the error function with this approximation is considerably smaller than with the previous approximation. In particular, the maximum absolute error at the same 500 points is 0.002551. Presumably, with a little experimentation with the interpolating points, one could improve on this further.

Figure 6: Error of the quadratic approximating polynomial to the sine function

From a pedagogical standpoint, another advantage to using interpolating polynomials to approximate the sinusoidal functions instead of Taylor polynomials is that the latter require the use of radians, while interpolating polynomials can be used with either radians or degrees. In some ways, degrees might make classroom investigations a little simpler for many students. For instance, since we restrict our attention to the interval $[0^\circ, 45^\circ]$, the center is $x = 22.5^\circ$, and so it is slightly easier to examine what happens to the level of accuracy when the endpoints are chosen symmetrically, say $x = 3^\circ$ and $x = 42^\circ$. The resulting Lagrange polynomial is then

$$L_2(x) = \sin(3^\circ)\,\frac{(x - 22.5)(x - 42)}{(3 - 22.5)(3 - 42)} + \sin(22.5^\circ)\,\frac{(x - 3)(x - 42)}{(22.5 - 3)(22.5 - 42)} + \sin(42^\circ)\,\frac{(x - 3)(x - 22.5)}{(42 - 3)(42 - 22.5)}$$

$$\approx 0.052336\,\frac{(x - 22.5)(x - 42)}{(3 - 22.5)(3 - 42)} + 0.382683\,\frac{(x - 3)(x - 42)}{(22.5 - 3)(22.5 - 42)} + 0.669131\,\frac{(x - 3)(x - 22.5)}{(42 - 3)(42 - 22.5)}.$$

Again, the graphs of this approximating polynomial and the sine curve are indistinguishable. The corresponding error function is shown in Figure 7. The maximum absolute error in the approximation over the same 500 points is now 0.002670, which is not quite as good as our previous attempt. Obviously, if we change the interpolating points, we will get other approximating polynomials, and it is likely that some of them will give better results.
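Experiments with different interpolating points, in radians or in degrees, are easy to script. The sketch below is an illustration only: `interpolate`, `grid`, `max_abs_error`, and `sin_degrees` are hypothetical helper names, and the sampled maxima it prints depend on the grid, so they need not match the article's rounded figures digit for digit.

```python
import math

def interpolate(nodes, f):
    """Lagrange interpolating polynomial of f at the given nodes."""
    ys = [f(x) for x in nodes]
    def poly(x):
        total = 0.0
        for i, xi in enumerate(nodes):
            basis = 1.0
            for j, xj in enumerate(nodes):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += ys[i] * basis
        return total
    return poly

def grid(a, b, samples=500):
    """Uniformly spaced points on [a, b], endpoints included."""
    return [a + (b - a) * i / (samples - 1) for i in range(samples)]

def max_abs_error(f, nodes, a, b):
    """Largest sampled |f(x) - L(x)| for the interpolant L of f at nodes."""
    poly = interpolate(nodes, f)
    return max(abs(f(x) - poly(x)) for x in grid(a, b))

def sin_degrees(d):
    """Sine of an angle given in degrees."""
    return math.sin(math.radians(d))

# Nodes at the endpoints and midpoint, in radians.
err_endpoints = max_abs_error(math.sin, [0.0, math.pi / 8, math.pi / 4],
                              0.0, math.pi / 4)
# Nodes pulled slightly inside the interval, in radians.
err_shifted = max_abs_error(math.sin, [0.05, math.pi / 8, 0.75],
                            0.0, math.pi / 4)
# Symmetric nodes in degrees on [0, 45].
err_degrees = max_abs_error(sin_degrees, [3.0, 22.5, 42.0], 0.0, 45.0)

print(f"endpoint nodes: {err_endpoints:.6f}")
print(f"shifted nodes:  {err_shifted:.6f}")
print(f"degree nodes:   {err_degrees:.6f}")
```

Changing the node lists in the three calls is all that is needed to try other choices, which mirrors the kind of exploration the interactive spreadsheets are meant to support.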
The authors have also created an interactive spreadsheet, available from the NCTM website, that allows interested readers and their students to investigate dynamically the way that the Lagrange interpolating polynomial approximates the sine function in either radians or degrees for any choice of the interpolating points and see the effects, both graphically and numerically.

Figure 7: Error of the quadratic approximating polynomial to the sine function in degrees

We suggest that interested readers can use these kinds of investigations as valuable classroom projects for their students. Students can be tasked with selecting other possible interpolating points to see how small they can make the maximum absolute error. Many students tend to respond to such investigations as a challenge to get the "best" answer in the sense of the smallest possible value for the maximum error. The authors have found that most students really throw themselves into such explorations on a competitive basis. In the process, they gain considerable insight into the mathematics.

Also, a series of comparable investigations can be conducted to approximate the values of the cosine function, but we will not go into that here. Instead, we leave that for the interested readers and their students. It makes a wonderful classroom activity, or a basis for individual or group explorations. The authors have created an interactive spreadsheet so that readers can investigate dynamically the way that the Lagrange interpolating polynomial approximates the cosine function in radians or degrees for any choice of the interpolating points.

The Behavior of the Error Function

We next consider the behavior patterns of the error function. Look at Figure 5, which shows the error function associated with the quadratic approximation to the sine function. The shape of the curve is reminiscent of a cubic polynomial.
Realize that the quadratic is based on three interpolating points, $(x_0, y_0)$, $(x_1, y_1)$, and $(x_2, y_2)$. At each of these points, there is exact agreement between the function and the quadratic interpolating polynomial, so that the error must be zero. Consequently, the error function will have three real zeros, so that the appearance of a cubic pattern is not coincidental. Similarly, Figure 8 shows the error function associated with a cubic approximation to the sine interpolating the four points $x = 0.03$, $x = 0.24$, $x = 0.54$, and $x = 0.76$, and its shape is suggestive of a quartic polynomial, which can be investigated using the interactive spreadsheet that approximates the sine function with Lagrange interpolating polynomials.

Figure 8: Error of the cubic approximating polynomial to the sine function

In general, given $n + 1$ interpolating points $(x_0, y_0)$, $(x_1, y_1)$, …, $(x_n, y_n)$, the error must be zero at each $x_i$. Thus, the error function must contain $n + 1$ linear factors $x - x_i$, and hence must contain the polynomial $(x - x_0)(x - x_1) \cdots (x - x_n)$. In fact, there is a formula for the error associated with the interpolating polynomial $L_n(x)$ based on $n + 1$ interpolating points $(x_0, y_0)$, $(x_1, y_1)$, …, $(x_n, y_n)$:

$$E_n(x) = \sin x - L_n(x) = \frac{\sin^{(n+1)}(\xi)}{(n+1)!}\,(x - x_0)(x - x_1)(x - x_2) \cdots (x - x_n),$$

where $\xi$ is some real number between 0 and $\pi/4$, in this case, and it depends on $x_0, \ldots, x_n$, and $x$; also, $\sin^{(n+1)}$ indicates the $(n+1)$st derivative of the sine function. While we will not go into the details here, interested readers are referred to [1].

However, with the interactive spreadsheets, we can search for the best possible quadratic (or cubic) interpolating polynomials based on three (or four) points. In this case, we want the maximum absolute error of an approximation to be as small as possible.
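Because every derivative of the sine is at most 1 in absolute value, the error formula above implies $|E_n(x)| \le |(x - x_0)(x - x_1) \cdots (x - x_n)| / (n+1)!$ at every point. The following Python sketch checks this inequality numerically for the quadratic with nodes $0$, $\pi/8$, and $\pi/4$; the helper names (`interpolate`, `node_product`, `grid`) and the small floating-point tolerance are assumptions made for illustration.

```python
import math

def interpolate(nodes, f):
    """Lagrange interpolating polynomial of f at the given nodes."""
    ys = [f(x) for x in nodes]
    def poly(x):
        total = 0.0
        for i, xi in enumerate(nodes):
            basis = 1.0
            for j, xj in enumerate(nodes):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += ys[i] * basis
        return total
    return poly

def node_product(nodes, x):
    """The polynomial factor (x - x_0)(x - x_1)...(x - x_n) of the error."""
    result = 1.0
    for xi in nodes:
        result *= (x - xi)
    return result

def grid(a, b, samples=500):
    """Uniformly spaced points on [a, b], endpoints included."""
    return [a + (b - a) * i / (samples - 1) for i in range(samples)]

nodes = [0.0, math.pi / 8, math.pi / 4]
poly = interpolate(nodes, math.sin)
factorial = math.factorial(len(nodes))      # (n + 1)! with n + 1 nodes
xs = grid(0.0, math.pi / 4)

# Pointwise check of |E_n(x)| <= |product| / (n+1)!, with a tiny
# tolerance for floating-point rounding.
pointwise_ok = all(
    abs(math.sin(x) - poly(x)) <= abs(node_product(nodes, x)) / factorial + 1e-12
    for x in xs
)
observed = max(abs(math.sin(x) - poly(x)) for x in xs)
bound = max(abs(node_product(nodes, x)) for x in xs) / factorial

print(pointwise_ok)
print(f"observed max error: {observed:.6f}")
print(f"bound from product: {bound:.6f}")
```

The bound depends only on the interpolating points, not on the unknown $\xi$, which is why shrinking the product factor is a practical route to a smaller error.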
In the process of finding the quadratic interpolating polynomials with the smallest error, we find that the maximum absolute error tends to be small if the error is oscillatory and somewhat evenly distributed throughout the interval of approximation. This is true for both the sine and cosine functions, as well as for any number of interpolating points. This again is not coincidental. The polynomial component $(x - x_0)(x - x_1) \cdots (x - x_n)$ in the error formula $E_n(x)$ not only dictates the shape of the error function, but also gives us a way to minimize the error in interpolation. Since there is no explicit way to represent the dependence of $\xi$ on $x_0, \ldots, x_n$, and $x$, we only seek to minimize the maximum value of $|(x - x_0)(x - x_1) \cdots (x - x_n)|$. Notice that this may not give the best approximation since we do not know the value of $\xi$ and hence the value of $\sin^{(n+1)}(\xi)$, but it does give a very good approximation.

The issue of finding the interpolating points that minimize the maximum of the product (the so-called MiniMax problem) has been studied extensively. The best points to use are known as the Chebyshev nodes (see, for instance, [ ]), for which

$$\max_{0 \le x \le \pi/4} \left| (x - x_0)(x - x_1)(x - x_2) \cdots (x - x_n) \right|$$

is minimal. (On the reference interval $[-1, 1]$ this minimal value is $1/2^n$; rescaling the nodes to $[0, \pi/4]$ multiplies it by $(\pi/8)^{n+1}$.) For the quadratic interpolating polynomial on the interval $[0, \pi/4]$, the Chebyshev nodes are

$$x_0 = \frac{\pi}{8}\left(1 + \cos\frac{5\pi}{6}\right) \approx 0.05, \quad x_1 = \frac{\pi}{8}\left(1 + \cos\frac{3\pi}{6}\right) \approx 0.39, \quad \text{and} \quad x_2 = \frac{\pi}{8}\left(1 + \cos\frac{\pi}{6}\right) \approx 0.73,$$

rounded to two decimal places. The associated error function is shown in Figure 9. The maximum absolute error at the same 500 points is 0.002393. This is the best result we have obtained so far. Likewise, we use the four Chebyshev nodes

$$x_0 = \frac{\pi}{8}\left(1 + \cos\frac{7\pi}{8}\right) \approx 0.03, \quad x_1 = \frac{\pi}{8}\left(1 + \cos\frac{5\pi}{8}\right) \approx 0.24, \quad x_2 = \frac{\pi}{8}\left(1 + \cos\frac{3\pi}{8}\right) \approx 0.54, \quad \text{and} \quad x_3 = \frac{\pi}{8}\left(1 + \cos\frac{\pi}{8}\right) \approx 0.76$$

to obtain the cubic interpolating polynomial for a very good approximation on $[0, \pi/4]$. The corresponding maximum absolute error at the same 500 points is 0.000058, so it gives at least four decimal place accuracy. Figure 8 shows its error function.
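The Chebyshev nodes on $[0, \pi/4]$ and the resulting errors can be generated with a short Python sketch. The function `chebyshev_nodes` below uses the standard formula for Chebyshev nodes mapped to an interval $[a, b]$; the helper names and the 500-point uniform grid are illustrative assumptions rather than the authors' implementation.

```python
import math

def chebyshev_nodes(a, b, count):
    """Chebyshev nodes on [a, b]: midpoint + half-width * cos((2k+1)pi/(2*count))."""
    mid, half = (a + b) / 2.0, (b - a) / 2.0
    return sorted(mid + half * math.cos((2 * k + 1) * math.pi / (2 * count))
                  for k in range(count))

def interpolate(nodes, f):
    """Lagrange interpolating polynomial of f at the given nodes."""
    ys = [f(x) for x in nodes]
    def poly(x):
        total = 0.0
        for i, xi in enumerate(nodes):
            basis = 1.0
            for j, xj in enumerate(nodes):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += ys[i] * basis
        return total
    return poly

def max_abs_error(f, nodes, a, b, samples=500):
    """Largest sampled |f(x) - L(x)| on a uniform grid over [a, b]."""
    poly = interpolate(nodes, f)
    xs = [a + (b - a) * i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - poly(x)) for x in xs)

a, b = 0.0, math.pi / 4
quad_nodes = chebyshev_nodes(a, b, 3)
cubic_nodes = chebyshev_nodes(a, b, 4)
quad_err = max_abs_error(math.sin, quad_nodes, a, b)
cubic_err = max_abs_error(math.sin, cubic_nodes, a, b)

print([round(x, 2) for x in quad_nodes], f"{quad_err:.6f}")
print([round(x, 2) for x in cubic_nodes], f"{cubic_err:.6f}")
```

Passing a different `count` gives the nodes for higher degree interpolants on the same interval, so the same sketch can be used to see how quickly the error falls as points are added.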
Figure 9: Error of the quadratic approximating polynomial at the Chebyshev nodes to the sine function

We summarize the error results in Table 1. The quadratic interpolating polynomial with Chebyshev nodes is a slightly better fit to the sine function than the cubic Taylor polynomial. Similarly, the maximum error with the cubic Taylor polynomial is much larger than that for the cubic interpolating polynomial with Chebyshev nodes. Thus, the interpolation approach lets us use a lower degree polynomial to obtain a better approximation compared to Taylor polynomials. Moreover, if we use the same degree for both the interpolating and Taylor polynomials, the interpolating polynomial produces a much better approximation, although admittedly hand or calculator calculations with Lagrange interpolating polynomials are more complicated than those with Taylor polynomials that are centered at the origin.

Also, the error formula for the interpolating polynomial

$$E_n(x) = \sin x - L_n(x) = \frac{\sin^{(n+1)}(\xi)}{(n+1)!}\,(x - x_0)(x - x_1)(x - x_2) \cdots (x - x_n)$$

can be used to estimate the maximum absolute error. For example, for the quadratic Chebyshev polynomial, the corresponding absolute error is capped by 0.041667, because

$$|E_2(x)| \le \frac{\max_{0 \le \xi \le \pi/4} |\sin'''(\xi)|}{3!\,2^2} = \frac{\max_{0 \le \xi \le \pi/4} |\cos \xi|}{3!\,2^2} = \frac{1}{3!\,2^2} \approx 0.041667.$$

Similarly, the maximum absolute error for the cubic Chebyshev polynomial satisfies

$$|E_3(x)| \le \frac{\max_{0 \le \xi \le \pi/4} |\sin^{(4)}(\xi)|}{4!\,2^3} = \frac{\max_{0 \le \xi \le \pi/4} |\sin \xi|}{4!\,2^3} = \frac{\sqrt{2}/2}{4!\,2^3} \approx 0.003683.$$

These bounds use the factor $1/2^n$ for the reference interval $[-1, 1]$ and are therefore conservative; including the scaling factor $(\pi/8)^{n+1}$ for the interval $[0, \pi/4]$ tightens them to about 0.002523 and 0.000088, respectively, which are close to the observed errors in Table 1. This estimate lets us determine the lowest degree polynomial that gives the desired accuracy. Therefore, when we think about approximating the values of transcendental functions, we should consider the interpolation approach.

Table 1. Comparison of Errors

| Method | Maximum Absolute Error |
| --- | --- |
| $T_3$ (3rd degree Taylor at $x = 0$) | 0.002454 |
| $T_5$ (5th degree Taylor at $x = 0$) | 0.000036 |
| $L_2$ (with Chebyshev nodes) | 0.002393 |
| $L_3$ (with Chebyshev nodes) | 0.000058 |

References

[1] Burden, R., and J. Faires. Numerical Analysis, 9th Edition. Brooks/Cole, 2010.

[2] Gordon, S. "Exposing the Mathematics Wizard: Approximating Sinusoidal Functions with Polynomials." The Mathematics Teacher, 104 (May 2011): 676-682.

[3] Author. "Interpolation and Polynomial Curve Fitting." The Mathematics Teacher, (to appear).