Approximations_notes


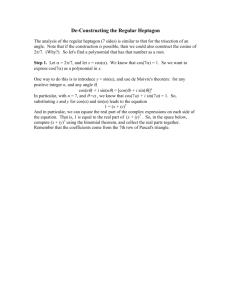

Johns Hopkins University · What is Engineering? · M. Karweit · 1/11/06

APPROXIMATIONS

A. Introduction

What are approximations? Deliberate misrepresentations of physical or mathematical things, e.g., $\pi = 3$, an atom is spherical, the drag force on a moving tank is zero.

Why do we need them?

1. To simplify mathematical equations so that they are easier to analyze and solve. For example, the motion of a pendulum is governed by

$$\frac{d^2\theta}{dt^2} + \frac{g}{L}\sin\theta = 0,$$

which, for small $\theta$ (where $\sin\theta \approx \theta$), is approximated as

$$\frac{d^2\theta}{dt^2} + \frac{g}{L}\theta = 0.$$

a) polynomial approximations
b) power series approximations

2. To reformulate calculus problems so that digital computers can be used to obtain solutions, e.g.,

$$\frac{df(x)}{dx} \approx \frac{f(x+h) - f(x)}{h} \quad \text{if } h \text{ is small.}$$

a) finite differences
b) finite elements

3. To reduce the quantity of data that must be stored or transmitted. For example, a telephone transmits only a limited range of frequencies, so that voice communication can be accommodated over unsophisticated wires.

a) JPG, GIF image compression
b) MPEG, AVI video compression
c) WAV audio storage

4. To focus on certain aspects of physical problems. For example, fluid dynamicists view water not as billions of moving and colliding molecules, but as a “continuum” of matter whose properties reflect only the average behavior of the molecules.

a) continuum approximation
b) discarding terms as being small compared to others

B. Polynomial approximation of functions: Taylor’s Theorem

1. First, suppose $f(x)$ is the $n$th-order polynomial

$$f(x) = a_0 + a_1 x + a_2 x^2 + a_3 x^3 + \cdots + a_n x^n.$$

Differentiating $k$ times and setting $x = 0$ leaves only the term $k!\,a_k$, so the coefficients can be expressed as

$$a_0 = f(0), \quad a_1 = f'(0), \quad a_2 = \frac{1}{2!}f''(0), \quad \ldots, \quad a_n = \frac{1}{n!}f^{(n)}(0),$$

and therefore

$$f(x) = f(0) + f'(0)\,x + \frac{1}{2!}f''(0)\,x^2 + \cdots + \frac{1}{n!}f^{(n)}(0)\,x^n.$$

2. Generalization to other expansion values: Replace $x$ by $\xi = x + h$. Then consider $f(\xi) = f(x + h) = g(h)$, i.e., temporarily assume $x$ is fixed and $h$ is the independent variable. Then

$$g(h) = f(\xi), \quad g'(h) = f'(\xi), \quad \ldots, \quad g^{(n)}(h) = f^{(n)}(\xi).$$

For $h = 0$,

$$g(0) = f(x), \quad g'(0) = f'(x), \quad \ldots, \quad g^{(n)}(0) = f^{(n)}(x).$$
Then, applying step 1 to $g(h)$,

$$f(\xi) = f(x + h) = f(x) + h f'(x) + \frac{h^2}{2!}f''(x) + \frac{h^3}{3!}f'''(x) + \cdots + \frac{h^n}{n!}f^{(n)}(x).$$

3. Generalization to non-polynomial functions: For non-polynomials there will be a residual $R_n$:

$$f(x + h) = f(x) + h f'(x) + \frac{h^2}{2!}f''(x) + \frac{h^3}{3!}f'''(x) + \cdots + \frac{h^n}{n!}f^{(n)}(x) + R_n,$$

where, by induction,

$$R_n = \frac{h^{n+1}}{(n+1)!}f^{(n+1)}(x) + \frac{h^{n+2}}{(n+2)!}f^{(n+2)}(x) + \cdots$$

If these terms sum to a finite limit, then the $n$th-order polynomial approximates $f(x + h)$ with error $R_n$.

4. Example: expand $f(x) = \cos(x)$ about $x = 0$. Use

$$f(x) = f(0) + f'(0)\,x + \frac{1}{2!}f''(0)\,x^2 + \cdots + \frac{1}{n!}f^{(n)}(0)\,x^n$$

with

| Derivative | Value at $x = 0$ |
|---|---|
| $f(x) = \cos(x)$ | $\cos(0) = 1$ |
| $f'(x) = -\sin(x)$ | $-\sin(0) = 0$ |
| $f''(x) = -\cos(x)$ | $-\cos(0) = -1$ |
| $f'''(x) = \sin(x)$ | $\sin(0) = 0$ |
| $f^{(4)}(x) = \cos(x)$ | $\cos(0) = 1$ |

The derivatives repeat with period 4, so only even powers survive, with alternating signs:

$$\cos(x) = 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \frac{x^6}{6!} + \frac{x^8}{8!} - \cdots$$

C. Numerical methods using Taylor series expansion

1. Finite difference approximation for a derivative

a) From

$$f(x + h) = f(x) + h f'(x) + \frac{h^2}{2!}f''(x) + \frac{h^3}{3!}f'''(x) + \cdots + \frac{h^n}{n!}f^{(n)}(x) + R_n,$$

rearrange to obtain

$$\frac{f(x + h) - f(x)}{h} = f'(x) + \frac{h}{2!}f''(x) + \frac{h^2}{3!}f'''(x) + \cdots + \frac{h^{n-1}}{n!}f^{(n)}(x) + \frac{R_n}{h}.$$

Then the “forward difference” approximation to the derivative is

$$\frac{f(x + h) - f(x)}{h} = f'(x) + O(h),$$

with the remaining terms contributing to the error. $O(h)$ is read “of order $h$”; it stands for the first term neglected in the Taylor series, here $\frac{h}{2!}f''(x)$, whose size is proportional to $h$. Usually this first term is the most important contributor to the error. If $h$ is small, and/or if the higher-order derivatives are small, even a simple finite-difference expression may be a good approximation.

b) A better approximation. Consider

$$f(x + h) = f(x) + h f'(x) + \frac{h^2}{2!}f''(x) + \frac{h^3}{3!}f'''(x) + \frac{h^4}{4!}f^{(4)}(x) + \frac{h^5}{5!}f^{(5)}(x) + \cdots$$

Then replace $h$ by $-h$ to obtain

$$f(x - h) = f(x) - h f'(x) + \frac{h^2}{2!}f''(x) - \frac{h^3}{3!}f'''(x) + \frac{h^4}{4!}f^{(4)}(x) - \frac{h^5}{5!}f^{(5)}(x) + \cdots$$

Subtracting the 2nd equation from the 1st cancels the even-order terms; dividing by $2h$ and rearranging yields

$$\frac{f(x + h) - f(x - h)}{2h} = f'(x) + \frac{h^2}{3!}f'''(x) + \frac{h^4}{5!}f^{(5)}(x) + \cdots$$
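This becomes a “centered difference” formulation for the derivative:

$$\frac{f(x + h) - f(x - h)}{2h} = f'(x) + O(h^2).$$

Notice that the error is $O(h^2)$ rather than $O(h)$. If $h$ is small, $O(h^2)$ is better: halving $h$ reduces the leading error term by about a factor of 4 instead of 2.

c) Finite difference approximation for a 2nd derivative. Adding, rather than subtracting, the expansions of $f(x + h)$ and $f(x - h)$ cancels the odd-order terms and gives

$$\frac{f(x + h) - 2f(x) + f(x - h)}{h^2} = f''(x) + O(h^2).$$

2. Finite difference approximation for an integral

a) Trapezoidal rule. Approximate $f$ on $[A, A + h]$ by the first two terms of its Taylor series about $A$:

$$\int_A^{A+h} f(x)\,dx \approx \int_A^{A+h}\left[f(A) + (x - A)f'(A)\right]dx = h f(A) + \frac{h^2}{2}f'(A).$$

Replacing $f'(A)$ by the forward difference $[f(A + h) - f(A)]/h$ gives

$$\int_A^{A+h} f(x)\,dx = \frac{h}{2}\left[f(A) + f(A + h)\right] + O(h^3).$$

b) Simpson’s rule. Keep one more Taylor term and integrate over $[A - h, A + h]$; the $f'(A)$ term integrates to zero by symmetry:

$$\int_{A-h}^{A+h} f(x)\,dx \approx \int_{A-h}^{A+h}\left[f(A) + (x - A)f'(A) + \frac{(x - A)^2}{2!}f''(A)\right]dx = 2h f(A) + \frac{h^3}{3}f''(A).$$

Replacing $f''(A)$ by the centered second difference $[f(A + h) - 2f(A) + f(A - h)]/h^2$ gives

$$\int_{A-h}^{A+h} f(x)\,dx = \frac{h}{3}\left[f(A - h) + 4f(A) + f(A + h)\right] + O(h^5).$$

The error is $O(h^5)$ rather than $O(h^4)$ because the neglected cubic Taylor term also integrates to zero over the symmetric interval.

D. Fourier series approximations

If $f(t) = f(t + nT)$, where $n$ is any integer and $T$ is a constant, then $f(t)$ is said to be periodic with period $T$. $f(t)$ can be represented as

$$f(t) = a_0 + \sum_{k=1}^{\infty}\left[a_k\cos(k\omega_0 t) + b_k\sin(k\omega_0 t)\right], \quad \text{where } \omega_0 = \frac{2\pi}{T},$$

$$a_k = \frac{2}{T}\int_0^T f(t)\cos(k\omega_0 t)\,dt, \qquad b_k = \frac{2}{T}\int_0^T f(t)\sin(k\omega_0 t)\,dt \qquad (k \ge 1),$$

and $a_0 = \frac{1}{T}\int_0^T f(t)\,dt$ is the mean value of $f$ over one period.

This representation is useful for oscillating functions, e.g., tides and audio signals.

E. Generalized representations of functions

1. $f(x)$ (or $f(t)$) can be represented by a set of “basis functions”, i.e., functions whose linear combinations can reproduce any (sufficiently well-behaved) function over a specified range, say $A \le x \le B$.

2. Possible basis functions:

a) polynomials
b) sines and cosines
c) wavelets
d) many more...

3. Truncating the infinite series that represents a given function gives an approximation to that function.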
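The cosine expansion in section B.4 can be checked numerically. The Python sketch below is a minimal illustration and not part of the original notes: the function name `cos_taylor` and the evaluation point $x = 0.5$ are arbitrary choices. It sums the first few nonzero terms $(-1)^k x^{2k}/(2k)!$ and reports the error against `math.cos`.

```python
import math


def cos_taylor(x: float, n_terms: int) -> float:
    """Sum the first n_terms nonzero terms of the Taylor series of cos(x) about 0:
    1 - x^2/2! + x^4/4! - x^6/6! + ...
    """
    total = 0.0
    for k in range(n_terms):
        # k-th nonzero term: (-1)^k * x^(2k) / (2k)!
        total += (-1) ** k * x ** (2 * k) / math.factorial(2 * k)
    return total


if __name__ == "__main__":
    x = 0.5
    for n in range(1, 6):
        approx = cos_taylor(x, n)
        print(f"{n} term(s): {approx:.10f}   |error| = {abs(approx - math.cos(x)):.2e}")
```

After $n$ nonzero terms the error should be close to the first neglected term, $x^{2n}/(2n)!$, which is the residual behavior described in section B.3.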
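The forward, centered, and second-derivative differences of section C.1 can be compared directly. This Python sketch assumes $f(x) = \sin x$ at $x = 1$ as a test case, chosen only because its derivatives are known exactly; the function names `forward_diff`, `centered_diff`, and `second_diff` are hypothetical.

```python
import math
from typing import Callable

Func = Callable[[float], float]


def forward_diff(f: Func, x: float, h: float) -> float:
    """Forward difference (f(x+h) - f(x)) / h; error O(h)."""
    return (f(x + h) - f(x)) / h


def centered_diff(f: Func, x: float, h: float) -> float:
    """Centered difference (f(x+h) - f(x-h)) / (2h); error O(h^2)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def second_diff(f: Func, x: float, h: float) -> float:
    """Second-derivative difference (f(x+h) - 2 f(x) + f(x-h)) / h^2; error O(h^2)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


if __name__ == "__main__":
    x = 1.0
    exact_d1 = math.cos(x)   # exact first derivative of sin at x
    exact_d2 = -math.sin(x)  # exact second derivative of sin at x
    for h in (0.1, 0.05, 0.025):
        e_fwd = abs(forward_diff(math.sin, x, h) - exact_d1)
        e_ctr = abs(centered_diff(math.sin, x, h) - exact_d1)
        e_2nd = abs(second_diff(math.sin, x, h) - exact_d2)
        print(f"h = {h:<6} forward {e_fwd:.2e}  centered {e_ctr:.2e}  second {e_2nd:.2e}")
```

Halving $h$ should roughly halve the forward-difference error and cut the centered and second-derivative errors by about 4, consistent with $O(h)$ versus $O(h^2)$. For very small $h$, round-off in differences such as $f(x + h) - f(x)$ eventually dominates, so the error does not keep shrinking indefinitely.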
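The single-panel trapezoidal and Simpson rules of section C.2 can be tested on $f(x) = e^x$, whose antiderivative is known. The sketch below is illustrative: `trapezoid_panel`, `simpson_panel`, and `local_errors` are hypothetical names, and the choice of $e^x$ with $A = 0$ is an assumption made only so the exact integral is available.

```python
import math
from typing import Callable, Tuple

Func = Callable[[float], float]


def trapezoid_panel(f: Func, a: float, h: float) -> float:
    """Trapezoidal rule on [a, a+h]: (h/2)[f(a) + f(a+h)]; local error O(h^3)."""
    return 0.5 * h * (f(a) + f(a + h))


def simpson_panel(f: Func, a: float, h: float) -> float:
    """Simpson's rule on [a-h, a+h]: (h/3)[f(a-h) + 4 f(a) + f(a+h)]; local error O(h^5)."""
    return h / 3.0 * (f(a - h) + 4.0 * f(a) + f(a + h))


def local_errors(h: float, a: float = 0.0) -> Tuple[float, float]:
    """Absolute errors of both rules for f = exp, whose antiderivative is e^x."""
    exact_trap = math.exp(a + h) - math.exp(a)      # integral over [a, a+h]
    exact_simp = math.exp(a + h) - math.exp(a - h)  # integral over [a-h, a+h]
    return (
        abs(trapezoid_panel(math.exp, a, h) - exact_trap),
        abs(simpson_panel(math.exp, a, h) - exact_simp),
    )


if __name__ == "__main__":
    for h in (0.2, 0.1, 0.05):
        e_trap, e_simp = local_errors(h)
        print(f"h = {h:<5} trapezoid error {e_trap:.2e}   Simpson error {e_simp:.2e}")
```

Halving $h$ should cut the trapezoid error by roughly $2^3 = 8$ and the Simpson error by roughly $2^5 = 32$. Simpson's rule is also exact for cubics: `simpson_panel(lambda x: x**3, 1.0, 0.5)` returns 1.25 up to round-off, the exact value of $\int_{0.5}^{1.5} x^3\,dx$.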
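The Fourier coefficients of section D and the truncation idea of section E.3 can be illustrated together. This Python sketch is a hedged illustration, not the notes' own method: it approximates the coefficient integrals with the rectangle rule on $N$ equally spaced samples (an assumption; other quadratures would also work, but this one is very accurate for smooth periodic functions), computes $a_0$ as the mean $\frac{1}{T}\int_0^T f\,dt$, and evaluates the series truncated after $K$ harmonics. The names `fourier_coefficients` and `fourier_partial_sum`, the period $T = 2$, and the test signal $e^{\cos(\omega_0 t)}$ are all assumptions.

```python
import math
from typing import Callable, List, Tuple


def fourier_coefficients(
    f: Callable[[float], float], T: float, K: int, N: int = 1024
) -> Tuple[float, List[float], List[float]]:
    """Approximate a_0 and a_k, b_k (k = 1..K) for a T-periodic function f.

    Each integral over one period is replaced by the rectangle rule on N
    equally spaced samples t_j = j*T/N. This is exact when f is a
    trigonometric polynomial of degree d with d + K < N, and very accurate
    for smooth periodic f.
    """
    w0 = 2.0 * math.pi / T
    dt = T / N
    ts = [j * dt for j in range(N)]
    ys = [f(t) for t in ts]
    a0 = sum(ys) * dt / T  # mean value: (1/T) * integral of f over one period
    a = [2.0 / T * dt * sum(y * math.cos(k * w0 * t) for t, y in zip(ts, ys))
         for k in range(1, K + 1)]
    b = [2.0 / T * dt * sum(y * math.sin(k * w0 * t) for t, y in zip(ts, ys))
         for k in range(1, K + 1)]
    return a0, a, b


def fourier_partial_sum(a0: float, a: List[float], b: List[float], T: float, t: float) -> float:
    """Evaluate a_0 + sum_{k=1}^{K} [a_k cos(k w0 t) + b_k sin(k w0 t)] with K = len(a)."""
    w0 = 2.0 * math.pi / T
    return a0 + sum(
        a[k - 1] * math.cos(k * w0 * t) + b[k - 1] * math.sin(k * w0 * t)
        for k in range(1, len(a) + 1)
    )


if __name__ == "__main__":
    T = 2.0
    w0 = 2.0 * math.pi / T

    def smooth_signal(t: float) -> float:
        # Smooth and T-periodic, but with infinitely many nonzero harmonics
        return math.exp(math.cos(w0 * t))

    check_points = [j * T / 200 for j in range(200)]
    for K in (1, 2, 4, 8):
        a0, a, b = fourier_coefficients(smooth_signal, T, K)
        err = max(abs(fourier_partial_sum(a0, a, b, T, t) - smooth_signal(t)) for t in check_points)
        print(f"K = {K}: max truncation error = {err:.2e}")
```

Because $e^{\cos(\omega_0 t)}$ is smooth, its coefficients decay quickly and the maximum truncation error should fall sharply as $K$ grows; a function with jumps, such as a square wave, converges much more slowly.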