statistic hence

advertisement

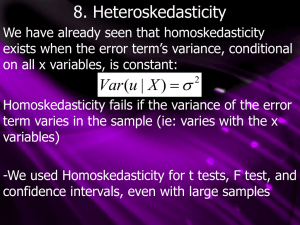

Principles of Econometrics – Eviews session Notes by José Mário Lopes1 1- Wage regression (Example 7.6 and 8.1 in Wooldridge) First, let’s learn how to import the data from Excel and how to create some variables. Just open a new Workfile and define it to have 526 observations (the size of our cross section sample). Now, you have created a new workfile in EViews. Let’s import the data from Excel. 1 If you find any mistakes or typos, please contact me, jmlopes@fe.unl.pt 1 In the box that will appear, write down the names of the variables you have in columns in EViews. Click OK (you should verify that the data were well inserted by checking the values for some of the observations in the sample) Now, let’s generate a couple of variables. For instance, imagine you wanted to get a “male” binary variable. You can derive it from “female”. Just click Genr and write down the formula of the new series you are creating. 2 You can also generate a new variable which is 1 for married males and zero otherwise. You should just click Genr and write down marrmale=married*male Or, for instance, a dummy variable which is 1 for married female and 0 otherwise: marrfem=married*female and so on. Often, equations with dependent variables defined in monetary units are estimated using the (natural) log instead of the level of the variable. By doing that, we can get the variable lwage. Let’s estimate a very simple model now and analyze the test statistics and diagnostic checking. The model I will estimate is simply ln( wagei ) 0 1 marrmalei 2 marrfemi 3 sin gfemi 4 educi 5 exp eri 6 exp eri 2 7 tenurei 8 tenurei u i 2 3 Click on New Object/Equation and write down the model. Estimation Output gives us Dependent Variable: LWAGE Method: Least Squares Date: 09/28/09 Time: 17:40 Sample: 1 526 Included observations: 526 Variable Coefficient Std. Error t-Statistic Prob. C MARRMALE MARRFEM SINGFEMALE EDUC EXPER EXPER^2 TENURE TENURE^2 0.321378 0.212676 -0.198268 -0.110350 0.078910 0.026801 -0.000535 0.029088 -0.000533 0.100009 0.055357 0.057835 0.055742 0.006694 0.005243 0.000110 0.006762 0.000231 3.213492 3.841881 -3.428132 -1.979658 11.78733 5.111835 -4.847105 4.301614 -2.305553 0.0014 0.0001 0.0007 0.0483 0.0000 0.0000 0.0000 0.0000 0.0215 R-squared Adjusted R-squared S.E. of regression Sum squared resid Log likelihood Durbin-Watson stat 0.460877 0.452535 0.393290 79.96799 -250.9552 1.784785 Mean dependent var S.D. dependent var Akaike info criterion Schwarz criterion F-statistic Prob(F-statistic) 1.623268 0.531538 0.988423 1.061403 55.24559 0.000000 4 Let’s analyze some features of these results (forgetting, for the moment, any heteroskedasticity problems that may, and do, appear in the regression). a) Why have we left singmale away from the right-hand side variables? Try using it too in the regression to see what happens. There is an error that stems from multicollinearity, one of our assumptions. b) education is significant at the 5% level. We know this because the t-stat has a pvalue of 0.0000%, which means we reject the null of nonsignificance. The estimate indicates that, if we add an extra year of schooling , wage increases about 7.8% (this is approximate). c) Married males earn about 21.2% more than single males. Notice that single males are the reference group, since they do not appear in the regression. This is an approximation (the exact impact is about 23.7%, see chapter 7 to understand why) d) How do we interpret the impact of tenure on the log of the wage? e) R squared is about 46.1%. What does this mean? It means that the explained variation in the log of the wage is 46%, which means that about 54% is left unexplained. f) R squared increases with added explanatory variables even if they do not mean much. Hence, it is best to also look at the adjusted R squared, which is about 45%. The Akaike and Schwarz criteria are also used when specifying a model – we should try to minimized them to achieve a good model, since they penalize adding extra variables that are meaningless. g) The F statistic is 55.24599. It tests the Null that all slopes are zero. This test follows an F distribution with (number of restrictions, T-(number of restrictions+1)) degrees of freedom. The critical value is, thus, is about 1.94 (check this in a table, for instance the one given in any undergraduate Statistics handbook, such as Hogg and Tanis). Hence, we reject the null. Fortunately, you do not have to go through the table, since EViews gives you the p-value, which is under 5% as you can see above. Now, how can you perform a specific test on one or more of the parameters? 5 Test, for instance, the Null that Married females and Single females have an identical discrepancy in wage comparing to single males. (c(3)=c(4)): 6 Notice the degrees of freedom of the F statistic. They are correct: there is one restriction and 9 coefficients were estimated in the unrestricted model (hence, 5269=517). At the 5% level, we do not reject the Null (at the 10%, we would reject the null) The results for the chi-square statistic concur with this. Next, I’ll show you how to perform a Chow test, to ascertain if the regression is significantly different for males and females. First, estimate the pooled model (this is just the restricted model) Now let’s estimate the unrestricted model, which allows for differences in all parameters. Just estimate the equation again, but now restrict your sample 7 Do the same for females, After this, you can perform a regular F test, where the output for all of these regressions gives you the Sum of Squared Residuals. For the restricted model, take what the output gives you. For the unrestricted model, take the sum of the SSR of the two. After that, it is just a simple F test. And now, for heteroskedasticity… Homoskedasticity stated in terms of the error variance is Var (u | x1 , x 2 , x3 ,...) 2 (MLR 5) Heteroskedasticity does not make the estimators of the j to be biased nor inconsistent. But the variances of these estimators become biasedthe usual t tests are no longer valid, just as the F test. The standard errors, hence, the statistics we usually use are not valid under heteroskedasticity Moreover, in the presence of heteroskedasticity, OLS fails to be asymptotically efficient (remember, homoskedasiciy is required so that OLS is blue) We must correct these standard errors? How do we do that? See Wooldridge, page 272 on. Why not use always robust standard errors? 8 The answer is that, with small sample sizes, robust t statistics can have distributions not very close to the t distribution, whereas if homoskedasticity holds and errors follow a Normal distribution, the usual (non-robust) t statistics follow a t distribution exactly. Reporting the heteroskedasticity-robust standard errors, which we can do with the White heteroskedsticity consistent coefficient covariance: we get, after clicking OK, 9 2- Next class we’ll do more on heteroskedasticity: Savings and Smoke regressions in chapter 8 – an example of heteroskedasticity. We’ll see how to test for heteroskedasticity and how to transform the model in order to have an efficient estimator. 10