Control Charts for Variables


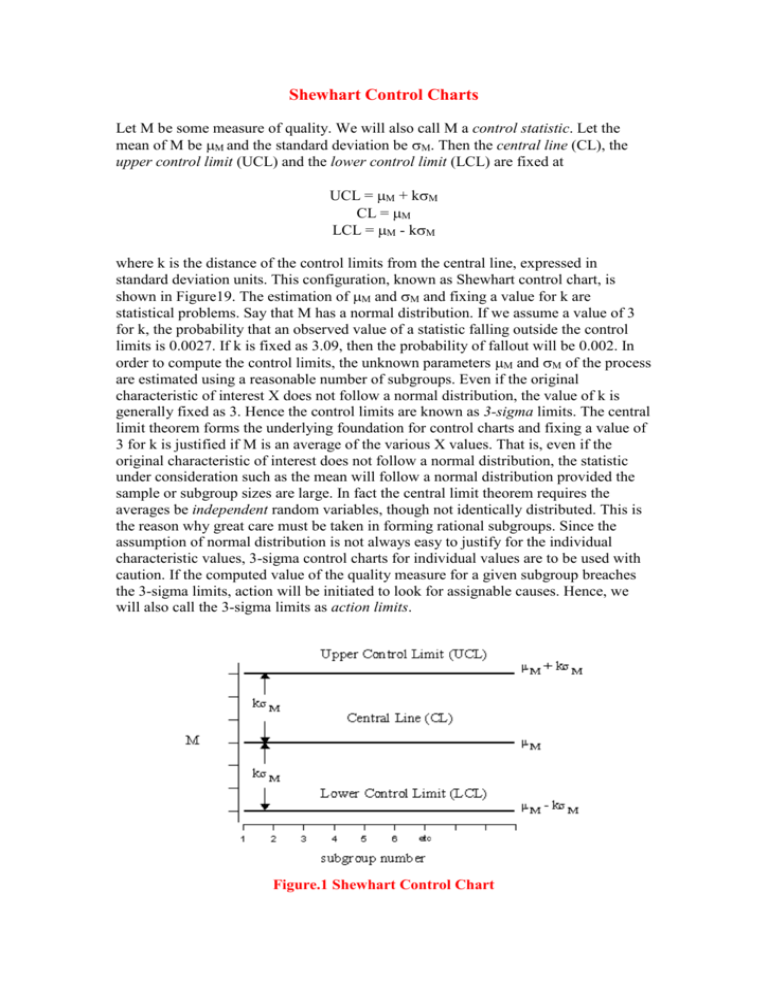

Shewhart Control Charts

Let M be some measure of quality. We will also call M a control statistic. Let the mean of M be $\mu_M$ and the standard deviation be $\sigma_M$. Then the central line (CL), the upper control limit (UCL) and the lower control limit (LCL) are fixed at

UCL = $\mu_M + k\sigma_M$

CL = $\mu_M$

LCL = $\mu_M - k\sigma_M$

where k is the distance of the control limits from the central line, expressed in standard deviation units. This configuration, known as the Shewhart control chart, is shown in Figure 1. Estimating $\mu_M$ and $\sigma_M$ and fixing a value for k are statistical problems. Suppose that M has a normal distribution. If we take k = 3, the probability of an observed value of the statistic falling outside the control limits is 0.0027. If k is fixed at 3.09, the probability of such a fallout is 0.002. To compute the control limits, the unknown process parameters $\mu_M$ and $\sigma_M$ are estimated from a reasonable number of subgroups. Even if the original characteristic of interest X does not follow a normal distribution, k is generally fixed at 3; hence the control limits are known as 3-sigma limits. The central limit theorem forms the underlying foundation for control charts, and fixing k = 3 is justified if M is an average of several X values. That is, even if the original characteristic of interest does not follow a normal distribution, a statistic such as the mean will approximately follow a normal distribution provided the sample or subgroup sizes are large enough. The central limit theorem does, however, require the values being averaged to be independent random variables, though not necessarily identically distributed. This is why great care must be taken in forming rational subgroups. Since the assumption of a normal distribution is not always easy to justify for individual characteristic values, 3-sigma control charts for individual values should be used with caution. If the computed value of the quality measure for a given subgroup breaches the 3-sigma limits, action will be initiated to look for assignable causes. Hence, we will also call the 3-sigma limits action limits.

Figure 1 Shewhart Control Chart
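The fallout probabilities quoted above can be checked numerically. The following Python sketch assumes a normally distributed control statistic with known mean and standard deviation; `fallout_probability` and `shewhart_limits` are hypothetical helper names introduced only for illustration.

```python
import math


def fallout_probability(k: float) -> float:
    """P(|Z| > k) for a standard normal Z, i.e. the chance that an in-control,
    normally distributed statistic falls outside limits placed k sigma from CL."""
    return math.erfc(k / math.sqrt(2.0))


def shewhart_limits(mu_m: float, sigma_m: float, k: float = 3.0):
    """Return (LCL, CL, UCL) = (mu_M - k*sigma_M, mu_M, mu_M + k*sigma_M)."""
    return mu_m - k * sigma_m, mu_m, mu_m + k * sigma_m


for k in (3.0, 3.09):
    print(f"k = {k}: P(outside limits) = {fallout_probability(k):.4f}")
# k = 3.0: P(outside limits) = 0.0027
# k = 3.09: P(outside limits) = 0.0020
```

The identity P(|Z| > k) = erfc(k/√2) avoids the need for a statistics library.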

The control charting procedure is actually a sequence of tests of hypotheses. If one is

using a Shewhart chart with mean as the quality measure, the chart is nothing but a

sequence of tests that the current mean is equal to the hypothesised mean (central

line). The control limits may therefore be viewed as confidence limits.

The parameters of the probability distribution of the quality characteristic may be

estimated using extensive past (or historical or retrospective) data. This may help to

hypothesise the constants of a control chart such as the mean, standard deviation etc.

Such control charts, based on the hypothesised values, will be called "standards given" charts. We will also describe nominal or target values as standard values. But it is

necessary that the target or nominal values are consistent with the historical

performance.

Certain terms relevant to the technique of control charting are summarised below:

central line: A line on a control chart representing the long-term average or a standard

(nominal or target) value of the quality measure.

control limits: These are the lines on a control chart which are used as the criteria for a

signal for action. That is, control limits help find whether the data indicate a state of

statistical control or not.

lower control limit: A (control) limit for the points below the central line.

upper control limit: A (control) limit for the points above the central line.

The Shewhart control charts can be classified as variables or attribute charts, depending on the type of quality measure M used. See the following table:

Control Chart Types

Quality measure M              Name of chart   Type
Average Xbar                   Xbar-chart      Variables
Standard deviation S           S-chart         Variables
Range R                        R-chart         Variables
Proportion defective p         p-chart         Attribute
Number of defectives np        np-chart        Attribute
Number of defects (count) c    c-chart         Attribute
Defects per unit u             u-chart         Attribute

If the characteristic of interest has a standard value (such as nominal or target), then

such charts are distinguished from those charts having no standard values.

Note that the Shewhart control chart model is based on the following assumptions:

1. The control statistic is normally distributed so that the control limit constant k

can be fixed as 3.

2. The variation between subgroups is absent when the process is in control.

3. The control statistic is independently distributed (and there is no

autocorrelation in the production process).

If any of the assumptions is violated, the frequency of false alarms (the chart showing an out-of-control situation when the process is actually in control) will increase. False alarms result in unnecessary engineering investigation of the process variables, stoppage of production, etc, and are expensive. Hence it is necessary to ensure that the control chart assumptions are not grossly violated.

Establishing a Control Procedure

The following are the steps for establishing control of a production process

recommended in ANSI/ASQC Standards A1 (1987) and B3 (1986). Steps 1 to 4 are

preliminary ones, steps 5 to 7 are for starting the control chart, while steps 8 to 11

relate to using a control chart during production. It should be noted that these steps are

merely guidelines, and the actual practice may vary depending on the type and nature

of the industry or process.

1. Selection of Quality Characteristic

Select the characteristic(s) affecting the performance of the product. Characteristics

selected may be features of materials or the component parts or even finished product.

(eg the COUNT of cotton yarn produced by a spinning machine, by which we mean the number of 840-yard lengths in one pound of yarn).

2. Analysis of Production Process

For the quality characteristic(s) selected, study the production process in detail to determine the kind and location of defects that are likely to give rise to special causes of variation. Also:

Review the requirements imposed by the specification limits.

Study the relation between each production step and the selected characteristic, noting

where and how variation may arise from causes associated with raw material,

machine, human operation, etc.

Study the method of inspecting a unit, and avoid inspection errors due to faulty

gauges, human errors, etc.

Decide whether the entire output of a product forms a single process having a

common cause system or two or more distinct streams (eg different conveyor lines,

machines, shifts, operators, etc, may warrant separate controls).

Decide the earliest point in the production process at which inspection and testing can

be carried out.

3. Planning Subgrouping and Data collection

Decide what quality data should be recorded, and how to divide the data into

subgroups. Also bear in mind the following points:

Clear instructions for inspecting an individual unit and recording the result are

required. The record may be a measured value or a symbol to denote whether the unit

is conforming or not, depending on the type of chart to be used.

Decide how the observed values are to be grouped, so that the units in any subgroup

are produced under identical conditions. Subgrouping requires technical knowledge of

the production process and familiarity with production conditions. While making a

decision on subgrouping based on time, such as once during every half an hour, hour

or day or from every production lot, etc, the periodic factors associated with the

production process should not coincide with the time of sampling and such biases

should be avoided. If inspection of a unit is simple, like using a ‘go and no-go gauge’,

a sample of 4 or 5 every half hour or every hour is preferable. If sampling is done

frequently, a consecutive 4 or 5 or even 10 units may be grouped to form a subgroup.

When subgrouping is based on the order of production, use of small and frequent

subgroups is preferable to infrequent large subgroups.

Keep all records of inspection data (check sheets) in a complete form for future

reference so that one can identify the source, time, location of the trouble, etc, easily

and quickly.

4. Choice of Quality Measures

Decide the statistical measures of quality to be used for the control chart(s).

Sometimes the unit must be noted on an attribute basis and the control chart may be of

p or np type (to be discussed later).

5. Collection and Analysis of Preliminary data

Once decisions have been made on the choice of characteristic, subgroup planning

and the statistical measures used for the control chart, collect and analyse some

inspection data to establish a preliminary or trial control chart, ie the central line and

control limits. It is desirable to have sample-by-sample data for at least 25 subgroups.

If past data are available, they may be directly used for subgrouping.

6. Establishment of Control Limits

From the analysis of the preliminary data, establish control limits as action limits. The

control limits are to be used as action limits for future inspection results as they are

plotted subgroup by subgroup. Use of 3-sigma limits is generally recommended,

employing the relevant formulae to compute the central line and control limits.

7. Preparing Control Chart for Use

On a suitable form or squared paper, lay out a chart with vertical scales at the left for

the statistical measures chosen and with a horizontal scale for the subgroup number,

possibly supplemented by date and sample number. Computer software also proves

handy for this purpose.

8. Plotting Points on the Control Chart and Taking Action

Decide on the general type of action that is to be taken if a point falls outside the

control limits.

The action may be on the lot of product sampled or on the production process. As

soon as the results of inspection are available from a sample, compute the value of the

statistical measure and plot a point on the chart at once. If a point falls outside control

limits, take the action that is deemed appropriate. Even though all the points fall

within the control limits, indications of trouble or change in the process may be

observed by unusual patterns such as the following:

a series of points falling close to one of the control limits

a long series of points falling above or below the central line

a series of points revealing a trend.
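The patterns listed above can be screened for automatically once the points are plotted. The sketch below is a hypothetical Python helper, not part of the ANSI/ASQC guidelines: the text does not say how long a "long series" or a "trend" must be, so the `run_length` threshold is an assumption left to the user.

```python
from typing import List, Sequence


def points_outside(points: Sequence[float], lcl: float, ucl: float) -> List[int]:
    """0-based indices of points falling outside the control limits."""
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]


def one_sided_runs(points: Sequence[float], center: float, run_length: int) -> List[int]:
    """0-based indices at which at least `run_length` consecutive points lie on
    the same side of the central line. A point exactly on the line breaks a run."""
    hits, run, side = [], 0, 0
    for i, x in enumerate(points):
        s = (x > center) - (x < center)  # +1 above, -1 below, 0 on the line
        if s != 0 and s == side:
            run += 1
        else:
            side, run = s, (1 if s != 0 else 0)
        if run >= run_length:
            hits.append(i)
    return hits


def trends(points: Sequence[float], run_length: int) -> List[int]:
    """0-based indices at which at least `run_length` consecutive points are
    strictly increasing or strictly decreasing."""
    hits, up, down = [], 1, 1
    for i in range(1, len(points)):
        up = up + 1 if points[i] > points[i - 1] else 1
        down = down + 1 if points[i] < points[i - 1] else 1
        if up >= run_length or down >= run_length:
            hits.append(i)
    return hits


pts = [0.1, 0.3, 0.2, 0.4, 0.5, 0.6, 0.7, 0.9]
print(one_sided_runs(pts, center=0.0, run_length=7))  # [6, 7]
```

The thresholds should be chosen together with the number of points examined, since longer sequences give more chances for a chance-cause run to appear.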

9. Assumption of Existence of Control

It is usually not safe to assume that a state of control exists before 25 successive

subgroup points plotted fall within the 3-sigma limits. In addition, if not more than 1

out of 35, or not more than 2 out of 100, fall outside the 3-sigma control limits, a state

of control may ordinarily be assumed to exist.

10. Review of Control Chart Standards

The standards initially adopted for constructing the control charts should usually be

reviewed after 10 to 25 points have been plotted. If special causes are located and

eliminated, it is necessary to alter the standard (limits). Even though a fairly high

degree of control is attained, it is desirable to have a definite schedule for review, such

as after every 50 or 100 points.

11. Record Keeping

It is often necessary to keep all control chart records as a permanent record of quality

history. These records are also useful in maintaining good producer and consumer

relationship.

Some of the concepts, such as computing the control limits and drawing a control chart, will be explained in the following sections. Some theory behind control charting has already been presented.

In the next section, the construction of the Xbar chart and related control charts is discussed using an example.

Xbar and Related Control Charts

The Xbar-chart is used to control the mean of a quality characteristic (ie the quality measure M is the mean). This chart will be accompanied by either the range (R) chart or the standard deviation (S) chart, which will monitor any increase in (within-subgroup) variability over time.

To construct both charts, it is necessary to estimate the mean ($\mu$) and standard deviation ($\sigma$) of the quality characteristic during a state of control (ie when the process is subject only to chance causes) using historical data. The approach followed in SPC for estimating $\mu$ and $\sigma$ will be explained using the following example.

The estimated values of $\mu$ and $\sigma$ can be used in constructing the control chart limits. Quick methods for computing the control limits using published control limit factors will also be discussed in this section.

Cotton yarn is produced by a spinning machine (called a ring spinning frame). This machine draws slivers of cotton (which are produced by several preparatory

machines) and spins them into yarn of continuous lengths. A typical spinning mill will

have a number of spinning frames, each machine having a number of spindles with

the spinning operation done by each of the spindles. Let us consider controlling the

process of spinning yarn with respect to the quality characteristic COUNT of yarn

produced by a given spinning frame. The term COUNT means the number of 840-yard lengths in one pound of yarn. Obviously, the higher the COUNT, the thinner the

yarn. A unit for inspection purposes is fixed at a 120 yard length of yarn (called a lea).

Lea testing and measuring instruments are available which will quickly determine the

quality characteristics COUNT, strength, number of thick and thin places, etc.

Let the nominal or target COUNT be 40, ie a pound of yarn will give 40(840) = 33600

yards of length. Assume that the lower and upper specification limits for COUNT are

respectively 39.8 and 40.2.

The mill has been sampling five leas from randomly selected spindles from the

spinning frame for testing during a production shift. On some days/shifts samples

were not taken. Multiple samples were taken on a few shifts. Table 2 provides the

historical data collected by the mill. This Table indicates certain important process

conditions that were noted during the period of study.

The mill found that the input for the spinning machine, namely the yarn slivers

produced in the preparatory process, was not uniform during certain periods. Such

cases, indicated as 'input sliver problem', occurred intermittently. It took some time to

locate the sources of trouble in the preparatory stages and correct this problem.

Samples numbered 17 and 34 are associated with clear (engineering) evidence that

they represent unusual production conditions or measurement problems. These

samples must be dropped. The same is the case with subgroups associated with 'input

sliver problem' and hence the samples numbered 4, 14, and 21 are also dropped. All

cases where there is no strong technical evidence for lack of control such as sample 25

(casual operator employed) will be included in the analysis using control charts.

It is also very likely that certain unusual production conditions or special causes

would have existed during the period of data collection which are not evident in the

ordinary course of production operations. A trial control chart will be used to detect

the presence of special causes so that further technical investigation can be initiated to

locate and eliminate the sources of trouble.

TABLE 2 Retrospective Data for Trial Control Chart

Sample #  Date   Shift  Obs-1   Obs-2   Obs-3   Obs-4   Obs-5   Remarks
1         28-09  1      40.029  39.928  39.998  40.053  40.004
2         28-09  2      39.984  39.995  40.089  40.034  40.080
3         28-09  3      39.971  39.966  39.996  39.957  39.918
4         29-09  1      41.009  40.895  40.990  41.041  40.997  input sliver problem
5         29-09  2      40.033  39.978  40.066  40.018  39.994
6         29-09  3      40.006  40.001  40.041  39.942  39.904
7         30-09  2      39.968  40.091  40.024  40.008  40.006
8         30-09  3      40.000  39.952  39.941  39.988  40.065
9         04-10  1      40.070  40.019  39.901  40.004  39.980
10        04-10  2      40.005  39.965  40.053  39.963  39.981
11        04-10  3      40.004  39.996  40.037  40.057  39.995
12        05-10  1      40.049  40.031  39.953  40.032  40.025
13        05-10  2      40.041  39.956  40.018  39.853  39.991
14        05-10  3      40.508  40.989  40.950  40.839  41.005  input sliver problem
15        06-10  2      40.027  39.932  39.994  39.999  40.010
16        06-10  3      40.092  40.016  40.030  40.002  40.014
17        07-10  1      40.054  *       *       *       *       spinning motor failed
18        07-10  2      40.072  39.967  40.073  39.922  39.918
19        07-10  3      40.018  39.939  39.965  40.064  39.994
20        08-10  1      40.060  40.005  40.010  39.973  40.082
21        08-10  2      39.031  38.076  38.996  39.012  39.598  input sliver problem
22        08-10  3      39.975  40.006  39.960  40.143  39.981
23        11-10  1      39.949  40.032  39.912  40.002  39.997
24        11-10  1      40.004  40.032  40.020  40.063  40.063
25        11-10  2      40.152  40.011  40.067  39.999  39.977  casual operative
26        11-10  3      40.057  39.995  40.024  40.006  39.939
27        12-10  1      40.055  40.074  40.053  39.966  40.088
28        12-10  2      40.048  40.006  39.965  39.977  39.984
29        12-10  3      39.952  40.053  39.968  39.914  40.028
30        13-10  1      40.006  39.980  40.071  40.003  40.017
31        13-10  2      39.934  39.993  39.993  40.072  39.910
32        13-10  2      40.015  40.013  40.012  40.031  40.067
33        13-10  3      39.941  39.981  40.077  39.971  39.922
34        14-10  1      60.027  59.936  60.012  40.068  40.037  count mix up in lab
35        14-10  2      39.993  40.067  40.052  40.023  40.004
36        14-10  3      40.087  40.054  39.995  39.943  40.031
37        15-10  1      40.948  40.974  39.945  40.029  41.023
38        15-10  2      39.897  39.958  40.003  40.056  40.095
39        15-10  3      40.068  40.019  40.111  40.021  39.954

How COUNT varies during a shift is important for rational subgrouping and

effectiveness of the control charts. More studies must be done by collecting data in the

same shift at different time intervals to understand how other process variables such

as interference due to doffing, operatives, maintenance schedules, etc, affect COUNT.

They may suggest more frequent sampling during a given shift of operation, etc. With

the available data, the subgrouping is made based on shifts over time.

Let us first quickly explore the distribution of COUNT (without samples 4, 14, 17, 21

and 34) using the following plots:

Figure 2 Plots to Explore the Distribution of COUNT

Obviously the historical data appear to indicate mostly periods of stable production

(which can be modelled by a normal distribution) as well as some unstable periods

which may be associated with special causes. We will use the control charts to test

this.

In order to estimate the true mean ($\mu$) or standard deviation ($\sigma$) of the quality characteristic COUNT, the historical data will NOT be pooled. The retrospective data may contain periods dominated by one or more special causes, and a pooled estimate of the standard deviation can be used only when the process is in control, ie dominated only by chance causes. The Shewhart control charts allow only variation within a subgroup; any extra variation between subgroups is inadmissible and is attributed to the presence of special causes. For any given subgroup i, the usual standard deviation $S_i$ (n - 1 in the divisor) or the range $R_i$ will be used to estimate $\sigma$. If there are k (say) such subgroups, the mean of the k subgroup standard deviations ($S_i$ values) or ranges ($R_i$ values) will be used to estimate the process $\sigma$. Similarly, the mean of the k subgroup means ($\bar{X}_i$ values, say) is used to estimate the true process mean $\mu$. Consider the following table, which gives the means, standard deviations and ranges for the COUNT data. Note that this table omits samples 4, 14, 17, 21 and 34 and relates to a total of 34 subgroups only. The original sample numbers are listed alongside the subgroup numbers for easy reference.

Table 3 Table of Subgroup Means, Ranges and Standard Deviations

Old sample #  Subgroup i  Xbar_i   R_i    S_i
1             1           40.0024  0.125  0.046971
2             2           40.0364  0.105  0.047784
3             3           39.9616  0.078  0.028343
5             4           40.0178  0.088  0.034296
6             5           39.9788  0.137  0.054888
7             6           40.0194  0.123  0.044998
8             7           39.9892  0.124  0.048915
9             8           39.9948  0.169  0.061933
10            9           39.9934  0.090  0.037320
11            10          40.0178  0.062  0.027797
12            11          40.0180  0.096  0.037417
13            12          39.9718  0.188  0.073578
15            13          39.9924  0.095  0.036060
16            14          40.0308  0.090  0.035626
18            15          39.9904  0.155  0.077378
19            16          39.9960  0.125  0.048275
20            17          40.0260  0.109  0.044153
22            18          40.0130  0.183  0.074542
23            19          39.9784  0.120  0.047564
24            20          40.0364  0.059  0.026235
25            21          40.0412  0.175  0.070279
26            22          40.0042  0.118  0.043356
27            23          40.0472  0.122  0.047620
28            24          39.9960  0.083  0.032673
29            25          39.9830  0.139  0.056727
30            26          40.0154  0.091  0.033872
31            27          39.9804  0.162  0.062883
32            28          40.0276  0.055  0.023341
33            29          39.9784  0.155  0.059923
35            30          40.0278  0.074  0.031316
36            31          40.0220  0.144  0.055453
37            32          40.0638  0.329  0.123918
38            33          40.0018  0.198  0.078305
39            34          40.0346  0.157  0.058901
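Each row of Table 3 can be reproduced from the raw observations in Table 2. A minimal Python sketch using the standard `statistics` module (`statistics.stdev` uses the n - 1 divisor, as in the text); `subgroup_stats` is a hypothetical helper name.

```python
import statistics


def subgroup_stats(obs):
    """Return (mean, range, sample standard deviation with n - 1 divisor)."""
    return statistics.mean(obs), max(obs) - min(obs), statistics.stdev(obs)


# Sample 1 of Table 2 (subgroup i = 1 in Table 3)
sample_1 = [40.029, 39.928, 39.998, 40.053, 40.004]
mean_1, range_1, sd_1 = subgroup_stats(sample_1)
print(f"{mean_1:.4f} {range_1:.3f} {sd_1:.6f}")  # 40.0024 0.125 0.046971

# Samples with missing readings (such as sample 17) or with documented special
# causes are excluded before this step, as described in the text.
```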

The overall mean of the 34 (= k) subgroup means estimates $\mu$. Let us denote this grand or overall mean by $\bar{\bar{X}}$ (X double bar). That is,

$\bar{\bar{X}} = \frac{1}{k}\sum_{i=1}^{k}\bar{X}_i = (40.0024 + 40.0364 + \dots + 40.0018 + 40.0346)/34 = 40.008$,

where $\bar{X}_i$ is the ith subgroup mean. The process standard deviation is estimated as

$\hat{\sigma} = \bar{S}/c_4$, where $\bar{S} = \frac{1}{k}\sum_{i=1}^{k} S_i$

is the average of the subgroup standard deviations, $S_i$ is the standard deviation of the ith subgroup, and $c_4$ is a constant that ensures an unbiased estimator of $\sigma$. That is, $c_4 = E(S)/\sigma$; hence the constant $c_4$ is known as the unbiasing constant. $c_4$ is purely a function of the subgroup size n, and its values are given in Appendix 1 to this chapter. For the COUNT data,

$\bar{S} = (0.046971 + 0.047784 + \dots + 0.078305 + 0.058901)/34 = 0.050372$,

giving, with $c_4 = 0.94$ for n = 5,

$\hat{\sigma} = 0.050372/0.94 = 0.0535872$.

It is also possible to estimate the process sigma using ranges. The estimator is then

$\hat{\sigma} = \bar{R}/d_2$, where $\bar{R} = \frac{1}{k}\sum_{i=1}^{k} R_i$

is the mean of the subgroup ranges and $d_2$ is the unbiasing constant for the range, that is, $d_2 = E(R)/\sigma$. Values of $d_2$ are given in the appendix for selected subgroup sizes. For the COUNT data, we find

$\bar{R} = (0.125 + 0.105 + \dots + 0.198 + 0.157)/34 = 0.12715$,

yielding $\hat{\sigma} = \bar{R}/d_2 = 0.12715/2.326 = 0.0546647$. In general (ie if the subgroup size n > 2), the range estimate of $\sigma$ is less efficient than the estimate based on the standard deviation.
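The summary estimates above can be reproduced from Table 3 with a short Python sketch. The constants $c_4 = 0.94$ and $d_2 = 2.326$ are the n = 5 values used in the text; the variable names are illustrative only.

```python
# Table 3 values (k = 34 subgroups of size n = 5)
MEANS = [
    40.0024, 40.0364, 39.9616, 40.0178, 39.9788, 40.0194, 39.9892, 39.9948, 39.9934,
    40.0178, 40.0180, 39.9718, 39.9924, 40.0308, 39.9904, 39.9960, 40.0260, 40.0130,
    39.9784, 40.0364, 40.0412, 40.0042, 40.0472, 39.9960, 39.9830, 40.0154, 39.9804,
    40.0276, 39.9784, 40.0278, 40.0220, 40.0638, 40.0018, 40.0346,
]
RANGES = [
    0.125, 0.105, 0.078, 0.088, 0.137, 0.123, 0.124, 0.169, 0.090, 0.062, 0.096, 0.188,
    0.095, 0.090, 0.155, 0.125, 0.109, 0.183, 0.120, 0.059, 0.175, 0.118, 0.122, 0.083,
    0.139, 0.091, 0.162, 0.055, 0.155, 0.074, 0.144, 0.329, 0.198, 0.157,
]
SDS = [
    0.046971, 0.047784, 0.028343, 0.034296, 0.054888, 0.044998,
    0.048915, 0.061933, 0.037320, 0.027797, 0.037417, 0.073578,
    0.036060, 0.035626, 0.077378, 0.048275, 0.044153, 0.074542,
    0.047564, 0.026235, 0.070279, 0.043356, 0.047620, 0.032673,
    0.056727, 0.033872, 0.062883, 0.023341, 0.059923, 0.031316,
    0.055453, 0.123918, 0.078305, 0.058901,
]

C4 = 0.94    # c4 for n = 5
D2 = 2.326   # d2 for n = 5

k = len(MEANS)
x_double_bar = sum(MEANS) / k     # estimate of mu
s_bar = sum(SDS) / k              # average subgroup standard deviation
r_bar = sum(RANGES) / k           # average subgroup range
sigma_from_s = s_bar / C4         # unbiased S-based estimate of sigma
sigma_from_r = r_bar / D2         # unbiased R-based estimate of sigma

print(f"k = {k}, Xbarbar = {x_double_bar:.3f}")
print(f"Sbar = {s_bar:.6f}, sigma_hat(S) = {sigma_from_s:.5f}")
print(f"Rbar = {r_bar:.5f}, sigma_hat(R) = {sigma_from_r:.5f}")
```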

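The control limits of an Xbar-chart can be established using the above estimated values of $\mu$ and $\sigma$. If $X \sim N(\mu, \sigma)$, then $\bar{X} \sim N(\mu, \sigma/\sqrt{n})$, where the second argument denotes the standard deviation. Hence the 3-sigma control limits for the Xbar-chart are

$\mu \pm 3\sigma/\sqrt{n}$.

Accordingly, the control limits for the Xbar-chart based on the standard deviation estimate of $\sigma$ are

LCL = $\bar{\bar{X}} - 3\,\dfrac{\bar{S}/c_4}{\sqrt{n}}$,  UCL = $\bar{\bar{X}} + 3\,\dfrac{\bar{S}/c_4}{\sqrt{n}}$.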

For the COUNT data, it is easy to see that

LCL = $40.008 - 3(0.0535872/\sqrt{5}) = 39.936$,

UCL = $40.008 + 3(0.0535872/\sqrt{5}) = 40.080$.

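Similarly, the control limits for the Xbar-chart based on the range estimate of $\sigma$ are

LCL = $\bar{\bar{X}} - 3\,\dfrac{\bar{R}/d_2}{\sqrt{n}}$,  UCL = $\bar{\bar{X}} + 3\,\dfrac{\bar{R}/d_2}{\sqrt{n}}$.

The corresponding Xbar-chart control limits for the COUNT data are

LCL = $40.008 - 3(0.0546647/\sqrt{5}) = 39.935$,

UCL = $40.008 + 3(0.0546647/\sqrt{5}) = 40.081$.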
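A short Python sketch of the Xbar-chart limits, using the rounded summary values from the text ($\bar{\bar{X}} = 40.008$ and the two estimates of $\sigma$) and checking the Table 3 subgroup means against the S-based limits. `xbar_limits` is a hypothetical helper name.

```python
import math

# Subgroup means from Table 3
MEANS = [
    40.0024, 40.0364, 39.9616, 40.0178, 39.9788, 40.0194, 39.9892, 39.9948, 39.9934,
    40.0178, 40.0180, 39.9718, 39.9924, 40.0308, 39.9904, 39.9960, 40.0260, 40.0130,
    39.9784, 40.0364, 40.0412, 40.0042, 40.0472, 39.9960, 39.9830, 40.0154, 39.9804,
    40.0276, 39.9784, 40.0278, 40.0220, 40.0638, 40.0018, 40.0346,
]


def xbar_limits(center: float, sigma_hat: float, n: int, k: float = 3.0):
    """Return (LCL, UCL) = center -/+ k * sigma_hat / sqrt(n)."""
    half_width = k * sigma_hat / math.sqrt(n)
    return center - half_width, center + half_width


lcl_s, ucl_s = xbar_limits(40.008, 0.0535872, n=5)   # S-based sigma estimate
lcl_r, ucl_r = xbar_limits(40.008, 0.0546647, n=5)   # R-based sigma estimate
print(f"S-based limits: {lcl_s:.3f} to {ucl_s:.3f}")  # 39.936 to 40.080
print(f"R-based limits: {lcl_r:.3f} to {ucl_r:.3f}")  # 39.935 to 40.081

# Subgroup numbers (1-based, as in Table 3) whose mean plots outside the limits
outside = [i for i, m in enumerate(MEANS, start=1) if not lcl_s <= m <= ucl_s]
print(outside)  # []
```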

It is easy to compute the control limits using the control limit formulae appearing in the Appendix. The table there also gives certain constants ($A_2$, $A_3$, $B_1$, $B_2$, $B_3$, $B_4$, $D_1$, $D_2$, $D_3$, $D_4$), called control limit factors, for computing control limits. For example, Appendix 1 gives the control limits (based on the $\bar{R}$ estimate of $\sigma$) as $\bar{\bar{X}} \pm A_2\bar{R}$, with control limit factor $A_2 = 0.577$ for n = 5 (which is equal to $3/(d_2\sqrt{n})$). The control limit factors are useful for manual computation of control limits.
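The control limit factors are themselves simple functions of the unbiasing constants. The sketch below computes $A_2 = 3/(d_2\sqrt{n})$, as stated above, and, as an additional assumption not spelled out in this section, $A_3 = 3/(c_4\sqrt{n})$ for the S-based limits $\bar{\bar{X}} \pm A_3\bar{S}$. Both reproduce the limits computed earlier.

```python
import math


def factor_a2(d2: float, n: int) -> float:
    """A2 = 3 / (d2 * sqrt(n)): Xbar limits are Xbarbar +/- A2 * Rbar."""
    return 3.0 / (d2 * math.sqrt(n))


def factor_a3(c4: float, n: int) -> float:
    """A3 = 3 / (c4 * sqrt(n)): Xbar limits are Xbarbar +/- A3 * Sbar."""
    return 3.0 / (c4 * math.sqrt(n))


n, d2, c4 = 5, 2.326, 0.94
x_double_bar, r_bar, s_bar = 40.008, 0.12715, 0.050372

A2 = factor_a2(d2, n)
A3 = factor_a3(c4, n)
print(f"A2 = {A2:.3f}: {x_double_bar - A2 * r_bar:.3f} to {x_double_bar + A2 * r_bar:.3f}")
print(f"A3 = {A3:.3f}: {x_double_bar - A3 * s_bar:.3f} to {x_double_bar + A3 * s_bar:.3f}")
# A2 = 0.577: 39.935 to 40.081
# A3 = 1.427: 39.936 to 40.080
```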

The control limits will be displayed on a time sequence plot or run chart for the mean. That is, the subgroup means $\bar{X}_i$ will be plotted against the subgroup number i, with reference lines for the control limits. The overall mean $\bar{\bar{X}}$ will also be placed on the chart to produce a central line. The following is a MINITAB Xbar-chart output for the COUNT data, based on a within-subgroup estimate of $\sigma$.

Figure 4 Xbar-Chart

None of the plotted points (subgroup means $\bar{X}_i$) crosses the control limits, and hence we regard the mean COUNT as under control. Note that the significance of the subgroup means is tested using the estimated $\sigma$, which is based on the variation within the subgroups. In other words, the above chart indicates that there is no significant shift in the mean COUNT level over the production period covered by the 34 subgroups, given the amount of variation within a subgroup due to chance causes.

In order to answer the question of whether the variability within the subgroups is stable, either an R-chart (range chart) or an S-chart (standard deviation chart) will be used. These charts evaluate the variability within a process in terms of the subgroup ranges and standard deviations. One of these two charts will accompany the Xbar-chart for monitoring the variation within the subgroups over time.

S chart: The control limits are given by LCL = $B_3\bar{S}$ and UCL = $B_4\bar{S}$, with the central line being $\bar{S}$. For the given subgroup size of 5, one finds the control limit factors $B_3 = 0$ and $B_4 = 2.089$. For the COUNT data, $\bar{S} = 0.050372$ and hence

LCL = 0(0.050372) = 0 and UCL = 2.089(0.050372) = 0.1052.

These control limits are then placed on a run chart of $S_i$ values with a reference central line at $\bar{S} = 0.050372$. The following is the S-chart for the COUNT data drawn using MINITAB:

Figure 5 S-Chart

Subgroup #32 breaches the UCL of 0.1052. This means that the variability within the process represented by the 34 subgroups is not in control. Now consider the computation of control limits for the R-chart.

R chart: The control limits are given by LCL = $D_3\bar{R}$ and UCL = $D_4\bar{R}$, with the central line being $\bar{R}$. For the given subgroup size of 5, one finds the control limit factors $D_3 = 0$ and $D_4 = 2.115$. For the COUNT data, $\bar{R} = 0.12715$ and hence

LCL = 0(0.12715) = 0 and UCL = 2.115(0.12715) = 0.2689.

These control limits are then placed on a run chart of $R_i$ values with a reference central line at $\bar{R} = 0.12715$. The following is the R-chart for the COUNT data drawn using MINITAB:

Figure 6 R-Chart

Again subgroup #32 signals that the variability within the process is not under control.
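The S- and R-chart calculations can be sketched the same way. This Python fragment reuses the Table 3 values and the factors quoted in the text ($B_3 = 0$, $B_4 = 2.089$, $D_3 = 0$, $D_4 = 2.115$ for n = 5) and lists the subgroup numbers that plot outside each chart's limits.

```python
# Table 3: subgroup standard deviations S_i and ranges R_i (34 subgroups, n = 5)
S = [
    0.046971, 0.047784, 0.028343, 0.034296, 0.054888, 0.044998,
    0.048915, 0.061933, 0.037320, 0.027797, 0.037417, 0.073578,
    0.036060, 0.035626, 0.077378, 0.048275, 0.044153, 0.074542,
    0.047564, 0.026235, 0.070279, 0.043356, 0.047620, 0.032673,
    0.056727, 0.033872, 0.062883, 0.023341, 0.059923, 0.031316,
    0.055453, 0.123918, 0.078305, 0.058901,
]
R = [
    0.125, 0.105, 0.078, 0.088, 0.137, 0.123, 0.124, 0.169, 0.090, 0.062, 0.096, 0.188,
    0.095, 0.090, 0.155, 0.125, 0.109, 0.183, 0.120, 0.059, 0.175, 0.118, 0.122, 0.083,
    0.139, 0.091, 0.162, 0.055, 0.155, 0.074, 0.144, 0.329, 0.198, 0.157,
]

B3, B4 = 0.0, 2.089   # S-chart factors for n = 5, as quoted in the text
D3, D4 = 0.0, 2.115   # R-chart factors for n = 5, as quoted in the text

s_bar = sum(S) / len(S)
r_bar = sum(R) / len(R)
s_lcl, s_ucl = B3 * s_bar, B4 * s_bar
r_lcl, r_ucl = D3 * r_bar, D4 * r_bar

# Subgroup numbers (1-based, as in Table 3) whose statistic plots outside its limits
s_signals = [i for i, s in enumerate(S, start=1) if not s_lcl <= s <= s_ucl]
r_signals = [i for i, r in enumerate(R, start=1) if not r_lcl <= r <= r_ucl]

print(f"S-chart: CL = {s_bar:.6f}, UCL = {s_ucl:.4f}, signals = {s_signals}")
print(f"R-chart: CL = {r_bar:.5f}, UCL = {r_ucl:.4f}, signals = {r_signals}")
```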

We will be using either Xbar- and S-charts or Xbar- and R-charts for monitoring the process mean level and the variation within the process, respectively. It is therefore desirable to show the two charts on a single display, as shown below:

Figure 7 Two Charts On a Single Display

From the above analysis of retrospective data, we find that the quality characteristic

COUNT is not under control. The reasons for an increase in variability for subgroup

#32 must be explored and then the charts must be revised. This is discussed in the

next section.

Revision of Control Charts

When a signal (which could possibly be a false alarm) is obtained for lack of control

from a control chart, one usually looks for the presence of special causes. In the event

of finding and eliminating a special cause, it is necessary to revise the control limits

deleting the subgroup(s) which signalled the presence of special causes. It may also

be possible that this identified special cause affected the process represented by

certain subgroups adjacent to the one that signalled its presence. Any such subgroup

which was influenced by the special cause variables must be dropped. In other words,

we identify a set of subgroups that represents a process subjected to only chance

causes.

An out-of-control point on the Xbar-chart need not necessarily be an out-of-control point on the R- or S-chart (and vice versa). Such a point need not be dropped from the associated R- or S-chart, nor will it call for a revision of that chart. For a normally distributed quality characteristic X, the mean $\bar{X}$ and the sample variance $S^2$ are independently distributed, and hence such an action may be justified. (In the revised charts given in Figure 8, the out-of-control point #32 was dropped for both charts, but this need not be done.)

Consider the signal given by subgroup #32 in the case of the COUNT data. Assume that a process variable was identified that caused an increase in variability for subgroup #32. We will drop #32 and recompute the control limits without this subgroup. If, instead, a thorough investigation reveals no trouble with the process, then the signal given by subgroup #32 is a false alarm; we will not recompute the control limits but will use them directly for any real-time control of COUNT.

Figure 8 shows the revised control charts drawn for the COUNT data using

MINITAB. Subgroup #32 was ignored for control limit calculations but not for

display.

Figure 8 Revised Control Charts

Here all the points (other than subgroup #32) lie within the control limits (sometimes another revision may be necessary if a further point falls outside the revised limits). Hence, one sets the standard values for the mean and standard deviation as:

standard value for mean = $\bar{X}' = 40.00$

standard value for standard deviation = $\sigma' = \bar{S}/c_4 = 0.04814/0.94 = 0.0512$

It is also possible to fix a standard value for the range of subgroups of size 5 as $R' = d_2\sigma' = (2.326)(0.0512) = 0.1191$. This value differs slightly from the figure of 0.1210 displayed on the revised R-chart. The latter value can also be used as a standard value for the range, but estimation of the true process sigma using standard deviations is more efficient than estimation using ranges. When using MINITAB with standard values, they should be entered as the historical mean and sigma in the appropriate windows.
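The revision step can be sketched in Python as follows: drop subgroup 32 (assuming, as in the text, that a special cause was found), recompute $\bar{S}$ and $\bar{R}$, and derive the standard values. The standards-given Xbar limits at the end, $\bar{X}' \pm 3\sigma'/\sqrt{n}$, simply apply the earlier formula $\mu \pm 3\sigma/\sqrt{n}$ with the standard values in place of $\mu$ and $\sigma$; they are an illustration, not output taken from MINITAB.

```python
import math

# Table 3: subgroup standard deviations S_i and ranges R_i (34 subgroups, n = 5)
S = [
    0.046971, 0.047784, 0.028343, 0.034296, 0.054888, 0.044998,
    0.048915, 0.061933, 0.037320, 0.027797, 0.037417, 0.073578,
    0.036060, 0.035626, 0.077378, 0.048275, 0.044153, 0.074542,
    0.047564, 0.026235, 0.070279, 0.043356, 0.047620, 0.032673,
    0.056727, 0.033872, 0.062883, 0.023341, 0.059923, 0.031316,
    0.055453, 0.123918, 0.078305, 0.058901,
]
R = [
    0.125, 0.105, 0.078, 0.088, 0.137, 0.123, 0.124, 0.169, 0.090, 0.062, 0.096, 0.188,
    0.095, 0.090, 0.155, 0.125, 0.109, 0.183, 0.120, 0.059, 0.175, 0.118, 0.122, 0.083,
    0.139, 0.091, 0.162, 0.055, 0.155, 0.074, 0.144, 0.329, 0.198, 0.157,
]
C4, D2, N = 0.94, 2.326, 5
DROPPED = {32}   # subgroup assumed to be linked to a special cause

s_kept = [s for i, s in enumerate(S, start=1) if i not in DROPPED]
r_kept = [r for i, r in enumerate(R, start=1) if i not in DROPPED]

s_bar_rev = sum(s_kept) / len(s_kept)   # revised Sbar
sigma_std = s_bar_rev / C4              # standard value sigma'
r_std = D2 * sigma_std                  # standard value R' = d2 * sigma'
r_bar_rev = sum(r_kept) / len(r_kept)   # revised Rbar

mean_std = 40.00                        # standard value for the mean
half = 3 * sigma_std / math.sqrt(N)
print(f"Sbar = {s_bar_rev:.5f}, sigma' = {sigma_std:.4f}, R' = {r_std:.4f}, Rbar = {r_bar_rev:.4f}")
print(f"standards-given Xbar limits: {mean_std - half:.3f} to {mean_std + half:.3f}")
```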

Using the fixed standard values, one can draw Xbar- and R-charts or Xbar- and S-charts for future use, ie for on-line or real-time control. That is, the production process will

be sampled for quality monitoring, ie subgroups are formed one by one. As soon as a

subgroup is formed, the point must be plotted on the control charts for the standards

known case. If a later subgroup is associated with a special cause, then it is necessary

to disregard the subgroup while revising the standards or during the periodic revision

of the chart once 50 or 100 subgroups are taken. Before any real time control of the

process takes place, it is also necessary to compare the standard values with the

constraints imposed by the specifications. This is discussed below:

By natural process limits (also called natural tolerance limits), we mean the limits which include a stated fraction of the individuals in a population. For populations following a normal distribution, the natural process limits are $\mu \pm 3\sigma$. If placed around the standard level, these limits identify the boundaries which will include 99.7% of the individuals of a process that is properly centred and in a state of statistical control.

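Natural process limits will mostly be used to compare the natural capability of the process with the specification limits. Consider Figure 9. Here the proportion defective is

$p = \Phi\left(\dfrac{LSL - \mu}{\sigma}\right) + 1 - \Phi\left(\dfrac{USL - \mu}{\sigma}\right)$,

where LSL and USL are the lower and upper specification limits and $\Phi(z) = P(Z \le z)$ is the cumulative standard normal distribution function.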
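As an illustration, the natural process limits and the proportion defective for COUNT can be computed from the standard values fixed earlier ($\bar{X}' = 40.00$, $\sigma' = 0.0512$) and the specification limits 39.8 and 40.2. This Python sketch assumes a normal distribution and uses `statistics.NormalDist` from the standard library for $\Phi$; the helper names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def natural_limits(mu: float, sigma: float):
    """Natural process limits mu -/+ 3 sigma."""
    return mu - 3 * sigma, mu + 3 * sigma


def proportion_defective(mu: float, sigma: float, lsl: float, usl: float) -> float:
    """Phi((LSL - mu)/sigma) + 1 - Phi((USL - mu)/sigma) for a normal process."""
    below = STD_NORMAL.cdf((lsl - mu) / sigma)
    above = 1 - STD_NORMAL.cdf((usl - mu) / sigma)
    return below + above


mu, sigma = 40.00, 0.0512   # standard values from the revised charts
lsl, usl = 39.8, 40.2       # specification limits for COUNT
low, high = natural_limits(mu, sigma)
p = proportion_defective(mu, sigma, lsl, usl)
print(f"natural limits: {low:.4f} to {high:.4f}")  # 39.8464 to 40.1536
print(f"proportion defective: {p:.2e}")
```

Here the natural process limits fall inside the specification limits, so only a very small fraction of nonconforming leas would be expected from a centred, in-control process.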

Specification limits, as discussed earlier, are determined externally and have no

mathematical relationship with control limits. Specification limits should be

sufficiently wider than the natural process limits; otherwise, the percent defective

production will be high.

Figure 9 Natural Process and Specification Limits

Since the control limits of the Xbar-chart are set at $\mu \pm 3\sigma/\sqrt{n}$, the control limits will lie within the natural process limits, as can be seen from Figure 9a.

Figure 9a Natural Process and Control limits

Whenever a signal for lack of control is received from the control chart, one should check whether the value falling outside the control limits also exceeds the lower or upper specification limit. If it does, this indicates a major shift in the process level. It is usually desirable for the state of the art of the process to be such that the natural process limits are within the specification limits. The control limits of the Xbar-chart

are also ideally set at a distance of

inside the natural tolerance limits.

This is to reduce the risk of accepting an unsatisfactory shift in process level near the

specification limits.

In the above discussion, we have assumed that the values of $\mu$ and $\sigma$ are known rather than estimated from a large number of subgroups. If they are estimated, the interval that includes a desired fraction of individuals is called a statistical tolerance interval, and a constant different from 3 is used in constructing such intervals. This course does not cover this topic. We will discuss process capability ratios, which are more popular than statistical tolerance intervals, in a separate section. Before any real-time control begins, it is necessary to ensure that the process is capable of meeting the specification requirements, i.e., that it will avoid the production of nonconforming units.

Average Run Length (ARL) and Operating Characteristic (OC) Curves

The performance of a control chart is revealed by its ARL and OC curves.

By average run length (ARL), we mean the average number of times the process will

have been sampled before a shift in the process level is signalled by the control chart.

By operating characteristic (OC) curve, we mean a curve showing the probability of

accepting a process as a function of the process level. Recall that by a false alarm we mean the situation in which a control chart signals a lack of control when the process is in fact in control. The probability of such a false alarm can be read from the OC curve.

If the process is operating at an acceptable level, the probability that its control chart accepts the process as in control should be high. Equivalently, if the process is operating at an acceptable level, then the average run length at that process level should be very high. Similarly, if the process is operating at an unacceptable level, the probability of accepting the process should be low, and the control chart should signal a shift in the process level after only a small number of subgroups have been drawn. That is, the ARL at an unacceptable process level should be small.

If a sample is taken every h hours, then the quantity $ATS = ARL \times h$ is the average time to signal (ATS); that is, the ATS expresses the ARL in time units. It is also possible to express the ARL in terms of the number of units produced: the ARL can be multiplied by the number of units produced during a sampling interval, so that the performance of the control chart can be evaluated in terms of the quantity of units produced. We will use the ARL only, rather than these linear transformations of it.

OC Curve of $\bar{X}$-Chart

Suppose that we have an $\bar{X}$-chart configured only with action lines (that is, with only 3-sigma limits). Let $\mu_0$ be the acceptable process level and $\mu_1$ the unacceptable process level. Also let $\mu_1 = \mu_0 + k\sigma$, where $\sigma$ is the population standard deviation, which is not affected by a shift in the average level of the process. The constant k stands for the shift in the process level in standard deviation units. Let the out-of-control signal from the $\bar{X}$-chart be a point falling outside the control limits (upper or lower). For the $\bar{X}$-chart, the upper and lower control limits are $UCL = \mu_0 + 3\sigma/\sqrt{n}$ and $LCL = \mu_0 - 3\sigma/\sqrt{n}$, since $\bar{X}$ follows a normal distribution with mean $\mu$ and standard deviation $\sigma/\sqrt{n}$. Then the probability of accepting the process (i.e., of a subgroup average falling within the limits) at the unacceptable process level $\mu_1$ is

$$P_a = \Pr\{LCL \le \bar{X} \le UCL \mid \mu = \mu_1 = \mu_0 + k\sigma\}$$

or

$$P_a = \Phi\left(\frac{UCL - \mu_1}{\sigma/\sqrt{n}}\right) - \Phi\left(\frac{LCL - \mu_1}{\sigma/\sqrt{n}}\right),$$

where $\Phi$ denotes the standard cumulative normal distribution. Substituting for UCL and LCL and simplifying, one gets:

$$P_a = \Phi(3 - k\sqrt{n}) - \Phi(-3 - k\sqrt{n}).$$

Figure 10 shows the OC curves for a given level of (upward) shift k, in standard deviation units, for various subgroup sizes. Note that a larger subgroup size n leads to faster detection of shifts, i.e., it keeps $P_a$ low.
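
The expression $P_a = \Phi(3 - k\sqrt{n}) - \Phi(-3 - k\sqrt{n})$ is easy to tabulate. The following Python sketch computes points of OC curves like those in Figure 10; the function name `prob_accept` and the optional `L` parameter for the width of the limits are illustrative assumptions, not part of the original text.

```python
from math import erf, sqrt


def phi(z: float) -> float:
    """Cumulative standard normal distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def prob_accept(k: float, n: int, L: float = 3.0) -> float:
    """P_a for an Xbar-chart with L-sigma limits after a mean shift of k sigma.

    P_a = Phi(L - k*sqrt(n)) - Phi(-L - k*sqrt(n)).
    """
    shift = k * sqrt(n)
    return phi(L - shift) - phi(-L - shift)


shifts = [0.0, 0.5, 1.0, 1.5, 2.0]
for n in (1, 2, 5, 10):
    # One row per subgroup size: P_a at each shift k
    print(n, [round(prob_accept(k, n), 4) for k in shifts])
```

The n = 5 row reproduces the values used in the worked example that follows: 0.9973 at k = 0 and 0.0705 at k = 2.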

Figure 10 OC Curves of Xbar-Chart for Various Subgroup Sizes

If k is zero, then there is no shift in the process level. In such a case, $P_a$ is

$$\Phi(3) - \Phi(-3) = 0.99865 - (1 - 0.99865) = 0.9973.$$

This means that if the process is operating at an acceptable level, the control chart accepts it with a very high probability. Suppose that k = 2. This means that the process has shifted upwards to an unsatisfactory level two standard deviations above the acceptable level. Now $P_a$ stands for the probability of failing to pick up the shift in the process level with a single sample after the shift has occurred. Let the subgroup size be 5. In this case $P_a$ will be

$$\Phi(3 - 2\sqrt{5}) - \Phi(-3 - 2\sqrt{5}) = \Phi(-1.47214) - \Phi(-7.47214) = 0.070492 - 0.000000 \approx 0.0705,$$

which is a low figure. That is, the control chart will detect the shift with the first subgroup drawn after it with the high probability of $1 - 0.0705 = 0.9295$. In general, in the case of a shift in the process average, $1 - P_a$ is the probability of detecting the shift with one sample (subgroup) only. If the shift is not detected with the first subgroup, it may be detected with the second subgroup, and so on. Thus we may have a run of points falling within the control limits before a shift is detected by a point falling outside the limits. One therefore has the (geometric) run length (RL) distribution given in Table 4. Here the definition of run length includes the last subgroup, the one that gave the signal.

Table 4 Run Length Distribution

Run Length    Probability
1             $1 - P_a$
2             $P_a(1 - P_a)$
3             $P_a^2(1 - P_a)$
4             $P_a^3(1 - P_a)$
...           ...
m             $P_a^{m-1}(1 - P_a)$
...           ...

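The mean and variance of the run length are:

$$ARL = E(RL) = \sum_{m=1}^{\infty} m P_a^{m-1}(1 - P_a) = \frac{1}{1 - P_a},$$

$$V(RL) = \frac{P_a}{(1 - P_a)^2}.$$

For example, let the process level shift upwards by two standard deviations, with subgroups of size 5 as above, so that $P_a = 0.0705$. Then the average run length will be $1/(1 - 0.0705) = 1/0.9295 \approx 1.076$. That is, the chart will usually detect the shift in the process average with the first subgroup average plotted after the shift. The variance of this run length is $0.0705/0.9295^2 \approx 0.082$. By contrast, when there is no shift, $P_a = 0.9973$ and the in-control ARL is $1/0.0027 \approx 370$.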
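
The geometric run length distribution of Table 4 and its mean and variance can be checked numerically. The Python sketch below (function names are hypothetical) evaluates the closed forms and compares the ARL with a truncated sum of $m \cdot \Pr(RL = m)$.

```python
def rl_pmf(m: int, pa: float) -> float:
    """Pr(RL = m): m - 1 subgroups accepted, then a signal on the m-th."""
    return pa ** (m - 1) * (1.0 - pa)


def arl(pa: float) -> float:
    """Average run length 1 / (1 - P_a)."""
    return 1.0 / (1.0 - pa)


def rl_variance(pa: float) -> float:
    """Variance of the run length, P_a / (1 - P_a)^2."""
    return pa / (1.0 - pa) ** 2


def arl_by_summation(pa: float, terms: int = 100_000) -> float:
    """Truncated version of sum_{m>=1} m * Pr(RL = m), as a numerical check."""
    return sum(m * rl_pmf(m, pa) for m in range(1, terms + 1))


pa_shifted = 0.0705     # 2-sigma upward shift with n = 5
pa_in_control = 0.9973  # no shift
print(round(arl(pa_shifted), 3), round(rl_variance(pa_shifted), 4))
print(round(arl(pa_in_control), 1), round(arl_by_summation(pa_in_control), 1))
```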

For example, to draw the OC curve of an $\bar{X}$-chart having UCL = 11, LCL = 9, subgroup size n = 5 and $\sigma' = 0.70$, consider the following:

$$P_a = \Pr(LCL \le \bar{X} \le UCL) = \Phi(Z_{UCL}) - \Phi(Z_{LCL}),$$

where

$$Z_{UCL} = \frac{UCL - \mu}{\sigma'/\sqrt{n}} \quad \text{and} \quad Z_{LCL} = \frac{LCL - \mu}{\sigma'/\sqrt{n}}.$$

For the given problem, $\sigma'/\sqrt{n} = 0.3130495$. For assumed values of $\mu$, $P_a$ can then be computed. Using the $\mu$ and $P_a$ values, the OC curve is drawn as shown in Figure 11. This OC curve gives the $P_a$ values when the shift is either upward or downward. Note that $P_a$ is high at the central value 10 and starts to drop as the process level shifts away from 10. If one considers an unacceptable process level, say 11, $P_a$ is still as high as 0.5, which is not desirable.
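
The OC curve of Figure 11 can be tabulated from the formulas above. This Python sketch assumes the stated chart (UCL = 11, LCL = 9, n = 5, $\sigma' = 0.70$) and a grid of process means from 9 to 11 chosen for illustration; the helper names are hypothetical.

```python
from math import erf, sqrt


def phi(z: float) -> float:
    """Cumulative standard normal distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


UCL, LCL = 11.0, 9.0
N, SIGMA = 5, 0.70
SE = SIGMA / sqrt(N)  # standard deviation of the subgroup mean, about 0.3130495


def pa(mu: float) -> float:
    """Probability that a subgroup mean falls inside [LCL, UCL] when the process mean is mu."""
    z_ucl = (UCL - mu) / SE
    z_lcl = (LCL - mu) / SE
    return phi(z_ucl) - phi(z_lcl)


# OC curve points for process means 9.0, 9.1, ..., 11.0
for i in range(21):
    mu = 9.0 + 0.1 * i
    print(f"{mu:5.1f}  {pa(mu):.4f}")
```

At $\mu = 11$ the printed $P_a$ is 0.5000: a shift of the mean all the way to a control limit is detected by a single subgroup only half the time.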

Figure 11 OC Curve of an Xbar-Chart

OC Curve of R-chart

Table 5, giving the percentage points of the distribution of the relative range $W = R/\sigma$ (for a normal distribution), is useful in constructing the OC curve of an R-chart.

Table 5 Percentage Points of W

Entries are the w values for which $\Pr[W \le w]$ equals the probability in the first column.

$\Pr[W \le w]$   n = 4   n = 5   n = 6   n = 10
0.999            5.31    5.48    5.62    5.97
0.995            4.69    4.89    5.03    5.42
0.990            4.40    4.60    4.76    5.16
0.975            3.98    4.20    4.36    4.79
0.950            3.63    3.86    4.03    4.47
0.900            3.24    3.48    3.66    4.13
0.750            2.62    2.88    3.08    3.59
0.500            1.98    2.26    2.47    3.02
0.250            1.41    1.70    1.93    2.51
0.100            0.98    1.26    1.49    2.09
0.050            0.76    1.03    1.25    1.86
0.025            0.59    0.85    1.06    1.67
0.010            0.43    0.66    0.87    1.47
0.005            0.34    0.55    0.75    1.33
0.001            0.20    0.37    0.54    1.08

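The probability that R will be less than or equal to the UCL is the same as the probability that W is less than or equal to $UCL/\sigma$. Hence, for a tabulated probability $P_a = \Pr[W \le w]$, the corresponding process standard deviation is $\sigma = UCL/w$. Using Table 5, the w values are obtained and the OC curve of an R-chart is then drawn. For example, let n = 4 and UCL = 2.0 (for n = 4 the lower control limit of the R-chart is zero, so only the UCL matters). The points for the OC curve, expressed in terms of $\sigma$ and of the true mean range $d_2\sigma$, are shown below: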

Table 6 Points for drawing OC Curve of an R-chart

$P_a = \Pr[W \le w]$   w (n = 4)   $\sigma = UCL/w$   true mean range $d_2\sigma$ ($d_2 = 2.059$)
0.999                  5.31        0.3766             0.7755
0.995                  4.69        0.4264             0.8780
0.990                  4.40        0.4545             0.9359
0.975                  3.98        0.5025             1.0347
0.950                  3.63        0.5510             1.1344
0.900                  3.24        0.6173             1.2710
0.750                  2.62        0.7634             1.5718
0.500                  1.98        1.0101             2.0798
0.250                  1.41        1.4184             2.9206
0.100                  0.98        2.0408             4.2020
0.050                  0.76        2.6316             5.4184
0.025                  0.59        3.3898             6.9797
0.010                  0.43        4.6512             9.5767
0.005                  0.34        5.8824             12.1118
0.001                  0.20        10.0000            20.5900
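
The construction of Table 6 is a simple transformation of the n = 4 column of Table 5. The Python sketch below reproduces it, assuming UCL = 2.0 and $d_2 = 2.059$ for n = 4; the variable and function names are illustrative.

```python
# Percentage points of W = R/sigma for n = 4, taken from Table 5: (Pr[W <= w], w)
W_POINTS_N4 = [
    (0.999, 5.31), (0.995, 4.69), (0.990, 4.40), (0.975, 3.98), (0.950, 3.63),
    (0.900, 3.24), (0.750, 2.62), (0.500, 1.98), (0.250, 1.41), (0.100, 0.98),
    (0.050, 0.76), (0.025, 0.59), (0.010, 0.43), (0.005, 0.34), (0.001, 0.20),
]
UCL = 2.0      # upper control limit of the R-chart (the LCL is zero for n = 4)
D2_N4 = 2.059  # d2 constant for subgroups of size 4


def r_chart_oc_points(ucl: float, d2: float, points=W_POINTS_N4):
    """Return (P_a, sigma, true mean range) triples for the R-chart OC curve.

    Pr[R <= UCL] = Pr[W <= UCL/sigma], so each tabulated (P_a, w) pair
    corresponds to the process sigma = UCL / w.
    """
    rows = []
    for pa, w in points:
        sigma = ucl / w
        rows.append((pa, sigma, d2 * sigma))
    return rows


for pa, sigma, mean_range in r_chart_oc_points(UCL, D2_N4):
    print(f"{pa:5.3f}  {sigma:8.4f}  {mean_range:8.4f}")
```

Plotting $P_a$ against either the sigma column or the mean-range column gives the OC curve.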

Charts with Probability Limits

The standard control chart factors are based on the assumption that the control statistic is normally distributed, so that "three sigma" limits can be used. This may be hard to justify for statistics such as the sample variance or the range. One therefore makes use of the exact distribution of the control statistic, and control limits fixed in this manner are known as probability limits.

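When working with an S-chart, one can avoid using the control limit factors, namely $B_1$ to $B_4$, and use the probability limits directly. It is known that if $X \sim N(\mu, \sigma)$, then

$$\frac{(n-1)S^2}{\sigma^2} \sim \chi^2 \text{ with } n - 1 \text{ d.f.}$$

It therefore follows that

$$\Pr\left\{\sigma\sqrt{\frac{\chi^2_{\alpha/2,\,n-1}}{n-1}} \le S \le \sigma\sqrt{\frac{\chi^2_{1-\alpha/2,\,n-1}}{n-1}}\right\} = 1 - \alpha,$$

where $\chi^2_{p,\,n-1}$ denotes the value below which a fraction p of the chi-square distribution with n - 1 d.f. lies. If the process sigma is estimated, then, for a false alarm probability of $\alpha = 0.002$, the control limits become

$$LCL = \hat{\sigma}\sqrt{\frac{\chi^2_{0.001,\,n-1}}{n-1}} \quad \text{and} \quad UCL = \hat{\sigma}\sqrt{\frac{\chi^2_{0.999,\,n-1}}{n-1}},$$

where $\hat{\sigma} = \bar{S}/c_4$ is the usual unbiased estimate of sigma. For a subgroup size of 4, one has $c_4 = 0.921$, $\chi^2_{0.001,\,3} = 0.024$ and $\chi^2_{0.999,\,3} = 16.266$. Once $\hat{\sigma}$ is computed, the computation of the control limits is straightforward.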

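Some practitioners use the sample variance $S^2$ directly, instead of S, and draw an $S^2$ chart. Note that $S^2$ is an unbiased estimate of $\sigma^2$. For k given rational subgroups, one may compute the mean of the sample variances

$$\overline{S^2} = \frac{1}{k}\sum_{i=1}^{k} S_i^2$$

and set the control limits at

$$LCL = \frac{\overline{S^2}}{n-1}\,\chi^2_{0.001,\,n-1} \quad \text{and} \quad UCL = \frac{\overline{S^2}}{n-1}\,\chi^2_{0.999,\,n-1},$$

where n is the subgroup size. The control limits of the S-chart based on probability limits are not the square roots of the corresponding control limits of the $S^2$ chart, since $\sqrt{\overline{S^2}}$ is not equal to $\bar{S}/c_4$.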
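
The S-chart and $S^2$ chart probability limits can be computed as follows. This is a Python sketch assuming n = 4 and the constants quoted in the text ($c_4 = 0.921$, $\chi^2_{0.001,3} = 0.024$, $\chi^2_{0.999,3} = 16.266$); for other subgroup sizes the chi-square quantiles could be obtained from, for example, SciPy's `scipy.stats.chi2.ppf`. The subgroup standard deviations and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean

# Constants for subgroups of size n = 4, as quoted in the text
N = 4
C4 = 0.921
CHI2_LOW = 0.024    # chi-square value with probability 0.001 below it, 3 d.f.
CHI2_HIGH = 16.266  # chi-square value with probability 0.999 below it, 3 d.f.


def s_chart_limits(s_values, n=N, c4=C4, lo=CHI2_LOW, hi=CHI2_HIGH):
    """Probability limits for an S-chart with sigma estimated by S-bar / c4."""
    sigma_hat = mean(s_values) / c4
    return sigma_hat * sqrt(lo / (n - 1)), sigma_hat * sqrt(hi / (n - 1))


def s2_chart_limits(s_values, n=N, lo=CHI2_LOW, hi=CHI2_HIGH):
    """Probability limits for an S^2 chart based on the mean sample variance."""
    s2_bar = mean([s * s for s in s_values])
    return s2_bar * lo / (n - 1), s2_bar * hi / (n - 1)


# Hypothetical subgroup standard deviations from k = 5 rational subgroups
s_values = [0.8, 1.1, 0.9, 1.3, 1.0]
print(s_chart_limits(s_values))
# Square roots of the S^2 limits differ from the S-chart limits,
# because sqrt(mean of S^2) is not equal to S-bar / c4
print(tuple(sqrt(v) for v in s2_chart_limits(s_values)))
```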

An R-chart with probability limits may also be constructed using the percentage points of the distribution of the relative range W. For example, when the subgroup size is five, the probability limits for an R-chart having a 0.002 false alarm probability are (from Table 5)

$$LCL = 0.37\,\bar{R}/d_2 \quad \text{and} \quad UCL = 5.48\,\bar{R}/d_2.$$
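
The R-chart probability limits follow the same pattern. A minimal Python sketch, assuming n = 5, the Table 5 points 0.37 and 5.48, and $d_2 = 2.326$ for n = 5; the mean range used in the example and the function name are hypothetical.

```python
D2_N5 = 2.326     # d2 constant for subgroups of size 5
W_LOW_N5 = 0.37   # Pr[W <= 0.37] = 0.001 for n = 5 (Table 5)
W_HIGH_N5 = 5.48  # Pr[W <= 5.48] = 0.999 for n = 5 (Table 5)


def r_chart_probability_limits(r_bar: float, d2: float = D2_N5,
                               w_low: float = W_LOW_N5, w_high: float = W_HIGH_N5):
    """LCL and UCL of an R-chart with total false alarm probability 0.002."""
    sigma_hat = r_bar / d2  # estimate of the process sigma from the mean range
    return w_low * sigma_hat, w_high * sigma_hat


print(r_chart_probability_limits(4.2))  # hypothetical R-bar = 4.2
```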

Supplementary Run Rules for Shewhart Control Charts

A control chart operated with only action limits is slow in detecting small shifts in the process level, because the signal comes only if a point falls above or below the control limits. In order to improve the performance or sensitivity of the chart, it is usual to consider warning limits, which are set at $\pm 2\sigma$ as well as at $\pm 1\sigma$ (here the symbol $\sigma$ represents the standard deviation of the control statistic, i.e., $\sigma_M$). The rules for using these limits vary in practice. In general, the warning limits are used in the same manner as control limits, but a count of continuous or interrupted runs of points lying between the warning and action limits is also taken as a signal for action. A control chart with the three warning zones is shown in Figure 12:

Zone A: $+2\sigma$ to $+3\sigma$ and $-2\sigma$ to $-3\sigma$

Zone B: $+1\sigma$ to $+2\sigma$ and $-1\sigma$ to $-2\sigma$

Zone C: $-1\sigma$ to $+1\sigma$

Figure 12 Control Chart with Warning Lines

When the process is in control, the probability that a point will fall in each of the zones can be found. If the characteristic of interest $X \sim N(\mu, \sigma)$, then $\bar{X} \sim N(\mu, \sigma/\sqrt{n})$, or

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \sim N(0, 1).$$

The control limits for the $\bar{X}$-chart are $\mu \pm 3\sigma/\sqrt{n}$, and $\pm 3$ for the standardised statistic Z. Hence, one has the following table:

Table 7 Warning Zone Probabilities

Zone       Probability of a point falling in the zone
A          $2[\Phi(3) - \Phi(2)] = 2[0.99865 - 0.97725] = 0.04280$
B          $2[\Phi(2) - \Phi(1)] = 2[0.97725 - 0.84134] = 0.27182$
C          $2[\Phi(1) - \Phi(0)] = 2[0.84134 - 0.50000] = 0.68268$
Beyond A   $2\Phi(-3) = 0.00270$
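
The zone probabilities of Table 7 can be reproduced with a few lines of Python. This sketch (hypothetical helper names) evaluates $\Phi$ with `math.erf` and confirms that the four probabilities sum to one.

```python
from math import erf, sqrt


def phi(z: float) -> float:
    """Cumulative standard normal distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def zone_probabilities() -> dict:
    """In-control probabilities of a standardised point falling in each zone (both sides)."""
    return {
        "A": 2 * (phi(3) - phi(2)),
        "B": 2 * (phi(2) - phi(1)),
        "C": 2 * (phi(1) - phi(0)),
        "beyond A": 2 * phi(-3),
    }


probs = zone_probabilities()
for zone, p in probs.items():
    print(f"{zone:9s} {p:.5f}")
print(round(sum(probs.values()), 10))  # 1.0
```

The printed values agree with Table 7 to within rounding in the last digit, since the table uses $\Phi$ values rounded to five decimals.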

The following is a list of tests (the supplementary run rules, also called Western Electric run rules) generally adopted for use:

Test or Rule 1: one point beyond Zone A (the usual signal with action limits).

Test or Rule 2: nine points in a row in Zone C or beyond, on the same side of the central line.

Test or Rule 3: six points in a row steadily increasing or decreasing.

Test or Rule 4: fourteen points in a row alternating up and down.

Test or Rule 5: two out of three points in a row in Zone A or beyond, on the same side of the central line.

Test or Rule 6: four out of five points in a row in Zone B or beyond, on the same side of the central line.

Test or Rule 7: fifteen points in a row in Zone C (above and below the central line).

Test or Rule 8: eight points in a row on both sides of the central line with none in Zone C.
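
Rules such as 1, 2, 5 and 6 are straightforward to check mechanically on standardised values $z = (\bar{X} - \mu)/(\sigma/\sqrt{n})$. The Python sketch below is one possible implementation, not MINITAB's. It assumes that a point exactly on a zone boundary does not count as beyond it, and, as a further assumption, reports a signal at the point that completes the pattern. The function name and example points are hypothetical.

```python
def run_rule_signals(z):
    """Return {rule: [indices]} for Rules 1, 2, 5 and 6 on standardised points z.

    An index is reported when the point at that index completes the pattern.
    """
    z = list(z)
    signals = {1: [], 2: [], 5: [], 6: []}
    for i, zi in enumerate(z):
        # Rule 1: one point beyond Zone A, i.e. |z| > 3
        if abs(zi) > 3:
            signals[1].append(i)
        # Rule 2: nine points in a row on the same side of the central line
        if i >= 8:
            last9 = z[i - 8:i + 1]
            if all(v > 0 for v in last9) or all(v < 0 for v in last9):
                signals[2].append(i)
        # Rule 5: two out of three points in a row beyond 2 sigma, same side
        last3 = z[max(0, i - 2):i + 1]
        if (zi > 2 and sum(v > 2 for v in last3) >= 2) or \
           (zi < -2 and sum(v < -2 for v in last3) >= 2):
            signals[5].append(i)
        # Rule 6: four out of five points in a row beyond 1 sigma, same side
        last5 = z[max(0, i - 4):i + 1]
        if (zi > 1 and sum(v > 1 for v in last5) >= 4) or \
           (zi < -1 and sum(v < -1 for v in last5) >= 4):
            signals[6].append(i)
    return signals


# Hypothetical standardised subgroup means
points = [0.3, -0.4, 2.3, 0.5, 2.6, 1.2, 1.4, 0.2, 1.8, 3.4]
print(run_rule_signals(points))  # {1: [9], 2: [], 5: [4], 6: [6, 8, 9]}
```

Rules 3, 4, 7 and 8 can be added in the same style, each as a check on a sliding window of recent points.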

Sample graphs illustrating the eight rules are shown in Figure 13. The following points on the application of the run rules are noteworthy (see Nelson (1984)).

1. When the process is in statistical control, the probability of a false alarm is less

than five in a thousand for each of the rules.

2. If rules 1 to 4 are routinely applied then the probability of a false alarm is

about one in a hundred.

3. If the first four rules are supplemented by rules 5 and 6 when it is desirable to

have earlier warning, it will increase the probability of a false alarm to about

two in a hundred.

4. Rules 7 and 8 are diagnostic tests for stratification. These rules show when the

observations in a subgroup have been taken from two or more processes. Rule

7 reacts when the observations in the subgroup always come from both

processes. Rule 8 reacts when the subgroups are taken from one process at a

time.

Figure 13 Illustration of Run Tests

One should be careful while using several or all of the above run rules simultaneously.

If k rules with false alarm probability $\alpha_i$ for rule i are used, then the overall false alarm probability will be

$$\alpha = 1 - \prod_{i=1}^{k}(1 - \alpha_i),$$

provided the rules are assumed to be "independent". Assuming that the false alarm probabilities of all eight rules are equal to 0.005 (the maximum), the total probability of a false alarm will be

$$\alpha = 1 - (1 - 0.005)^8 = 0.039.$$

That is, nearly four out of 100 cases could be false alarms. Hence, one should be careful in applying a number of tests simultaneously, particularly tests 7 and 8. The commonly used run rules are 1, 5, 6 and 8. MINITAB can perform one or more of the above tests when the subgroup sizes are equal (see Figure 14).
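
A short Python check of this calculation follows; the function name is hypothetical, and the sketch inherits the independence assumption, which does not strictly hold for run rules.

```python
from math import prod


def overall_false_alarm(alphas) -> float:
    """1 - prod(1 - alpha_i): overall false alarm probability for independent rules."""
    return 1.0 - prod(1.0 - a for a in alphas)


print(round(overall_false_alarm([0.005] * 8), 4))  # 0.0393
print(round(overall_false_alarm([0.0027]), 4))     # Rule 1 alone: 0.0027
```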

Figure 14 MINITAB Run Test Sample Output

Whenever run rules are used, no closed-form expression for the OC function or the Type I error is available. Further, the run rules are not independent; for example, rules 5 and 8 cannot be assumed to be independent. In such a situation, obtaining an overall value of the Type I error as $1 - \prod_{i}(1 - \alpha_i)$ is only approximate and may be avoided. This is one of the reasons why SPC researchers prefer to use the concept of ARL in place of the OC curve or the Type I error. Champ and Woodall (1987) have provided a method of obtaining the exact ARLs for Shewhart charts with run rules for a normally distributed quality characteristic. The procedure is based on a Markov chain formulation and is computationally intensive, and hence is not discussed here. Table 8 gives the ARL values for an $\bar{X}$-chart with certain run rule combinations and for various levels of shift in the process level. This table is based on the method of Champ and Woodall and was produced using a SAS procedure.

Table 8 Average Run Lengths for Various Shift Levels and Run rules

Process level shift   Rule 1              Rules 1 & 5         Rules 1 & 6         Rules 1, 5 & 8
(in $\sigma$ terms)
0 (no shift)          370.352 (369.653)   225.410 (224.226)   166.034 (163.582)   122.036 (118.347)
0.5                   155.205 (154.621)   77.715 (76.501)     46.176 (43.538)     36.171 (32.335)
1.0                   43.889 (43.363)     20.003 (18.824)     12.663 (10.202)     11.725 (8.491)
2.0                   6.302 (5.778)       3.646 (2.633)       3.680 (1.923)       3.502 (2.199)

(Standard deviations of run lengths are in brackets.)
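
The Markov chain method of Champ and Woodall is not reproduced here. As a swapped-in and much cruder alternative, ARLs like those in Table 8 can be approximated by Monte Carlo simulation. The Python sketch below simulates standardised chart points $z \sim N(\delta, 1)$ for a shift $\delta$ measured in standard deviations of the plotted statistic, and applies Rule 1 alone or Rules 1 and 5. The function names, number of runs and seeds are arbitrary choices; simulated ARLs carry sampling error, so they only approximately match the table.

```python
import random
from statistics import mean


def run_length(shift: float, use_rule5: bool, rng: random.Random) -> int:
    """Number of points plotted until the first signal."""
    recent = []  # the last (up to) three standardised points
    t = 0
    while True:
        t += 1
        z = rng.gauss(shift, 1.0)
        # Rule 1: one point beyond 3 sigma
        if abs(z) > 3.0:
            return t
        if use_rule5:
            recent.append(z)
            if len(recent) > 3:
                recent.pop(0)
            # Rule 5: two of the last three points beyond 2 sigma on the same side
            if (z > 2.0 and sum(v > 2.0 for v in recent) >= 2) or \
               (z < -2.0 and sum(v < -2.0 for v in recent) >= 2):
                return t


def estimate_arl(shift: float, use_rule5: bool = False,
                 runs: int = 1000, seed: int = 1) -> float:
    """Monte Carlo estimate of the ARL (average of simulated run lengths)."""
    rng = random.Random(seed)
    return float(mean(run_length(shift, use_rule5, rng) for _ in range(runs)))


if __name__ == "__main__":
    for shift in (0.0, 0.5, 1.0, 2.0):
        print(shift,
              round(estimate_arl(shift, use_rule5=False), 1),
              round(estimate_arl(shift, use_rule5=True), 1))
```

Increasing `runs` narrows the sampling error (roughly the run-length standard deviation divided by the square root of the number of runs), at the cost of longer computation, especially for the in-control case.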

Note that the in-control ARL (corresponding to a shift level of zero) decreases when run rules are applied, i.e., false alarms become more frequent. The gain is a reduction in the ARL at a shifted process level, which is the main purpose of the run rules. In practice, one may perform a sensitivity analysis, that is, vary the process level and examine what types of signals (false negatives or false positives) are obtained from the chart.

For the successful application of supplementary run rules, at least a symmetric distribution of the control statistic is necessary.

Zone Control Chart

Jaehn (1991) introduced the zone control chart with the objective of signalling at roughly the same time as a Shewhart chart with the commonly used run rules. A zone control chart has eight zones, four on each side of the central line, with boundaries at the central line and at the one, two and three sigma limits (see Figure 15). Scores are assigned to each zone in the following manner:

Zone                                   Score
Between central line and one sigma     0
Between one and two sigma              2
Between two and three sigma            4
Beyond three sigma                     8

The score for points between the central line and one sigma can also be fixed at one instead of zero. For the first observation of the control statistic, the score is shown in a circle drawn in the appropriate zone. For subsequent observations, the circled scores are cumulated; that is, the score for the latest observation is added to the previous cumulative score. This cumulation continues only while successive observations fall on the same side of the central line. If a point falls on the other side of the central line, the cumulation stops and the score restarts from that point's own zone score. This scoring method is simple to apply, and no pattern checking or counting of points is needed to apply the supplementary run rules. Once the cumulative score reaches or exceeds 8, the zone control chart signals action for an assignable cause.
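
The scoring procedure can be written as a short loop. The Python sketch below works on standardised points z and assumes the scores 0 (or optionally 1), 2, 4 and 8. It also assumes that a point exactly on a boundary takes the higher score, that a point exactly on the central line counts as the upper side, and that cumulation simply continues after a signal (in practice the chart would be restarted after the assignable cause is investigated). These conventions and the function names are assumptions for illustration.

```python
def zone_score(z: float, inner_score: int = 0) -> int:
    """Score for a single standardised point according to its zone."""
    a = abs(z)
    if a < 1.0:
        return inner_score  # between the central line and one sigma (0 or 1)
    if a < 2.0:
        return 2            # between one and two sigma
    if a < 3.0:
        return 4            # between two and three sigma
    return 8                # beyond three sigma


def zone_chart(z_values, inner_score: int = 0, threshold: int = 8):
    """Cumulative zone scores and the indices at which the chart signals."""
    cumulative, side = 0, 0
    scores, signals = [], []
    for i, z in enumerate(z_values):
        current_side = 1 if z >= 0 else -1
        score = zone_score(z, inner_score)
        if current_side == side:
            cumulative += score  # same side: keep cumulating
        else:
            cumulative = score   # opposite side: restart from this point's score
        side = current_side
        scores.append(cumulative)
        if cumulative >= threshold:
            signals.append(i)
    return scores, signals


# Hypothetical standardised points
print(zone_chart([0.4, 1.3, -0.7, 1.5, 2.2, 0.6, 1.1]))  # ([0, 2, 0, 2, 6, 6, 8], [6])
```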

It may be seen that the scoring method roughly corresponds to the application of run

rules, viz:

1. A point falling outside the three sigma limits,

2. Two of three consecutive points falling outside the two sigma limits on the

same side of the central line,

3. Four out of five consecutive points falling outside the one sigma limits on the

same side of the central line, and

4. Eight consecutive points falling on the same side of the central line.

This does not mean that the zone control chart and the Shewhart chart with the usual tests will give signals at the same time. For instance, the score is reset if a point falls on the opposite side of the central line, whereas the usual run rules 5 and 8 discussed earlier do not reset. The zone control chart basically combines the scores of points in different zones and does not necessarily reproduce the run rules.

Figure 15 Zone Control Chart

Davis et al. (1994) have shown that zone control charts with a score of zero instead of one (for points between the central line and one sigma) outperform Shewhart charts whenever the shift to be detected is of the order of two standard deviations or less. Also, because of its simplicity, the zone control chart is a viable alternative to Shewhart charts. However, it does not compare favourably with more complicated charts such as CUSUM and EWMA (to be discussed later).

Fast initial response (FIR) is a technique used to provide a rapid signal in the case of an initial out-of-control situation whenever decisions are based on a cumulative type of control statistic, such as the score considered in the zone control chart. That is, the FIR feature gives a "head start" by initialising the score at a state from which a signal is very likely after a few observations if the process is not in control. It is also possible to introduce the FIR feature to the zone control chart; the FIR feature will be further discussed in the section on CUSUM charting.

It is also possible to adopt a different weighting pattern for initial process conditions

and yet another weighting pattern to detect shifts of smaller order once the process

condition is stable. This practice is currently empirical and more research is required

in the area.

Control Chart for Individual Measurements (X-chart or I-chart)

The I-chart for individual observations monitors the process level in terms of a single measurement per sample. This chart is equivalent to using an $\bar{X}$-chart with subgroup size equal to one. Control charts for individual measurements are used in any of the following situations:

Technology permits 100% inspection of individual units.

The production rate is slow, so it is inconvenient to wait for samples to accumulate into subgroups.

Chemical processes and continuous process conditions, such as paper production.

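For a series of measurements $X_1, X_2, X_3, \dots, X_k$, we compute

$$\bar{X} = \frac{1}{k}\sum_{i=1}^{k} X_i.$$

That is, the process mean is estimated using the sample mean. The sample standard deviation S, given by

$$S = \sqrt{\frac{1}{k-1}\sum_{i=1}^{k}\left(X_i - \bar{X}\right)^2},$$

can be used to estimate the true sigma through the unbiasing constant $c_4$ ($c_4$ corresponding to sample size k). That is, we estimate $\sigma$ as $S/c_4$ and set the control limits at

$$\bar{X} \pm 3S/c_4$$

with the central line at $\bar{X}$. It is also common to estimate $\sigma$ using the average moving range, given by

$$\overline{MR} = \frac{1}{k-1}\sum_{i=2}^{k}\left|X_i - X_{i-1}\right|.$$

That is, $\sigma$ is estimated as $\hat{\sigma} = \overline{MR}/d_2$ ($d_2$ for sample size 2). The control limits are then set at

$$\bar{X} \pm 3\overline{MR}/d_2$$

with the central line at $\bar{X}$.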

Example: Consider the series of measurements of a quality characteristic (one-at-a-time data) given in Table 9.

Table 9 Data for I-Chart

4.1 5.9 5.0 4.4 5.4 5.0 4.1 5.0 6.1 4.5

4.2 3.5 3.0 4.3 5.6 6.6 7.3 5.0 3.9 4.7

6.1 5.3 2.5 6.2 5.4 5.7 5.2 3.1 4.5 4.9

5.2 4.1 5.2 6.1 5.3 7.3 5.6 5.0 4.9 5.0

6.4 5.3 4.3 6.3 3.9 4.7 7.1 4.5 4.1 5.4

(Read from left to right)

It is easy to compute $\bar{X} = 5.0440$ and $S = 1.0475$, giving $\hat{\sigma} = S/c_4 = 1.0475/0.9949 = 1.0528$ ($c_4$ for a sample size of 50). The control limits are therefore $5.044 \pm 3(1.0528)$, that is, LCL = 1.8856 and UCL = 8.2024. The following control chart is then drawn for the data in Table 9.

Figure 15 I-Chart Based on the Standard Deviation Estimate of Sigma

It is easy to compute the average of the moving ranges (absolute values) as

$$\overline{MR} = (1.8 + 0.9 + 0.6 + 1.0 + \dots + 2.6 + 0.4 + 1.3)/49 = 1.1735$$

and hence $\hat{\sigma} = \overline{MR}/d_2 = 1.1735/1.128 = 1.0404$. The control limits of the I-chart (based on the moving range estimate of sigma) are $5.044 \pm 3(1.0404)$, i.e., LCL = 1.9228 and UCL = 8.1652. The individual values are then shown on the I-chart in Figure 16.

Figures 15 and 16 both suggest that the quality characteristic under consideration is in statistical control: all 50 values, which range from 2.5 to 7.3, lie within both sets of control limits.
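
The calculations in this example can be reproduced with the Python sketch below. It assumes the exact gamma-function form of $c_4$ (which gives 0.9949 for a sample of 50) and $d_2 = 1.128$; the function names are hypothetical. Both sets of limits agree with the values above to within rounding.

```python
from math import exp, lgamma, sqrt
from statistics import mean, stdev

# Table 9 data, read from left to right
DATA = [
    4.1, 5.9, 5.0, 4.4, 5.4, 5.0, 4.1, 5.0, 6.1, 4.5,
    4.2, 3.5, 3.0, 4.3, 5.6, 6.6, 7.3, 5.0, 3.9, 4.7,
    6.1, 5.3, 2.5, 6.2, 5.4, 5.7, 5.2, 3.1, 4.5, 4.9,
    5.2, 4.1, 5.2, 6.1, 5.3, 7.3, 5.6, 5.0, 4.9, 5.0,
    6.4, 5.3, 4.3, 6.3, 3.9, 4.7, 7.1, 4.5, 4.1, 5.4,
]
D2_N2 = 1.128  # d2 for moving ranges of two consecutive observations


def c4(n: int) -> float:
    """Unbiasing constant c4 for a sample of size n (exact gamma-function form)."""
    return sqrt(2.0 / (n - 1)) * exp(lgamma(n / 2) - lgamma((n - 1) / 2))


def i_chart_limits(x):
    """Central line and 3-sigma limits of an I-chart using both sigma estimates."""
    x_bar = mean(x)
    sigma_s = stdev(x) / c4(len(x))                         # S / c4
    moving_ranges = [abs(b - a) for a, b in zip(x, x[1:])]
    sigma_mr = mean(moving_ranges) / D2_N2                  # MR-bar / d2
    return {
        "center": x_bar,
        "sd_based": (x_bar - 3 * sigma_s, x_bar + 3 * sigma_s),
        "mr_based": (x_bar - 3 * sigma_mr, x_bar + 3 * sigma_mr),
    }


limits = i_chart_limits(DATA)
print(limits)
for name in ("sd_based", "mr_based"):
    lcl, ucl = limits[name]
    # Indices of points outside the limits (candidates for an assignable cause)
    print(name, [i for i, v in enumerate(DATA) if not lcl <= v <= ucl])
```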

One may also conduct the supplementary run tests (preferably using MINITAB).

Figure 16 MINITAB I-Chart Output

The assumption of normality and independence for individual measurements must be

justified for using the Shewhart chart for individual measurements. Random errors

such as measurement error are likely to be present in individual values. Further, the

moving range values may have correlated errors. These aspects should be noted

before applying the chart for individual measurements.

The normality assumption of the control statistic is more relevant in the case of a Shewhart chart for individual values. Several methods are available for testing the normality assumption, such as regression tests, the chi-square goodness-of-fit test, distance tests and moment tests. MINITAB provides three tests, namely 1) the Anderson-Darling test, 2) the Ryan-Joiner test and 3) the Kolmogorov-Smirnov test. To apply these tests, a value for alpha, the Type I error probability, needs to be chosen (usually 10% or above) and compared with the P-value displayed in the MINITAB normal plot. If the displayed P-value is smaller than the chosen alpha value, one rejects the assumption of normality.

On violation of the normality assumption, transformations need to be tried (log, square root, square, etc.). Box and Cox (1964) provided maximum likelihood methods for choosing normalising transformations. The Box-Cox power transformation is useful for correcting non-normality as well as unstable variation in the process data. MINITAB provides for optimal estimation of the appropriate power $\lambda$ of the transformation

$$Y^{(\lambda)} = \begin{cases} Y^{\lambda}, & \lambda \neq 0 \\ \log_e Y, & \lambda = 0 \end{cases}$$

with common transformations such as the square root being particular cases, as seen below:

Value of $\lambda$   Transformation
2                    $Y^2$
0.5                  $\sqrt{Y}$
0                    $\log_e Y$
-0.5                 $1/\sqrt{Y}$
-1                   $1/Y$
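
A Box-Cox power can also be estimated without MINITAB. The Python sketch below is an illustration under stated assumptions. It applies the tabulated transformation $Y^{\lambda}$ (or $\log_e Y$) to positive data, and estimates $\lambda$ by a simple grid search over [-2, 2] that maximises the usual Box-Cox profile log-likelihood. For the likelihood it uses the scaled form $(Y^{\lambda} - 1)/\lambda$, which is a linear function of $Y^{\lambda}$ (and so equally normal) but makes the likelihoods comparable across values of $\lambda$. The grid, function names and test data are assumptions; MINITAB's own estimation procedure may differ.

```python
from math import exp, log
from statistics import NormalDist, pvariance


def power_transform(y: float, lam: float) -> float:
    """Transformation from the table: Y**lam for lam != 0, log_e Y for lam == 0."""
    if y <= 0:
        raise ValueError("Box-Cox transformations require positive data")
    return log(y) if lam == 0 else y ** lam


def boxcox_loglik(ys, lam: float) -> float:
    """Profile log-likelihood of lambda (up to a constant) for positive data ys."""
    n = len(ys)
    scaled = [log(y) if lam == 0 else (y ** lam - 1.0) / lam for y in ys]
    sigma2 = pvariance(scaled)  # maximum likelihood variance estimate
    return -0.5 * n * log(sigma2) + (lam - 1.0) * sum(log(y) for y in ys)


def estimate_lambda(ys) -> float:
    """Grid search for the lambda in [-2, 2] (step 0.01) maximising the log-likelihood."""
    if any(y <= 0 for y in ys):
        raise ValueError("Box-Cox transformations require positive data")
    grid = [i / 100 for i in range(-200, 201)]
    return max(grid, key=lambda lam: boxcox_loglik(ys, lam))


# Hypothetical lognormal-looking data: exponentials of normal quantiles
n = 50
ys = [exp(NormalDist().inv_cdf((i - 0.5) / n)) for i in range(1, n + 1)]
lam_hat = estimate_lambda(ys)
print(lam_hat)  # close to 0, pointing to a log transformation
```

In practice the estimated $\lambda$ is usually rounded to a convenient value from the table above (for example 0.5 or 0) so that the transformed scale remains interpretable.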