STATISTIC 378 – APPLIED REGRESSION ANALYSIS

advertisement

STATISTICS 378 – APPLIED REGRESSION ANALYSIS

Serious Crime Analysis in Metropolitan U.S. Regions (1976-1977)

Terence Fung

Kamal Harris

Colin Sproat

Prit Phul

S T A T I S T I C A L

A N A L Y S I S

Table of Contents

Introduction ....................................................................................................... 2

Data Explanation ............................................................................. 2

Conclusion ........................................................................................................ 3

Best Model ...................................................................................... 3

Interpretation of the model .......................................................................................................... 3

Confidence Interval ..................................................................................................................... 3

Correlation ................................................................................................................................... 4

Importance of Variables .............................................................................................................. 4

Shortcomings of Model ................................................................................................................ 4

Methodology ..................................................................................................... 5

Data Splitting ................................................................................... 5

Checking assumptions .................................................................... 5

Independence .............................................................................................................................. 5

Normality ..................................................................................................................................... 6

Homoscedasticity ........................................................................................................................ 7

Fitting the best model ...................................................................... 7

Collinearity ................................................................................................................................... 7

Selection Method ......................................................................................................................... 9

Interaction Terms......................................................................................................................... 9

Final Model and influence ........................................................................................................... 9

Fitting model to prediction data ..................................................... 10

Appendix......................................................................................................... 12

Correlation .................................................................................... 12

Interpretation of best model .......................................................... 12

Collinearity .................................................................................... 13

Selection Method .......................................................................... 14

Influential Points – Training Group ................................................ 15

Fitted Model - Holdout Group ........................................................ 16

Collinearity – Holdout Group ......................................................... 16

Influential Points – Holdout Group................................................. 17

1

Introduction

Information regarding 141 Standard Metropolitan Statistical Areas (SMSAs) in the United States is provided

in Neter et al. (1996)1. The study contains 11 variables that pertain to that specific metropolitan area between

the years of 1976-1977. We would like to find the relationship and importance of our regressors through the

least-square regression method. By finding this relationship, we want to see how well our model explains

serious crimes in any given metropolitan area. The goal of the study is to evaluate what is the best model that

can estimate the crime rate for a metropolitan area.

Data Explanation

We defined serious crime as our dependent

variable. Unlike many of the other variables,

serious crime is a random variable that has

potential to be predicted by the other

variables through the least-square method.

X2, X3, X4, X5, X6, X7, X8 X9, X10 which

correspond with their variable number from

the data set given. Variable X12 is a logic

term. To deal with this, we introduced two

extra variables: X13 and X14. This way we can

use binary term to indicate which region it is

located and by using this method our model

will not be influenced by these values in an

unnecessary way. We use binary terms to

indicate the regions as follows: if the area is

in NE, then (X12, X13, X14) = (1, 0, 0); if the

area is in NC, then (X12, X13, X14) = (0, 1, 0);

if the area is in S, then (X12, X13, X14) = (0, 0,

1); if the area is in W, then (X12, X13, X14) =

(0, 0, 0).

1

Neter, Kutner, Nachtsheim, and Wasserman (1996). “Applied Linear Regression Models”, IRWIN, pp.1367-1368.

2

Conclusion

Best Model

Interpretation of the model

From the residual analysis of our data, the best fit model for these 141 observations is:

Yp = -2.70029 + 1123.22515tX2 – 26.94736tX6 + 0.1153c8 – 229.5947c10 -0.44122X12 – 0.18717X13 + ε.

It should be noted that this model simply predicts number of crimes in an area but will not provide an exact

value. An increase of 1 unit in the inverse of the land area squared will increase our yp by 11223.22515 with

all other variables held constant. An increase of 1 unit in the inverse of the active physicians in the area will

decrease our yp by 26.94736 with all other variables held constant. An increase of 1 unit in the centered

percentage of high school graduates in the area will increase our yp by 0.1153 with all other variables held

constant. An increase of 1 unit in the centered inverse of the total personal income in the area will decrease

our yp by 229.5947 with all other variables held constant. If the observation occurs within NE region, the yp

is expected to decrease by 0.44122, and if the observation occurs within NC region, the yp is expected to

decrease by 0.18717.

The value of R2 for the complete dataset is 0.7676. 76.76% of the variability in y can be explained by the

regression model. If we add enough variables to our model R2 will increase, meaning that R2 is biased

towards the number of variables. A better indicator would be R2adj , which is unbiased towards the number of

variables in the model. R2adj for the complete dataset is 0.6746. 67.46% of the variability in y can be explained

by the regression model. R2adj is a value that can be used to safeguard us from over-fitting the model, so if we

have added any unnecessary terms to the model, R2adj will decrease significantly, which is not the case.

Mean squared error (MSE) is 0.04999, which is the lowest amongst all of the models available to us via the all

possible regression method. MSE is an unbiased estimator of population variance in normal distributed data.

The smaller we can make MSE, the smaller the variance in the model will be, which is ideal.

Mallow’s Cp for our data = 7. When values fall close to p, regression equations show minimum bias. It is

preferable to have a small Cp statistic but it must be large enough to be a good estimator. Cp always equals p

for the full model, hence this statistic was of no use to us to find our best model.

Confidence Interval

If yp = ln((y/(X3*1000))/(1-(y/(X3*1000)))) then y = X3*1000exp{yp}/(1+exp{yp}). To find the 95%

confidence interval for the dataset, we found the 95% confidence interval for the means of each observation.

Then we took the average of the lower and upper bounds. Then, by using the mean of the X3 values in place

of X3, we converted the confidence interval into a 95% confidence interval for the number of serious crimes

in the area. We found that the 95% CI for yp was (-3.6664, -2.2564). Considering multiple areas at a time

and total number of serious crime, we found the 95% CI to be (45379.02, 56762.94).

3

Correlation

A correlation matrix was generated to see which variables are correlated with one another (See Appendix –

Correlation).The matrix shows that only c10 and tX6 (Total personal income versus Number of active

physicians) are strongly positively correlated. This relationship is valid because physicians are generally earning

higher personal income. As a result, the more physicians in the area, it is the expected that the total personal

income of the area will also increase. We did not find any strongly correlated variables.

Importance of Variables

Through the forward and stepwise selection methods, it was found that X12 – NE region was the most

important variable in indicating the serious crimes. The second most important variable is tX6 – Number of

active physicians in the area. Base on our model, if the area is located in the NW region, the predicted

number of serious crimes is predicted to be lower than another region. This relationship also holds with the

number of physicians in the area.

Shortcomings of Model

Some of our observations have been determined to be influential points, therefore our model must be used

with caution. This is because influential points exert great leverage on the model such that our model is

explained by the 5 or 6 observations as opposed to the entire dataset. We found that the influential points

had a large impact on β1, the coefficient corresponding to the land area. The difference between β1 for the in

the holdout group with and without influential point in the dataset was 13178. Normally, robust regression

would be performed in order to correct for influential points. Robust regression will decrease the effect of

influential points on the model and is extremely resilient to outliers unlike normal least-square method.

4

Methodology

Data Splitting

Our data set was split into two groups: training and holdout. This was achieved by using the DUPLEX

algorithm. This method begins with defining zjj = [xij - / (Sjj)0.5] where Sjj = ∑(xij – )2 . Then, using the

Cholesky method we obtained ZTZ = TTT where T is an upper triangular matrix. We then define W = ZT-1.

This gives us an orthogonal matrix with unit variance. The W-Matrix was calculated using the R-statistical

package and then entered into Microsoft Excel where we calculated the Euclidean distances of all data points.

There were a total of 141C2 = 9,870 distances calculated. Next we found the maximum distance in our data

and found it to be (1,27). We put observations 1 and 27 into the training group. We then deleted the point

and found the next largest distance as (4,7) and we put observations 4 and 7 into the holdout group. Now the

next point that was the farthest away from the observation already in our training group will be added to the

training group. This was done by adding the distances of all the prior training group observations to a specific

observation. This variable is taken out of the distance matrix and then the same method is done for the

holdout group. Since our dataset is relatively small, we only placed 25 values in our holdout group and the

remaining 116 data points were placed in our training group.

The observations entered into our holdout group (in ascending order) were: 2, 3, 4, 7, 10, 12, 15, 17, 19, 24,

42, 48, 59, 68, 73, 78, 92, 107, 112, 113, 124, 133, 137, 138, and 139.

The remaining observations were placed into our training group.

To check the validity of the split we calculated

with 1/p = 1/13.

The generated determinant using the R-Statistical package generates 142.1728 and 127.9630 for training and

holdout respectively. Applying it to the equation, we found a value of 2.983374. For even data splitting this

value should be close to 1, but because we only put 25 observations into our holdout group. The value under

3 is acceptable.

Checking assumptions

Independence

In order to perform least-square linear regression we must account for the three assumptions: independence

normality, and homoscedasticity. Each variable from the observations have no effect on the variables of the

other observations. Assuming that the standard areas were selected randomly, we can say that the

observations are independent of each other. In addition, the least-square errors of the model should be

uncorrelated and normally distributed. When the values are uncorrelated this implies that covariance is zero

and under normal distribution this implies independence.

5

Normality

The split dataset was entered into SAS. We must

check our dataset for normality and constant

variance. Without these assumptions we cannot

create a valid model.

It is clear from this graph that normality assumption

does not hold because it has a light-tail distribution.

To fix this, we will have to perform a

transformation on Y.

The transformation we used was:

ln((y/(X3*1000))/(1-(y/(X3*1000)))).

yp =

The variable X3 – Total Population, was given in terms of thousand, so we multiplied it by 1,000. Y is the

number of serious crimes in the area. A more plausible description would be the serious crimes in the area in

comparison to the population of the area. Serious crime is a random variable that follows Poisson

distribution, which is a discrete distribution from [0, ∞). We converted this into a normal distribution which

is continuous from (-∞, ∞).

Y* = y/(X3 * 1000) is a proportion, hence the range of the dataset is [0,1]. If we divide Y* by 1-Y*, we get:

Y*/ (1-Y*) which has a range of [0, ∞). Now if we take the natural logarithm, we get yp = ln(Y*/(1-Y*)).

Since the ln(0) = -∞, the new range of the yp is (-∞, ∞) which is the same as a normal distribution.

Once we did our transform of y, we again check for normality of the model:

It is clear from this graph that normality assumption

holds because it follows a linear pattern. Now we

check for homoscedasticity.

6

Homoscedasticity

Homoscedasticity does not hold for this dataset. We

want observations in this graph to hold along a

horizontal band. For this to occur, transformation of

our variables is required.

Looking at the residuals versus regressor graphs, the

following transforms were made:

tX2 = 1/X22

tX3 = 1/X3

tX6 = 1/X6

tX7 = 1/X7

tX9 = 1/X9

tX10 = 1/X10

where tX* is the new transform variable.

This graph shows that homoscedasticity holds for the

model after the transformations were made. This also

implies that the expected value of the least-square

error is zero, which is also an assumption that must

hold.

Fitting the best model

Now that our assumptions are satisfied, we may now begin to fit our best model.

Collinearity

Before selecting our best model, we must check for collinearity between the variables (See Appendix –

Collinearity-Training Group). We found that tX3, tX6, X8, and tX10 had collinearity problems. To fix this, we

centered the variables by taking the mean of the variables subtracting from the variable in the observations

(c* = Xi* - mean(X*)).

c3 = tX3 - 0.0021298;

c8 = X8 - 54.35;

c9 = tX9 - 0.0047905;

c10 = tX10 - 0.000347226;

After centering our data, we removed the collinearity problems and now we may try to find best model.

7

8

Selection Method

We performed forward, backward, and stepwise selection method to remove variables not deemed to be

important in finding yp (See Appendix – Selection Method).

Forward Method: tX2, tX6, c8, X12, X13.

Backward Method: tX2, c8, c10, X12, X13.

Stepwise Method: tX2, tX6, c8, X12, X13.

After reducing the model, we performed the all possible regression method using R2 , Cp, R2Adj and MSE as

criterion on tX2, tX6, c8, c10, X12, X13 select the best model. R20 is the R2 adequate subset. Any R2 value under

R20 is considered unusable. R20 = 1 – (1- R2k+1)(1+ da,n,k) where da,n,k = kFa,k,n-k-1/(n-k-1). At the α= 0.05

significance level, da,n,k ~ 0.121682. R20 = 1 – (1- 0.5074)(1+ 0.121682) = 0.447459. Any R2 value below this is

deemed unacceptable. MSE should be such that it is at the minimum, and it is not a value that is increasing.

Mallow’s Cp should be a value that is as close to our p as possible because Cp helps out determine how many

variables we use. We found that the model could not be narrowed down further with R2 = 0.5074, Cp = 7,

R2Adj = 0.4803, and MSE = 0.05081. (See Appendix – Selection Method)

Interaction Terms

We then attempted to see if interaction terms could be added to our model in order to give us a better model

that can give us better estimation. We used our judgment to include what we feel to be logical interaction

terms. We felt that ‘Number of physicians’ with ‘Total personal income’ and ‘Percent of high school

graduates’ with ‘Total personal income’ could possibly interact with one anther. The all regression method

was performed on the models with interaction terms. We found for the 8 variable model, R2 = 0.5516, Cp =

9, R2 Adj = 0.5181, MSE = 0.04711. For the 7 variable model with x610 added, R2 = 0.5282, Cp = 8, R2 Adj =

0.4983, MSE = 0.04905. And for another 7 variable model with x810 added, R2 = 0.5259, Cp = 8, R2 Adj =

0.4952, MSE = 0.04935. We feel that with all three possible models, there are no significant differences

between the new models and our original best model and therefore no interaction terms were added to the

model.

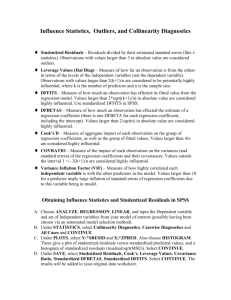

Final Model and influence

We found that our best model from the training group was:

yp = -2.69327+1106.12535tX2 -24.47901tX6 + 0.01299c8 – 332.16264c10 – 0.45876X12 – 0.20684X13 + ε.

This model must now be tested for influential points in the training group. If influential points are in our

model, our model must be used with caution because only a few other observations have an affect on our

model. We will use six tests to check for possible influential points: R-Student, hii, Cook’s D, DFFITS,

Covariance Ratio, and DFBETAS (See Appendix – Influential Points-Training Group).

R-Student detects possible outliers, these occur when R-student > 3 with R-student > 2 indicating possible

outliers. Variables targeted under R-student criteria were: 1, 42, 49, 66, 85, 88, 106, 116.

Cooks D “measures square distance between the least-square estimates based on all points.” Points have

considerable influence on the least-square estimate if cooks D > 1. The variable targeted under Cooks D was:

49.

9

hii is the diagonal element of the Hat matrix, these values range from 0 < hii < 1. The values on the diagonal

measure the distance of the i-th observation from the center of the x – space. Variables far away from the

center will have significant leverage over the dataset. The criteria of hii is that the values above 2p/n =

2(7)/116 = 0.1207 have leverage over the dataset. The values targeted under hii: 49, 116.

Covariance Ratio expresses the role of the i-th observation on the precision of the estimate. A covariance

ratio outside the range of 1 ± 3p/n. = 1 ± 3(7)/116 = [0.8190,1.1810] would indicate that the i-th data point

has large influence. Values targeted by the covariance ratio were: 1, 49, 66, 85, 106.

DFFITS is the value of R-student multiplied by the leverage of the ith observation, if the data point is an

outlier, we will have a large R-student value hence a large value for DFFITS. |DFFITS| > |2√p/n| =

|2√7/116|= 0.4956 is considered a possible influential point. Values targeted by DFFITS were: 1, 42, 49, 66,

85, 106, 107, 112, 116.

DFBETAS is the value that indicates how much the regression coefficient changes in standard deviation

units. |DFBETAS|> |2/√ n|=|2/√ 116|=0.1857 are considered possible influential points. Values

targeted under DFBETAS were: 1, 42, 49, 66, 85, 106, 116.

Using the criteria, we felt that observations 1, 49, 66, 106, and 116 were potential influential points.

To see if they have influence on our model we remove them and refitted our model to see if any significant

change occurred. From the dataset and see how the model changes. A large change in this model indicates

these values are influential points.

To test whether or not the values are outliers or influential points, we removed them from our model and

then fit the new least-square line to see if the influential points made a significant change in out best fit

model. After removing the influential points, our model changed to

yp = -2.64213 – 4110.19878tX2 – 57.57935tX6 + 0.01022c8 – 129.71397c10 – 0.47682x12 – 0.18943X13 + ε.

From looking at the change in β1, we can see a change in our model when influential points are removed

from the original training group dataset. Our model was built with influential point hence must be interpret

with caution.

Fitting model to prediction data

Now that we have found our best model using the estimation data, we must now check the validity of the

model by fitting our model through the prediction dataset. For the center transform of X8 and X10 we use the

means of X8 and X10 columns from the prediction dataset. We then checked the model for collinearity and we

found CN for our prediction data was 11.19037 which is less then the required value of 30 to be considered

to have collinearity. Collinearity in the prediction set is not a factor.

To see how well our model can be used to predict the holdout group data we checked the R2 and R2Adj. If the

absolute difference between R2 estimation and R2 prediction is greater then 0.1 this model is no good in

predicting serious crime in the holdout group. We found that the difference for R2 was 0.6544 – 0.5646 =

0.0898 and for R2Adj, the difference was 0.5393 – 0.5392 = 0.0001. So our model seems good for predicting

serious crime in the holdout group.

10

The best fit model in the holdout group is

yp = - 2.80488 + 23394tx2 + 26.66752tx6 + 0.00974c8 – 340.22446c10 - 0.5151x12 – 0.10199x13 + ε.

Next, the holdout group must be tested for influence points (See Appendix – Influential Points-Holdout Group).

From these criterions, we feel that observations 7, 15, and 22 could be potential influential points in the

holdout group. We decided we would remove observations 7, 15, 22 to see how the model was affected.

After removing the observations, we found the new model for the holdout group is

yp = -3.01936 + 36572tx2 + 179.4668tx6 + 0.00688c8 – 1116.6124c10 – 0.6844x12 – 0.19492x13+ ε.

Again, we found significant change in the coefficients of the two models and hence we feel that observations

7, 15, 22 are influential points and this model must be used with caution.

11

Appendix

Correlation

Pearson Correlation Coefficients, N = 141

Prob > |r| under H0: Rho=0

yp

tx2

tx6

c8

c10

x12

x13

yp

1.00000

-0.01298

0.8785

-0.30794

0.0002

0.38814

<.0001

-0.25578

0.0022

-0.49832

<.0001

-0.06806

0.4226

tx2

-0.01298

0.8785

1.00000

-0.00629

0.9409

-0.20201

0.0163

-0.02943

0.7290

0.19667

0.0194

-0.05092

0.5488

tx6

-0.30794

0.0002

-0.00629

0.9409

1.00000

-0.23114

0.0058

0.89171

<.0001

-0.04200

0.6209

0.02350

0.7821

c8

0.38814

<.0001

-0.20201

0.0163

-0.23114

0.0058

1.00000

-0.18551

0.0276

-0.16998

0.0439

0.12609

0.1363

c10

-0.25578

0.0022

-0.02943

0.7290

0.89171

<.0001

-0.18551

0.0276

1.00000

-0.08804

0.2992

-0.05941

0.4841

x12

-0.49832

<.0001

0.19667

0.0194

-0.04200

0.6209

-0.16998

0.0439

-0.08804

0.2992

1.00000

-0.27965

0.0008

x13

-0.06806

0.4226

-0.05092

0.5488

0.02350

0.7821

0.12609

0.1363

-0.05941

0.4841

-0.27965

0.0008

1.00000

Interpretation of best model

Root MSE

0.16977

R-Square

0.7676

Dependent Mean

-2.87257

Adj R-Sq

0.6746

Coeff Var

-5.91009

12

Analysis of Variance

Source

DF

Sum of

Squares

Mean

Square

F Value

Pr > F

Model

6

6.45218

1.07536

21.51

<.0001

Error

134

6.69894

0.04999

Corrected Total

140

13.15113

Collinearity

Before Centering:

Variable

DF

tx3

1

x8

Parameter

Estimate

Standard

Error

t Value

Pr > |t|

Variance

Inflation

-66.45402

81.26821

-0.82

0.4150

27.88359

1

0.00797

0.00350

2.28

0.0245

2.21071

tx9

1

91.29262

46.95957

1.94

0.0541

54.32695

tx10

1

-1039.41076

495.82432

-2.10

0.0380

34.83511

Number

Eigenvalue

Condition

Index

11

0.00717

34.75088

12

0.00402

46.37989

13

0.00291

54.56172

13

After Centering:

Number

Eigenvalue

Condition

Index

11

0.02331

15.04390

12

0.01358

19.70937

13

0.01068

22.22573

Selection Method

Summary of Stepwise Selection

Step

Variable

Entered

Variable

Removed

Number

Vars In

Partial

RSquare

Model

RSquare

C(p)

F

Value

Pr > F

1

x12

1

0.2367

0.2367

58.1027

35.36

<.0001

2

tx6

2

0.1221

0.3588

32.8997

21.51

<.0001

3

c8

3

0.0558

0.4146

22.4531

10.69

0.0014

4

x13

4

0.0617

0.4764

10.6995

13.08

0.0005

5

tx2

5

0.0212

0.4976

7.9682

4.65

0.0333

Summary of Backward Selection

Variables

Kept

Parameter

Estimate

Standard

Error

Type II SS

F Value

Pr > F

Intercept

-2.72834

0.02840

466.28614

9227.91

<.0001

tx2

1104.33799

522.57050

0.22566

4.47

0.0368

c8

0.01325

0.00296

1.01362

20.06

<.0001

c10

-457.33004

108.03921

0.90541

17.92

<.0001

x12

-0.46273

0.05805

3.21106

63.55

<.0001

14

Summary of Backward Selection

Variables

Kept

x13

Parameter

Estimate

Standard

Error

Type II SS

F Value

Pr > F

-0.21588

0.04929

0.96937

19.18

<.0001

Summary of Forward Selection

Step

Variable

Entered

Number

Vars In

Partial

R-Square

Model

R-Square

C(p)

F

Value

Pr > F

1

x12

1

0.2367

0.2367

58.1027

35.36

<.0001

2

tx6

2

0.1221

0.3588

32.8997

21.51

<.0001

3

c8

3

0.0558

0.4146

22.4531

10.69

0.0014

4

x13

4

0.0617

0.4764

10.6995

13.08

0.0005

5

tx2

5

0.0212

0.4976

7.9682

4.65

0.0333

Influential Points – Training Group

Obs

Residual

RStudent

Hat Diag H

1

0.5925

2.8248

0.0785

0.7028

0.8246

42

-0.4751

-2.2091

0.0573

0.8304

-0.5447

48

-0.0212

-2.2191

0.9981

418.7112

-51.3459

66

-0.6408

-3.0334

0.0554

0.6371

-0.7347

85

0.4890

2.2816

0.0609

0.8170

0.5812

88

0.4497

2.0467

0.0221

0.8357

0.3079

106

-0.6674

-3.2211

0.0822

0.6115

-0.9642

112

0.3349

1.5723

0.0948

1.0057

0.5088

116

0.4239

2.3777

0.3476

1.1439

1.7354

15

Cov Ratio

DFFITS

Fitted Model - Holdout Group

Root MSE

0.19139

R-Square

0.6544

Dependent Mean

-2.85990

Adj R-Sq

0.5393

Coeff Var

-6.69223

Analysis of Variance

Source

DF

Sum of

Squares

Mean

Square

F Value

Pr > F

Model

6

1.24871

0.20812

5.68

0.0018

Error

18

0.65935

0.03663

Corrected Total

24

1.90806

Collinearity – Holdout Group

Number

7

Eigenvalue

Condition

Index

0.01977

11.19037

16

Influential Points – Holdout Group

Obs

Residual

RStudent

Hat Diag

H

Cov

Ratio

DFFITS

2

-0.0143

-0.1055

0.5228

3.1124

-0.1104

7

0.3325

2.1801

0.2326

0.3462

1.2003

13

0.0470

0.2697

0.2147

1.8439

0.1410

15

-0.0274

-0.4254

0.8921

12.8400

-1.2232

17

0.3429

2.1051

0.1376

0.3419

0.8410

18

-0.2451

-1.5717

0.2821

0.8040

-0.9851

20

-0.0470

-0.2823

0.2822

2.0116

-0.1770

21

-0.1177

-0.8195

0.4468

2.0564

-0.7365

22

0.3103

1.8470

0.1263

0.4747

0.7023

24

0.1321

1.4046

0.7456

2.7188

2.4043

25

-0.0765

-0.4920

0.3683

2.1395

-0.3757

17