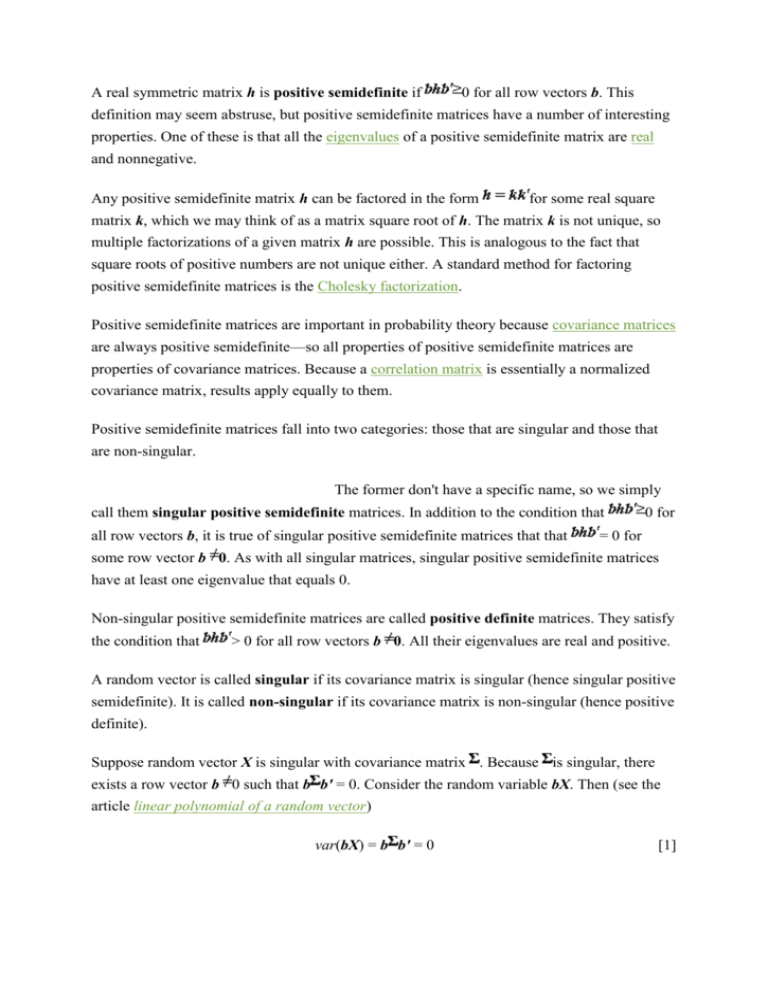

A real symmetric matrix $h$ is positive semidefinite if $bhb' \geq 0$ for all row vectors $b$. This definition may seem abstruse, but positive semidefinite matrices have a number of interesting properties. One of these is that all the eigenvalues of a positive semidefinite matrix are real and nonnegative. Any positive semidefinite matrix $h$ can be factored in the form $h = kk'$ for some real square matrix $k$, which we may think of as a matrix square root of $h$. The matrix $k$ is not unique, so multiple factorizations of a given matrix $h$ are possible. This is analogous to the fact that square roots of positive numbers are not unique either. A standard method for factoring positive semidefinite matrices is the Cholesky factorization.

Positive semidefinite matrices are important in probability theory because covariance matrices are always positive semidefinite, so all properties of positive semidefinite matrices are properties of covariance matrices. Because a correlation matrix is essentially a normalized covariance matrix, the same results apply equally to correlation matrices.

Positive semidefinite matrices fall into two categories: those that are singular and those that are non-singular. The former don't have a specific name, so we simply call them singular positive semidefinite matrices. In addition to satisfying $bhb' \geq 0$ for all row vectors $b$, singular positive semidefinite matrices satisfy $bhb' = 0$ for some row vector $b \neq 0$. As with all singular matrices, singular positive semidefinite matrices have at least one eigenvalue that equals 0.

Non-singular positive semidefinite matrices are called positive definite matrices. They satisfy the condition that $bhb' > 0$ for all row vectors $b \neq 0$. All their eigenvalues are real and positive.

A random vector is called singular if its covariance matrix is singular (hence singular positive semidefinite). It is called non-singular if its covariance matrix is non-singular (hence positive definite). Suppose random vector $X$ is singular with covariance matrix $\Sigma$.
Because $\Sigma$ is singular, there exists a row vector $b \neq 0$ such that $b\Sigma b' = 0$. Consider the random variable $bX$. Then (see the article linear polynomial of a random vector)

$$\operatorname{var}(bX) = b\Sigma b' = 0 \qquad [1]$$

Since our random variable $bX$ has 0 variance, it must equal (with probability 1) some constant $a$. This argument is reversible, so we conclude that a random vector $X$ is singular if and only if there exists a row vector $b \neq 0$ and a constant $a$ such that

$$bX = a \qquad [2]$$

Dispensing with matrix notation, this becomes

$$\sum_{i=1}^{n} b_i X_i = a \qquad [3]$$

Since $b \neq 0$, at least one component $b_i$ is nonzero. Without loss of generality, assume $b_1 \neq 0$. Rearranging [3], we obtain

$$X_1 = \frac{a}{b_1} - \sum_{i=2}^{n} \frac{b_i}{b_1} X_i \qquad [4]$$

which expresses component $X_1$ as a linear polynomial of the other components $X_i$. We conclude that a random vector $X$ is singular if and only if one of its components is a linear polynomial of the other components. In this sense, a singular covariance matrix indicates that at least one component of a random vector is extraneous.

If one component of $X$ is a linear polynomial of the rest, then all realizations of $X$ must fall in a plane within $\mathbb{R}^n$. The random vector $X$ can be thought of as an $m$-dimensional random vector ($m < n$) sitting in a plane within $\mathbb{R}^n$. This is illustrated with realizations of a singular two-dimensional random vector $X$ in Exhibit 1.

Exhibit 1: Realizations of a Singular Random Vector. Realizations of a singular two-dimensional random vector are illustrated.

If a random vector $X$ is singular, but the plane it sits in is not aligned with the coordinate system of $\mathbb{R}^n$, we may not immediately realize that it is singular from its covariance matrix $\Sigma$. A simple test for singularity is to calculate the determinant $|\Sigma|$ of the covariance matrix. If this equals 0, $X$ is singular. Once we know that $X$ is singular, we can apply a change of variables to eliminate extraneous components $X_i$ and transform $X$ into an equivalent $m$-dimensional random vector $Y$, $m < n$. The change of variables will do this by transforming (rotating, shifting, etc.)
the plane that realizations of $X$ sit in so that it aligns with the coordinate system of $\mathbb{R}^n$. Such a change of variables is obtained with a linear polynomial of the form:

$$X = kY + d \qquad [5]$$

Consider a three-dimensional random vector $X$ with mean vector $\mu$ and covariance matrix $\Sigma$ [6]. We note that $\Sigma$ has determinant $|\Sigma| = 0$, so it is singular. We propose to transform $X$ into an equivalent two-dimensional random vector $Y$ using a linear polynomial of the form [5]. For convenience, let's find a transformation such that $Y$ will have mean vector $0$ and covariance matrix $I$: [7]

We first solve for $k$. We know (see the article linear polynomial of a random vector)

From http://www.riskglossary.com/link/positive_definite_matrix.htm
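The determinant test, the existence of a nonzero row vector b with zero variance along it, and a change of variables of the form [5] can be sketched in plain Python. This is only an illustration under stated assumptions: the matrix `sigma`, the mean vector `mu`, and the vector `b` are hypothetical values chosen for the example, not the ones in [6], and `cholesky_psd` is an illustrative helper (a Cholesky variant that tolerates zero pivots), which is not necessarily the procedure the article goes on to use.

```python
import math
import random


def det3(s):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (s[0][0] * (s[1][1] * s[2][2] - s[1][2] * s[2][1])
            - s[0][1] * (s[1][0] * s[2][2] - s[1][2] * s[2][0])
            + s[0][2] * (s[1][0] * s[2][1] - s[1][1] * s[2][0]))


def cholesky_psd(s, tol=1e-12):
    """Lower-triangular L with L L' = s for a positive semidefinite s.

    Illustrative helper: a zero pivot leaves that column of L as zeros.
    """
    n = len(s)
    low = [[0.0] * n for _ in range(n)]
    for j in range(n):
        d = s[j][j] - sum(low[j][p] ** 2 for p in range(j))
        if d < -tol:
            raise ValueError("matrix is not positive semidefinite")
        if d <= tol:
            # Zero pivot: the rest of the column must vanish for a PSD matrix.
            for i in range(j + 1, n):
                r = s[i][j] - sum(low[i][p] * low[j][p] for p in range(j))
                if abs(r) > tol:
                    raise ValueError("matrix is not positive semidefinite")
            continue
        low[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            r = s[i][j] - sum(low[i][p] * low[j][p] for p in range(j))
            low[i][j] = r / low[j][j]
    return low


# Hypothetical singular covariance matrix and mean vector (assumptions).
sigma = [[1.0, 0.0, 1.0],
         [0.0, 1.0, 1.0],
         [1.0, 1.0, 2.0]]
mu = [1.0, 2.0, 3.0]

print("determinant:", det3(sigma))  # a zero determinant signals singularity

# Factor sigma, then drop all-zero columns to get a 3x2 matrix k with k k' = sigma.
low = cholesky_psd(sigma)
cols = [j for j in range(3) if any(low[i][j] != 0.0 for i in range(3))]
k = [[low[i][j] for j in cols] for i in range(3)]
recon = [[sum(k[i][p] * k[j][p] for p in range(len(cols))) for j in range(3)]
         for i in range(3)]

# Change of variables X = kY + mu, with Y a 2-dimensional standard normal vector.
rng = random.Random(0)
b = [1.0, 1.0, -1.0]  # hypothetical row vector satisfying b sigma b' = 0
a = sum(bi * mi for bi, mi in zip(b, mu))  # the constant that bX should equal
max_dev = 0.0
for _ in range(1000):
    y = [rng.gauss(0.0, 1.0) for _ in cols]
    x = [mu[i] + sum(k[i][j] * y[j] for j in range(len(cols))) for i in range(3)]
    bx = sum(bi * xi for bi, xi in zip(b, x))
    max_dev = max(max_dev, abs(bx - a))

print("k =", k)
print("largest deviation of bX from a:", max_dev)
```

In this sketch every simulated realization of X satisfies bX = a up to floating-point rounding, so the three-dimensional vector is carried entirely by the two components of Y. Dropping the zero column of the factor is a design choice made here to obtain a non-square k; a square factor with a zero column would satisfy the same identity.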