Improving the Quality of Forecasting Using Generalized AR Models: An Application to Statistical Quality Control

M. SHELTON PEIRIS¹

Department of Statistics

The Pennsylvania State University

University Park PA 16802-2111, USA.

Abstract

This paper considers a new class of ARMA type models with indices to describe some hidden features of a time series. Various new results associated with this class are given in general form. It is shown that this class of models provides a valid, simple solution to a misclassification problem which arises in time series modeling. In particular, we show that these types of models could be used to describe data with different frequency components for suitably chosen indices. A simulation study is carried out to illustrate the theory. We justify the importance of this class of models in practice using a set of real time series data. It is shown that this approach leads to a significant improvement in the quality of forecasts of correlated data and opens a new direction of research for statistical quality control.

Introduction

It is known that many time series in practice (e.g. turbulent time series, financial

time series) have different frequency components. For example, there are some

problems which arise in time series analysis of data with low frequency

components.

Key words: Time series, Misclassification, Frequency, Spectrum, Estimation,

Correlated data, Quality control, Forecasts, Autoregressive, Moving average,

Correlation, Index.

1

Current address: School of Mathematics and Statistics, The University of Sydney,

NSW 2006, Australia.

One extreme case to show the importance of these low frequency components is the

analysis of long memory time series (see for instance, Beran (1994), Chen et. al.

(1994)). However, there is no systematic approach or a suitable class of models

available in literature to accommodate, analyze and forecast time series with

changing frequency behaviour via a direct method. This paper attempts to introduce

a family of autoregressive moving average (ARMA) type models with indices

(generalized ARMA or GARMA) to describe hidden frequency properties of time

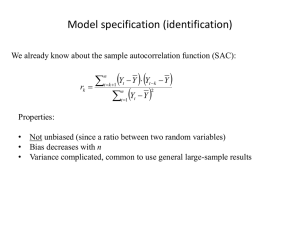

series data. We report some new results associated with the autocorrelation function

(acf) of underlying processes.

Consider the family of standard ARMA(1,1) processes (see Abraham and Ledolter (1983), Brockwell and Davis (1991)) generated by

$$X_t - \alpha X_{t-1} = Z_t - \beta Z_{t-1}, \tag{1.1}$$

where $|\alpha|, |\beta| < 1$ and $\{Z_t\}$ is a sequence of uncorrelated random variables (not necessarily independent) with zero mean and variance $\sigma^2$, known as white noise and denoted by $\mathrm{WN}(0, \sigma^2)$.

Using the backshift operator $B$ (i.e. $B^j X_t = X_{t-j}$, $j \ge 0$) and the identity operator $I = B^0$, (1.1) can be written as

$$(I - \alpha B) X_t = (I - \beta B) Z_t. \tag{1.2}$$

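The process in (1.2) has the following properties:

i. The autocovariance function (acovf), $\gamma_k$, satisfies:

$$\gamma_0 = \frac{\sigma^2 (1 - 2\alpha\beta + \beta^2)}{1 - \alpha^2}, \qquad \gamma_1 = \frac{\sigma^2 (1 - \alpha\beta)(\alpha - \beta)}{1 - \alpha^2}, \qquad \gamma_k = \alpha^{k-1} \gamma_1, \; k \ge 2.$$

ii. The spectral density function (sdf), $f_x(\omega)$, is

$$f_x(\omega) = \frac{\sigma^2}{2\pi} \cdot \frac{1 - 2\beta \cos\omega + \beta^2}{1 - 2\alpha \cos\omega + \alpha^2}; \quad -\pi \le \omega \le \pi. \tag{1.3}$$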
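As a numerical illustration of properties (i) and (ii), the following Python sketch evaluates the ARMA(1,1) autocovariances and spectral density under the sign convention of (1.2), and checks that the spectral density integrates to $\gamma_0$ over $(-\pi, \pi)$. The function names and the midpoint-rule integration are illustrative assumptions, not part of the paper.

```python
import math

def arma11_acvf(alpha, beta, sigma2, max_lag):
    """Autocovariances gamma_0..gamma_max_lag of (I - alpha B) X_t = (I - beta B) Z_t."""
    g0 = sigma2 * (1 - 2 * alpha * beta + beta ** 2) / (1 - alpha ** 2)
    gammas = [g0]
    if max_lag >= 1:
        gammas.append(sigma2 * (1 - alpha * beta) * (alpha - beta) / (1 - alpha ** 2))
    for _ in range(2, max_lag + 1):
        # gamma_k = alpha * gamma_{k-1} for k >= 2, i.e. alpha^(k-1) * gamma_1
        gammas.append(alpha * gammas[-1])
    return gammas

def arma11_sdf(omega, alpha, beta, sigma2):
    """Spectral density (1.3) at frequency omega in [-pi, pi]."""
    num = 1 - 2 * beta * math.cos(omega) + beta ** 2
    den = 1 - 2 * alpha * math.cos(omega) + alpha ** 2
    return sigma2 / (2 * math.pi) * num / den

def integrate_sdf(alpha, beta, sigma2, n=20000):
    """Midpoint-rule integral of the sdf over (-pi, pi); should be close to gamma_0."""
    h = 2 * math.pi / n
    return h * sum(arma11_sdf(-math.pi + (i + 0.5) * h, alpha, beta, sigma2)
                   for i in range(n))

if __name__ == "__main__":
    print(arma11_acvf(0.6, 0.3, 1.0, 3))
    print(integrate_sdf(0.6, 0.3, 1.0))
```

Setting `beta = 0` recovers the familiar AR(1) values $\gamma_k = \sigma^2 \alpha^k / (1 - \alpha^2)$.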

It is well known that for any time series data set, the density of crossings at a certain level $x_t = x$ may vary. We have noticed that this property (say, the degree of level crossings) is very common in many time series data sets (see Figures 1 to 4 in Appendix I). These series display similar patterns in the acf, the pacf, and the spectrum, and hence one may propose the same standard ARMA model (for example, an AR(1) model) for all four cases based on the traditional approach. In other words, these series cannot be distinguished from each other using standard models and/or techniques, which leads to a 'misclassification problem' in time series. This encourages us to introduce a new, generalized version of (1.2) with additional parameters (or indices) $\delta_1 \ge 0$ and $\delta_2 \ge 0$ to control the degree of frequency or level crossings, satisfying

$$(I - \alpha B)^{\delta_1} X_t = (I - \beta B)^{\delta_2} Z_t, \tag{1.4}$$

where $|\alpha|, |\beta| < 1$. This class of models covers the traditional ARMA(1,1) family given in (1.1) when $\delta_1 = \delta_2 = 1$, and the standard AR(1) and MA(1) families when $\delta_1 = 1, \delta_2 = 0$ and $\delta_1 = 0, \delta_2 = 1$ respectively. It is interesting to note that the degree of level crossings of data can be controlled by these additional parameters $\delta_1$ and $\delta_2$, and that (1.4) constitutes a wide variety of important processes in practice. Hence (1.4) is called 'generalized ARMA(1,1)' or 'GARMA(1,1)'. Although (1.4) can be extended to general ARMA type models, for simplicity this paper considers the family of GAR(1) and its applications. That is, we consider a GAR(1) process generated by

$$(I - \alpha B)^{\delta} X_t = Z_t; \quad -1 < \alpha < 1; \quad \delta > 0. \tag{1.5}$$

This class of models covers the standard AR(1) family when $\delta = 1$. It can be seen from the spectrum that the degree of frequency of data can be controlled by this additional parameter $\delta$. For example, when $\alpha = 1$, $\{X_t\}$ in (1.5) converges for $0 < \delta < \frac{1}{2}$ and reduces to the class of fractionally integrated white noise processes. Hence, (1.5) constitutes a general family of AR(1) type models with a number of potential applications. There are many real world data sets with varying frequencies (especially in finance, where the data have high frequency components), and (1.5) can be applied using existing techniques with simple modifications. With that view in mind, Section 2 reports some theoretical properties of the underlying GAR(1) process given in (1.5).

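2 Properties of GAR(1) Processes

Let

$$(I - \alpha B)^{\delta} = \sum_{j=0}^{\infty} \pi_j \alpha^j B^j, \tag{2.1}$$

where $\delta \in \mathbb{R}$, $\delta > 0$; $B^0 = I$, $\pi_0 = 1$ and

$$\pi_j = (-1)^j \binom{\delta}{j} = (-1)^j \frac{\delta(\delta - 1)\cdots(\delta - j + 1)}{j!}; \quad j \ge 1.$$

If $\delta$ is a positive integer, then $\pi_j = 0$ for $j \ge \delta + 1$. For any non-integral $\delta > 0$, it is known that

$$\pi_j = \frac{\Gamma(j - \delta)}{\Gamma(j + 1)\,\Gamma(-\delta)},$$

where $\Gamma(\cdot)$ is the gamma function given by

$$\Gamma(x) = \begin{cases} \int_0^{\infty} t^{x-1} e^{-t}\,dt; & x > 0 \\ \infty; & x = 0 \\ x^{-1}\,\Gamma(1 + x); & x < 0. \end{cases} \tag{2.2}$$

It is easy to see that the series $\sum_{j=0}^{\infty} \pi_j \alpha^j$ converges for all $\delta$ since $|\alpha| < 1$. Thus $\{X_t\}$ in (1.5) is equivalent to a valid AR process of the form

$$\sum_{j=0}^{\infty} \pi_j \alpha^j X_{t-j} = Z_t \tag{2.3}$$

with $\sum_{j=0}^{\infty} \pi_j^2 \alpha^{2j} < \infty$.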
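The coefficients $\pi_j$ in (2.1) can be generated without evaluating gamma functions, because consecutive terms satisfy $\pi_j = \pi_{j-1}(j - 1 - \delta)/j$. The sketch below (function names are illustrative, not from the paper) computes them by this recursion and compares them with the gamma-function form for a non-integral $\delta$.

```python
import math

def pi_weights(delta, n):
    """Return [pi_0, ..., pi_n] for (I - alpha B)^delta = sum_j pi_j alpha^j B^j,
    using the recursion pi_j = pi_{j-1} * (j - 1 - delta) / j."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (j - 1 - delta) / j)
    return w

def pi_gamma(delta, j):
    """Gamma-function form of pi_j; valid only for non-integral delta > 0."""
    return math.gamma(j - delta) / (math.gamma(j + 1) * math.gamma(-delta))

if __name__ == "__main__":
    print(pi_weights(1.0, 3))   # integer delta = 1: weights vanish for j >= 2
    print(pi_weights(0.4, 3))
    print([pi_gamma(0.4, j) for j in range(4)])
```

For integer $\delta$ the recursion terminates naturally: the factor $(j - 1 - \delta)$ is zero at $j = \delta + 1$, so all later weights are zero, in line with the statement above.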

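Now we state and prove the following theorem for a stationary solution of (1.5).

Theorem 2.1: Let $\{Z_t\} \sim \mathrm{WN}(0, \sigma^2)$. Then for all $\delta > 0$ and $|\alpha| < 1$, the infinite series

$$X_t = \sum_{j=0}^{\infty} \psi_j \alpha^j Z_{t-j} \tag{2.4}$$

converges absolutely with probability one, where $\psi_j = \dfrac{\Gamma(j + \delta)}{\Gamma(\delta)\,\Gamma(j + 1)}$.

Proof. Let $(I - \alpha B)^{-\delta} = \sum_{j=0}^{\infty} \psi_j \alpha^j B^j$, where

$$\psi_j = (-1)^j \binom{-\delta}{j} = \frac{\Gamma(j + \delta)}{\Gamma(\delta)\,\Gamma(j + 1)}; \quad j \ge 0.$$

Since

$$E\Big(\sum_{j=0}^{\infty} |\psi_j \alpha^j Z_{t-j}|\Big)^2 \le \Big(\sum_{j=0}^{\infty} |\psi_j|\,|\alpha|^j\Big)^2 E(Z_t^2)$$

and $\sum_{j=0}^{\infty} |\psi_j|\,|\alpha|^j < \infty$, the result follows.

Note: For $\alpha = 1$, (2.4) converges for all $0 < \delta < \frac{1}{2}$.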
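The representation (2.4) suggests a simple way to generate GAR(1) data: truncate the infinite sum and apply the weights $\psi_j \alpha^j$ to simulated white noise. The sketch below does this under assumptions that are not in the paper: Gaussian noise, a truncation point `trunc`, and illustrative function names. The weights use the recursion $\psi_j = \psi_{j-1}(j - 1 + \delta)/j$, which follows from the gamma-function form of $\psi_j$.

```python
import random

def psi_weights(delta, n):
    """Return [psi_0, ..., psi_n] with psi_j = Gamma(j+delta) / (Gamma(delta) Gamma(j+1)),
    computed by the recursion psi_j = psi_{j-1} * (j - 1 + delta) / j."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (j - 1 + delta) / j)
    return w

def simulate_gar1(alpha, delta, sigma, T, trunc=500, seed=0):
    """Simulate T values of X_t = sum_{j=0}^{trunc} psi_j alpha^j Z_{t-j}
    with Gaussian white noise of standard deviation sigma (an assumption)."""
    rng = random.Random(seed)
    psi = psi_weights(delta, trunc)
    coef = [psi[j] * alpha ** j for j in range(trunc + 1)]
    z = [rng.gauss(0.0, sigma) for _ in range(T + trunc)]
    # z[t + trunc] plays the role of Z_t, so every X_t uses trunc + 1 noise terms
    return [sum(coef[j] * z[t + trunc - j] for j in range(trunc + 1))
            for t in range(T)]

if __name__ == "__main__":
    x = simulate_gar1(alpha=0.9, delta=0.87, sigma=0.2, T=10, trunc=200)
    print([round(v, 3) for v in x])
```

With `delta = 1` all $\psi_j$ equal one and the simulated series is a truncated AR(1) process with weights $\alpha^j$.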

Now we consider the autocovariance function of the underlying process in (1.5). Let $\gamma_k = \mathrm{Cov}(X_t, X_{t-k}) = E(X_t X_{t-k})$ be the autocovariance function at lag $k$ of $\{X_t\}$ satisfying the conditions of Theorem 2.1.

It is clear from (2.3) that $\{\gamma_k\}$ satisfy a Yule-Walker type recursion

$$\sum_{j=0}^{\infty} \pi_j \alpha^j \gamma_{k-j} = 0; \quad k > 0 \tag{2.5}$$

and the corresponding autocorrelation function (acf), $\rho_k$, at lag $k$ satisfies

$$\sum_{j=0}^{\infty} \pi_j \alpha^j \rho_{k-j} = 0; \quad k > 0. \tag{2.6}$$

It is interesting to note that $\rho_k = \alpha^k$ is a solution of (2.6), since $\sum_{j=0}^{\infty} \pi_j = 0$ for any $\delta > 0$. However, the general solution for $\rho_k$ may be expressed as

$$\rho_k = \alpha^k \phi(\alpha, \delta, k),$$

where $\phi$ is a suitably chosen function of $k$, $\alpha$, and $\delta$. To find this function $\phi$, we use the following approach:

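The spectrum of $\{X_t\}$ in (1.5) is

$$f_x(\omega) = |1 - \alpha e^{i\omega}|^{-2\delta}\,\frac{\sigma^2}{2\pi} = \frac{\sigma^2}{2\pi\,(1 - 2\alpha \cos\omega + \alpha^2)^{\delta}}; \quad -\pi \le \omega \le \pi. \tag{2.7}$$

Note: In a neighbourhood of $\omega = 0$,

$$f_{xg}(\omega) \sim \frac{\sigma^2}{2\pi}\,(1 - \alpha)^{-2\delta},$$

where $g$ stands for generalized.

Now the exact form of $\gamma_k$ (or $\rho_k$) can be obtained from

$$\gamma_k = \int_{-\pi}^{\pi} e^{ik\omega} f_x(\omega)\,d\omega = \frac{\sigma^2}{\pi} \int_0^{\pi} \frac{\cos k\omega}{(1 - 2\alpha \cos\omega + \alpha^2)^{\delta}}\,d\omega. \tag{2.8}$$

We first evaluate the integral in (2.8) for $k = 0$ and report this result together with the other cases (i.e. for $k \ge 1$) in Section 3 as our main results.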
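Before turning to closed forms, the integral in (2.8) can be approximated numerically. The following sketch evaluates the GAR(1) spectrum (2.7) and integrates (2.8) with a midpoint rule; the quadrature scheme, grid size, and function names are assumptions made for illustration only. For $\delta = 1$ the output can be compared with the AR(1) values $\gamma_k = \sigma^2 \alpha^k/(1 - \alpha^2)$.

```python
import math

def gar1_sdf(omega, alpha, delta, sigma2):
    """GAR(1) spectral density (2.7) at frequency omega."""
    return sigma2 / (2 * math.pi) * (1 - 2 * alpha * math.cos(omega) + alpha ** 2) ** (-delta)

def gar1_acvf_numeric(k, alpha, delta, sigma2, n=20000):
    """Approximate gamma_k from (2.8):
    (sigma2 / pi) * integral_0^pi cos(k w) (1 - 2 alpha cos w + alpha^2)^(-delta) dw,
    using the midpoint rule with n subintervals."""
    h = math.pi / n
    total = 0.0
    for i in range(n):
        w = (i + 0.5) * h
        total += math.cos(k * w) * (1 - 2 * alpha * math.cos(w) + alpha ** 2) ** (-delta)
    return sigma2 / math.pi * h * total

if __name__ == "__main__":
    for k in range(3):
        print(k, gar1_acvf_numeric(k, alpha=0.5, delta=1.0, sigma2=1.0))
```

The same routine accepts non-integral $\delta$, which gives an independent numerical check on the closed-form results of Section 3.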

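3 Main Results

Theorem 3.1:

$$\gamma_0 = \mathrm{Var}(X_t) = \sigma^2 F(\delta, \delta; 1; \alpha^2), \tag{3.1}$$

where $F(\theta_1, \theta_2; \theta_3; \theta)$ is the hypergeometric function given by

$$F(\theta_1, \theta_2; \theta_3; \theta) = \sum_{j=0}^{\infty} \frac{\Gamma(\theta_1 + j)\,\Gamma(\theta_2 + j)\,\Gamma(\theta_3)\,\theta^j}{\Gamma(\theta_1)\,\Gamma(\theta_2)\,\Gamma(\theta_3 + j)\,\Gamma(j + 1)}. \tag{3.2}$$

(See Gradshteyn and Ryzhik (GR) (1965), p.1039.)

Proof: From GR, p.384,

$$\int_0^{\pi} \frac{d\omega}{(1 - 2\alpha \cos\omega + \alpha^2)^{\delta}} = B\big(\tfrac{1}{2}, \tfrac{1}{2}\big)\,F(\delta, \delta; 1; \alpha^2), \tag{3.3}$$

where $B(x, y) = \dfrac{\Gamma(x)\,\Gamma(y)}{\Gamma(x + y)}$ is the beta function. Since $B\big(\tfrac{1}{2}, \tfrac{1}{2}\big) = \pi$, the result follows.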

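Using (3.2) it is easy to see that for $\delta = 1$,

$$F(1, 1; 1; \alpha^2) = (1 - \alpha^2)^{-1}.$$

(See GR, p.1040.)

Hence (3.1) reduces to the corresponding well known result for the variance of a standard AR(1) process satisfying $X_t = \alpha X_{t-1} + Z_t$. That is,

$$\mathrm{Var}(X_t) = \frac{\sigma^2}{1 - \alpha^2}, \quad |\alpha| < 1.$$

Using

$$F(\theta_1, \theta_2; \theta_3; 1) = \frac{\Gamma(\theta_3)\,\Gamma(\theta_3 - \theta_1 - \theta_2)}{\Gamma(\theta_3 - \theta_1)\,\Gamma(\theta_3 - \theta_2)}, \tag{3.4}$$

the result of Theorem 3.1 reduces to $\mathrm{Var}(X_t)$ for a fractionally differenced white noise process satisfying $(I - B)^{\delta} X_t = Z_t$ with $0 < \delta < \frac{1}{2}$. That is, when $\alpha = 1$ and $\delta < \frac{1}{2}$, (3.1) gives

$$\gamma_0 = \frac{\sigma^2\,\Gamma(1 - 2\delta)}{\Gamma^2(1 - \delta)}. \tag{3.5}$$

(Compare with Brockwell and Davis (1991), p.466, eq. 12.4.8.)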
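The variance formula (3.1) and its two special cases can be checked numerically. The sketch below sums the hypergeometric series (3.2) term by term, using the ratio of consecutive terms $(\theta_1 + j)(\theta_2 + j)\theta / ((\theta_3 + j)(j + 1))$, and evaluates (3.5) with the standard library gamma function. The function names and the stopping tolerance are illustrative assumptions; the series is only used for $|\theta| < 1$.

```python
import math

def hyp2f1(a, b, c, z, tol=1e-15, max_terms=100000):
    """Gauss hypergeometric series (3.2) for |z| < 1, summed term by term."""
    term, total = 1.0, 1.0
    for j in range(max_terms):
        term *= (a + j) * (b + j) / ((c + j) * (j + 1)) * z
        total += term
        if abs(term) < tol * abs(total):
            break
    return total

def gar1_variance(alpha, delta, sigma2):
    """gamma_0 = sigma^2 F(delta, delta; 1; alpha^2), equation (3.1)."""
    return sigma2 * hyp2f1(delta, delta, 1.0, alpha ** 2)

def fd_noise_variance(delta, sigma2):
    """Equation (3.5): the alpha = 1 limit, valid for 0 < delta < 1/2."""
    return sigma2 * math.gamma(1 - 2 * delta) / math.gamma(1 - delta) ** 2

if __name__ == "__main__":
    print(gar1_variance(0.5, 1.0, 1.0))    # AR(1) case: compare with 1 / (1 - 0.25)
    print(gar1_variance(0.99, 0.25, 1.0))  # close to, but below, the alpha = 1 limit
    print(fd_noise_variance(0.25, 1.0))
```

Because every term of the series is positive when $\delta > 0$, the GAR(1) variance increases with $|\alpha|$ and approaches the value in (3.5) from below when $\delta < \frac{1}{2}$.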

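As it is not easy to evaluate the integral in (2.8) directly for $k > 0$, we find an expression for $\gamma_k$ via

$$\gamma_k = E(X_t X_{t-k}) = \sigma^2 \sum_{j=0}^{\infty} \psi_j \psi_{j+k}\,\alpha^{k + 2j}.$$

An explicit form of $\gamma_k$ is given in Theorem 3.2.

Theorem 3.2:

$$\gamma_k = \frac{\sigma^2 \alpha^k\,\Gamma(k + \delta)\,F(\delta, k + \delta; k + 1; \alpha^2)}{\Gamma(\delta)\,\Gamma(k + 1)}; \quad k \ge 0. \tag{3.6}$$

Proof: Since $X_t = \sum_{j=0}^{\infty} \psi_j \alpha^j Z_{t-j}$,

$$\gamma_k = \sigma^2 \alpha^k \sum_{j=0}^{\infty} \psi_j \psi_{j+k}\,\alpha^{2j} = \sigma^2 \alpha^k \sum_{j=0}^{\infty} \frac{\Gamma(j + \delta)\,\Gamma(j + k + \delta)\,\alpha^{2j}}{\Gamma^2(\delta)\,\Gamma(j + 1)\,\Gamma(j + k + 1)}.$$

From p.556 of Abramowitz and Stegun (1965) we have

$$\sum_{j=0}^{\infty} \frac{\Gamma(j + k + \delta)\,\Gamma(j + \delta)\,\alpha^{2j}}{\Gamma(k + 1 + j)\,\Gamma(j + 1)} = \frac{\Gamma(\delta)\,\Gamma(k + \delta)\,F(\delta, k + \delta; k + 1; \alpha^2)}{\Gamma(k + 1)},$$

and hence (3.6) follows.

Note: When $k = 0$, Theorem 3.2 reduces to Theorem 3.1.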

The autocorrelation function plays an important role in the analysis of the underlying process. Corollary 3.1 below gives an expression for $\rho_k$ for any $k \ge 0$.

Corollary 3.1: The autocorrelation function of the GAR(1) process in (1.5) is

$$\rho_k = \frac{\alpha^k\,\Gamma(k + \delta)\,F(\delta, k + \delta; k + 1; \alpha^2)}{\Gamma(\delta)\,\Gamma(k + 1)\,F(\delta, \delta; 1; \alpha^2)}. \tag{3.7}$$

Note: When $\alpha = 1$, (3.7) reduces to the acf of a fractionally differenced white noise process given by Brockwell and Davis (1991), p.466, eq. 12.4.9. Further, it is interesting to note that (3.7) reduces to the acf of a standard AR(1) process when $\delta = 1$, since $F(1, k + 1; k + 1; \alpha^2) = F(1, 1; 1; \alpha^2) = (1 - \alpha^2)^{-1}$ (see GR, p.1040).

Thus the results of the above two theorems provide a new set of formulae in a general form. Our new result for $\gamma_k$ in (3.6) contains the existing results for standard AR(1) and fractionally differenced white noise processes as special cases.
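The closed forms (3.6) and (3.7) are straightforward to evaluate once the hypergeometric series is available. The sketch below computes $\gamma_k$ and $\rho_k$ and cross-checks $\gamma_k$ against a truncated version of the sum $\sigma^2 \sum_j \psi_j \psi_{j+k} \alpha^{k+2j}$ used in the proof of Theorem 3.2. Function names, the truncation length, and the use of `math.lgamma` for the gamma ratio are illustrative choices, not the paper's.

```python
import math

def hyp2f1(a, b, c, z, tol=1e-15, max_terms=100000):
    """Gauss hypergeometric series (3.2) for |z| < 1, summed term by term."""
    term, total = 1.0, 1.0
    for j in range(max_terms):
        term *= (a + j) * (b + j) / ((c + j) * (j + 1)) * z
        total += term
        if abs(term) < tol * abs(total):
            break
    return total

def gar1_acvf(k, alpha, delta, sigma2):
    """gamma_k from (3.6); the gamma ratio is computed on the log scale."""
    coef = math.exp(math.lgamma(k + delta) - math.lgamma(delta) - math.lgamma(k + 1))
    return sigma2 * alpha ** k * coef * hyp2f1(delta, k + delta, k + 1.0, alpha ** 2)

def gar1_acf(k, alpha, delta):
    """rho_k from (3.7) as the ratio gamma_k / gamma_0."""
    return gar1_acvf(k, alpha, delta, 1.0) / gar1_acvf(0, alpha, delta, 1.0)

def gar1_acvf_series(k, alpha, delta, sigma2, n=5000):
    """Truncated sum sigma2 * sum_{j=0}^{n} psi_j psi_{j+k} alpha^(k+2j), for cross-checking."""
    psi = [1.0]
    for j in range(1, n + k + 1):
        psi.append(psi[-1] * (j - 1 + delta) / j)
    return sigma2 * sum(psi[j] * psi[j + k] * alpha ** (k + 2 * j) for j in range(n + 1))

if __name__ == "__main__":
    for k in range(4):
        print(k, gar1_acf(k, alpha=0.91, delta=0.87), gar1_acf(k, alpha=0.5, delta=1.0))
```

For `delta = 1.0` the second column reproduces the AR(1) autocorrelations $\alpha^k$, as stated in the note following Corollary 3.1.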

Remark: An important consequence of Theorem 3.2 is that

$$\int_0^{\pi} \frac{\cos k\omega}{(1 - 2\alpha \cos\omega + \alpha^2)^{\delta}}\,d\omega = \frac{\pi\,\alpha^k\,\Gamma(k + \delta)\,F(\delta, k + \delta; k + 1; \alpha^2)}{\Gamma(\delta)\,\Gamma(k + 1)} \tag{3.8}$$

for any $\delta > 0$. When $k = 0$, equation (3.8) reduces to equation (3.3) in Theorem 3.1 (see also GR, p.384).

Note: The new result in equation (3.8) is particularly useful in many theoretical developments of the class of generalized ARMA (GARMA) processes with indices. We now discuss a method of estimating the parameters ($\alpha$ and $\delta$) of (1.5) in Section 4.

4 Estimation of Parameters

4.1 Exact Likelihood Estimation

Consider a stationary normally distributed GAR(1) time series $\{X_t\}$ generated by (1.5). Let $X_T = (X_1, \ldots, X_T)'$ be a sample of size $T$ from (1.5). Then it is clear that $X_T \sim N_T(0, \Sigma)$, where $0$ is a column vector of 0's of order $T \times 1$ and $\Sigma$ is the covariance matrix (of order $T \times T$) of $X_T$. Stationarity of $\{X_t\}$ implies that the covariance matrix $\Sigma$ has Toeplitz form with entries given by equation (3.6).

With the normality assumption, the joint probability density function of $X_T$ is given by

$$f(X_T; \alpha, \delta, \sigma^2) = (2\pi)^{-T/2}\,|\Sigma|^{-1/2} \exp\Big\{-\tfrac{1}{2} X_T' \Sigma^{-1} X_T\Big\}. \tag{4.1}$$

To estimate the parameters $\alpha$, $\delta$ and $\sigma^2$ one needs a suitable optimization algorithm to maximize the likelihood function given in (4.1) (or the corresponding log likelihood function). This can be done by choosing an appropriate set of starting-up values, since we have the covariance matrix $\Sigma$ in terms of the parameters $\alpha$, $\delta$ and $\sigma^2$. Denote the corresponding vector of estimates by $\hat{\eta}$, where $\hat{\eta} = (\hat{\alpha}, \hat{\delta}, \hat{\sigma}^2)'$. We use the Method of Moments (MOM) technique as described below to find a suitable set of starting-up values for the ML algorithm.
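One way to evaluate the log of (4.1) without forming or inverting $\Sigma$ explicitly is the Durbin-Levinson recursion, which exploits the Toeplitz structure and needs only the autocovariances $\gamma_0, \ldots, \gamma_{T-1}$ from (3.6). The paper does not specify a numerical method, so the sketch below is an assumption about how the likelihood might be computed; the function names are illustrative, and the optimizer that would maximize `gar1_loglik` over $(\alpha, \delta, \sigma^2)$ is left out.

```python
import math

def hyp2f1(a, b, c, z, tol=1e-15, max_terms=100000):
    """Gauss hypergeometric series (3.2) for |z| < 1, summed term by term."""
    term, total = 1.0, 1.0
    for j in range(max_terms):
        term *= (a + j) * (b + j) / ((c + j) * (j + 1)) * z
        total += term
        if abs(term) < tol * abs(total):
            break
    return total

def gar1_acvf(k, alpha, delta, sigma2):
    """gamma_k from (3.6)."""
    coef = math.exp(math.lgamma(k + delta) - math.lgamma(delta) - math.lgamma(k + 1))
    return sigma2 * alpha ** k * coef * hyp2f1(delta, k + delta, k + 1.0, alpha ** 2)

def gaussian_loglik(x, acvf):
    """Exact zero-mean Gaussian log likelihood from autocovariances acvf[0..T-1],
    accumulated over one-step prediction errors (Durbin-Levinson recursion)."""
    v = acvf[0]          # variance of the current one-step prediction error
    phi = []             # phi[j] holds the coefficient on x[n-1-j] at order n
    ll = -0.5 * (math.log(2 * math.pi * v) + x[0] ** 2 / v)
    for n in range(1, len(x)):
        k = (acvf[n] - sum(phi[j] * acvf[n - 1 - j] for j in range(n - 1))) / v
        phi = [phi[j] - k * phi[n - 2 - j] for j in range(n - 1)] + [k]
        v *= 1 - k * k
        pred = sum(phi[j] * x[n - 1 - j] for j in range(n))
        ll -= 0.5 * (math.log(2 * math.pi * v) + (x[n] - pred) ** 2 / v)
    return ll

def gar1_loglik(x, alpha, delta, sigma2):
    """Log of (4.1) for a zero-mean GAR(1) sample x."""
    acvf = [gar1_acvf(k, alpha, delta, sigma2) for k in range(len(x))]
    return gaussian_loglik(x, acvf)

if __name__ == "__main__":
    sample = [0.3, -0.1, 0.5, 0.2]
    print(gar1_loglik(sample, alpha=0.5, delta=1.0, sigma2=1.0))
```

The recursion costs $O(T^2)$ operations per likelihood evaluation. Data with a non-zero mean would need to be centred first, which this sketch does not do.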

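4.2 Initial Values

Method of moments estimates of $\alpha$ and $\delta$ can be obtained by approximating $\rho_1$ and $\rho_2$ from (3.7). It is easy to see that

$$\rho_1 = \frac{\alpha\delta\,F(\delta, 1 + \delta; 2; \alpha^2)}{\Gamma(2)\,F(\delta, \delta; 1; \alpha^2)} \approx \alpha\delta \tag{4.2}$$

and

$$\rho_2 = \frac{\alpha^2 \delta(\delta + 1)\,F(\delta, 2 + \delta; 3; \alpha^2)}{\Gamma(3)\,F(\delta, \delta; 1; \alpha^2)} \approx \frac{\alpha^2 \delta(\delta + 1)}{2}. \tag{4.3}$$

The corresponding (approximate) MOM estimates of $\delta$ and $\alpha$ are given by

$$\hat{\delta} = \frac{r_1^2}{2 r_2 - r_1^2} \quad \text{and} \quad \hat{\alpha} = \frac{r_1}{\hat{\delta}},$$

where $r_1$ and $r_2$ denote the sample autocorrelations at lags 1 and 2. $\hat{\sigma}^2$ can be found from the equation involving $\gamma_0$ in Theorem 3.1. The next section considers a real time series and illustrates the usefulness of GAR(1) modeling in practice. Methods described by Granger and Joyeux (1980), Geweke and Porter-Hudak (1983) and Peiris and Court (1993) can be used to estimate the parameters in (1.5), and these results will be discussed in a future paper (see also Peiris et al. (2003)).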
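The starting values above amount to inverting the approximations $\rho_1 \approx \alpha\delta$ and $\rho_2 \approx \alpha^2\delta(\delta + 1)/2$. A minimal sketch follows, with illustrative function names and a standard sample autocorrelation estimator (divisor $n$) that the paper does not spell out. The formula only gives a positive $\hat{\delta}$ when $2 r_2 > r_1^2$, so the sketch raises an error otherwise.

```python
def sample_acf(x, max_lag):
    """Sample autocorrelations r_0..r_max_lag using the divisor-n autocovariance estimator."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d) / n
    return [sum(d[t] * d[t + k] for t in range(n - k)) / n / c0
            for k in range(max_lag + 1)]

def mom_start(r1, r2):
    """Approximate MOM starting values (alpha_hat, delta_hat) from r1 and r2."""
    denom = 2 * r2 - r1 ** 2
    if denom <= 0:
        raise ValueError("need 2*r2 > r1^2 for a positive delta estimate")
    delta_hat = r1 ** 2 / denom
    alpha_hat = r1 / delta_hat
    return alpha_hat, delta_hat

if __name__ == "__main__":
    # Round trip: build r1, r2 from the approximations and recover the parameters
    alpha, delta = 0.3, 0.8
    r1, r2 = alpha * delta, alpha ** 2 * delta * (delta + 1) / 2
    print(mom_start(r1, r2))
```

These values are intended only as a starting point for the likelihood maximization of Section 4.1, since (4.2) and (4.3) drop the hypergeometric factors.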

5 An Application

Consider the yield data (monthly differences between the yield on mortgages and the yield on government loans in the Netherlands, January 1961 to December 1973) from Abraham and Ledolter (1983). The data for January to March 1974 are also available for comparison. The time series, the acf, the pacf, and the spectrum (see Appendix II, Fig. 5) suggest that a standard AR(1) model is suitable, and Abraham and Ledolter found the following estimates (values in parentheses are the estimated standard errors):

$\hat{\mu} = 0.97\ (0.08)$, $\hat{\alpha} = 0.85\ (0.04)$, and $\hat{\sigma}^2 = 0.024$.

However, we fit a generalized AR(1) model to the data using the methods described in Section 4. The results are:

$\hat{\mu} = 0.97\ (0.13)$, $\hat{\alpha} = 0.91\ (0.06)$, $\hat{\delta} = 0.87\ (0.10)$, and $\hat{\sigma}^2 = 0.042$.

The first three forecasts from the time origin at $t = 156$ and the corresponding 95% forecast intervals are:

0.65 and 0.65 ± 0.23
0.78 and 0.78 ± 0.32
0.80 and 0.80 ± 0.40.

The true (observed) values of the last three readings are 0.74, 0.90, and 0.91 respectively.

The corresponding results due to Abraham and Ledolter (1983) are

0.56 and 0.56 ± 0.30
0.62 and 0.62 ± 0.40
0.68 and 0.68 ± 0.46.

Our forecasts are closer to the true values than those in Abraham and Ledolter (1983). Also note that our new results provide shorter forecast intervals in all three cases above, although the upper confidence limits are slightly higher than the corresponding results of Abraham and Ledolter (1983). Appendix II, Fig. 6 gives a simulated series from (1.5) with the estimated parameters $\hat{\delta} = 0.87$, $\hat{\alpha} = 0.91$ and residual variance 0.042 for comparison.
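The comparison above can be tabulated directly from the reported numbers. The short sketch below uses only the forecasts, interval half-widths, and observed values quoted in this section; the variable and function names are illustrative.

```python
# Observed values for the three forecast periods, as reported above
observed = [0.74, 0.90, 0.91]
# (point forecast, 95% half-width) pairs as reported above
gar1_forecasts = [(0.65, 0.23), (0.78, 0.32), (0.80, 0.40)]
ar1_forecasts = [(0.56, 0.30), (0.62, 0.40), (0.68, 0.46)]

def summarize(forecasts, actual):
    """Absolute error, interval limits, and coverage flag for each forecast."""
    rows = []
    for (f, hw), y in zip(forecasts, actual):
        rows.append({
            "abs_error": abs(y - f),
            "lower": f - hw,
            "upper": f + hw,
            "covers": f - hw <= y <= f + hw,
        })
    return rows

def mean_abs_error(forecasts, actual):
    return sum(abs(y - f) for (f, _), y in zip(forecasts, actual)) / len(actual)

if __name__ == "__main__":
    for name, fc in (("GAR(1)", gar1_forecasts), ("AR(1)", ar1_forecasts)):
        print(name, round(mean_abs_error(fc, observed), 4))
        for row in summarize(fc, observed):
            print("  ", {k: round(v, 2) if isinstance(v, float) else v for k, v in row.items()})
```

On these three points the GAR(1) forecasts have the smaller absolute error in every period, and both sets of intervals contain the observed values.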

ACKNOWLEDGEMENTS

The author wishes to thank the referee and Bovas Abraham for many helpful comments and suggestions to improve the quality of the paper. This work was completed while the author was at the Pennsylvania State University. He thanks Professor C. R. Rao and the Department of Statistics for their support during his visit. He also thanks Jayanthi Peiris for her excellent typing of this paper.

REFERENCES

Abraham, B. and Ledolter, J., Statistical Methods for Forecasting, John Wiley, New York (1983).

Abramowitz, M. and Stegun, I., Handbook of Mathematical Functions, Dover, New York (1968).

Beran, J., Statistics for Long Memory Processes, Chapman and Hall, New York (1994).

Brockwell, P.J. and Davis, R.A., Time Series: Theory and Methods, Springer-Verlag, New York (1991).

Chen, G., Abraham, B. and Peiris, S., Lag window estimation of the degree of differencing in fractionally integrated time series models, J. Time Series Anal., 15 (1994), pp. 473-487.

Geweke, J. and Porter-Hudak, S., The estimation and application of long memory time series models, J. Time Series Anal., 4 (1983), pp. 231-238.

Gradshteyn, I.S. and Ryzhik, I.M., Tables of Integrals, Series, and Products, 4th ed., Academic Press, New York (1980).

Granger, C.W.J. and Joyeux, R., An introduction to long memory time series models and fractional differencing, J. Time Series Anal., 1 (1980), pp. 15-29.

Peiris, M.S. and Court, J.R., A note on the estimation of degree of differencing in long memory time series analysis, Probab. and Math. Statist., 14 (1993), pp. 223-229.

Peiris, S., Allen, D. and Thavaneswaran, A., An Introduction to Generalized Moving Average Models and Applications, Journal of Applied Statistical Science (2003) (to appear).

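Appendix I

Figures 1 to 4: time series with differing degrees of level crossings (figures not reproduced).

Appendix II

Figure 5: the yield series with its acf, pacf, and spectrum (figure not reproduced).

Figure 6: a simulated series from (1.5) with the estimated parameters (figure not reproduced).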