SS 4062 Numerical Methods IV 14 Lectures

advertisement

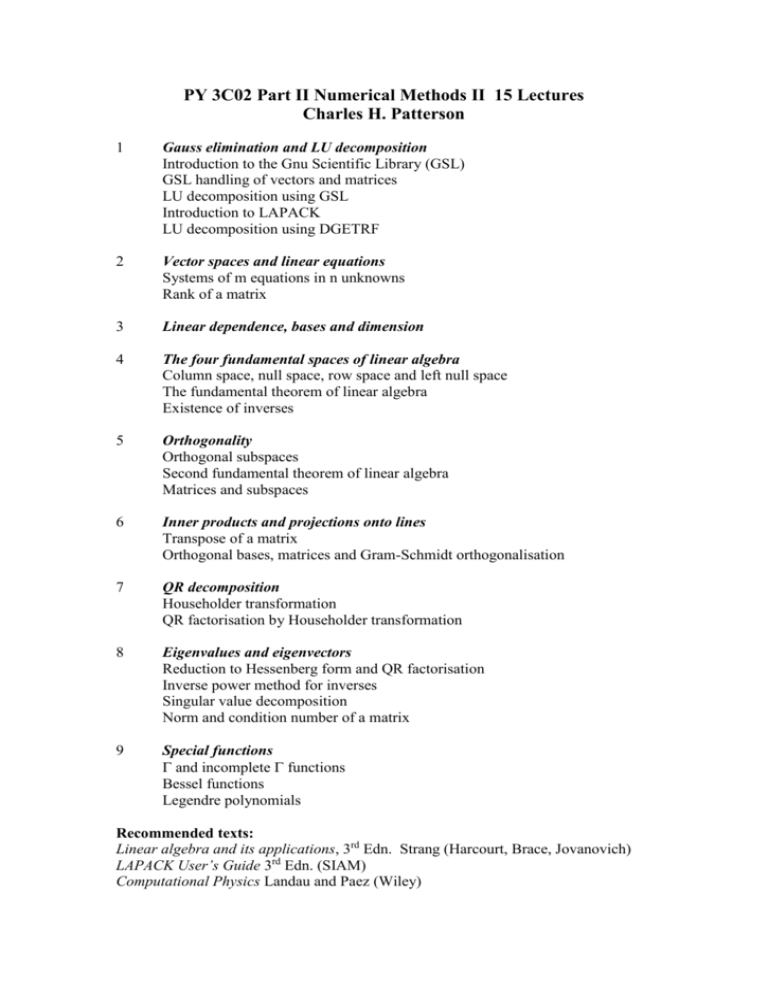

PY 3C02 Part II Numerical Methods II 15 Lectures

Charles H. Patterson

1

Gauss elimination and LU decomposition

Introduction to the Gnu Scientific Library (GSL)

GSL handling of vectors and matrices

LU decomposition using GSL

Introduction to LAPACK

LU decomposition using DGETRF

2

Vector spaces and linear equations

Systems of m equations in n unknowns

Rank of a matrix

3

Linear dependence, bases and dimension

4

The four fundamental spaces of linear algebra

Column space, null space, row space and left null space

The fundamental theorem of linear algebra

Existence of inverses

5

Orthogonality

Orthogonal subspaces

Second fundamental theorem of linear algebra

Matrices and subspaces

6

Inner products and projections onto lines

Transpose of a matrix

Orthogonal bases, matrices and Gram-Schmidt orthogonalisation

7

QR decomposition

Householder transformation

QR factorisation by Householder transformation

8

Eigenvalues and eigenvectors

Reduction to Hessenberg form and QR factorisation

Inverse power method for inverses

Singular value decomposition

Norm and condition number of a matrix

9

Special functions

and incomplete functions

Bessel functions

Legendre polynomials

Recommended texts:

Linear algebra and its applications, 3rd Edn. Strang (Harcourt, Brace, Jovanovich)

LAPACK User’s Guide 3rd Edn. (SIAM)

Computational Physics Landau and Paez (Wiley)

I Gauss Elimination and LU Decomposition

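Consider the system of linear equations

\[
\begin{array}{rcrl}
2u + v + w &=& 5 & \quad (1) \\
4u - 6v &=& -2 & \quad (2) \\
-2u + 7v + 2w &=& 9 & \quad (3)
\end{array}
\]

which can also be written in matrix form A.x = b

\[
\begin{pmatrix} 2 & 1 & 1 \\ 4 & -6 & 0 \\ -2 & 7 & 2 \end{pmatrix}
\begin{pmatrix} u \\ v \\ w \end{pmatrix}
=
\begin{pmatrix} 5 \\ -2 \\ 9 \end{pmatrix}
\]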

If we take a geometrical point of view, each of these equations corresponds to a plane

in the 3-D {u,v,w} Cartesian space. It is relatively easy to see that a unique solution

to the problem exists if all three planes meet at a single point – which corresponds to

the solution to the simultaneous equations. Alternatively, two planes may be parallel

and not intersect or the 3 planes may meet in pairs along lines, or all 3 planes may

meet along a line.

If we rewrite the set of equations in terms of the columns of the matrix A then we can

gain some insight into what kind of solution is possible, if any.

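\[
u \begin{pmatrix} 2 \\ 4 \\ -2 \end{pmatrix}
+ v \begin{pmatrix} 1 \\ -6 \\ 7 \end{pmatrix}
+ w \begin{pmatrix} 1 \\ 0 \\ 2 \end{pmatrix}
=
\begin{pmatrix} 5 \\ -2 \\ 9 \end{pmatrix}
\]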

We see that the vector on the rhs must be constructed from a linear combination of 3

vectors on the lhs whose coefficients are u, v and w.

Consider the example

\[
\begin{array}{rcrl}
u + v + w &=& 2 & \quad (4) \\
2u + 3w &=& 5 & \quad (5) \\
3u + v + 4w &=& 6 & \quad (6)
\end{array}
\]

In this case the lhs of (4)+(5) equals the lhs of (6), but the rhs of (4)+(5) is 7 whereas the rhs of (6) is 6. Hence the equations are inconsistent and there is no solution. However, if we change the rhs of (6) to 7 then a solution is possible, although it is not unique. Only pairs of these equations give any information about the solution (with rhs (6) = 7).

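\[
\begin{array}{rcrl}
u + v + w &=& 2 & \quad (4) \\
2u + 3w &=& 5 & \quad (5) \\
\hline
-2v + w &=& 1 & \quad (5) - 2(4)
\end{array}
\]

This is the equation of a line.

\[
\begin{array}{rcrl}
u + v + w &=& 2 & \quad (4) \\
3u + v + 4w &=& 7 & \quad (6) \\
\hline
-2v + w &=& 1 & \quad (6) - 3(4)
\end{array}
\]

This is the same equation of a line.

If instead rhs (6) = 6 then (6) - 3(4) gives -2v + w = 0, which is inconsistent with -2v + w = 1: the two lines are parallel and never meet, so there is no solution.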

We can understand why by considering this example in terms of its column vectors.

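\[
u \begin{pmatrix} 1 \\ 2 \\ 3 \end{pmatrix}
+ v \begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix}
+ w \begin{pmatrix} 1 \\ 3 \\ 4 \end{pmatrix}
=
\begin{pmatrix} 2 \\ 5 \\ 6 \end{pmatrix}
\]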

All three vectors lie in the same plane and any one can be expressed as a linear

combination of the other two.

Exercise: prove that the three column vectors above lie in the same plane (i.e. they

are linearly dependent).

Now consider solution of the first set of equations by Gauss elimination.

\[
\begin{array}{rcrl}
2u + v + w &=& 5 & \quad (1) \\
4u - 6v &=& -2 & \quad (2) \\
-2u + 7v + 2w &=& 9 & \quad (3)
\end{array}
\]

In Gauss elimination we begin by subtracting multiples of the first equation from

subsequent equations so that the first column has zeros below the diagonal element.

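\[
\begin{array}{rcrl}
2u + v + w &=& 5 & \quad (1) \\
-8v - 2w &=& -12 & \quad (2) - 2(1) \\
8v + 3w &=& 14 & \quad (3) - (-1)(1)
\end{array}
\]

The coefficient of u in the first equation is the first pivot and the coefficient of v in the second equation is the second pivot.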

Repeating the elimination so as to eliminate the coefficient of v in the last equation

\[
\begin{array}{rcrl}
2u + v + w &=& 5 & \quad (1') \\
-8v - 2w &=& -12 & \quad (2') \\
w &=& 2 & \quad (3') - (-1)(2')
\end{array}
\]

The coefficient of w in the last equation is the third pivot.

Using the Gauss elimination process we have brought the equations into upper

triangular form. (If we wrote the equations in matrix form, the transformed A matrix

would have non-zero elements only on the diagonal and above). The equations can

easily be solved now using back substitution.

Gauss elimination breakdown can occur if a zero appears in a pivot position. This

might be an indication that there is no unique solution to the equations (i.e. they are

singular) or it might simply mean that we need to reorder the equations.

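Non-singular example (the right-hand sides are left blank since they do not affect the pivots)

\[
\begin{array}{rcll}
u + v + w &=& \_ & \quad (7) \\
2u + 2v + 5w &=& \_ & \quad (8) \\
4u + 6v + 8w &=& \_ & \quad (9)
\end{array}
\]

Elimination in the first column gives

\[
\begin{array}{rcll}
u + v + w &=& \_ & \quad (7) \\
3w &=& \_ & \quad (8) - 2(7) \\
2v + 4w &=& \_ & \quad (9) - 4(7)
\end{array}
\]

Reorder:

\[
\begin{array}{rcll}
u + v + w &=& \_ & \quad (7) \\
2v + 4w &=& \_ & \quad (9) - 4(7) \\
3w &=& \_ & \quad (8) - 2(7)
\end{array}
\]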

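Singular example

\[
\begin{array}{rcll}
u + v + w &=& \_ & \quad (7) \\
2u + 2v + 5w &=& \_ & \quad (8) \\
4u + 4v + 8w &=& \_ & \quad (10)
\end{array}
\]

Elimination in the first column gives

\[
\begin{array}{rcll}
u + v + w &=& \_ & \quad (7) \\
3w &=& \_ & \quad (8) - 2(7) \\
4w &=& \_ & \quad (10) - 4(7)
\end{array}
\]

The second pivot is unavoidably zero.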

Gauss elimination by LU decomposition

In order to automate Gauss elimination for N equations in N unknowns (in matrix

form A.x = b) we need a systematic way of subtracting one row in a matrix from

another. To subtract a multiple n of row j from row i, form a square matrix L1 with

dimension N which has 1’s on the diagonal and put –n into position (i,j) and multiply

the matrix A on the left by L1.

For the equations

\[
\begin{array}{rcrl}
2u + v + w &=& 5 & \quad (1) \\
4u - 6v &=& -2 & \quad (2) \\
-2u + 7v + 2w &=& 9 & \quad (3)
\end{array}
\]

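From the elimination above we know that we need to subtract 2(1) from (2) and -1(1) from (3), so

\[
L_1 = \begin{pmatrix} 1 & 0 & 0 \\ -2 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix},
\qquad
L_2 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 1 & 0 & 1 \end{pmatrix}
\]

Then we need to subtract -1(2’) from (3’) to reach the triangular form of the equations.

\[
L_3 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 1 \end{pmatrix}
\]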

Applying these operations to A in turn we have

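\[
L_3 L_2 L_1
\begin{pmatrix} 2 & 1 & 1 \\ 4 & -6 & 0 \\ -2 & 7 & 2 \end{pmatrix}
\begin{pmatrix} u \\ v \\ w \end{pmatrix}
=
\begin{pmatrix} 2 & 1 & 1 \\ 0 & -8 & -2 \\ 0 & 0 & 1 \end{pmatrix}
\begin{pmatrix} u \\ v \\ w \end{pmatrix}
= U \begin{pmatrix} u \\ v \\ w \end{pmatrix},
\qquad
L_3 L_2 L_1 \begin{pmatrix} b_1 \\ b_2 \\ b_3 \end{pmatrix}
= \begin{pmatrix} c_1 \\ c_2 \\ c_3 \end{pmatrix}
\]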

These equations are equivalent to U.x = c. In practice

(1) Find U = L3 L2 L1.A = L^-1.A

(2) Find L = (L3 L2 L1)^-1 = L1^-1 L2^-1 L3^-1, so that L.U = A

(3) A.x = b becomes L.U.x = b, so that U.x = L^-1.A.x = L^-1.b = c

(4) Solve L.c = b for c by forward substitution (L is lower triangular)

(5) Solve U.x = c for x by back substitution

This is a fast method for solving simultaneous equations. It is also one of several

kinds of matrix decomposition, here A into L.U.

In situations where a zero pivot is found it may be possible to save the situation by

reordering the equations using a permutation matrix. Consider the 2x2 system

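\[
\begin{pmatrix} 0 & 2 \\ 3 & 4 \end{pmatrix}
\begin{pmatrix} u \\ v \end{pmatrix}
=
\begin{pmatrix} b_1 \\ b_2 \end{pmatrix}
\]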

In order to perform LU decomposition we need to reorder the equations and this can

be done using a permutation matrix. It consists of the identity matrix, except that if

the ith and jth equations are to be swapped then the (i,i) and (j,j) elements become 0

and the (i,j) and (j,i) elements become 1.

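\[
\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}
\begin{pmatrix} 0 & 2 \\ 3 & 4 \end{pmatrix}
\begin{pmatrix} u \\ v \end{pmatrix}
=
\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}
\begin{pmatrix} b_1 \\ b_2 \end{pmatrix}
\quad\Longrightarrow\quad
\begin{pmatrix} 3 & 4 \\ 0 & 2 \end{pmatrix}
\begin{pmatrix} u \\ v \end{pmatrix}
=
\begin{pmatrix} b_2 \\ b_1 \end{pmatrix}
\]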

Note that the orders of the equations and the components of b are reversed, but not the

variables. If necessary, the order of the variables can be changed by post-multiplying

by a permutation matrix. More than one permutation can be carried out using

products of permutation matrices.

Introduction to the Gnu Scientific Library (GSL)

The Reference Manual for GSL is located at www.gnu.org/software/gsl

The types of problems which GSL can handle are

Complex Numbers

Special Functions

Permutations

Sorting

Linear Algebra

Fast Fourier Transforms

Random Numbers

Random Distributions

Histograms

Monte Carlo Integration

Differential Equations

Numerical Differentiation

Series Acceleration

Root-Finding

Least-Squares Fitting

IEEE Floating-Point

Wavelets

Roots of Polynomials

Vectors and Matrices

Combinations

BLAS Support

CBLAS Library

Eigensystems

Quadrature

Quasi-Random Sequences

Statistics

N-Tuples

Simulated Annealing

Interpolation

Chebyshev Approximations

Discrete Hankel Transforms

Minimization

Physical Constants

The manual explains how to use the library in detail. We will begin by considering

use of GSL to get values of a Bessel function (Special Functions) and to solve a

system of simultaneous equations by LU decomposition. We will focus on the

Vectors and Matrices, BLAS and CBLAS, Linear Algebra and Eigensystems libraries.

All sections contain example programmes.

The GSL library is installed on the cphys server. GSL header files are included using, e.g.

#include <gsl/gsl_sf_bessel.h>

(on the server the header is located at /usr/include/gsl/gsl_sf_bessel.h). The name gsl_sf_bessel.h indicates the library (gsl), the special functions module (sf) and the family of functions (bessel).

The programme bessel.c

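#include <stdio.h>
#include <gsl/gsl_sf_bessel.h>

int
main (void)
{
  unsigned int i = 1;                     /* index of the zero required */
  double x = 0.5;
  double y = gsl_sf_bessel_zero_J0 (i);   /* i-th positive zero of J0 */
  double z = gsl_sf_bessel_J0 (x);        /* value of J0 at x */

  printf ("zero %u of J0 = %.18e\n", i, y);
  printf ("J0(%g) = %.18e\n", x, z);
  return 0;
}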

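This programme calculates the first zero of the J0 Bessel function and the value of J0(0.5). It is compiled with the line

gcc -o bessel bessel.c -lgsl -lgslcblas -lm

-lgsl links the GSL library; -lgslcblas and -lm link the CBLAS implementation supplied with GSL and the standard maths library, on which GSL depends.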

The programme LU_decomp.c

#include <stdio.h>
#include <stdlib.h>
#include <gsl/gsl_linalg.h>

int
main (void)
{
  int s;   /* sign of the permutation, set by gsl_linalg_LU_decomp */

  /* 4x4 matrix stored row by row */
  double a_data[] = { 0.18, 0.60, 0.57, 0.96,
                      0.41, 0.24, 0.99, 0.58,
                      0.14, 0.30, 0.97, 0.66,
                      0.51, 0.13, 0.19, 0.85 };

  double b_data[] = { 1.00, 2.00, 3.00, 4.00 };

  /* views wrap the existing arrays without copying them */
  gsl_matrix_view m = gsl_matrix_view_array (a_data, 4, 4);
  gsl_vector_view b = gsl_vector_view_array (b_data, 4);

  gsl_vector *x = gsl_vector_alloc (4);
  gsl_permutation *p = gsl_permutation_alloc (4);

  gsl_linalg_LU_decomp (&m.matrix, p, &s);           /* m is overwritten by L and U */
  gsl_linalg_LU_solve (&m.matrix, p, &b.vector, x);

  printf ("x = \n");
  gsl_vector_fprintf (stdout, x, "%g");

  gsl_permutation_free (p);
  gsl_vector_free (x);
  return 0;
}

This programme initialises the matrix m with the data in the 4x4 array a_data and the vector b with the data in the 4-vector b_data. It allocates a solution vector x and a permutation p before calculating the LU decomposition of m and solving the system of equations

m.x = b

It then prints x before releasing the memory allocated to x and p. It compiles with

gcc -o LU_decomp LU_decomp.c -lgsl -lgslcblas -lm

Exercise: Download the source files from the PY3C02 webpage, then compile and run the programmes bessel.c and LU_decomp.c

Introduction to LAPACK

LAPACK is a library of linear algebra routines in fortran77 distributed with Linux or

available from netlib.org. It consists of a number of drivers, which completely solve

various problems, plus a set of computational routines which perform various specific

tasks. The naming convention for the drivers and routines is described on p 12 of the

LAPACK manual. Briefly it is: XYYZZZ where

X indicates the data type

S: single precision
D: double precision
C: complex
Z: double precision complex (COMPLEX*16)

YY indicates the matrix type, e.g.

DI: diagonal
GB: general banded
GE: general
SY: symmetric

ZZZ indicates the computation performed

Computational routines

TRF: factorise
TRS: solve A.x = b
CON: reciprocal condition number
RFS: bounds on error
TRI: find inverse

Drivers

LLS: linear least squares
SEP: symmetric eigenvalue problem
SVD: singular value decomposition
NEP: nonsymmetric eigenvalue problem

For example, DGETRF is the double precision (D) routine which factorises (TRF) a general (GE) matrix.

The libraries are installed on the computers in the undergraduate computer lab. We will use C programmes to call the Fortran libraries. An important difference between C and Fortran is that Fortran always uses ‘call by reference’: when a subroutine is called, it receives the address of each argument rather than a copy of its value. In C ‘call by value’ is usual for a single variable (i.e. not an array), so that a copy of the value of the variable is passed to the function. If the value of the variable is changed by the function and you need that value, then a call by reference must be enforced by passing &a instead of a to the function. (& is the ‘address of’ operator.) Every argument passed from C to a Fortran routine must therefore be an address. Also, arrays in Fortran are stored in column major order (sequential elements in memory run down columns, so that A(2,1) follows A(1,1)) whereas arrays in C are stored in row major order (sequential elements in memory run along rows, so that B[1][2] follows B[1][1]).

II Vector Spaces and Linear Equations

See Strang pp63-69

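The space R^n consists of column vectors with n components which are real numbers, e.g. R^2 is the x,y plane.

e.g. addition of vectors in R^2

\[
\begin{pmatrix} 1 \\ 2 \end{pmatrix}
=
\begin{pmatrix} 1 \\ 0 \end{pmatrix}
+
\begin{pmatrix} 0 \\ 2 \end{pmatrix}
\]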

Within any vector space we can add two vectors and we can multiply them by scalars and will obtain another vector in the same space. Conditions which vector addition and multiplication by a scalar must meet according to the definition of a vector space are given in Strang Ex 2.1.5. The vector spaces of interest to us lie within the standard spaces R^n. For example a 2-D plane in R^3 which passes through the origin satisfies the definition of a vector space and leads to the idea of a subspace.

Subspaces

A subspace is a non-empty subset that satisfies:

(1) Addition of two vectors in the subspace results in another vector in the subspace

(2) Multiplication by a scalar results in another vector in the subspace

The null vector, 0, forms the smallest possible subspace.

Column space of A

Consider the system of 3 equations in 2 unknowns

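\[
\begin{pmatrix} 1 & 0 \\ 5 & 4 \\ 2 & 4 \end{pmatrix}
\begin{pmatrix} u \\ v \end{pmatrix}
=
\begin{pmatrix} b_1 \\ b_2 \\ b_3 \end{pmatrix}
\]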

For such a case, where there are more equations than unknowns, we expect that there will usually be no solution. The system A.x = b can only be solved if b can be expressed as a linear combination of the columns of A. This can clearly be seen if we rewrite the equations as

\[
u \begin{pmatrix} 1 \\ 5 \\ 2 \end{pmatrix}
+ v \begin{pmatrix} 0 \\ 4 \\ 4 \end{pmatrix}
=
\begin{pmatrix} b_1 \\ b_2 \\ b_3 \end{pmatrix}
\]

A.x = b can only be solved if b lies in the plane spanned by the two column vectors. This plane is a subspace of R^3 called the column space of A, denoted R(A) (the range of A). In general the column space is a subspace of R^m, where m is the number of elements in a column of A (the number of rows).

Null space of matrix A

The system A.x = b with b = 0 always has the solution x = 0 (the null vector). There may be additional solutions xi which satisfy A.xi = 0. All solutions of A.x = 0 together constitute the null space of A, denoted N(A).

Solution of m equations in n unknowns

Solution for m equations in n unknowns is similar to that for n equations in n

unknowns as far as forward elimination goes. When the matrix has been reduced to

echelon form we are able to distinguish basic variables (have a pivot in echelon form)

from free variables (have no pivot in echelon form).

For a matrix with more columns n than rows m (n > m) there can be at most m pivots and there will be at least n-m free variables. Then the homogeneous system has at least n-m linearly independent nontrivial solutions.

If the number of pivots is r then there are r basic variables and n-r free variables. r is

called the rank of the matrix.

To find homogeneous solutions (b = 0)

(1) After elimination reaches U.x = 0 identify free and basic variables.

(2) Give one free variable the value 1 and set the others to zero and solve U.x = 0 for

the basic variables.

(3) Every free variable produces its own solution and combinations of these form the

null space of A.

To find particular solutions (b ≠ 0)

(1) as above

(2) Let each free variable have the value zero

(3) solve for x

A general solution to A.x = b is a combination of particular and homogeneous

solutions.
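A short worked example may help to fix the procedure. The system below is chosen here purely for illustration (it does not come from Strang); it has m = 2 equations in n = 3 unknowns.

```latex
\[
\begin{aligned}
x_1 + 2x_2 + 3x_3 &= 6 \\
2x_1 + 4x_2 + 7x_3 &= 13
\end{aligned}
\qquad\longrightarrow\qquad
\begin{aligned}
x_1 + 2x_2 + 3x_3 &= 6 \\
x_3 &= 1
\end{aligned}
\]
% Pivots lie in columns 1 and 3, so x_1 and x_3 are basic, x_2 is free and r = 2.

% Homogeneous solution (right-hand sides set to zero, x_2 = 1):
\[
x_3 = 0, \quad x_1 = -2
\quad\Longrightarrow\quad
x_h = \begin{pmatrix} -2 \\ 1 \\ 0 \end{pmatrix}
\]

% Particular solution (x_2 = 0):
\[
x_3 = 1, \quad x_1 = 6 - 3 = 3
\quad\Longrightarrow\quad
x_p = \begin{pmatrix} 3 \\ 0 \\ 1 \end{pmatrix}
\]

% General solution, for any real t:
\[
x = x_p + t\, x_h
  = \begin{pmatrix} 3 \\ 0 \\ 1 \end{pmatrix}
  + t \begin{pmatrix} -2 \\ 1 \\ 0 \end{pmatrix}
\]
```

Substituting x back into both original equations gives 6 and 13 for every value of t, and the null space has dimension n - r = 1.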

There are 2 extreme cases for the value of the rank, r.

(1) r = n (number of columns) there are no free variables in the solution vector and

the null space contains only the null vector.

(2) r = m (number of rows) there are no zero rows in U. There are no constraints on b, the column space is all of R^m and for every b the equations can be solved.

III Linear dependence, basis and dimension

Given a set of vectors v_i look for combinations:

\[
c_1 v_1 + c_2 v_2 + c_3 v_3 + \dots + c_n v_n = 0
\]

If there exists any solution other than c_i = 0 for all coefficients c_i, the vectors are linearly dependent: it is possible to express some of the vectors as linear combinations of others.

When an m x n matrix is reduced to echelon form the non-zero rows are independent and the columns with pivots are independent.

A set of n vectors in R^m must be linearly dependent if n > m.

If a vector space V consists of all linear combinations of particular vectors w1, w2, w3,

…, wn then those vectors span the space. Every vector in V can be expressed as a

combination of the wi.

To decide whether b is a combination of the columns of a matrix A composed of the

column vectors wi, solve A.x = b.

To decide whether the columns of A are independent (i.e. wi are linearly independent)

then solve A.x = 0. If a nontrivial solution can be found they are dependent.

Spanning involves the column space; independence involves the null space.

A basis for a vector space is a set of vectors which is linearly independent and which spans the space.

Every vector in the space is a combination of the basis vectors because they span the

space. Every combination is unique because the basis vectors are linearly

independent.

Any two bases for the same space contain the same number of vectors. This is the

dimension of the space.

IV The four fundamental subspaces

(1) The column space of A, R(A)

(2) The null space of A, N(A), contains all vectors x such that A.x = 0

(3) The row space of A (the column space of A^T), R(A^T)

(4) The left null space of A, N(A^T), contains all vectors y such that A^T.y = 0 (equivalently y^T.A = 0)

The row space of A, R(A^T), has the same dimension r as the row space of U and it has the same bases because the two row spaces are the same.

The null space of A N(A) is the same as the null space of U. If the system A.x = 0 is

reduced to U.x = 0 then none of the solutions (which constitute the null space) is

changed. It has dimension n – r. If homogeneous solutions to U.x = 0 are found, they

constitute a basis for N(A).

The column space or range of A, R(A). We find a basis for R(A) by first finding a basis for R(U). This latter basis is found by identifying the columns of U which contain pivots; all other columns of U can be expressed in terms of these pivot column vectors. The columns of A which form a basis for R(A) are the same columns as those which form a basis for R(U), because A.x = 0 and U.x = 0 share the same homogeneous solutions x. The components of each such x are the coefficients of a combination of columns which sums to zero, so any dependence among the columns of U is also a dependence among the same columns of A, and vice versa. (The column spaces R(A) and R(U) themselves are in general different.)

The left null space of A, N(A^T). A^T is an n x m matrix and its null space is a subspace of R^m. The dimension of the column space of a matrix plus the dimension of its null space equals the number of columns. Since A^T has m columns and the dimension of the column space of A^T is r, the left null space must have dimension m - r.

Fundamental theorem of linear algebra

R(A) = column space of A, dimension r

N(A) = null space of A, dimension n - r

R(A^T) = row space of A, dimension r

N(A^T) = left null space of A, dimension m - r
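As a check on these dimensions, the four subspaces can be written out for the coefficient matrix of equations (4)-(6) in Section I. The basis vectors below were worked out for this illustration and can be verified by direct multiplication.

```latex
\[
A = \begin{pmatrix} 1 & 1 & 1 \\ 2 & 0 & 3 \\ 3 & 1 & 4 \end{pmatrix},
\qquad m = n = 3, \qquad r = 2
\]
% Elimination gives pivots in columns 1 and 2 only.
\[
\begin{array}{lll}
R(A):   & \text{basis } (1,2,3)^T,\ (1,0,1)^T \ \text{(the pivot columns of } A) & \dim = r = 2 \\
N(A):   & \text{basis } (-3,1,2)^T                                               & \dim = n - r = 1 \\
R(A^T): & \text{basis } (1,1,1)^T,\ (2,0,3)^T \ \text{(the first two rows of } A) & \dim = r = 2 \\
N(A^T): & \text{basis } (1,1,-1)^T                                               & \dim = m - r = 1
\end{array}
\]
```

The left null space vector (1,1,-1) expresses the fact noted earlier that the lhs of (4) plus the lhs of (5) equals the lhs of (6).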

Inverses and transposes

The inverse of a square matrix A is defined by A.A^-1 = A^-1.A = I, where I is the n x n unit matrix.

The inverse of a product of matrices is given by (AB)^-1 = B^-1 A^-1 (prove by pre- and post-multiplying by AB).

One test for invertibility is that a matrix should have a full set of nonzero pivots. If so,

by definition, it is non-singular and is therefore invertible. A square matrix is

invertible iff it is non-singular.

Transposes of matrices are defined below:

\[
\begin{aligned}
(A^T)_{ij} &= A_{ji} \\
(A + B)^T &= A^T + B^T \\
(A B)^T &= B^T A^T \\
(A^{-1})^T &= (A^T)^{-1}
\end{aligned}
\]

If A^T = A then A is a symmetric matrix. If A^-1 exists it is also symmetric.

If A is symmetric and can be factored into LDU without row or column exchanges (which would destroy the symmetry), then U = L^T and A = L D L^T is the LDU factorisation.
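A small symmetric example, chosen here only for illustration, shows the structure of this factorisation. One elimination step (subtracting 1/2 of row 1 from row 2) gives the pivots 4 and 2, which form D, and the multiplier 1/2 appears in L.

```latex
\[
A = \begin{pmatrix} 4 & 2 \\ 2 & 3 \end{pmatrix}
  = \begin{pmatrix} 1 & 0 \\ \tfrac12 & 1 \end{pmatrix}
    \begin{pmatrix} 4 & 0 \\ 0 & 2 \end{pmatrix}
    \begin{pmatrix} 1 & \tfrac12 \\ 0 & 1 \end{pmatrix}
  = L\, D\, L^T
\]
% Check: L D = [[4, 0], [2, 2]], and (L D) L^T = [[4, 2], [2, 1 + 2]] = A.
```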

Problem 1. Use the programme dgetri.c to invert a matrix by solving A^-1 L = U^-1 for A^-1. DGETRI requires P, L and U, which are computed by first calling DGETRF. The output should pre- and post-multiply the inverse by the original matrix to show that the inverse is indeed obtained.

The programme dgetri.c calls the LAPACK routines DGETRF and DGETRI to factorise a square matrix into LU form and then invert it using those factors (DGETRI inverts U and then solves A^-1 L = U^-1 for A^-1). The source is available at

www.tcd.ie/Physics/People/Charles.Patterson/SS/SS4062

and dgetri.c is compiled with

cc -o dgetri dgetri.c -llapack -lblas -lg2c

-lblas links the Basic Linear Algebra Subprograms (BLAS), which come in 3 levels (a and b are scalars, x and y are vectors, A, B and C are matrices):

Level 1: vector operations, e.g. y = ax + y

Level 2: matrix-vector operations, e.g. y = aAx + by

Level 3: matrix-matrix operations, e.g. C = aAB + bC

-lg2c links the run-time support library of the GNU Fortran 77 compiler. Information on how to call the DGETRF and DGETRI routines can be found in the LAPACK man pages:

> man dgetrf

> man dgetri